Chapter 263: ESP32-S3 Neural Network Acceleration

Chapter Objectives

By the end of this chapter, you will be able to:

- Understand the context of AI on microcontrollers, often called “Edge AI” or “TinyML.”

- Describe the specific hardware features of the ESP32-S3 that accelerate machine learning tasks.

- Explain the role of the ESP-DL software library in leveraging this hardware.

- Configure an ESP-IDF project for an AI application, such as face detection.

- Walk through the typical workflow of running a neural network model on the ESP32-S3.

- Quantify the performance difference between hardware-accelerated and software-only inference.

Introduction

The field of Artificial Intelligence (AI) and Machine Learning (ML) is no longer confined to powerful cloud servers and high-end computers. A new frontier, known as Edge AI or TinyML, focuses on running intelligent algorithms directly on low-power, resource-constrained microcontrollers like the ESP32. This approach offers significant advantages, including lower latency, improved privacy (as data is processed locally), and reduced power consumption and cost (by eliminating constant cloud communication).

While it’s possible to run simple ML models on most microcontrollers, the computational demands of tasks like image recognition or voice command detection often push them to their limits. The ESP32-S3 marks a pivotal evolution in the ESP32 family by incorporating specialized hardware instructions explicitly designed to accelerate the mathematical operations at the core of neural networks.

This chapter will demystify these hardware features and introduce you to Espressif’s ESP-DL library, the software key that unlocks this power. We will build a practical face detection application, demonstrating how the ESP32-S3 can perform complex AI tasks with remarkable efficiency.

Theory

What is a Neural Network?

At a very high level, a neural network is a computational model inspired by the human brain. It’s composed of interconnected “neurons” organized in layers. By training the network with a vast amount of data (e.g., thousands of images of faces), it “learns” to recognize patterns. Once trained, it can make predictions or classifications on new, unseen data. This process of feeding new data through the trained network to get a result is called inference.

The fundamental mathematical operations involved in inference are matrix multiplications and convolutions, which are computationally intensive.

The Challenge of AI on Microcontrollers

Running inference on a typical microcontroller presents several challenges:

- Limited Processing Power: Standard MCUs lack the raw clock speed to perform billions of calculations quickly.

- Limited Memory (RAM): Neural network models and the data they process (like images) can consume many kilobytes or even megabytes of RAM, often exceeding the internal SRAM of an MCU.

- Energy Constraints: Performing these complex calculations with a general-purpose processor can be very energy-inefficient, which is critical for battery-powered devices.

The ESP32-S3 Solution: Hardware Acceleration

The ESP32-S3’s dual-core Xtensa LX7 processor includes extensions to its instruction set specifically to address these challenges. These are not separate co-processors but are built into the main CPU cores.

- Vector Instructions (SIMD): The key feature is support for Single Instruction, Multiple Data (SIMD) operations. Imagine you need to add two arrays of eight numbers each. A standard CPU would perform eight separate addition operations. With SIMD, the CPU can execute a single instruction that performs all eight additions simultaneously. This provides a massive speedup for the vector and matrix math that dominates neural networks.

- AI-Specific Instructions: Beyond generic vector operations, the ESP32-S3 includes specialized instructions for common ML building blocks, such as dot products and convolutions, further enhancing performance.

graph TD

subgraph "Standard CPU Operation (One at a time)"

direction LR

A1[Data 1] --> OP1{Add};

B1[Data 2] --> OP1;

OP1 --> R1[Result 1];

A2[Data 3] --> OP2{Add};

B2[Data 4] --> OP2;

OP2 --> R2[Result 2];

A3[...] --> OP3{...};

B3[...] --> OP3;

OP3 --> R3[...];

end

subgraph "ESP32-S3 SIMD Operation (All at once)"

direction LR

subgraph "Input Data Array"

direction TB

D1[Data 1]

D2[Data 2]

D3[Data 3]

D4[...]

end

subgraph "Single SIMD Instruction"

direction TB

S_OP{Vector Add}

end

subgraph "Output Result Array"

direction TB

O1[Result 1]

O2[Result 2]

O3[Result 3]

O4[...]

end

D1 & D2 & D3 & D4 --> S_OP --> O1 & O2 & O3 & O4;

end

style A1 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style B1 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style A2 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style B2 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style A3 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style B3 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style OP1 fill:#FEF3C7,stroke:#D97706,stroke-width:1px,color:#92400E

style OP2 fill:#FEF3C7,stroke:#D97706,stroke-width:1px,color:#92400E

style OP3 fill:#FEF3C7,stroke:#D97706,stroke-width:1px,color:#92400E

style R1 fill:#D1FAE5,stroke:#059669,stroke-width:2px,color:#065F46

style R2 fill:#D1FAE5,stroke:#059669,stroke-width:2px,color:#065F46

style R3 fill:#D1FAE5,stroke:#059669,stroke-width:2px,color:#065F46

style D1 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style D2 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style D3 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style D4 fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF

style S_OP fill:#EDE9FE,stroke:#5B21B6,stroke-width:2px,color:#5B21B6

style O1 fill:#D1FAE5,stroke:#059669,stroke-width:2px,color:#065F46

style O2 fill:#D1FAE5,stroke:#059669,stroke-width:2px,color:#065F46

style O3 fill:#D1FAE5,stroke:#059669,stroke-width:2px,color:#065F46

style O4 fill:#D1FAE5,stroke:#059669,stroke-width:2px,color:#065F46

The ESP-DL Library: The Software Bridge

Having powerful hardware is only half the battle; you need optimized software to use it. This is where Espressif’s ESP-DL library comes in.

ESP-DL is a deep learning library tailored for ESP32 chips. It provides:

| Feature | Description | Benefit for Edge AI |

|---|---|---|

| Optimized Kernels | Implementations of neural network layers (Convolution, Pooling, etc.) written to use the ESP32-S3’s specific AI instructions. | Maximizes performance by using hardware acceleration, leading to significantly faster inference times compared to standard C code. |

| Quantization Support | Tools and functions to work with models converted from 32-bit float to 8-bit integer (int8) format. | Reduces model size, lowers RAM usage, and increases speed, as the S3’s hardware is highly optimized for 8-bit integer math. |

| Simple API | A high-level, easy-to-use interface for defining models, loading weights, and executing inference. | Abstracts away the low-level hardware details, allowing developers to focus on the application logic rather than complex optimizations. |

| Graceful Fallback | On chips without AI instructions (like the original ESP32 or S2), the library automatically uses a standard C implementation. | Ensures code portability across the ESP32 family, although performance will be much lower on non-accelerated hardware. |

The TinyML Development Workflow

You do not train a neural network on the ESP32 itself. The workflow is a multi-stage process:

- Training: A data scientist trains a model on a powerful PC using a standard framework like TensorFlow or PyTorch.

- Conversion & Quantization: The trained model is converted into a format compatible with the embedded world (e.g., TensorFlow Lite) and quantized to int8.

- Deployment: The quantized model is embedded into the ESP32 firmware as an array of constants. The ESP-DL library is then used to load this model and perform inference on the device.

flowchart TD

A["Start: Define AI Goal<br>e.g., Detect Faces"] --> B{Train Model on PC};

B -- Frameworks like<br>TensorFlow / PyTorch --> C["Trained Model<br><i>(32-bit float)</i>"];

C --> D{Convert & Quantize};

D -- "e.g., TensorFlow Lite" --> E["Quantized Model<br><b>(int8 format)</b>"];

E --> F["Embed Model in Firmware<br><i>(as a C array)</i>"];

F --> G{Deploy to ESP32-S3};

G --> H[Run Inference on Device<br>using ESP-DL Library];

H --> I(("End: Get Prediction<br>e.g., Face Found!"))

classDef startNode fill:#EDE9FE,stroke:#5B21B6,stroke-width:2px,color:#5B21B6;

classDef processNode fill:#DBEAFE,stroke:#2563EB,stroke-width:1px,color:#1E40AF;

classDef decisionNode fill:#FEF3C7,stroke:#D97706,stroke-width:1px,color:#92400E;

classDef checkNode fill:#FEE2E2,stroke:#DC2626,stroke-width:1px,color:#991B1B;

classDef endNode fill:#D1FAE5,stroke:#059669,stroke-width:2px,color:#065F46;

class A startNode;

class B,D,F,G,H processNode;

class C,E checkNode;

class I endNode;

Variant Notes

The term “Neural Network Acceleration” is almost exclusively relevant to the ESP32-S3 within the Espressif ecosystem.

| ESP32 Variant | CPU Core | Hardware AI/Vector Acceleration | Relative ML Performance |

|---|---|---|---|

| ESP32-S3 | Dual-Core Xtensa LX7 | Yes (SIMD & AI Instructions) | Highest |

| ESP32-S2 | Single-Core Xtensa LX7 | No | Medium |

| ESP32 (Original) | Dual-Core Xtensa LX6 | No | Low |

| ESP32-C3 / C6 | Single-Core RISC-V | Partial (RISC-V ‘V’ extension on C6) | Varies (S3 is generally faster) |

- ESP32-S3: The Primary Target. Its dual-core Xtensa LX7 CPU with integrated AI and vector instructions makes it the ideal choice for high-performance Edge AI applications. It delivers performance an order of magnitude faster than its predecessors.

- ESP32-S2: Features the same Xtensa LX7 core but lacks the AI and vector instruction extensions. ESP-DL will run on it, but it will use a non-accelerated C-only implementation, resulting in significantly slower inference times.

- Original ESP32: Based on the older Xtensa LX6 core. It has no AI acceleration. Performance will be the slowest of the three for ML tasks.

- ESP32-C3 / C6 / H2 (RISC-V Variants): These chips use a different CPU architecture (RISC-V). While ESP-DL has been ported to support them, and some (like the C6) have RISC-V vector extensions, they do not share the same acceleration architecture as the S3. For the ML workloads covered by ESP-DL, the ESP32-S3 remains the top performer.

Warning: Running an example designed for the ESP32-S3 on another variant is possible, but do not expect the same performance. The speed difference is not a bug; it is a direct result of the hardware architecture.

Practical Example: Human Face Detection

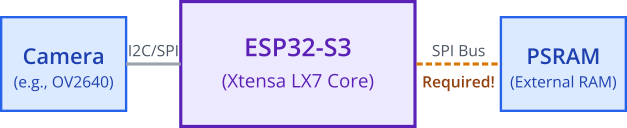

Let’s implement a classic Edge AI application: detecting human faces using a camera connected to an ESP32-S3. We will use an example from the esp-who repository, which contains pre-trained models ready for deployment.

Prerequisites:

- An ESP32-S3 development board (e.g., ESP32-S3-EYE, ESP32-S3-DevKitC with a camera).

- The board must have PSRAM, as image processing is memory-intensive.

- A compatible camera module (e.g., OV2640).

1. Project Setup and Configuration

The easiest way to start is by using Espressif’s official example.

- Clone the ESP-WHO repository:

git clone --recursive https://github.com/espressif/esp-who.git - Navigate to the face detection example:

cd esp-who/examples/human_face_detection - Set your target to ESP32-S3:

idf.py set-target esp32s3 - Open the configuration editor:

idf.py menuconfig- Under

Component config->ESP32S3-specific, ensure thatSPIRAM config->Support for external SPI RAMis enabled. - Under

Component config->ESP WHO->Camera Configuration, select the correct camera model for your board. - Review other settings to ensure they match your hardware. Save and exit.

- Under

2. Code Walkthrough

Let’s examine the key sections of the human_face_detection.cpp file. The code uses C++, but the principles are the same.

The application works by setting up two tasks running on the two cores of the ESP32-S3:

- Core 0: Runs

app_camera_main, which continuously captures frames from the camera and places them in a queue. - Core 1: Runs

app_inference_main, which retrieves frames from the queue, runs the face detection model, and prints the results.

sequenceDiagram

participant Cam as Camera Module

participant Core0 as Core 0 (app_camera_main)

participant Q as Frame Queue

participant Core1 as Core 1 (app_inference_main)

participant Log as Serial Monitor

rect rgb(219, 234, 254)

note over Core0, Core1: ESP32-S3 Dual-Core Processor

end

loop Continuous Operation

Core0->>Cam: Request new frame

Cam-->>Core0: Provides frame data

Core0->>Q: Enqueue frame

end

loop Continuous Operation

Core1->>Q: Dequeue frame

note right of Core1: Run Face Detection Model<br>(Hardware Accelerated)

Core1->>Core1: model.run(frame)

alt Face(s) Detected

Core1->>Log: Print Bounding Box & Score

else No Face Detected

Core1->>Log: (Silent or prints "No face")

end

Core1->>Core0: Return frame buffer

end

Here is a simplified look at the inference task logic:

// Simplified pseudo-code for the inference task

// A pre-instantiated face detection model object

static HumanFaceDetect model;

void app_inference_main(void)

{

// 1. Load the model from flash

// The model data is linked into the firmware as a binary blob.

// The constructor of the HumanFaceDetect class handles loading.

while (true) {

// 2. Get the next camera frame from the queue

camera_fb_t *fb = esp_camera_fb_get();

// 3. Run inference on the frame

// The model.run() method is the core of the ESP-DL operation.

// It takes the camera frame buffer as input.

dl_matrix3du_t *image_matrix = dl_matrix3du_alloc(1, fb->width, fb->height, 3);

// ... code to format the frame into the image_matrix ...

std::list<dl::detect::result_t> &results = model.run(image_matrix, { ... thresholds ... });

// 4. Process the results

// The 'results' list contains bounding boxes for any detected faces.

if (results.size() > 0) {

printf("FACE DETECTED! Count: %d\n", results.size());

for (auto const& res : results) {

printf(" Box: [x:%d, y:%d, w:%d, h:%d], Score: %f\n",

res.box[0], res.box[1], res.box[2], res.box[3], res.score);

}

}

// 5. Return the frame buffer to the camera driver

esp_camera_fb_return(fb);

// Free memory used for the matrix

dl_matrix3du_free(image_matrix);

}

}

The model.run() call is where the magic happens. This function, provided by ESP-DL, leverages the ESP32-S3’s hardware acceleration to rapidly process the entire image and find faces.

3. Build, Flash, and Observe

- Connect your ESP32-S3 board.

- Run the build command:

idf.py build - Flash the project:

idf.py flash monitor - Point the camera at your face. You should see output in the serial monitor similar to this:

I (12345) Cam: Taking picture...

I (12380) INFERENCE: FACE DETECTED! Count: 1

I (12382) INFERENCE: Box: [x:85, y:50, w:92, h:92], Score: 0.987654

Common Mistakes & Troubleshooting Tips

| Mistake / Issue | Symptom(s) | Troubleshooting / Solution |

|---|---|---|

| Out of Memory | Device continuously reboots. Guru Meditation Error related to memory allocation. E (123) heap_caps: Failed to allocate ... |

|

| Extremely Slow Performance | Inference takes seconds instead of milliseconds. Frame rate is very low (< 1 FPS). |

|

| Model Fails to Detect | The system runs without errors, but no faces (or objects) are ever detected, even when they are clearly in view. |

|

| Camera Initialization Failed | Error messages like Camera probe failed or Failed to init camera. |

|

Exercises

- Measure Performance: Add code to measure and print the inference time. Use

esp_timer_get_time()before and after themodel.run()call to calculate the duration in milliseconds. Compare this time to the camera’s frame rate to see if you can achieve real-time detection. - Control an LED on Detection: Modify the code to turn on the board’s built-in LED whenever a face is detected and turn it off when no faces are in the frame. This provides simple visual feedback without needing a serial monitor.

- Run a Different Model: The ESP-WHO repository contains other models, such as cat face detection. Adapt the project to use the cat face detection model instead of the human one. This will involve changing which model is instantiated and potentially adjusting input image sizes or formats.

Summary

- The ESP32-S3 is uniquely equipped for Edge AI tasks due to its hardware-accelerated AI and vector instructions.

- These instructions perform SIMD (Single Instruction, Multiple Data) operations, dramatically speeding up the matrix math at the heart of neural networks.

- The ESP-DL library is the essential software component that provides an API to run neural network models using this hardware acceleration.

- A typical workflow involves training on a PC, quantizing the model to

int8, and deploying it to the ESP32-S3 for inference. - Due to memory requirements, AI applications involving cameras almost always require an ESP32-S3 with external PSRAM.

- While models can run on other ESP32 variants, they will be significantly slower as they lack the S3’s specific hardware acceleration features.

Further Reading

- ESP-DL Library Documentation: Espressif Deep Learning Library Guide

- ESP-WHO Application Repository: Espressif’s collection of ready-to-use AI examples

- TinyML Foundation: A great resource for learning about the broader field of Machine Learning on Microcontrollers