Chapter 1: What is Artificial Intelligence?

Chapter Objectives

Upon completing this chapter, you will be able to:

- Understand the formal definitions and philosophical foundations of Artificial Intelligence (AI) and differentiate it from conventional software engineering.

- Analyze the historical evolution of AI, identifying key milestones, intellectual shifts, and the factors contributing to “AI winters” and subsequent renaissances.

- Differentiate between the categories of AI—Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Superintelligence (ASI)—and evaluate their current state and future implications.

- Design a conceptual framework for evaluating an AI system’s “intelligence” using established thought experiments like the Turing Test and the Chinese Room Argument.

- Identify the major subfields of AI, including Machine Learning, Natural Language Processing, and Computer Vision, and explain their core objectives and interrelationships.

- Apply foundational ethical reasoning to assess the societal impact and potential risks associated with advancing AI technologies.

Introduction

Welcome to the foundational chapter of your journey into Artificial Intelligence engineering. The term “Artificial Intelligence” has captured the human imagination for decades, evolving from science fiction to a driving force of modern technological and societal change. In today’s world, AI is not a distant concept; it is the engine behind personalized recommendation systems, the intelligence in autonomous vehicles, the diagnostic power in advanced medical imaging, and the creative partner in art and music generation. For an AI engineer, a deep, foundational understanding of what AI is—and what it is not—is the most critical first step. This chapter moves beyond the hype to establish a rigorous, technical, and philosophical understanding of the field. We will explore the fundamental question of what it means to create intelligence, tracing the intellectual lineage from early philosophical inquiries to the cutting-edge algorithms of today. By dissecting the core concepts, historical context, and ethical dimensions, you will build the essential mental models required to navigate the complexities of AI development. This chapter will equip you not just with definitions, but with the critical perspective needed to build, analyze, and deploy AI systems responsibly and effectively in a world increasingly shaped by them.

Technical Background

The pursuit of artificial intelligence is, at its core, an endeavor to understand and replicate the mechanisms of intelligence itself. This section delves into the technical and philosophical underpinnings of the field, establishing a firm conceptual bedrock upon which all subsequent, more specialized knowledge will be built. We will journey from the foundational definitions that delineate AI from traditional computing to the grand visions that drive its future development.

Defining Intelligence: The Core Pursuit

At first glance, defining Artificial Intelligence seems straightforward: it is intelligence demonstrated by machines. However, this definition immediately raises a more profound question: what is intelligence? In the context of AI engineering, intelligence is not merely about computation; it is the ability to perceive, reason, learn, and act in complex environments to achieve specific goals. Traditional computer programs operate on explicit, pre-defined logic (if X, then do Y). They follow a path laid out by a programmer, and their behavior is fully determined by that code. An AI system, in contrast, is designed to operate in domains where the rules are not fully known or the environment is constantly changing.

The core distinction lies in the system’s capacity for adaptation and generalization. A simple calculator performs complex mathematical computations with perfect accuracy, yet we do not consider it intelligent. It cannot learn from its operations or apply its computational ability to a new, unforeseen problem, such as interpreting a sentence or recognizing a face. An AI, on the other hand, is designed to handle ambiguity and novelty. For instance, a machine learning model trained to identify cats in images learns a set of patterns and features from data. It can then generalize this learned knowledge to correctly identify a cat in a photograph it has never seen before, one that may be in a different pose, lighting condition, or breed than any in its training set.

Traditional Programming vs. Machine Learning

| Feature | Traditional Programming | Machine Learning |
|---|---|---|
| Approach | Programmer writes explicit, step-by-step rules. | System learns patterns and rules from data. |
| Logic | Deterministic. Input + Rules = Output. | Often probabilistic. Training Data + Labels = Model; Model + New Input = Prediction. |
| Data Usage | Program processes input data according to rules. | System is trained on data to create the rules (the model). |
| Adaptability | Low. New logic requires manual reprogramming. | High. Can adapt to new data by retraining the model. |
| Example | A calculator adding two numbers based on the ‘+’ rule. | A spam filter learning to identify junk mail from thousands of examples. |
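
To make the contrast in the table concrete, here is a minimal Python sketch of both columns on a toy spam-filtering task. Everything in it is hypothetical. Each message is reduced to a single made-up feature (the number of suspicious words it contains), the hand-written threshold of 3 is arbitrary, and the eight labeled examples are invented. The “learning” is the simplest possible kind: try every candidate threshold and keep the one with the fewest training errors.

```python
# Toy contrast: a rule written by a programmer vs. a rule learned from data.
# Hypothetical feature: the number of "suspicious" words found in a message.

def rule_based_is_spam(suspicious_words: int) -> bool:
    """Traditional programming: the threshold (3) is hard-coded by hand."""
    return suspicious_words >= 3


def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    """Machine learning in miniature: choose the threshold that makes the fewest
    mistakes on labeled examples (a one-feature decision stump)."""
    counts = sorted({count for count, _ in examples})
    candidates = counts + [counts[-1] + 1]  # the last option means "never spam"
    best_threshold, best_errors = candidates[0], len(examples) + 1
    for threshold in candidates:
        errors = sum((count >= threshold) != is_spam for count, is_spam in examples)
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


# Hypothetical labeled data: (suspicious word count, is_spam)
training_data = [(0, False), (1, False), (2, False), (4, False),
                 (5, True), (6, True), (7, True), (9, True)]

learned = learn_threshold(training_data)


def learned_is_spam(suspicious_words: int) -> bool:
    """The rule now comes from the data, not from the programmer."""
    return suspicious_words >= learned


print("learned threshold:", learned)              # → learned threshold: 5
print(rule_based_is_spam(4), learned_is_spam(4))  # → True False
```

On this toy data the hand-written rule flags the legitimate four-word message as spam, but the learned threshold does not. When new labeled examples arrive, rerunning `learn_threshold` moves the threshold without anyone editing the rule by hand. This is the table's adaptability row in miniature.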

This capacity to adapt and generalize is often formalized through the concept of an intelligent agent. An agent is an entity that perceives its environment through sensors and acts upon that environment through actuators. A rational agent is one that acts to maximize its expected performance measure, given its perceptual history and built-in knowledge. This framework, central to modern AI, shifts the focus from abstract intelligence to goal-directed behavior. The “intelligence” of the system is thus measured by its effectiveness in achieving its objectives, whether that objective is winning a game of Go, navigating a self-driving car through city traffic, or translating human language. This pragmatic, performance-oriented definition provides a measurable and engineering-focused approach to a concept that has been debated by philosophers for centuries.

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'fontFamily': 'Open Sans'}}}%%
graph LR
    subgraph "AI Agent (Adaptive Loop)"
        direction LR
        A1(Environment) -- Sensed Data --> A2{Perceive}
        A2 --> A3{Reason & Learn}
        A3 -- Action --> A4(Actuators)
        A4 -- Affects --> A1
        A3 --> A3
    end
    subgraph "Traditional Program (Fixed Logic)"
        direction LR
        P1[Start] --> P2{Process Data with<br><b>Hard-Coded Rules</b>} --> P3[Output]
    end
    style P1 fill:#283044,stroke:#283044,stroke-width:2px,color:#ebf5ee
    style P2 fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044
    style P3 fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee
    style A1 fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee
    style A2 fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044
    style A3 fill:#e74c3c,stroke:#e74c3c,stroke-width:1px,color:#ebf5ee
    style A4 fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044
    linkStyle 0 stroke-width:2px,fill:none,stroke:gray;
    linkStyle 1 stroke-width:2px,fill:none,stroke:gray;
    linkStyle 2 stroke-width:2px,fill:none,stroke:gray;
    linkStyle 3 stroke-width:2px,fill:none,stroke:gray;
    linkStyle 4 stroke-width:2px,fill:none,stroke:gray;
    linkStyle 5 stroke-width:2px,fill:none,stroke:gray;
    linkStyle 6 stroke-width:2px,fill:none,stroke:gray,stroke-dasharray: 5 5;
```
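
The perceive, reason, act loop in the agent diagram can be sketched in a few lines of Python. This minimal sketch assumes the classic two-square “vacuum world” found in many AI textbooks. The environment, the agent's rules, and the performance measure (one point per clean square per time step) are illustrative choices, not a standard API. The agent is a simple reflex agent: it reasons from its current percept but does not learn. A learning agent would also update an internal model from experience.

```python
import random


class VacuumWorld:
    """Environment: two squares, "A" and "B", each either dirty or clean."""

    def __init__(self, seed: int = 0):
        rng = random.Random(seed)
        self.dirty = {"A": rng.random() < 0.5, "B": rng.random() < 0.5}
        self.location = "A"

    def percept(self) -> tuple[str, bool]:
        """What the sensors report: current square and whether it is dirty."""
        return self.location, self.dirty[self.location]

    def apply(self, action: str) -> None:
        """What the actuators do to the environment."""
        if action == "Suck":
            self.dirty[self.location] = False
        elif action == "Right":
            self.location = "B"
        elif action == "Left":
            self.location = "A"

    def clean_squares(self) -> int:
        return sum(not is_dirty for is_dirty in self.dirty.values())


def reflex_agent(percept: tuple[str, bool]) -> str:
    """Agent program: maps the current percept directly to an action."""
    location, is_dirty = percept
    if is_dirty:
        return "Suck"
    return "Right" if location == "A" else "Left"


def run(env: VacuumWorld, steps: int = 4) -> int:
    """Run the perceive -> decide -> act loop and accumulate the performance
    measure (here: one point per clean square per time step)."""
    score = 0
    for _ in range(steps):
        action = reflex_agent(env.percept())  # perceive, then decide
        env.apply(action)                      # act on the environment
        score += env.clean_squares()           # performance measure
    return score


env = VacuumWorld(seed=42)
score = run(env)
print("score:", score, "| clean squares at end:", env.clean_squares())
```

Starting from square A, four steps are always enough for this agent to clean both squares, whatever the initial dirt pattern. The score therefore reflects how quickly it got there.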

The Spectrum of AI: From Narrow to Superintelligent

The concept of AI is not monolithic. In practice and in theory, AI systems are categorized along a spectrum of capability and generality. Understanding this spectrum is crucial for contextualizing current technologies and appreciating the long-term ambitions of the field.

The vast majority of AI in existence today falls under the category of Artificial Narrow Intelligence (ANI), also known as Weak AI. ANI systems are designed and trained to perform a single, specific task. While they can exhibit superhuman performance in their designated domain, their intelligence is brittle and does not transfer to other tasks. For example, the AI that powers Google’s search engine is incredibly sophisticated at indexing and retrieving information from the web, but it cannot use that capability to diagnose a medical condition or compose a symphony. Similarly, an AI that excels at playing chess cannot adapt its strategies to play checkers, let alone drive a car. ANI is the workhorse of the modern AI revolution, powering everything from spam filters and recommendation engines to language translation services and stock trading bots. The engineering challenge in ANI is to design specialized architectures and training methodologies that optimize performance for one well-defined problem.

The next frontier, and the subject of intense research and speculation, is Artificial General Intelligence (AGI), or Strong AI. An AGI would possess the ability to understand, learn, and apply its intelligence to solve any intellectual task that a human being can. It would not be confined to a single domain but would exhibit cognitive flexibility, common sense reasoning, and the ability to transfer knowledge between disparate contexts. An AGI could, in theory, read a medical textbook and then reason about a patient’s symptoms, or watch a video of a mechanic and learn how to repair an engine. We have not yet achieved AGI. The challenges are immense, spanning not only computational hurdles but also fundamental gaps in our understanding of consciousness, learning, and common sense. Creating an AGI is not simply a matter of scaling up current ANI systems; it likely requires new paradigms and breakthroughs in areas like unsupervised learning, causal reasoning, and cognitive architectures.

Beyond AGI lies the theoretical concept of Artificial Superintelligence (ASI). An ASI is a hypothetical agent that would possess intelligence far surpassing that of the brightest and most gifted human minds in virtually every field, including scientific creativity, general wisdom, and social skills. The path from AGI to ASI could be extraordinarily rapid, a phenomenon known as an “intelligence explosion.” An AGI, with its ability to learn and reason across all domains, could potentially improve its own algorithms and hardware, creating a recursive self-improvement loop that quickly results in superintelligence. The emergence of ASI carries profound, civilization-altering implications, raising critical questions about control, ethics, and the future of humanity itself. While a distant prospect, considering the trajectory toward ASI informs the safety and ethics research that is becoming an increasingly vital part of the AI engineering discipline.

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'fontFamily': 'Open Sans'}}}%%
graph LR
    subgraph AI Spectrum
        direction LR
        ANI(<b>Artificial Narrow Intelligence</b><br><i>Weak AI</i><br><br>Performs a single, specific task.<br>Examples: Spam filters, Image recognition)
        AGI(<b>Artificial General Intelligence</b><br><i>Strong AI</i><br><br>Can understand, learn, and apply<br>knowledge across diverse domains,<br>like a human.)
        ASI(<b>Artificial Superintelligence</b><br><i>Hypothetical</i><br><br>Intelligence far surpassing the<br>brightest human minds in all fields.)
    end
    ANI --> AGI --> ASI
    style ANI fill:#78a1bb,stroke:#283044,stroke-width:2px,color:#283044
    style AGI fill:#f39c12,stroke:#283044,stroke-width:2px,color:#283044
    style ASI fill:#e74c3c,stroke:#283044,stroke-width:2px,color:#ebf5ee
```

The Philosophical Arenas: Testing for Thought

Before we could build intelligent machines, we first had to grapple with the question of how we would even recognize success. The philosophical debates surrounding AI are not mere academic exercises; they shape the goals of the field and provide the critical frameworks for evaluating our creations. Two thought experiments, in particular, have defined the conversation for over half a century.

The first and most famous is the Turing Test, proposed by Alan Turing in his 1950 paper, “Computing Machinery and Intelligence.” Turing sought to sidestep the ambiguous question “Can machines think?” by proposing a practical, operational test. In his “imitation game,” a human interrogator engages in a text-based conversation with two unseen entities: a human and a machine. If the interrogator cannot reliably distinguish the machine from the human, the machine is said to have passed the test. The Turing Test is significant because it defines intelligence in terms of behavior. It doesn’t care about the internal mechanisms of the machine or whether it possesses consciousness; it only cares if the machine’s conversational performance is indistinguishable from a human’s. For decades, this has been a benchmark for a specific kind of AI—one focused on mimicking human language and thought patterns. However, critics argue that the Turing Test is a test of deception, not genuine intelligence. A program could potentially pass by using clever tricks and a vast database of canned responses without any real understanding.

This critique is powerfully articulated in John Searle’s Chinese Room Argument, proposed in 1980. Searle imagines himself, a non-Chinese speaker, alone in a room. He is given a large rulebook (the “program”) and batches of Chinese characters (the “input”). By following the rules in the book, he can manipulate the characters and pass out other batches of Chinese characters (the “output”) that are indistinguishable from those a native Chinese speaker would produce. From the outside, it appears the room “understands” Chinese. Yet Searle himself has zero understanding of the conversation. He is simply manipulating formal symbols according to a syntactic rulebook. Searle uses this argument to contend that a computer running a program, no matter how sophisticated, can never have a “mind” or “consciousness” in the way humans do. It can manipulate symbols (syntax), but it can never understand the meaning behind them (semantics). This argument strikes at the heart of the Strong AI hypothesis, suggesting that even a system that passes the Turing Test might be nothing more than an empty shell, a simulation of intelligence without any genuine cognitive states. For AI engineers, these debates highlight the difference between building systems that simulate intelligent behavior and those that might one day replicate the underlying cognitive processes.

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'fontFamily': 'Open Sans'}}}%%
graph TD
    subgraph "Turing Test (Behavioral)"
        direction TB
        I(Interrogator) -- "Asks Questions" --> W(Wall)
        W -- "Receives Answers" --> I
        subgraph "Behind the Wall"
            H(Human) -- "Answer" --> W
            M(Machine) -- "Answer" --> W
        end
        I -- "Can't distinguish H from M?" --> R1{Passes Test}
    end
    subgraph "Chinese Room Argument (Semantic)"
        direction TB
        Input[Chinese Symbols In] --> Room
        subgraph "The Room (Syntax Manipulation)"
            direction TB
            P(Person<br><i>No Chinese knowledge</i>)
            RB(Rulebook<br><i>The Program</i>)
            P -- "Follows" --> RB
        end
        Room --> Output[Valid Chinese Symbols Out]
        Output -- "Is this 'Understanding'?" --> R2{No Semantics}
    end
    classDef interrogator fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044;
    classDef entity fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044;
    classDef system fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee;
    classDef result_pos fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee;
    classDef result_neg fill:#d63031,stroke:#d63031,stroke-width:2px,color:#ebf5ee;
    class I interrogator;
    class H,M,P,RB entity;
    class W,Room system;
    class R1 result_pos;
    class R2 result_neg;
```
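
Searle's point can be made concrete with a deliberately trivial program. The sketch below is a hypothetical “rulebook” implemented as a Python dictionary. It returns plausible Chinese replies to a few Chinese inputs purely by matching character strings; the phrases themselves are illustrative. Nothing in the program represents what any symbol means, and that is exactly the gap between syntax and semantics the argument targets. A real conversational system is vastly larger and more flexible. Searle's question is whether scale alone turns symbol manipulation into understanding.

```python
# A toy "Chinese Room": the rulebook pairs input strings with output strings.
# The program only compares characters; nothing in it represents meaning.
# (The English glosses in the comments are for the reader; the program never uses them.)
RULEBOOK = {
    "你好": "你好！",                      # "Hello" -> "Hello!"
    "你好吗？": "我很好，谢谢。",           # "How are you?" -> "I'm fine, thanks."
    "你会说中文吗？": "会，我说得很流利。",  # "Do you speak Chinese?" -> "Yes, very fluently."
}

FALLBACK = "请再说一遍。"  # "Please say that again."


def chinese_room(symbols: str) -> str:
    """Follow the rulebook: exact lookup of the input string, else a stock reply."""
    return RULEBOOK.get(symbols.strip(), FALLBACK)


print(chinese_room("你会说中文吗？"))  # → 会，我说得很流利。
```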

The Major Subfields: Pillars of Modern AI

The vast landscape of Artificial Intelligence is organized into several major subfields, each focusing on a different facet of intelligence. While they are distinct disciplines, they are deeply interconnected, and modern AI systems often integrate techniques from multiple areas.

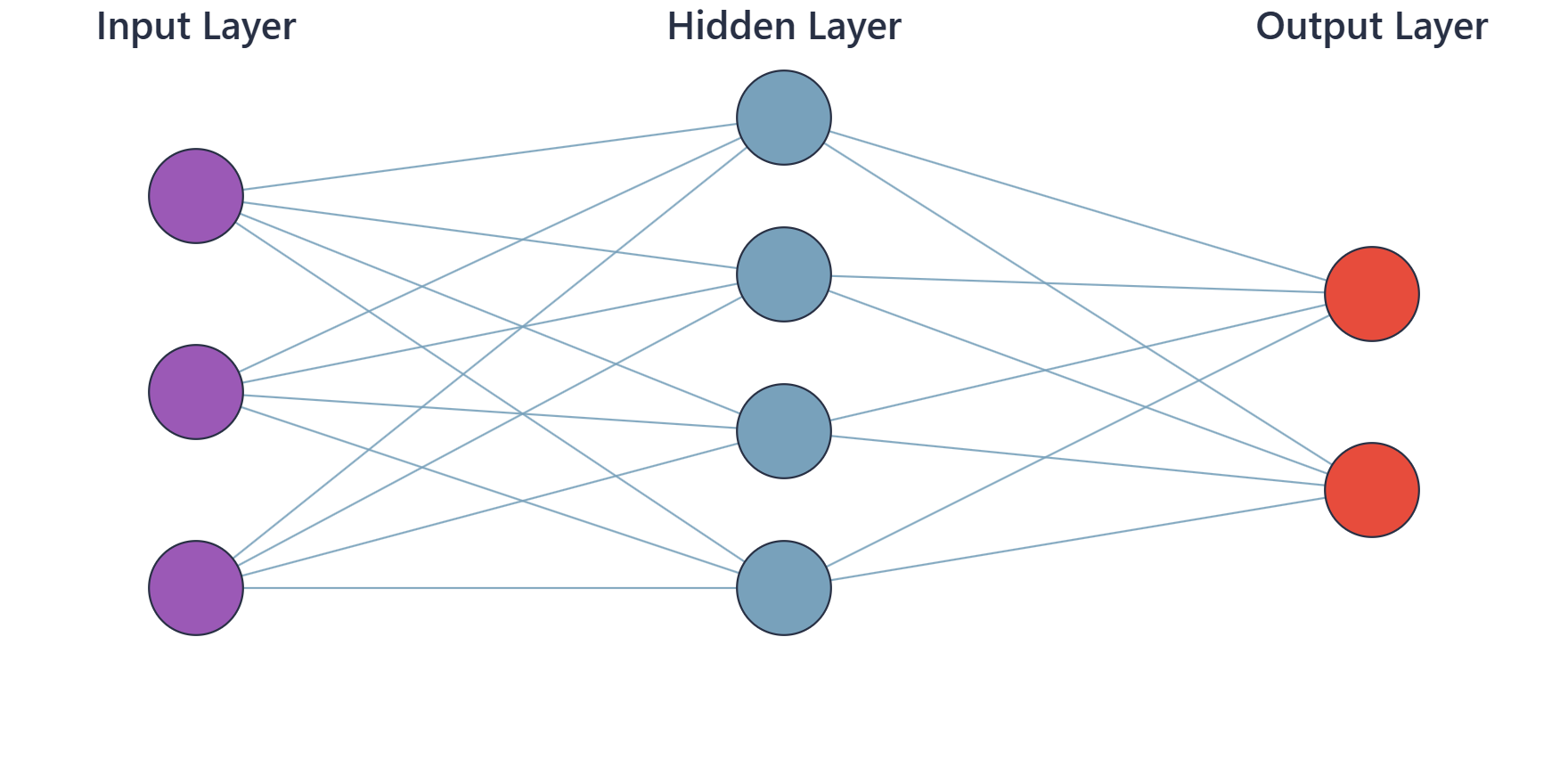

Machine Learning (ML) is arguably the most dominant and impactful subfield of AI today. It is the science of getting computers to act without being explicitly programmed. Instead of hard-coding rules, an ML model learns patterns directly from data. This learning process can be supervised, where the model is trained on a dataset containing labeled examples (e.g., images of cats labeled “cat”). It can be unsupervised, where the model must find hidden structures in unlabeled data (e.g., clustering customers into different market segments based on their purchasing behavior). Or it can be reinforcement learning, where an agent learns to take actions in an environment to maximize a cumulative reward (e.g., training an AI to play a video game by rewarding it for high scores). The rise of deep learning, a subset of ML that uses multi-layered neural networks, has been the primary driver of recent breakthroughs in AI.
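
To illustrate the unsupervised case, the sketch below runs k-means on six made-up customers described by two hypothetical purchasing features. k-means is one common clustering algorithm; the text does not prescribe a particular method. The code is plain Python for readability. In practice a library implementation would normally be used, with features scaled and several random initializations tried.

```python
import math


def kmeans(points, k, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then move each
    centroid to the mean of its assigned points. For determinism, the first k
    points are used as initial centroids (a simplistic choice)."""
    centroids = list(points[:k])
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical customers: (visits per month, average basket size in tens of dollars)
customers = [(1.0, 1.2), (8.5, 9.0), (1.5, 0.8), (0.9, 1.1), (9.2, 8.7), (8.8, 9.4)]

centroids, segments = kmeans(customers, k=2)
for centroid, members in zip(centroids, segments):
    print([round(c, 2) for c in centroid], members)
```

No labels are supplied. Two segments emerge from the geometry of the data alone: occasional, small-basket shoppers and frequent, large-basket shoppers.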

Natural Language Processing (NLP) is concerned with giving computers the ability to understand, interpret, and generate human language. This is an incredibly challenging domain due to the ambiguity, context-dependency, and creativity inherent in language. NLP encompasses a wide range of tasks, including text classification (e.g., spam detection), machine translation (e.g., Google Translate), sentiment analysis (e.g., determining if a product review is positive or negative), and question answering. Modern NLP relies heavily on deep learning models, particularly the Transformer architecture. Transformers underpin both generative large language models (LLMs) such as GPT, which can produce remarkably coherent and contextually relevant text, and encoder models such as BERT, which are widely used for understanding tasks like classification and question answering.
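
Modern NLP relies on large neural models, but the basic shape of a text-classification task such as sentiment analysis can be shown with a much older technique. The sketch below uses a multinomial naive Bayes classifier over a bag of words, with add-one (Laplace) smoothing. This is a deliberately simple, swapped-in method, not how Transformer-based systems work, and the six training reviews are invented.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Crude tokenizer: lowercase, split on whitespace, strip basic punctuation."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return [w for w in words if w]


def train(examples):
    """Count word occurrences per class and documents per class."""
    word_counts = {label: Counter() for _, label in examples}
    class_counts = Counter(label for _, label in examples)
    for text, label in examples:
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, class_counts, vocab


def predict(text, word_counts, class_counts, vocab):
    """Return the class with the highest naive Bayes log-probability."""
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        score = math.log(class_counts[label] / total_docs)  # log prior
        denom = sum(counts.values()) + len(vocab)            # add-one smoothing
        for word in tokenize(text):
            if word in vocab:                                # skip never-seen words
                score += math.log((counts[word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Invented training reviews.
reviews = [
    ("Great product, works perfectly", "positive"),
    ("Absolutely love it, great value", "positive"),
    ("Excellent quality and fast shipping", "positive"),
    ("Terrible, broke after one day", "negative"),
    ("Awful quality, complete waste of money", "negative"),
    ("Stopped working, very disappointed", "negative"),
]

model = train(reviews)
print(predict("great quality, love it", *model))   # → positive
print(predict("terrible waste of money", *model))  # → negative
```

Word order is ignored entirely. That is one of the limitations sequence models such as Transformers were designed to overcome.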

Computer Vision (CV) aims to replicate the capabilities of human vision, allowing machines to “see” and interpret the visual world. This involves processing and understanding information from images and videos. Core CV tasks include image classification (assigning a label to an image), object detection (locating and identifying multiple objects within an image), image segmentation (partitioning an image into meaningful parts, often at the pixel level), and facial recognition. Like NLP, computer vision has been revolutionized by deep learning, specifically Convolutional Neural Networks (CNNs), which are designed to process grid-like data such as images. CV is the enabling technology behind autonomous vehicles, medical image analysis, and automated surveillance.
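
The point about “grid-like data” can be illustrated with the core operation of a CNN layer: slide a small kernel over an image and take a weighted sum at each position. Below is a minimal pure-Python sketch with no padding and stride 1. Technically it computes cross-correlation, which is what most deep learning libraries compute under the name “convolution.” The 5x5 image and the hand-picked vertical-edge kernel are illustrative. In a CNN the kernel values are learned from data rather than chosen by hand.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A tiny grayscale image: dark on the left (0), bright on the right (9).
image = [[0, 0, 9, 9, 9] for _ in range(5)]

# Hand-picked vertical-edge detector: responds where brightness rises left to right.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

for row in conv2d(image, kernel):
    print(row)  # → [27, 27, 0] (printed three times)
```

The output is large wherever the 3x3 window straddles the dark-to-bright boundary and zero over the uniform bright region. Stacking many such learned filters is what lets a CNN build up from edges to shapes to objects.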

These three pillars—ML, NLP, and CV—are complemented by other important areas such as robotics (which integrates AI with physical actuators to perform tasks in the real world), knowledge representation and reasoning (which focuses on how to store information and make logical inferences), and planning and optimization (which deals with finding optimal sequences of actions to achieve goals). A comprehensive AI system, such as a humanoid robot, would need to integrate all these capabilities: using computer vision to see, NLP to communicate, machine learning to adapt, and robotics to act.

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'fontFamily': 'Open Sans'}}}%%
graph TD
    AI[<b>Artificial Intelligence</b>]
    AI --> ML[<b>Machine Learning</b><br>Learn from data]
    ML --> DL[<b>Deep Learning</b><br>Multi-layered Neural Networks]
    AI --> NLP[<b>Natural Language Processing</b><br>Understand & generate language]
    AI --> CV[<b>Computer Vision</b><br>Perceive & interpret visual world]
    AI --> Robotics[<b>Robotics</b><br>Act in the physical world]
    AI --> KR[<b>Knowledge Representation</b><br>Store & reason about information]
    style AI fill:#283044,stroke:#283044,stroke-width:2px,color:#ebf5ee
    style ML fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044
    style DL fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee
    style NLP fill:#e74c3c,stroke:#e74c3c,stroke-width:1px,color:#ebf5ee
    style CV fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044
    style Robotics fill:#2d7a3d,stroke:#2d7a3d,stroke-width:1px,color:#ebf5ee
    style KR fill:#f1c40f,stroke:#f1c40f,stroke-width:1px,color:#283044
```

Conceptual Framework and Analysis

Building effective AI systems requires more than just technical skill; it demands a deep conceptual understanding of the different philosophies and strategies that have shaped the field. This section explores the theoretical frameworks that guide AI development, provides a comparative analysis of competing approaches, and uses conceptual scenarios to illuminate the principles of intelligent system design.

Theoretical Framework Application: Symbolic vs. Connectionist AI

The history of AI has been characterized by a long-standing debate between two fundamentally different approaches to creating intelligence: symbolic AI and connectionist AI. Understanding this dichotomy is essential for appreciating the strengths and weaknesses of various AI techniques and for making informed architectural decisions.

Symbolic AI, often referred to as “Good Old-Fashioned AI” (GOFAI), was the dominant paradigm from the 1950s to the late 1980s. This approach is rooted in logic, philosophy, and the belief that intelligence can be achieved by manipulating symbols according to a set of explicit rules. The core hypothesis is that all knowledge can be represented as a collection of symbols and the relationships between them. Thinking, therefore, is a process of formal reasoning, akin to mathematical deduction. An example of this approach is an expert system, a program designed to replicate the decision-making ability of a human expert in a narrow domain, like medical diagnosis. The system’s knowledge base is painstakingly built by interviewing experts and encoding their knowledge into a series of if-then rules.

- Scenario Application: Imagine building an AI to act as a mortgage loan officer. A symbolic approach would involve creating a complex rule-based system. The rules might look like this:
  - `IF applicant_credit_score < 620 THEN deny_loan`
  - `IF debt_to_income_ratio > 0.43 AND loan_to_value_ratio > 0.95 THEN deny_loan`

  The system’s intelligence is explicit, transparent, and auditable. We can trace the exact rule that led to a decision. However, this approach is brittle. It cannot handle unforeseen circumstances not covered by the rules, and creating and maintaining the rulebook is incredibly labor-intensive. What if a new type of credit report is introduced? The entire system may need to be re-engineered.
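
The two example rules translate almost directly into code. In the minimal Python sketch below, the rules are stored as data so that every decision reports which rule fired; that is the auditability property described above. The thresholds come from the example rules. The `Applicant` fields and the default outcome when no rule fires (referring the case to a human underwriter) are assumptions for illustration, not real lending policy.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    credit_score: int
    debt_to_income_ratio: float
    loan_to_value_ratio: float


# Each rule: (name, condition, decision). Rules are checked in order.
RULES = [
    ("R1: credit score below 620",
     lambda a: a.credit_score < 620,
     "deny_loan"),
    ("R2: DTI above 0.43 and LTV above 0.95",
     lambda a: a.debt_to_income_ratio > 0.43 and a.loan_to_value_ratio > 0.95,
     "deny_loan"),
]


def decide(applicant: Applicant) -> tuple[str, str]:
    """Return (decision, explanation); the explanation names the rule that fired."""
    for name, condition, decision in RULES:
        if condition(applicant):
            return decision, name
    # Assumption: applicants not caught by any rule go to a human reviewer.
    return "refer_to_underwriter", "no rule fired"


print(decide(Applicant(credit_score=700, debt_to_income_ratio=0.50, loan_to_value_ratio=0.97)))
# → ('deny_loan', 'R2: DTI above 0.43 and LTV above 0.95')
```

Supporting a new data source, such as a new type of credit report, means writing, ordering, and re-validating additional rules by hand. This is the maintenance burden noted above.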

Connectionist AI, on the other hand, is inspired by the structure and function of the human brain. Instead of explicit rules, this approach uses networks of simple, interconnected processing units—artificial neurons. Intelligence is not programmed; it emerges from the interactions of these neurons as they learn from data. This is the foundation of modern machine learning and deep learning. The “knowledge” of a connectionist system is not stored in a readable rule but is distributed across the numerical weights of the connections between neurons.

- Scenario Application: To build the same mortgage loan officer AI with a connectionist approach, we would not write rules. Instead, we would gather a massive dataset of past loans, including the applicants’ financial details and the final outcome (whether each loan was repaid or defaulted). We would then train a neural network on this data. The network would learn to identify the complex, non-linear patterns that correlate with loan defaults, without any explicit programming. It might discover subtle interactions between variables that a human expert would miss. The resulting system can capture patterns no one wrote down and can be retrained as new data arrives, but it suffers from a lack of transparency. It becomes a “black box”: we can measure how well it performs, but we may not know why it made a particular decision, which is a significant challenge in regulated industries like finance.
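
The connectionist alternative can be sketched with the smallest possible “network”: a single artificial neuron with a sigmoid activation (equivalent to logistic regression), trained by gradient descent. This minimal sketch uses invented, pre-scaled features and labels (1 = repaid, 0 = defaulted). A real system would use many more features, far more data, a deeper network, and a held-out validation set. Notice what the trained model “knows”: a list of numeric weights, not a readable rule.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, lr=1.0, epochs=5000):
    """Full-batch gradient descent on the log-loss of a single sigmoid neuron."""
    n_features = len(data[0][0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in data:
            # Gradient of the log-loss w.r.t. the pre-activation is (prediction - label).
            error = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
            grad_b += error
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


def predict_repay_probability(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Hypothetical, pre-scaled features: (credit score scaled to 0-1, debt-to-income ratio)
loans = [((0.9, 0.20), 1), ((0.8, 0.30), 1), ((0.7, 0.25), 1),
         ((0.3, 0.60), 0), ((0.2, 0.50), 0), ((0.4, 0.70), 0)]

w, b = train_neuron(loans)
print("learned weights:", [round(wi, 2) for wi in w], "bias:", round(b, 2))
```

With only two features, the weights can still be read as a rough direction. In a realistic network with thousands or millions of weights, however, no single number corresponds to a human-readable rule. That is the “black box” problem described above.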

This fundamental tension between symbolic and connectionist approaches is a central theme in AI. Symbolic systems offer explainability and precision but are rigid. Connectionist systems offer adaptability and the ability to learn from raw data but can be opaque and require vast amounts of data. The future of AI likely lies in hybrid systems that combine the strengths of both: using connectionist models for perception and pattern recognition, and symbolic models for high-level reasoning, planning, and explainability.

Comparative Analysis: Approaches to Problem Solving

When an AI engineer faces a new problem, the choice of methodology is critical. The decision depends on the nature of the problem, the availability of data, and the requirement for explainability. Let’s analyze different problem-solving paradigms.

AI Problem-Solving Paradigm Comparison

| Approach | Core Principle | Best For | Strengths | Weaknesses |
|---|---|---|---|---|
| Rule-Based Systems | Knowledge encoded as explicit if-then rules. | Problems with a well-defined, stable set of rules (e.g., tax calculation, regulatory compliance). | High transparency, explainability, predictability. | Brittle, not adaptive, difficult to maintain for complex problems. |
| Search Algorithms | Exploring a state space of possibilities to find a solution path. | Puzzles, game playing (Chess, Go), route planning (GPS navigation). | Systematic; complete and optimal variants (e.g., uniform-cost search, A* with an admissible heuristic) are guaranteed to find an optimal solution if one exists, given enough time and memory. | Computationally expensive (combinatorial explosion), requires a well-defined problem space. |
| Supervised Learning | Learning a mapping from input to output from labeled data. | Classification (spam vs. not spam), regression (predicting house prices). | High accuracy on specific tasks, can model complex patterns. | Requires large amounts of labeled data, can overfit, often lacks explainability. |
| Unsupervised Learning | Finding hidden structure in unlabeled data. | Customer segmentation, anomaly detection, topic modeling. | Does not require labeled data, can discover novel insights. | Results can be subjective to interpret, no clear “right” answer. |
| Reinforcement Learning | Learning through trial and error by maximizing a reward signal. | Robotics, game AI, resource management (e.g., data center cooling). | Can learn effective strategies in complex, dynamic environments. | Often very data-hungry (can require millions of trials); defining a good reward function is difficult. |
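
To ground the “Search Algorithms” row, here is a minimal Python sketch of uniform-cost search (Dijkstra's algorithm applied to path-finding). This strategy is guaranteed to return a lowest-cost path when all step costs are non-negative. The road network and travel times are hypothetical. The priority queue comes from Python's standard `heapq` module.

```python
import heapq


def uniform_cost_search(graph, start, goal):
    """Expand the cheapest frontier node first; return (cost, path) or (inf, [])."""
    frontier = [(0, start, [start])]  # entries: (path cost so far, node, path)
    best_cost = {start: 0}
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path  # the first time the goal is popped, its cost is optimal
        if cost > best_cost.get(node, float("inf")):
            continue           # stale entry: a cheaper route to this node was found
        for neighbor, step in graph.get(node, {}).items():
            new_cost = cost + step
            if new_cost < best_cost.get(neighbor, float("inf")):
                best_cost[neighbor] = new_cost
                heapq.heappush(frontier, (new_cost, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical road network: travel times in minutes.
roads = {
    "Home":    {"Main St": 4, "Highway": 2},
    "Main St": {"Office": 5},
    "Highway": {"Main St": 1, "Office": 10},
    "Office":  {},
}

print(uniform_cost_search(roads, "Home", "Office"))
# → (8, ['Home', 'Highway', 'Main St', 'Office'])
```

On a real road map the frontier can grow very large, which is the computational-cost weakness listed in the table. Informed variants such as A* add a heuristic estimate of the remaining cost so that fewer nodes are expanded.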

Decision Matrix for Selecting an Approach:

Consider designing an AI for a hospital to predict patient readmission risk.

- Data Availability: Do we have a large, historical dataset of patient records with clear labels (readmitted / not readmitted)? If yes, Supervised Learning is a strong candidate.

- Explainability Requirement: Do doctors and regulators need to understand why the model predicted a high risk for a specific patient? If yes, a pure “black box” deep learning model is problematic. A simpler, more interpretable model (like a logistic regression or decision tree) or a hybrid approach might be necessary. A Rule-Based System might even be used to handle certain high-stakes, clear-cut cases.

- Problem Complexity: Is the relationship between patient data and readmission risk simple and linear, or is it highly complex and non-linear? If it is complex, hand-written rules are likely to miss important interactions, and the pattern-finding power of Supervised Learning is usually the better fit.

- Dynamic Environment: Does the nature of treatments and patient populations change rapidly? If so, a model that can be easily retrained is needed. This favors ML approaches over rigid rule-based systems. Reinforcement Learning is not a good fit here, as we cannot ethically perform “trial and error” with patient health.

This analytical process demonstrates that there is no single “best” approach. The choice is a trade-off between performance, cost, transparency, and adaptability, guided by the specific constraints of the problem domain.

Conceptual Examples and Scenarios

To solidify these concepts, let’s explore scenarios that highlight the nuances of AI.

Scenario 1: The “Intelligent” Thermostat

A standard thermostat turns on the heat when the temperature drops below a set point. This is automation, not AI. It follows a simple, explicit rule.

A learning smart thermostat (Nest is a well-known example) goes further. Such a device learns your daily schedule by observing when you adjust the temperature, uses sensors to detect whether anyone is home, and pulls weather data from the internet to anticipate temperature changes. One plausible design would use unsupervised learning to cluster your behavior into patterns (e.g., “weekday morning,” “weekend evening”). It would then use supervised learning (regression) to predict the setting that best balances comfort and energy savings. It might even use reinforcement learning, treating your subsequent manual adjustments as a “reward” or “penalty” signal that refines its choices over time. This system is a microcosm of a rational agent: it perceives its environment (temperature, occupancy, time), reasons about its goal (energy efficiency and comfort), and acts (adjusts the heat).
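
The “learns your schedule” behavior can be approximated far more simply than a commercial product would do it. The sketch below is hypothetical and does not describe how Nest or any specific device works. It groups manual adjustments into (day type, hour) slots and keeps a running average of the chosen temperature per slot. For each future slot it then predicts that average, or a default of 20.0 °C (an arbitrary assumption) when no data exists yet. It stands in for the regression component only; clustering and reinforcement learning are omitted.

```python
from collections import defaultdict


class SetpointLearner:
    """Hypothetical sketch: learns a preferred temperature for each
    (day type, hour) slot by averaging the occupant's manual adjustments."""

    def __init__(self, default_temp: float = 20.0):
        self.default_temp = default_temp
        self.totals = defaultdict(float)  # slot -> sum of chosen temperatures
        self.counts = defaultdict(int)    # slot -> number of adjustments seen

    @staticmethod
    def slot(is_weekend: bool, hour: int) -> tuple[str, int]:
        return ("weekend" if is_weekend else "weekday", hour)

    def observe(self, is_weekend: bool, hour: int, chosen_temp: float) -> None:
        """Record one manual adjustment made by the occupant."""
        key = self.slot(is_weekend, hour)
        self.totals[key] += chosen_temp
        self.counts[key] += 1

    def predict(self, is_weekend: bool, hour: int) -> float:
        """Mean of past choices in this slot, or the default if none yet."""
        key = self.slot(is_weekend, hour)
        n = self.counts.get(key, 0)
        return self.totals[key] / n if n else self.default_temp


learner = SetpointLearner()
for temp in (21.0, 22.0, 21.5):  # three weekday mornings at 7:00
    learner.observe(is_weekend=False, hour=7, chosen_temp=temp)

print(learner.predict(is_weekend=False, hour=7))  # → 21.5
print(learner.predict(is_weekend=True, hour=7))   # → 20.0 (no data yet)
```

A real product would also need occupancy sensing, weather inputs, and some way to weigh comfort against energy use. This sketch only captures the idea of turning observed behavior into a prediction.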

Scenario 2: The Creative AI

Can an AI be creative? Consider an AI like DALL-E 2 or Midjourney, which generates images from text prompts. If you ask it for “a photograph of an astronaut riding a horse on Mars,” it produces a novel image that has never existed before. This appears to be a creative act.

A symbolic AI approach to this problem would be nearly impossible. One cannot write rules to define “art” or “creativity.”

The connectionist approach, however, excels. These models are trained on hundreds of millions, or even billions, of image-text pairs collected from the internet. They don’t “understand” what an astronaut or a horse is in the human sense. Instead, they learn a statistical mapping in a high-dimensional space between the concepts (represented by text) and the visual patterns (represented by pixels). When you provide a new prompt, the model navigates this learned “latent space” to find a point that represents a plausible combination of the requested concepts and then decodes that point back into a pixel-based image.

Is this true creativity or sophisticated plagiarism? This is a modern philosophical debate. The AI is not creating from a vacuum; it is recombining and transforming patterns from its training data in novel ways. This forces us to question our own definition of creativity. Is human creativity not also, in part, the novel recombination of learned experiences and influences?

Analysis Methods and Evaluation Criteria

Evaluating an AI system goes beyond simple accuracy metrics. A comprehensive evaluation framework considers multiple dimensions.

- Performance Metrics: These are quantitative measures of success. For a classification model, this includes accuracy, precision, recall, and the F1 score. For a regression model, it’s Mean Squared Error (MSE) or R-squared. For a reinforcement learning agent, it’s the cumulative reward.

- Robustness and Reliability: How does the system perform on out-of-distribution data—data that looks different from its training set? How susceptible is it to adversarial attacks, where small, malicious perturbations to the input cause the model to fail catastrophically? A robust system should degrade gracefully, not fail silently.

- Efficiency and Scalability: What are the computational costs of training and inference? How much memory and processing power does it require? A model that takes weeks to train or requires a supercomputer to run may be impractical for real-world deployment. The algorithm’s computational complexity (typically expressed in Big O notation) is a key consideration.

- Explainability and Interpretability (XAI): How transparent are the model’s decisions? Can we understand why it made a particular prediction? For high-stakes applications like medical diagnosis or legal analysis, this is not a luxury but a requirement. Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are emerging to provide insights into black-box models.

- Fairness and Bias: Does the model perform equally well across different demographic groups? AI models trained on biased data will perpetuate and even amplify those biases. For example, a facial recognition system trained predominantly on images of white males may have a much higher error rate for women of color. Auditing for and mitigating bias is a critical part of responsible AI engineering.

- Ethical Impact: What are the broader societal consequences of deploying this system? Does it displace jobs? Does it impact user privacy? Does it have the potential for misuse? A holistic analysis must consider the full lifecycle and societal context of the AI.
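The quantitative metrics listed under Performance Metrics can be computed directly from their textbook definitions. The following is a minimal pure-Python sketch; the function names are hypothetical, and in practice you would typically rely on a library such as scikit-learn, whose `sklearn.metrics` module provides equivalents.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false alarms
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # misses
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are real
    recall = tp / (tp + fn) if tp + fn else 0.0     # of real positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def regression_metrics(y_true, y_pred):
    """Mean Squared Error and R-squared for a regression model."""
    n = len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)             # total sum of squares
    mse = ss_res / n
    r2 = 1 - ss_res / ss_tot if ss_tot else 0.0
    return {"mse": mse, "r2": r2}
```

For labels `[1, 0, 1, 1, 0, 0, 1, 0]` and predictions `[1, 0, 0, 1, 0, 1, 0, 0]`, the sketch reports an accuracy of 0.625 but a recall of only 0.5: the model misses half of the positive cases. Reporting several metrics side by side exposes failures that a single accuracy figure hides.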

Evaluating an AI is not a single step but a continuous process, from initial design through deployment and beyond, requiring a multi-faceted perspective that balances raw performance with safety, fairness, and real-world utility.
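The fairness dimension becomes concrete when a metric is disaggregated by group instead of being reported as one overall number. The sketch below assumes that group labels are available for an evaluation set; the helper names and toy data are hypothetical, and equal error rates is only one of several fairness criteria used in practice.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Misclassification rate computed separately for each group."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        totals[g] += 1
        errors[g] += int(t != p)
    return {g: errors[g] / totals[g] for g in totals}


def max_disparity(rates):
    """Largest gap in error rate between the best- and worst-served groups."""
    return max(rates.values()) - min(rates.values())


rates = error_rate_by_group(
    y_true=[1, 0, 1, 0, 1, 0, 1, 0],
    y_pred=[1, 0, 1, 0, 0, 1, 1, 1],
    groups=["A", "A", "A", "A", "B", "B", "B", "B"],
)
```

Here the overall accuracy is 62.5%, which sounds mediocre but uniform. The per-group view shows something quite different: group A sees no errors at all, while group B sees an error rate of 75%.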

Industry Applications and Case Studies

Artificial Intelligence has moved from research labs to become a cornerstone of value creation across nearly every industry. Its applications are diverse, driving efficiency, enabling new products, and solving previously intractable problems.

Healthcare and Diagnostics: AI, particularly computer vision and NLP, is transforming medical diagnostics. In radiology and ophthalmology, deep learning models are trained on large libraries of medical images (X-rays, CT and MRI scans, retinal photographs) to detect signs of disease, such as cancerous tumors or diabetic retinopathy. In several published studies, their accuracy on such narrowly defined tasks has matched or exceeded that of specialists. For example, companies like PathAI use machine learning to assist pathologists in analyzing tissue samples, with the aim of improving the accuracy and speed of cancer diagnosis. The business value lies in earlier disease detection, fewer diagnostic errors, and optimized workflows for medical professionals. The technical challenge is immense: it requires extremely high accuracy, robust validation on diverse patient populations, and full compliance with strict privacy regulations such as HIPAA in the United States. The models must also be highly explainable to gain the trust of clinicians.

Finance and Algorithmic Trading: The financial sector was an early adopter of AI because of its ability to analyze vast amounts of data in real time. Reinforcement learning and predictive modeling are used to develop algorithmic trading strategies that execute trades at superhuman speeds, reacting to market fluctuations faster than any human trader. AI is also used for fraud detection, where unsupervised learning algorithms monitor streams of credit card transactions to identify anomalous patterns that indicate fraudulent activity. JPMorgan Chase uses an AI system called COiN (Contract Intelligence) to analyze legal documents such as commercial loan agreements in seconds. This work previously consumed hundreds of thousands of hours of lawyers' time each year. This application of NLP drastically reduces costs and accelerates deal-making.
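The core idea behind unsupervised fraud detection is to flag points that sit far from the bulk of the data, without any labeled examples of fraud. The sketch below uses the modified z-score, which is based on the median and the median absolute deviation (MAD), on a single feature (transaction amount). This is a deliberately simple statistical stand-in. Production fraud systems combine many features with learned models. The function names, the amounts, and the commonly used 3.5 cutoff are illustrative assumptions.

```python
import statistics


def robust_z_scores(values):
    """Modified z-scores based on the median and MAD, which resist distortion by the outliers themselves."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return [0.0] * len(values)  # no spread, so nothing stands out
    # 0.6745 rescales MAD so scores are comparable to standard z-scores for normal data
    return [0.6745 * (v - med) / mad for v in values]


def flag_anomalies(amounts, threshold=3.5):
    """Indices of transactions whose amount is an outlier relative to the rest."""
    return [i for i, z in enumerate(robust_z_scores(amounts)) if abs(z) > threshold]


amounts = [42.0, 18.5, 55.0, 23.0, 37.5, 2950.0, 29.0, 61.0]
suspicious = flag_anomalies(amounts)  # flags index 5, the 2950.0 charge
```

The median and MAD are used instead of the mean and standard deviation as a deliberate design choice. A single large fraudulent charge inflates the standard deviation enough to mask itself, whereas the median-based statistics are barely affected by it.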

Machine Learning Algorithm Comparison

| Algorithm | Problem Type | Strengths | Weaknesses | Best Use Cases |
|---|---|---|---|---|
| Linear Regression | Supervised (Regression) | Simple, interpretable, fast. Good baseline model. | Assumes a linear relationship. Sensitive to outliers. | Predicting continuous values such as house prices and sales forecasts. |
| Logistic Regression | Supervised (Classification) | Interpretable, efficient. Outputs probabilities. | Assumes a linear decision boundary; cannot capture non-linear patterns without feature engineering. | Binary classification such as email spam detection or medical diagnosis (yes/no). |
| Decision Trees | Supervised (Class. & Reg.) | Highly interpretable (like a flowchart). Handles non-linear data. | Prone to overfitting. Can be unstable. | Credit scoring, customer churn prediction, cases where explainability is key. |
| Random Forest | Supervised (Class. & Reg.) | High accuracy; much less prone to overfitting than single trees. Some implementations handle missing values. | Less interpretable (“black box”). Slower to train than single trees. | Complex classification/regression tasks, fraud detection, stock market analysis. |
| Support Vector Machines (SVM) | Supervised (Classification) | Effective in high-dimensional spaces. Memory efficient. | Does not scale well to large datasets and struggles with noisy data. Choosing the right kernel can be tricky. | Text classification, image recognition, bioinformatics. |
| K-Means Clustering | Unsupervised (Clustering) | Simple to implement, fast. Scales to large datasets. | Must specify the number of clusters (K). Sensitive to initial cluster centers. | Customer segmentation, document clustering, image compression. |
| Convolutional Neural Networks (CNN) | Supervised (Classification) | State-of-the-art for visual tasks. Learns spatial hierarchies of features. | Requires large datasets and GPUs. “Black box” nature. | Image classification, object detection, medical image analysis. |
| Recurrent Neural Networks (RNN) | Supervised (Sequence) | Models sequential data. Has a “memory” of past inputs. | Vanishing/exploding gradient problem. Hard to train. (Often replaced by gated variants such as LSTMs, or by Transformers.) | Natural language processing, speech recognition, time-series forecasting. |
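The K-Means weaknesses listed in the table are easy to see in code. The following minimal sketch implements Lloyd's algorithm, the standard iterative procedure behind K-Means, for 2-D points in plain Python. The caller must supply both K and the starting centers. The function name and data are illustrative. Libraries such as scikit-learn's `KMeans` add smarter initialization (k-means++) and multiple restarts to reduce sensitivity to the starting centers.

```python
import math


def kmeans(points, initial_centers, max_iter=100):
    """Lloyd's algorithm: alternate assignment and update steps until centers stop moving.

    K is fixed by len(initial_centers), so the caller chooses K and the starting centers.
    """
    centers = [tuple(c) for c in initial_centers]
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its assigned points.
        new_centers = []
        for c, members in zip(centers, clusters):
            if members:
                new_centers.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centers.append(c)  # keep an empty cluster's center in place
        if new_centers == centers:
            break  # converged
        centers = new_centers
    labels = [min(range(len(centers)), key=lambda i: math.dist(p, centers[i])) for p in points]
    return centers, labels


points = [(1, 1), (1.5, 2), (1, 0), (8, 8), (9, 8.5), (8, 9)]
centers, labels = kmeans(points, initial_centers=[(0, 0), (10, 10)])
```

With K = 2 and well-separated starting centers, the two obvious groups are recovered after one update. Rerunning the sketch with a different K, or with both initial centers placed inside the same group, illustrates why the table lists these choices as weaknesses.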

Retail and Supply Chain Optimization: E-commerce giants like Amazon have built their empires on AI. Their recommendation engines, which suggest products you might like, are a classic application of collaborative filtering, which infers a user's preferences from the purchase and rating patterns of many users. These engines drive a significant portion of sales. Behind the scenes, AI optimizes every step of the supply chain. Predictive models forecast demand for millions of items, keeping products in stock without over-investing in inventory. In Amazon's fulfillment centers, thousands of robots navigate autonomously, using a combination of computer vision and reinforcement learning to retrieve products and bring them to human workers. This level of automation and optimization would be impossible without a sophisticated, integrated AI ecosystem.
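The collaborative filtering idea can be sketched in a few lines. This toy version of item-to-item collaborative filtering treats each item as a vector of user ratings and measures how similar two items are with cosine similarity. An unseen item is then scored by summing its similarity to each item the user has rated, weighted by that rating. Production recommenders are far more elaborate. The data, function names, and scoring rule are illustrative assumptions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two sparse rating vectors stored as {user: rating}."""
    dot = sum(a[u] * b[u] for u in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(ratings, user, top_n=2):
    """Rank items the user has not rated by similarity-weighted ratings of items they have rated.

    ratings maps item -> {user: rating}.
    """
    seen = {item for item, users in ratings.items() if user in users}
    scores = {}
    for candidate in ratings:
        if candidate in seen:
            continue
        score = sum(cosine(ratings[candidate], ratings[s]) * ratings[s][user] for s in seen)
        if score > 0:
            scores[candidate] = score
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


ratings = {
    "laptop": {"ana": 5, "ben": 4, "cai": 1},
    "mouse": {"ana": 4, "ben": 5, "dee": 4},
    "keyboard": {"ben": 4, "dee": 5},
    "novel": {"cai": 5, "dee": 1},
}
suggestions = recommend(ratings, "ana")  # "keyboard" ranks ahead of "novel"
```

The keyboard ranks first for ana because the users who rated it also rated the laptop and mouse she liked. This shows the key property of collaborative filtering: it needs no description of the products themselves, only patterns of co-rating.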

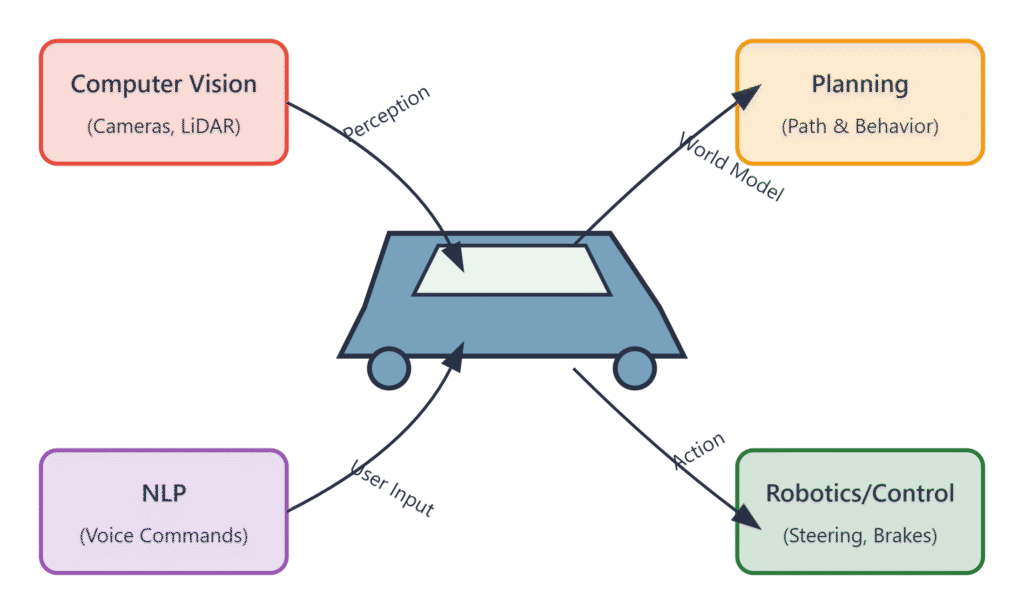

Automotive and Autonomous Driving: The development of self-driving cars by companies like Tesla, Waymo, and Cruise represents one of the most ambitious AI projects ever undertaken. An autonomous vehicle is a complex intelligent agent that must perceive its environment, predict the behavior of other agents (cars, pedestrians), and plan a safe path to its destination. It integrates nearly every subfield of AI. Perception algorithms, including computer vision, interpret data from cameras, LiDAR, and radar. Sensor fusion combines these inputs into a coherent world model. Predictive models anticipate the actions of others, and planning algorithms chart the route. The primary business value is safety, by reducing accidents caused by human error, along with efficiency. The challenges are enormous: these systems require near-perfect reliability and the ability to handle a practically unbounded variety of unpredictable “edge cases” on the road.
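Sensor fusion is easiest to grasp in its simplest form: combining two noisy estimates of the same quantity. The sketch below fuses independent distance readings by weighting each one by the inverse of its variance. This is the static measurement-update step that a Kalman filter generalizes to moving objects. It assumes unbiased, independent Gaussian noise. The sensor variances are illustrative and are not real hardware specifications.

```python
def fuse(measurements):
    """Inverse-variance weighted fusion of independent estimates.

    measurements: list of (estimate, variance) pairs, one per sensor.
    Returns the fused estimate and its variance.
    """
    weights = [1.0 / var for _, var in measurements]  # more precise sensors get more weight
    total = sum(weights)
    estimate = sum(w * x for w, (x, _) in zip(weights, measurements)) / total
    return estimate, 1.0 / total  # fused variance is smaller than any single input


# Hypothetical readings: LiDAR says 20.0 m (variance 0.04), radar says 20.6 m (variance 0.36).
distance, variance = fuse([(20.0, 0.04), (20.6, 0.36)])
```

The fused distance, 20.06 m, lies close to the more precise LiDAR reading. Its variance, 0.036, is lower than that of either sensor alone, which is the quantitative reason vehicles carry redundant sensors.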

Best Practices and Common Pitfalls

Developing AI systems is a complex endeavor that goes beyond writing code. Adhering to best practices is essential for building robust, ethical, and valuable systems, while being aware of common pitfalls can save projects from failure.

- Start with the Problem, Not the Technology: A common mistake is to become enamored with a specific AI technique (e.g., “we need to use deep learning”) without first deeply understanding the business problem. The most effective AI projects are those that start with a clear, well-defined problem and then select the appropriate technology to solve it. Sometimes the best solution is a simple logistic regression model, not a massive neural network.

Warning: Avoid “AI for AI’s sake.” If a problem can be solved effectively with a simpler, non-AI approach, that is often the better engineering decision due to lower cost, higher transparency, and easier maintenance.

- Data is the Foundation: The adage “garbage in, garbage out” is amplified in AI. The performance of any machine learning model is fundamentally capped by the quality and quantity of its training data. A common pitfall is underestimating the effort required for data collection, cleaning, labeling, and augmentation. Invest heavily in data infrastructure and processes. Ensure your data is representative of the real-world scenarios your AI will encounter to avoid sampling bias.

- Embrace an Iterative, Experimental Workflow: AI development is not a linear process. It is a cycle of hypothesis, experimentation, and analysis. Be prepared to try multiple models, tune hyperparameters, and re-evaluate your approach based on empirical results. This iterative loop is central to the MLOps (Machine Learning Operations) philosophy, which applies DevOps principles to the machine learning lifecycle.

Tip: Use version control not just for your code, but for your data and models as well. Tools like DVC (Data Version Control) and MLflow are widely used to make AI research and development reproducible and collaborative.

- Prioritize Ethics and Fairness from Day One: Ethical considerations are not an afterthought; they must be integrated into the entire AI development lifecycle. This includes auditing datasets for bias, ensuring models are fair across different demographic groups, protecting user privacy, and being transparent about the system’s capabilities and limitations. A common pitfall is deploying a model without considering its potential for societal harm or unintended consequences.

Note: Establish an ethical review process for high-stakes AI projects. This should involve diverse stakeholders, including ethicists, legal experts, and representatives from the communities that will be affected by the system.

- Plan for Monitoring and Maintenance: Deploying an AI model is not the end of the project. A model's performance can degrade over time as the real-world data it encounters drifts away from the data it was trained on. This drift takes two main forms. Data drift is a change in the distribution of the inputs. Concept drift is a change in the relationship between the inputs and the correct outputs. The resulting loss of accuracy is often called model drift. A best practice is to implement robust monitoring systems that track model performance, data distributions, and prediction fairness in production. Plan to periodically retrain and redeploy your models to maintain their accuracy and relevance.
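Two of the practices above, starting with the problem and understanding your data, meet in a simple habit: compare every model against a trivial baseline. On imbalanced data, a model that always predicts the most common label can look deceptively accurate. A minimal sketch, with hypothetical helper names and made-up label counts:

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy of a trivial model that always predicts the most common label."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def beats_baseline(model_accuracy, labels, margin=0.0):
    """True only if the model is better than doing nothing clever at all."""
    return model_accuracy > majority_baseline_accuracy(labels) + margin


labels = ["legit"] * 97 + ["fraud"] * 3
baseline = majority_baseline_accuracy(labels)  # 0.97
```

With 97% of transactions legitimate, a fraud model that reports 96% accuracy is actually worse than predicting "legit" every time. This is a reminder to define the real objective (here, catching fraud) before reaching for a sophisticated model.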

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'fontFamily': 'Open Sans'}}}%%
graph TD
A[Start: Define Business Problem] --> B(Data Engineering<br><i>Collection, Cleaning, Labeling</i>);
B --> C{Model Development<br><i>Training, Tuning, Experimentation</i>};
C --> D(Model Validation<br><i>Testing, Fairness & Bias Audit</i>);
D --> E((Deployment<br><i>Release to Production</i>));
E --> F(Monitoring & Observability<br><i>Track Performance, Detect Drift</i>);
F -- Retrain Signal --> B;
F -- New Insights --> A;
classDef start fill:#283044,stroke:#283044,stroke-width:2px,color:#ebf5ee;
classDef data fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee;
classDef model fill:#e74c3c,stroke:#e74c3c,stroke-width:1px,color:#ebf5ee;
classDef process fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044;
classDef decision fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044;
classDef deploy fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee;
class A start;
class B,F data;
class C model;
class D,E process;
```
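The "Detect Drift" stage of the lifecycle needs a concrete statistic. One common choice, especially in credit-risk modeling, is the Population Stability Index (PSI). It compares the binned distribution of a feature in production against its distribution in the training data:

PSI = sum over bins of (actual share - expected share) * ln(actual share / expected share)

A minimal sketch follows. The function names, bin edges, and data are hypothetical. The rule of thumb that a PSI above roughly 0.25 signals significant drift is a convention, not a law.

```python
import bisect
import math


def proportions(values, edges):
    """Share of values in each bin; edges are sorted cut points, outer bins are open-ended."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between training (expected) and production (actual) data."""
    total = 0.0
    for pe, pa in zip(proportions(expected, edges), proportions(actual, edges)):
        pe, pa = max(pe, eps), max(pa, eps)  # avoid log(0) for empty bins
        total += (pa - pe) * math.log(pa / pe)
    return total


training = [1, 2, 3, 4, 5, 6, 7, 8]
production = [6, 7, 8, 9, 9, 10, 7, 8]  # every value has moved into the top bin
drift_score = psi(training, production, edges=[3, 6])
```

Identical distributions give a PSI of exactly zero, while the shifted production sample scores far above 0.25. In a monitoring system, a score like that would raise the "Retrain Signal" shown in the diagram.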

Hands-on Exercises

These exercises are designed to reinforce the theoretical concepts of this chapter through analysis and design, without requiring extensive coding.

- Individual Exercise: Designing a Turing Test
  - Objective: To understand the practical challenges and philosophical implications of the Turing Test.
  - Task: You are tasked with designing the protocol for a modern Turing Test. Describe the following:
    - The rules of engagement for the interrogator, the human foil, and the AI.
    - The duration of the test and the topics of conversation allowed.
    - At least five specific, challenging questions you would ask to probe for genuine understanding versus clever mimicry. Explain your reasoning for each question.
    - The criteria for “passing” the test. Is a 51% deception rate enough?
  - Success Criteria: A well-defined protocol that addresses potential loopholes and demonstrates a critical understanding of the test’s limitations.

- Individual Exercise: AI System Classification
  - Objective: To practice identifying and categorizing different types of AI systems and their underlying approaches.
  - Task: For each of the following systems, classify it as primarily Rule-Based, Supervised Learning, Unsupervised Learning, or Reinforcement Learning. Justify your classification in 2-3 sentences, and identify whether it is an example of ANI, AGI, or ASI.
    - A system that recommends movies on Netflix based on your viewing history.
    - The AI opponent in a chess application that adjusts its difficulty based on your skill level.
    - A banking system that sorts customer support emails into categories like “Account Inquiry,” “Loan Application,” and “Complaint.”
    - A software program that calculates federal income tax based on user-entered financial data.
  - Success Criteria: Correct classification and a clear, concise justification for each system that demonstrates an understanding of the core principles of each AI paradigm.

- Team-Based Exercise: Ethical AI Review Board
  - Objective: To apply ethical frameworks to a real-world AI application and practice stakeholder analysis.
  - Task: Your team is the ethical review board for a large city. A proposal has been submitted to deploy an AI-powered facial recognition system in public spaces to identify individuals on a police watchlist.
    - Identify at least four key stakeholders (e.g., law enforcement, civil liberties advocates, citizens, the technology vendor).
    - For each stakeholder, describe their primary interests, potential benefits, and potential harms from this system.
    - Debate the pros and cons, considering issues of bias, privacy, and potential for misuse.
    - Draft a recommendation to the city council: should the system be approved, approved with specific conditions, or rejected? Justify your decision.
  - Success Criteria: A balanced analysis that considers multiple perspectives and a final recommendation supported by a well-reasoned ethical argument.

Tools and Technologies

While this chapter is theoretical, understanding the landscape of tools is crucial for any aspiring AI engineer. These are the foundational technologies upon which modern AI is built.

- Programming Languages: Python is the de facto standard language of AI and Machine Learning. Its simple syntax, extensive libraries, and strong community support make it well suited to everything from data analysis to building complex deep learning models. Key libraries include NumPy for numerical computation, Pandas for data manipulation, and Scikit-learn for traditional machine learning algorithms. While Python dominates, languages like R (for statistics), C++ (for performance-critical robotics and game AI), and Julia (an emerging language for high-performance scientific computing) are also used in specific niches.

- Deep Learning Frameworks: For any serious work in modern AI, you will use a deep learning framework. These frameworks provide the building blocks for creating and training neural networks, handling complex mathematics like automatic differentiation under the hood. The two dominant frameworks are:

- TensorFlow (Google): A powerful and flexible ecosystem with strong support for production deployment (via TensorFlow Extended – TFX) and mobile devices (TensorFlow Lite).

- PyTorch (originally developed by Meta, now governed by the PyTorch Foundation): Known for its intuitive, Python-native feel and flexibility, making it a favorite in the research community. It has rapidly gained ground in production environments as well.

- Cloud Platforms: Training large-scale AI models requires immense computational power. Cloud providers offer specialized AI/ML platforms that provide access to GPUs and TPUs, as well as managed services for the entire MLOps lifecycle. The major players are:

- Amazon Web Services (AWS): Offers Amazon SageMaker, a comprehensive platform for building, training, and deploying ML models.

- Google Cloud Platform (GCP): Features the Vertex AI platform, with strong integration with TensorFlow and access to Google’s proprietary TPUs.

- Microsoft Azure: Provides Azure Machine Learning, a collaborative environment with tools for developers and data scientists of all skill levels.

Note: Familiarity with at least one major cloud provider’s AI platform is becoming a standard requirement for AI engineering roles.
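Automatic differentiation, which the deep learning frameworks described above handle "under the hood," is less mysterious than it sounds. The following minimal, pure-Python sketch implements reverse-mode automatic differentiation for scalars, supporting only addition and multiplication. The `Value` class is a hypothetical teaching device. PyTorch and TensorFlow apply the same chain-rule bookkeeping to tensors, with hundreds of operations and GPU kernels.

```python
class Value:
    """A scalar that records the operations producing it, so gradients can flow backward."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # pushes self.grad to parents; set by each operation

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = backward
        return out

    def backward(self):
        """Fill in d(self)/d(node).grad for every node this value depends on."""
        order, visited = [], set()

        def visit(node):  # topological sort: parents before children
            if node not in visited:
                visited.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):  # apply the chain rule from output back to inputs
            node._backward()


w, x, b = Value(2.0), Value(3.0), Value(1.0)
y = w * x + b  # a one-neuron linear model: y = 7.0
y.backward()   # now w.grad == 3.0 (= x), x.grad == 2.0 (= w), b.grad == 1.0
```

Gradients are accumulated with `+=` rather than assigned. This matters when a value is used more than once: for `t * t + t` at `t = 3`, the contributions from each use sum to the correct derivative, 2t + 1 = 7. In PyTorch, the equivalent workflow is to create tensors with `requires_grad=True`, call `.backward()` on the result, and read `.grad`.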

Summary

This chapter established the foundational concepts of Artificial Intelligence. You have built a framework for understanding not just what AI is, but also its historical context, its different forms, and the ethical responsibilities that come with building it.

- Defining AI: You learned that AI is distinct from traditional programming, defined by its ability to perceive, reason, learn, and act in complex environments, often framed through the concept of a rational agent.

- The AI Spectrum: You can now differentiate between the practical reality of Artificial Narrow Intelligence (ANI), the ambitious goal of Artificial General Intelligence (AGI), and the hypothetical future of Artificial Superintelligence (ASI).

- Philosophical Foundations: You analyzed the Turing Test and the Chinese Room Argument, two pivotal thought experiments that shape how we define and evaluate machine intelligence.

- Core Subfields: You identified the major pillars of modern AI: Machine Learning, Natural Language Processing, and Computer Vision, and understand their primary objectives.

- Competing Paradigms: You compared the symbolic (rule-based) and connectionist (data-driven) approaches to AI, understanding the trade-offs between explainability and adaptability.

- Real-World Impact: You explored case studies from healthcare, finance, retail, and autonomous driving, connecting theoretical concepts to tangible business value and technical challenges.

- Responsible Development: You have been introduced to the best practices of AI engineering, emphasizing a problem-first mindset, data-centric workflows, and the critical importance of ethics and fairness.

Further Reading and Resources

- Russell, S., & Norvig, P. (2020). Artificial Intelligence: A Modern Approach (4th ed.). The definitive university textbook on AI, covering all topics in this chapter and beyond with immense depth.

- Turing, A. M. (1950). Computing Machinery and Intelligence. Mind, 59(236), 433-460. The original paper proposing the Turing Test. A foundational and surprisingly readable text.

- Searle, J. R. (1980). Minds, brains, and programs. Behavioral and Brain Sciences, 3(3), 417-457. The paper that introduced the Chinese Room Argument. Essential for understanding the critique of Strong AI.

- DeepLearning.AI – AI For Everyone (Coursera Course by Andrew Ng): An accessible, non-technical introduction to AI strategy and terminology from one of the field’s leading educators.

- Distill.pub: An online academic journal dedicated to clear, visual, and interactive explanations of machine learning research. An excellent resource for intuitive understanding of complex topics. https://distill.pub/

- Stanford Encyclopedia of Philosophy – “Artificial Intelligence”: A rigorous and comprehensive overview of the philosophical history and ongoing debates within the field of AI. https://plato.stanford.edu/entries/artificial-intelligence/

- The Allen Institute for AI (AI2): A leading research institute with a mission to contribute to humanity through high-impact AI research. Their publications and blog are excellent sources for cutting-edge work. https://allenai.org/

Glossary of Terms

- Artificial General Intelligence (AGI): A hypothetical form of AI that possesses the ability to understand or learn any intellectual task that a human being can. Also known as Strong AI.

- Artificial Narrow Intelligence (ANI): AI that is specialized for a specific task. All currently existing AI is ANI. Also known as Weak AI.

- Artificial Superintelligence (ASI): A hypothetical AI that possesses intelligence far surpassing that of the brightest human minds.

- Chinese Room Argument: A thought experiment by John Searle arguing that running a program, by itself, cannot give rise to genuine understanding or consciousness, only a simulation of it.

- Connectionism: An approach to AI that models intelligence using artificial neural networks, inspired by the structure of the brain. Knowledge is learned from data and stored in the weights of connections.

- Expert System: A classic example of symbolic AI that emulates the decision-making ability of a human expert in a narrow domain using a set of if-then rules.

- Machine Learning (ML): A subfield of AI focused on building systems that learn patterns from data without being explicitly programmed.

- Natural Language Processing (NLP): A subfield of AI concerned with the interaction between computers and human language.

- Rational Agent: An entity that perceives its environment and acts to maximize its expected performance measure. A central concept in modern AI.

- Symbolic AI: The classical approach to AI that represents knowledge using symbols and manipulates them with formal rules of logic.

- Turing Test: A test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.