Using AI for Predictive Maintenance in Edge Devices

1. Introduction: The Need for Smarter Maintenance

The landscape of industrial operations and the Internet of Things (IoT) is undergoing a significant transformation, driven by the need for greater efficiency, reliability, and cost-effectiveness. Central to this evolution is the concept of Predictive Maintenance (PdM), a strategy poised to redefine how organizations care for their critical assets. Moving beyond traditional reactive or scheduled approaches, PdM leverages the power of real-time data and artificial intelligence to anticipate equipment issues before they lead to costly failures.

1.1 Defining Predictive Maintenance (PdM) in the IoT Era

Predictive Maintenance is a proactive maintenance strategy that uses data collected from sensors embedded in or attached to equipment, combined with advanced analytics and artificial intelligence/machine learning (AI/ML) algorithms, to predict when a piece of machinery is likely to require maintenance.1 Unlike traditional methods that rely on fixed schedules or react to breakdowns, PdM focuses on the actual condition of the equipment, enabling interventions only when necessary.3 It represents a shift towards condition-based maintenance, driven by continuous monitoring and data analysis.4

The core components enabling modern PdM systems include 6:

- IoT Sensors: Devices that continuously collect real-time data on various equipment parameters such as temperature, vibration, pressure, humidity, acoustics, and power consumption.3

- Data Communication: Networks (wired or wireless like Wi-Fi, Bluetooth, Cellular IoT, Ethernet) that transmit sensor data from the equipment to a processing location.6

- Data Storage: Centralized or distributed systems, often involving cloud platforms or local edge storage, to manage the collected data.6

- Predictive Analytics Engine: Software, frequently powered by AI and ML algorithms, that analyzes the data to identify patterns, detect anomalies, predict potential failures, and estimate the remaining useful life (RUL) of components.1

While the concept of predicting failures isn’t entirely new, the convergence of affordable IoT sensors, robust communication networks, scalable cloud computing, and sophisticated AI/ML algorithms has made PdM significantly more feasible, accurate, and scalable across various industries.3 It thrives on leveraging continuous, real-time data streams to gain insights into equipment health.6

1.2 The Critical Importance of PdM in Modern Industrial and IoT Systems

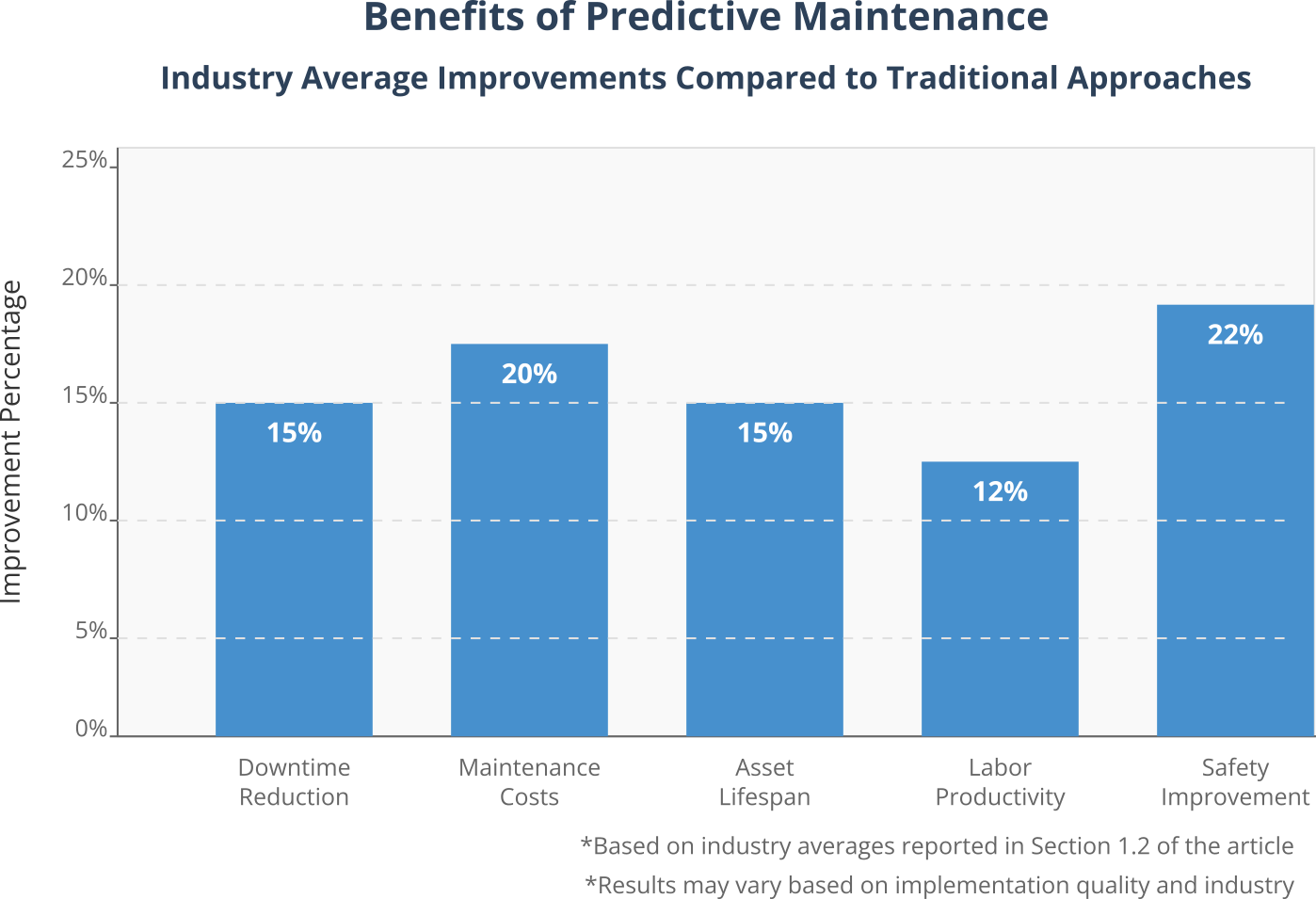

The adoption of PdM is driven by compelling economic and operational imperatives. Unplanned equipment downtime in industrial settings can be extraordinarily costly, leading to lost production, expensive emergency repairs, disruptions in supply chains, and potential safety hazards.2 For instance, unexpected outages can cost energy companies millions and lead to customer dissatisfaction, while manufacturing equipment failures significantly increase unit costs.2 Studies have indicated that PdM can yield substantial improvements, such as a 5-15% reduction in facility downtime and a 5-20% increase in labor productivity.2

PdM directly addresses these challenges by minimizing unexpected failures.1 By predicting potential issues, maintenance can be scheduled proactively during planned windows, avoiding costly disruptions and optimizing resource allocation.2 This proactive approach extends beyond just preventing downtime; it offers a range of benefits including:

- Extended Equipment Lifespan: Addressing issues early prevents minor problems from escalating into major failures, thus prolonging the operational life of assets.8

- Improved Safety: Identifying potential hazards before they lead to equipment malfunction enhances workplace safety.10

- Enhanced Operational Efficiency: Keeping equipment running optimally and reducing unexpected stops leads to smoother, more predictable operations.8

- Optimized Resource Allocation: Maintenance efforts are focused where and when they are needed, improving the efficiency of technicians and spare parts inventory management.7

The move towards PdM signifies more than just adopting new technology; it represents a fundamental shift in operational philosophy. Transitioning from reactive or time-based preventive maintenance to a predictive approach requires a change in mindset, moving from managing failures after they occur to proactively managing asset health based on continuous data streams.2 This often necessitates adjustments in maintenance workflows, requires new skill sets for data interpretation and system management, and fosters closer integration between operational technology (OT) and information technology (IT) teams.2

Furthermore, the value proposition of PdM extends beyond immediate cost savings. While reducing maintenance expenditures and downtime is a primary driver 8, the resulting improvements in equipment reliability 4, product quality 9, and operational consistency provide significant strategic advantages. Enhanced reliability can improve customer satisfaction, for example, by ensuring fewer service disruptions in utilities or telecommunications.2 In some cases, the ability to guarantee uptime, backed by robust PdM, can even enable new service-based business models for equipment manufacturers.14

2. Maintenance Strategies: A Comparative Overview

Understanding the value of predictive maintenance requires comparing it with other common maintenance strategies. Each approach has its place, but they differ significantly in methodology, cost implications, and impact on operational reliability.

2.1 Reactive Maintenance: The “Run-to-Failure” Approach

Reactive maintenance, also known as corrective or breakdown maintenance, involves repairing equipment only after it has failed or malfunctioned.12 It is the simplest strategy, requiring minimal upfront planning or investment.13 The primary perceived benefit is maximizing the utilization of an asset’s lifespan by running it until it breaks.16

However, this approach carries significant drawbacks, especially for critical equipment. Unplanned downtime is a major consequence, disrupting production schedules and potentially causing cascading failures that damage other components.15 Emergency repairs are often more expensive than planned maintenance due to urgency, overtime labor, and expedited parts shipping.12 Reactive maintenance can also pose safety risks if equipment fails unexpectedly.13 Furthermore, it often leads to treating only the symptoms of a problem (e.g., repeatedly fixing an overheated bearing) rather than addressing the root cause, resulting in recurring issues and “band-aid” fixes.16 Consequently, while upfront costs are low, the long-term costs associated with downtime, repairs, and potential secondary damage can be very high.13 This strategy is generally only suitable for non-critical, low-cost, or easily replaceable assets where failure has minimal operational impact.13

2.2 Preventive Maintenance: Scheduled Interventions

Preventive maintenance (PM) takes a more proactive stance, aiming to prevent failures before they occur by performing maintenance tasks at predetermined intervals.3 These intervals can be based on time (e.g., monthly checks) or usage (e.g., after a certain number of operating hours or cycles).12 PM is generally considered superior to a purely reactive strategy because it reduces the likelihood of unexpected breakdowns and allows for maintenance activities to be planned and scheduled.15

Despite its advantages over reactive maintenance, PM has limitations. Its core drawback is that maintenance is performed based on averages or manufacturer recommendations, not the actual condition of the specific piece of equipment.5 This can lead to two types of inefficiency:

- Over-maintenance: Replacing components or performing service that is not yet needed, wasting resources and potentially introducing risks associated with unnecessary intervention.16 It’s estimated that a significant portion of scheduled maintenance might be futile.9

- Under-maintenance: Equipment might still fail between scheduled PM intervals if its condition degrades faster than anticipated.

Preventive maintenance still requires scheduled downtime for inspections and service 19, and it provides limited insight into the real-time health of the machinery.9

2.3 Predictive Maintenance: Data-Driven Foresight

Predictive Maintenance (PdM) represents the most advanced of these common strategies. It leverages continuous monitoring through IoT sensors and applies data analytics, particularly AI/ML, to predict when maintenance will be required based on the asset’s real-time condition.2 The focus shifts entirely from fixed schedules to condition-based interventions, performing maintenance precisely when needed – before failure occurs but as close to the end of optimal component life as possible.4

The benefits of PdM are substantial. It optimizes maintenance timing, drastically reducing unplanned downtime and associated costs.8 By intervening only when necessary, it avoids the waste inherent in preventive maintenance and maximizes the useful life of components.8 Studies and reports suggest significant cost savings compared to other strategies, potentially 8-12% over preventive and up to 40% over reactive approaches 17, with other sources citing ranges like 10-40% overall cost reduction.13 PdM also enhances safety by catching potential failures early 10 and provides deep insights into equipment health and performance trends.2

However, implementing PdM involves challenges. It requires a higher initial investment in sensors, data acquisition systems, analytics software, and potentially network infrastructure upgrades.2 Effective PdM relies on substantial amounts of high-quality historical and real-time data.2 Furthermore, it demands specialized skills in data science, ML model development, and system integration and management.2 The complexity of setting up and integrating a PdM system can also be a barrier.2

2.4 Table 1: Comparison of Maintenance Strategies

| Feature | Reactive Maintenance | Preventive Maintenance | Predictive Maintenance |
|---|---|---|---|
| Approach Basis | Equipment Failure | Predetermined Schedule | Real-time Asset Condition & Data Analysis |
| Timing | After Failure Occurs | Fixed Intervals | Just-in-Time (Based on Prediction) |
| Cost Profile | Low Upfront, High & Unpredictable Repair | Moderate Upfront & Ongoing, Potential Waste | High Initial Investment, Optimized Long-Term |
| Key Pros | Minimal Planning, Max Initial Use | Reduces Unexpected Failures, Allows Planning | Minimizes Downtime, Optimizes Resources, Max Asset Life |
| Key Cons | High Downtime, High Repair Costs, Safety Risks, Unpredictable | Unnecessary Maintenance, Potential Failures Between Intervals | High Initial Cost & Complexity, Data Requirements, Needs Expertise |
| Best Suited For | Non-critical, Low-cost Assets | Moderate Criticality Assets, Compliance Req. | Critical Assets, High Downtime Cost Scenarios |

This comparison highlights that predictive maintenance is not merely an incremental improvement over preventive maintenance; it represents a paradigm shift. While both aim to be proactive 12, preventive maintenance operates on assumptions and statistical averages 5, often leading to suboptimal timing.9 Predictive maintenance, enabled by IoT and AI, bases decisions on the actual, continuously monitored condition of the specific asset.2 This move from generalized schedules to individualized, data-driven health assessment is the fundamental difference.

The decision between these strategies is ultimately an economic and risk-management choice, tailored to the specific operational context.3 Reactive maintenance might be acceptable for easily replaceable, non-critical components.13 Preventive maintenance often strikes a balance for assets where condition monitoring is difficult or cost-prohibitive. Predictive maintenance delivers the highest potential return on investment for critical, high-value assets where failure leads to significant financial losses or safety risks 3, justifying its higher initial investment and complexity.2 Often, the most effective overall maintenance program involves a hybrid approach, applying the most appropriate strategy to each asset based on its criticality and failure characteristics.13

3. Edge Computing: Enabling Real-Time Predictive Insights

While the concept of predictive maintenance is powerful, its practical implementation, especially at scale, faces challenges related to data volume, processing speed, and network limitations. This is where edge computing emerges as a critical enabling technology.

3.1 Understanding Edge Computing Architecture

Edge computing is a distributed computing paradigm that shifts computation and data storage closer to the sources of data generation – the “edge” of the network.20 Instead of sending vast amounts of raw data from sensors and devices across a network to a centralized data center or cloud for processing, edge computing performs analysis locally, on or near the device itself (e.g., on a smart sensor, a microcontroller, an industrial gateway, or a local edge server).14

flowchart LR

%% Equipment Section

EQUIP["<b>Equipment</b><br>────────────<br><i>• Motor<br>• Turbine<br>• Other Machinery</i>"]

TEMP["<b>Temp Sensor</b>"]

VIB["<b>Vibration Sensor</b>"]

EQUIP --> TEMP

EQUIP --> VIB

%% Edge Computing Section

EDGE["<b>Edge Computing</b><br>────────────<br><i>• Real-time Processing<br>• AI Inference<br>• Local Alerts</i>"]

TEMP --> EDGE

VIB --> EDGE

%% Cloud Computing Section

CLOUD["<b>Cloud Computing</b><br>────────────<br><i>• Data Storage<br>• Model Training<br>• Dashboards<br>• Deep Analytics</i>"]

EDGE -->|<b>Insights</b>| CLOUD

CLOUD -.->|<i>Model Updates</i>| EDGE

%% Styling

classDef equipment fill:#d97c5d,stroke:#333,color:#fff,font-weight:bold;

classDef sensor fill:#e1e1e1,stroke:#aaa,color:#000;

classDef edge fill:#4790cd,stroke:#2D4159,color:#fff;

classDef cloud fill:#f0f0f0,stroke:#ccc,color:#000;

classDef arrow stroke:#2D4159,stroke-width:2px;

class EQUIP equipment;

class TEMP,VIB sensor;

class EDGE edge;

class CLOUD cloud;

This local-first architecture contrasts sharply with traditional cloud computing models, where raw sensor data is typically ingested into the cloud, processed, and analyzed before results or commands are sent back to the local environment.20 While the cloud offers immense storage and processing power, transmitting large volumes of high-frequency sensor data (common in PdM applications like vibration analysis) can introduce significant latency, consume substantial network bandwidth, and incur high costs.25

3.2 The Synergy between Edge Computing and PdM

Edge computing and predictive maintenance form a natural synergy. The effectiveness of PdM often hinges on the ability to monitor equipment and analyze data in real-time or near real-time to detect transient anomalies or make immediate control decisions.22 Waiting for data to travel to the cloud and back can be too slow for applications requiring rapid response, such as shutting down a machine to prevent catastrophic failure.30

Furthermore, industrial equipment monitored for PdM can generate enormous volumes of data (e.g., high-frequency vibration or acoustic data). Continuously streaming all this raw data to the cloud is often impractical due to bandwidth limitations or prohibitive costs, especially in environments with limited or expensive connectivity (e.g., remote sites, mobile assets).20

Edge computing addresses these issues by enabling data processing tasks – such as filtering, aggregation, feature extraction, anomaly detection, and even AI model inference – to occur locally at the edge.26 Only the processed results, meaningful insights, or critical alerts need to be transmitted to the cloud or central systems, dramatically reducing data volume and network traffic.20
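The bandwidth argument above can be made concrete with a back-of-the-envelope calculation. The sketch below is illustrative only: the sampling rate, axis count, sample width, and summary size are all assumed values, not figures from any specific deployment.

```python
# Back-of-the-envelope comparison; every constant is an illustrative assumption.
SAMPLE_RATE_HZ = 10_000      # assumed vibration sampling rate per axis
AXES = 3                     # assumed tri-axial accelerometer
BYTES_PER_SAMPLE = 2         # assumed 16-bit readings
SUMMARY_BYTES_PER_SEC = 64   # assumed size of one feature summary per second
SECONDS_PER_DAY = 24 * 60 * 60


def bytes_per_day(bytes_per_second: float) -> float:
    """Convert a steady data rate into a daily volume."""
    return bytes_per_second * SECONDS_PER_DAY


raw_rate = SAMPLE_RATE_HZ * AXES * BYTES_PER_SAMPLE   # bytes per second
raw_daily = bytes_per_day(raw_rate)
summary_daily = bytes_per_day(SUMMARY_BYTES_PER_SEC)
reduction = 1 - summary_daily / raw_daily

print(f"raw stream:     {raw_daily / 1e9:.2f} GB/day")
print(f"edge summaries: {summary_daily / 1e6:.2f} MB/day")
print(f"reduction:      {reduction:.2%}")
```

Under these assumptions a single sensor produces roughly 5 GB of raw data per day, while per-second summaries amount to a few megabytes. Real ratios depend heavily on the sampling rate and on how much detail the summaries retain.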

3.3 Key Advantages of Edge Computing for PdM

Deploying PdM logic at the edge offers several compelling advantages:

- Real-Time Processing & Reduced Latency: By processing data locally, edge computing minimizes the delay between data acquisition and insight generation, enabling responses in milliseconds.20 This is crucial for time-sensitive PdM tasks and allows for immediate actions based on equipment conditions.27

- Bandwidth Savings & Reduced Cost: Processing data locally significantly reduces the amount of data that needs to be transmitted over the network to the cloud.20 This saves on bandwidth costs, alleviates network congestion, and makes PdM feasible in locations with poor or expensive connectivity.25

- Enhanced Privacy & Security: Sensitive operational data can be analyzed and potentially anonymized or aggregated at the edge before being transmitted.20 Keeping data local minimizes exposure during transit and reduces reliance on potentially vulnerable cloud connections, aiding compliance with data privacy regulations like GDPR or data sovereignty rules.25

- Improved Reliability & Offline Operation: Edge devices can often continue performing critical monitoring and analysis functions even if the connection to the cloud is temporarily lost or intermittent.20 This resilience is vital for mission-critical industrial processes where continuous operation is paramount.29
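The offline-operation point can be illustrated with a store-and-forward buffer: the device keeps analyzing locally and queues its outgoing messages until the uplink returns. This is a minimal sketch; `StoreAndForward`, `fake_send`, and the message fields are hypothetical names invented for the example, and a production system would typically persist the queue to flash rather than hold it in memory.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffers outgoing messages while the uplink is down (hypothetical helper)."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000):
        self._send = send  # must return True when the upload succeeded
        # Bounded queue: when full, the oldest message is dropped.
        self._pending: Deque[dict] = deque(maxlen=max_buffered)

    def publish(self, message: dict) -> None:
        """Queue the message, then try to drain the queue in order."""
        self._pending.append(message)
        self.flush()

    def flush(self) -> int:
        """Send queued messages oldest-first; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # uplink still down; keep the message for later
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def backlog(self) -> int:
        return len(self._pending)


# Simulated uplink for demonstration purposes only.
link = {"up": False}
delivered = []


def fake_send(message: dict) -> bool:
    if link["up"]:
        delivered.append(message)
    return link["up"]


outbox = StoreAndForward(fake_send, max_buffered=3)
outbox.publish({"seq": 1, "alert": "vibration_high"})
outbox.publish({"seq": 2, "alert": "temp_drift"})
backlog_while_offline = outbox.backlog

link["up"] = True  # connectivity restored
outbox.flush()
print(backlog_while_offline, [m["seq"] for m in delivered])
```

The bounded queue is a deliberate design choice for memory-constrained hardware: during a long outage it trades the oldest messages for a fixed memory footprint.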

It is important to recognize that edge computing typically complements, rather than replaces, cloud computing in PdM architectures. A hybrid approach is often optimal.14 The edge excels at real-time processing, immediate anomaly detection, data filtering, and local control actions.26 The cloud remains indispensable for aggregating data from multiple assets or sites, performing computationally intensive AI model training on large datasets, long-term data archiving, fleet-wide analytics, and providing centralized dashboards and management tools.2 Major cloud providers like AWS, Microsoft Azure, and IBM offer integrated IoT platforms that facilitate this hybrid cloud-edge model, providing services for device management, data ingestion, edge runtime deployment, and cloud-based analytics.25

| Feature | Edge Processing | Cloud Processing | Hybrid Approach |
|---|---|---|---|
| Latency | Very Low: Millisecond response time suitable for real-time monitoring and critical alerts | High: Seconds to minutes depending on connectivity, unsuitable for time-critical applications | Critical alerts handled at edge, deeper analysis in cloud |
| Network Bandwidth | Low Usage: Only transmits insights or anomalies, reducing bandwidth by 90%+ | High Usage: Requires continuous transmission of raw sensor data (can be GBs per day per device) | Filtered and pre-processed data sent to cloud, significantly reducing bandwidth |
| Processing Power | Limited: Constrained by edge hardware capabilities (CPU, RAM, power) | Virtually Unlimited: Scalable resources for complex analysis and large datasets | Simple models at edge, complex training and analytics in cloud |
| Operational Reliability | High: Continues functioning during network outages or disconnections | Dependent: Requires stable network connection; vulnerable to service disruptions | Critical functions remain operational during outages, with syncing when connection restores |
| Security & Privacy | Enhanced: Sensitive data stays local; reduced attack surface and transmission vulnerabilities | Challenging: Data in transit and at rest must be secured; compliance issues with data leaving premises | Sensitive data processed locally, anonymized or aggregated data sent to cloud |
| Model Training | Limited: Resource constraints make complex model training difficult | Excellent: Ideal for training large models on historical data from multiple sources | Training in cloud, deployment of optimized models to edge |
| Scalability | Hardware-Dependent: Requires physical deployment and maintenance of edge devices | Highly Scalable: Can easily scale processing resources as needs grow | Leverages benefits of both approaches for optimal scalability |
| Fleet-wide Analysis | Challenging: Limited capacity to analyze patterns across multiple assets | Excellent: Ideal for analyzing patterns across entire fleet of equipment | Local insights combined with global pattern recognition |
| Cost Structure | Higher Upfront: Higher initial hardware investment, lower ongoing costs | Usage-Based: Lower initial investment, ongoing costs scale with usage | Balanced investment with optimized ongoing operational costs |
| Ideal For | Time-critical applications, bandwidth-constrained environments, remote locations, privacy-sensitive operations | Complex analytics, historical trend analysis, fleet-wide optimization, model training | Most production PdM systems, balancing real-time alerts with deep insights |

The specific motivation for adopting edge computing in a PdM scenario can also vary. While low latency is a frequently cited benefit 20, some large-scale enterprise deployments prioritize overcoming network bandwidth limitations or ensuring operational continuity during cloud connectivity outages above all else.29 Understanding the primary bottleneck – whether it’s latency, bandwidth, cost, reliability, or security – is crucial for designing an effective edge architecture tailored to the specific PdM application.

4. AI/ML at the Edge: The Intelligence Behind PdM

While edge computing provides the necessary infrastructure for local processing, Artificial Intelligence (AI) and Machine Learning (ML) supply the intelligence needed to transform raw sensor data into meaningful predictive insights. Running AI/ML models directly on edge devices – a concept known as Edge AI – is revolutionizing predictive maintenance.

4.1 Leveraging AI/ML for Sensor Data Analysis

Industrial equipment generates complex streams of sensor data (e.g., vibration patterns, temperature fluctuations, acoustic signatures, electrical current variations).3 Simply monitoring these values against static thresholds is often insufficient to detect the subtle, early signs of impending failure. Failures often manifest as complex patterns or gradual drifts hidden within noisy data.2

AI/ML algorithms excel at learning these intricate patterns from historical operational data and identifying deviations in real-time sensor streams that signal potential problems.9 By training models on data representing both healthy and faulty equipment states, AI can learn to recognize the signatures of developing issues long before they become critical.

Edge AI specifically refers to the deployment and execution of these trained AI/ML models directly on edge hardware, such as microcontrollers, gateways, or dedicated AI accelerators, located close to the data source.26 This allows the intelligent analysis to happen locally, enabling the real-time decision-making benefits offered by edge computing.

4.2 Core Tasks Enabled by Edge AI in PdM

Edge AI empowers several key functionalities crucial for effective predictive maintenance:

- Anomaly Detection: This is often the first line of defense. AI models analyze incoming sensor data streams and identify data points or sequences that deviate significantly from the established “normal” operating baseline learned during training.14 These anomalies can be early indicators of wear, malfunction, or changing operating conditions requiring attention.

- Trend Analysis: AI models can identify gradual drifts or changing trends in sensor readings over time. For instance, a slow increase in motor temperature or vibration levels might not trigger an immediate threshold alert but could indicate progressive degradation that an AI model can flag for future investigation.

- Failure Signature Identification: More sophisticated models can be trained to recognize specific patterns or “signatures” in the sensor data that are known to correlate with particular failure modes (e.g., the distinct vibration frequencies associated with inner race vs. outer race bearing faults).
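As a rough illustration of the anomaly detection task listed above, the sketch below flags readings that fall far from a rolling baseline. It uses a simple rolling z-score as a stand-in for a trained model; the class name, window size, threshold, and simulated readings are all assumptions chosen for the example.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Flags readings that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 50, threshold: float = 3.0, warmup: int = 10):
        self.values = deque(maxlen=window)  # recent "normal" readings
        self.threshold = threshold          # z-score above which we flag
        self.warmup = warmup                # readings needed before flagging

    def update(self, x: float) -> bool:
        """Return True if x is anomalous relative to the current baseline."""
        is_anomaly = False
        if len(self.values) >= self.warmup:
            mean = sum(self.values) / len(self.values)
            var = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = math.sqrt(var)
            if std > 0 and abs(x - mean) / std > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            self.values.append(x)  # only normal readings update the baseline
        return is_anomaly


# Simulated temperature readings: small oscillation, then one sudden spike.
readings = [20.0 + 0.1 * math.sin(i) for i in range(60)] + [25.0]
detector = RollingZScoreDetector()
flags = [detector.update(r) for r in readings]
print("anomalies at indices:", [i for i, f in enumerate(flags) if f])
```

Excluding flagged readings from the baseline keeps a single spike from inflating the variance, but it also means a genuine shift in operating conditions will keep being flagged until the baseline is deliberately reset.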

4.3 Advanced Applications

Beyond basic detection, Edge AI enables more advanced predictive capabilities:

- Remaining Useful Life (RUL) Estimation: This involves predicting the amount of time (or operating cycles) left before a component or machine is likely to fail, given its current condition and historical usage patterns.4 RUL estimation typically requires more complex time-series forecasting models (e.g., regression models, recurrent neural networks) and provides valuable information for optimizing maintenance scheduling and inventory management.

- Fault Classification: Once an anomaly is detected, classification models can attempt to identify the specific type of fault that is occurring (e.g., distinguishing between bearing wear, shaft misalignment, or electrical issues in a motor). This helps maintenance teams diagnose the problem more quickly and bring the correct tools and parts for repair.

- Prescriptive Analytics: Representing the next frontier, prescriptive analytics aims to move beyond predicting failure to actively recommending the optimal course of action.36 This could involve suggesting specific maintenance procedures, adjusting operating parameters to mitigate stress on a component, or optimizing the timing of an intervention based on predicted severity and operational impact.
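A very simple form of RUL estimation fits a straight line to a degradation indicator and extrapolates to a failure threshold. The sketch below assumes linear degradation, which real equipment often violates; the function names, the vibration values, and the threshold of 7.1 are hypothetical and chosen only to make the arithmetic easy to follow.

```python
from typing import Optional, Sequence, Tuple


def fit_line(times: Sequence[float], values: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of values = slope * time + intercept."""
    n = len(times)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t


def estimate_rul(times: Sequence[float], values: Sequence[float],
                 failure_threshold: float) -> Optional[float]:
    """Time until the fitted trend reaches the threshold; None if not rising."""
    slope, intercept = fit_line(times, values)
    if slope <= 0:
        return None  # no upward degradation trend to extrapolate
    t_fail = (failure_threshold - intercept) / slope
    return max(0.0, t_fail - times[-1])


# Hypothetical vibration RMS readings (mm/s) logged every 100 operating hours.
hours = [0, 100, 200, 300, 400]
rms = [2.0, 2.5, 3.0, 3.5, 4.0]
rul = estimate_rul(hours, rms, failure_threshold=7.1)
print(f"estimated RUL: {rul:.0f} operating hours")
```

With this data the fitted slope is 0.005 mm/s per hour, so the trend reaches the threshold about 620 hours after the last reading. The recurrent and regression models mentioned above replace the straight line with something that can capture non-linear wear.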

By executing these AI-driven tasks locally, Edge AI transforms the edge device from a simple data collector into an intelligent node capable of autonomous monitoring and assessment. Simple threshold-based alerting on raw sensor data often fails to capture the complexity of real-world equipment behavior. AI/ML models running at the edge 26 can perform sophisticated feature extraction (e.g., analyzing frequency spectra from vibration data), recognize complex temporal patterns, and make inferences directly on the device.4 This local intelligence is fundamental to achieving the low-latency anomaly detection or RUL estimation 4 promised by edge computing, without the need to constantly stream massive raw datasets to the cloud.22

The sophistication of the AI model deployed at the edge is, however, directly tied to the specific PdM objective and the capabilities of the edge hardware. Basic anomaly detection might be achievable with lightweight statistical methods or simpler ML models that can run on resource-constrained microcontrollers. In contrast, accurate RUL estimation 4 or fine-grained fault classification using techniques like deep learning (e.g., LSTMs for time series, CNNs for image-like representations of sensor data) often demands significantly more computational power and memory.28 This creates a crucial design trade-off between the desired level of predictive insight and the constraints imposed by the edge platform’s cost, power consumption, and processing capabilities.

5. Implementing Edge AI for Predictive Maintenance: The Workflow

Successfully deploying an Edge AI solution for predictive maintenance involves a systematic workflow, typically encompassing data collection, local preprocessing, model training, and edge inference. While specifics vary, the general steps are as follows:

5.1 Step 1: Data Collection from Sensors

The foundation of any PdM system is reliable data. This step involves:

- Identifying Key Parameters: Determining which physical parameters are most indicative of the health and potential failure modes of the target equipment. This requires domain knowledge about the machinery. Common parameters include vibration, temperature, pressure, acoustic emissions, electrical current/voltage, oil debris, and humidity.3

- Selecting Sensors: Choosing appropriate sensors based on the required accuracy, measurement range, sampling frequency, environmental robustness (temperature, moisture, vibration tolerance), cost, and ease of integration.3 Sensor placement is critical to ensure meaningful data capture.

- Interfacing with Edge Device: Connecting the selected sensors to the edge computing device (e.g., microcontroller, single-board computer, or gateway). This involves using appropriate hardware interfaces like I2C, SPI, UART, Analog-to-Digital Converters (ADCs), Modbus, CAN bus, or industrial protocols like OPC-UA.

5.2 Step 2: Edge-Based Data Preprocessing

Raw sensor data is often noisy, contains irrelevant information, or is not in the optimal format for AI/ML models. Preprocessing is therefore essential, and performing it at the edge offers significant advantages:

- Purpose: To clean the data, reduce its dimensionality, extract meaningful features, and prepare it for efficient model inference, all while minimizing the amount of data that needs to be stored or transmitted.30

- Common Edge Preprocessing Tasks:

  - Filtering: Applying digital filters (e.g., low-pass, high-pass, band-pass) to remove noise or isolate specific frequency bands of interest.

  - Normalization/Scaling: Adjusting data values to a common scale (e.g., the range 0 to 1, or zero mean and unit variance) to improve model training and performance.

  - Feature Extraction: Calculating higher-level features from raw data. For example, from raw vibration time-series data, features like Root Mean Square (RMS), peak-to-peak amplitude, kurtosis, skewness, or spectral features derived from a Fast Fourier Transform (FFT) can be computed. This step often dramatically reduces data volume while retaining predictive information.

  - Segmentation/Windowing: Dividing continuous time-series data into fixed-size windows or segments, which are then fed into the model one by one.
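The windowing and feature extraction steps above can be sketched in a few lines of standard-library Python. This is an illustration under assumptions: the function names, window size, and the synthetic 50 Hz test signal are invented for the example, and the naive O(n²) DFT stands in for the FFT routine an actual device would use.

```python
import math
from typing import Dict, Iterator, Sequence


def windows(signal: Sequence[float], size: int, step: int) -> Iterator[Sequence[float]]:
    """Yield fixed-size windows; a step smaller than size gives overlap."""
    for start in range(0, len(signal) - size + 1, step):
        yield signal[start:start + size]


def extract_features(window: Sequence[float], sample_rate_hz: float) -> Dict[str, float]:
    """Reduce one window of raw samples to a handful of summary features."""
    n = len(window)
    mean = sum(window) / n
    centered = [x - mean for x in window]
    m2 = sum(c ** 2 for c in centered) / n  # variance
    m4 = sum(c ** 4 for c in centered) / n  # fourth central moment
    # Naive DFT magnitude search over bins below Nyquist (skipping DC).
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):
        re = sum(c * math.cos(2 * math.pi * k * i / n) for i, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * k * i / n) for i, c in enumerate(centered))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_bin, best_mag = k, mag
    return {
        "rms": math.sqrt(sum(x * x for x in window) / n),
        "peak_to_peak": max(window) - min(window),
        "kurtosis": m4 / (m2 ** 2) if m2 > 0 else 0.0,
        "dominant_hz": best_bin * sample_rate_hz / n,
    }


# Synthetic test signal: a 50 Hz sine sampled at an assumed 1 kHz.
SAMPLE_RATE_HZ = 1000.0
signal = [math.sin(2 * math.pi * 50 * i / SAMPLE_RATE_HZ) for i in range(400)]
features = [extract_features(w, SAMPLE_RATE_HZ) for w in windows(signal, 200, 200)]
print(features[0])
```

Each 200-sample window collapses to four numbers, which is the data reduction the Feature Extraction item describes. For a pure sine the RMS is close to 0.707 and the kurtosis close to 1.5; impulsive faults such as bearing defects tend to push kurtosis higher.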

5.3 Step 3: Model Training Strategies

Once data is collected and preprocessing steps are defined, the AI/ML model needs to be trained. The training process typically occurs in one of two ways:

- Cloud-Based Training (Most Common): This is the standard approach for complex models or large datasets. Preprocessed data (or sometimes raw data, if edge preprocessing is minimal) is collected from edge devices and aggregated in a cloud environment. Powerful cloud computing resources (on platforms such as Amazon SageMaker 34, Azure Machine Learning 33, or Google Cloud's Vertex AI, the successor to AI Platform) are used to train sophisticated ML models. Once trained, the model is optimized for edge deployment.

- Edge-Based Training/Fine-tuning: For simpler models, scenarios requiring rapid adaptation to local conditions, or applications where data privacy restricts sending raw data to the cloud, some level of training or model fine-tuning might occur directly on the edge device (typically a more powerful gateway or edge server, rather than a microcontroller). However, this is often limited by the computational and memory constraints of edge hardware.28 Techniques like Transfer Learning (adapting a pre-trained cloud model with local data) or Federated Learning (collaborative training without sharing raw data, see Section 8) are emerging to make edge-based learning more practical.

5.4 Step 4: Deploying Models for Edge Inference

After training, the model must be deployed onto the edge device to perform real-time predictions (inference):

flowchart LR

%% Original Model

ORIG["<b>ORIGINAL MODEL</b><br>Cloud-trained<br>High Accuracy<br><i>Size: 100MB+</i>"]

class ORIG orig;

%% Optimization Techniques

QUANT["<b>QUANTIZATION</b><br>Reduce Precision<br>FP32 → INT8/FP16<br><i>~75% smaller</i>"]

PRUNE["<b>PRUNING</b><br>Remove Redundant<br>Weights/Neurons<br><i>~60% fewer params</i>"]

ARCH["<b>MODEL ARCHITECTURE</b><br>Use MobileNet, etc.<br><i>~7× smaller</i>"]

class QUANT,PRUNE,ARCH step;

%% Optimized Model

OPT["<b>EDGE-OPTIMIZED MODEL</b><br>Deployable on edge<br>Minimal Accuracy Loss<br><i>Size: 0.5–5MB</i>"]

class OPT optimized;

%% Flow arrows

ORIG --> QUANT

ORIG --> PRUNE

ORIG --> ARCH

QUANT --> OPT

PRUNE --> OPT

ARCH --> OPT

%% Optional: Size Comparison (visual metaphor)

SIZEBAR["<b>Size Comparison</b><br>⬛⬛⬛⬛⬛⬛⬛⬛⬛⬛<br> Original (100MB+)<br>⬛<br> Optimized (<5MB)<br><i>~95% reduction</i>"]

OPT --> SIZEBAR

%% Styling

classDef orig fill:#d97c5d,stroke:#333,color:#fff;

classDef step fill:#2D4159,stroke:#333,color:#fff;

classDef optimized fill:#4790cd,stroke:#333,color:#fff;

classDef textblock fill:#f9f9f9,stroke:#e0e0e0,color:#333;

class SIZEBAR textblock;

- Model Optimization: Trained models, especially deep learning models, are often too large and computationally intensive for resource-constrained edge devices. Optimization techniques are crucial:

- Quantization: Reducing the precision of model weights and activations (e.g., from 32-bit floats to 8-bit integers). This cuts weight storage roughly fourfold and speeds up computation on hardware with efficient integer arithmetic, usually with only a small loss of accuracy.

- Pruning: Removing redundant or unimportant connections or parameters within the model to reduce complexity.

- Model Architecture Selection: Choosing inherently efficient model architectures designed for edge deployment (e.g., MobileNet). Frameworks like TensorFlow Lite provide tools that support the quantization and pruning steps described above.

- Deployment: The optimized model file is transferred and stored on the edge device’s non-volatile memory (e.g., flash memory).

- Inference Execution: An edge-optimized inference engine or runtime (e.g., TensorFlow Lite Interpreter, ONNX Runtime) is used on the edge device. This software loads the optimized model and efficiently executes it on the incoming, preprocessed sensor data.

- Actionable Output: The model produces predictions (e.g., an anomaly score, a probability of failure, an estimated RUL, or a classified fault type). These outputs can then be used locally to trigger alerts, log events, adjust control parameters, or send condensed messages to a central monitoring system or cloud backend.26 Only these high-level results, rather than raw data, typically leave the edge device.
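The quantization and pruning steps described above can be illustrated with a minimal pure-Python sketch. It is not how TensorFlow Lite or any other framework implements them: the function names (`quantize_int8`, `dequantize`, `prune_by_magnitude`) and the toy weight list are assumptions made for illustration, and the sketch uses simple symmetric per-tensor quantization and one-shot magnitude pruning.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to the int8 range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate float weights."""
    return [v * scale for v in q]


def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (ties may prune more)."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= threshold else w for w in weights]


# Toy example (hypothetical weights, not taken from a real model).
weights = [0.8, -0.05, 0.3, -1.2, 0.01, 0.6, -0.4, 0.09]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Storage: 4 bytes per FP32 weight versus 1 byte per INT8 weight.
fp32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights)
size_reduction = 1 - int8_bytes / fp32_bytes  # 0.75, i.e. 75% smaller

pruned = prune_by_magnitude(weights, sparsity=0.5)
```

The 75% figure follows directly from the byte widths. The accuracy cost depends on the model and data, so a real deployment would measure it on a validation set after each optimization step.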

5.5 Diagram 1: Edge AI PdM Workflow

flowchart TD

subgraph Equipment

A[Industrial Equipment]

end

subgraph Sensors

S1[Temperature Sensor]

S2[Vibration Sensor]

S3[Pressure Sensor]

S4[Acoustic Sensor]

end

subgraph "Edge Device"

DA[Data Acquisition]

DP[Data Preprocessing]

FE[Feature Extraction]

AI[AI Model Inference]

LA[Local Actions/Alerts]

end

subgraph "Cloud System"

CS[Data Storage]

MT[Model Training]

DA2[Deep Analytics]

DB[Dashboards/CMMS]

end

%% Equipment to Sensors connections

A --> S1

A --> S2

A --> S3

A --> S4

%% Sensors to Edge Device

S1 --> DA

S2 --> DA

S3 --> DA

S4 --> DA

%% Edge Device internal flow

DA --> DP

DP --> FE

FE --> AI

AI --> LA

%% Edge to Cloud

LA -- "Alerts/Summary Data" --> CS

FE -- "Filtered Data" --> CS

%% Cloud internal flow

CS --> MT

CS --> DA2

MT --> DA2

DA2 --> DB

%% Cloud back to Edge

MT -- "Optimized Models" --> AI

%% Styling

classDef equipment fill:#d97c5d,stroke:#333,stroke-width:1px;

classDef sensors fill:#e1e1e1,stroke:#aaa,stroke-width:1px;

classDef edge fill:#4790cd,color:white,stroke:#2D4159,stroke-width:1px;

classDef cloud fill:#f0f0f0,stroke:#ccc,stroke-width:1px;

class A equipment;

class S1,S2,S3,S4 sensors;

class DA,DP,FE,AI,LA edge;

class CS,MT,DA2,DB cloud;

This workflow highlights the critical role of preprocessing at the edge. Performing tasks like feature extraction and noise reduction locally not only makes the data suitable for the AI model but is also a key enabler for efficiency.30 It significantly reduces the computational load on the inference engine and minimizes the data volume that might need to be transmitted, making complex AI feasible on constrained devices.
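A rough back-of-the-envelope calculation shows how much edge feature extraction can reduce transmitted data. All numbers here are assumptions chosen for illustration (a 3-axis accelerometer sampled at 4 kHz with 16-bit samples, and 8 features per 1-second window stored as 32-bit floats); they are not measurements from a specific system.

```python
def raw_bytes_per_second(sample_rate_hz, axes, bytes_per_sample):
    """Data rate if every raw sample were transmitted."""
    return sample_rate_hz * axes * bytes_per_sample


def feature_bytes_per_second(windows_per_second, features_per_window, bytes_per_feature):
    """Data rate if only extracted features were transmitted."""
    return windows_per_second * features_per_window * bytes_per_feature


# Assumed sensor and feature configuration (illustrative only).
raw_rate = raw_bytes_per_second(sample_rate_hz=4000, axes=3, bytes_per_sample=2)
feature_rate = feature_bytes_per_second(
    windows_per_second=1, features_per_window=8, bytes_per_feature=4
)
reduction_factor = raw_rate / feature_rate

print(f"raw: {raw_rate} B/s, features: {feature_rate} B/s, "
      f"reduction: {reduction_factor:.0f}x")
```

Under these assumptions the raw stream is 24,000 bytes per second and the feature stream is 32 bytes per second, a 750-fold reduction. Sending alerts only, rather than features for every window, would reduce traffic further.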

The decision on where to perform model training – primarily in the cloud or incorporating edge-based elements – involves trade-offs. Cloud training leverages virtually unlimited resources, ideal for complex models and large, diverse datasets.33 However, models trained exclusively on aggregated data might lack sensitivity to the unique operating characteristics or environmental factors of a specific machine at a specific site. Edge-based fine-tuning or emerging techniques like federated learning offer pathways to personalize models and adapt them to local nuances without compromising data privacy or requiring massive data uploads, addressing a key challenge in deploying generalized models across heterogeneous edge environments.28

6. Common Techniques, Tools, and Hardware

Implementing Edge AI for predictive maintenance requires selecting appropriate algorithms, software tools, and hardware platforms. The choices depend heavily on the specific application requirements, including the complexity of the analysis, the target device’s constraints, and the development team’s expertise.

6.1 Key AI/ML Techniques for Edge PdM

A variety of AI/ML techniques can be adapted for edge deployment in PdM:

- Anomaly Detection:

- Statistical Methods: Techniques like Z-score or Interquartile Range (IQR) are simple and computationally light, suitable for basic outlier detection.

- Clustering: Algorithms like K-Means or DBSCAN can group normal data points together; points falling outside clusters are considered anomalies.

- Density-Based Methods: Algorithms like Local Outlier Factor (LOF) identify anomalies based on the local density of data points.

- Tree-Based Methods: Isolation Forests are efficient at isolating anomalies by randomly partitioning the data.

- Autoencoders: Neural network architectures trained to reconstruct normal input data; poor reconstruction indicates an anomaly. Can capture complex patterns but are more computationally intensive.

- Regression Models (for RUL Estimation, Trend Prediction):

- Linear Regression: Simple model for predicting continuous values based on linear relationships.

- Support Vector Regression (SVR): Effective for non-linear relationships.

- Gaussian Processes: Probabilistic approach providing uncertainty estimates along with predictions.

- Recurrent Neural Networks (RNNs): Architectures like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs) are well-suited for modeling sequential data and predicting future values in time series, making them popular for RUL estimation.4

- Classification Models (for Fault Type Identification):

- Logistic Regression: Simple binary classification algorithm.

- Support Vector Machines (SVM): Powerful classifiers effective in high-dimensional spaces.

- Decision Trees & Random Forests: Tree-based methods that are interpretable and often perform well. Random Forests combine multiple trees for robustness.

- K-Nearest Neighbors (KNN): Simple instance-based learning algorithm.

- Convolutional Neural Networks (CNNs): Highly effective for analyzing grid-like data, such as spectrograms generated from vibration or acoustic signals, often used for complex fault classification.

| Technique Type | Algorithm | Use Case in PdM | Edge Resource Requirements |
|---|---|---|---|
| Anomaly Detection | Statistical Methods (Z-score, IQR) | Basic outlier detection in sensor readings | Very Low |
| Anomaly Detection | Clustering (K-Means, DBSCAN) | Grouping normal operational patterns | Low |
| Anomaly Detection | Density-Based Methods (LOF) | Detecting local deviations in data density | Medium |
| Anomaly Detection | Isolation Forest | Efficient isolation of anomalies by random partitioning | Medium |
| Anomaly Detection | Autoencoders | Complex pattern recognition in multi-sensor data | High |
| RUL Estimation | Linear Regression | Simple degradation trend prediction | Very Low |
| RUL Estimation | Support Vector Regression (SVR) | Non-linear degradation modeling | Medium |
| RUL Estimation | Gaussian Processes | Probabilistic RUL estimation with uncertainty | Medium-High |
| RUL Estimation | LSTM/RNN Networks | Complex time-series RUL prediction | Very High |
| Fault Classification | Logistic Regression | Simple binary fault detection | Very Low |
| Fault Classification | SVM | Multi-class fault type identification | Medium |
| Fault Classification | Decision Trees & Random Forests | Interpretable fault classification | Medium |
| Fault Classification | KNN | Simple instance-based fault identification | Medium (Memory-intensive) |
| Fault Classification | CNN | Complex fault pattern recognition from spectrograms | Very High |
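The lightest-weight entries in this list, the Z-score and IQR methods, are simple enough to sketch using only the Python standard library. The function names and the temperature readings below are hypothetical. The thresholds (3 standard deviations, 1.5 × IQR) are common defaults rather than values prescribed by any standard, and would need tuning per asset.

```python
import statistics


def zscore_outliers(baseline, readings, threshold=3.0):
    """Flag readings more than `threshold` standard deviations from the baseline mean."""
    mean = statistics.fmean(baseline)
    std = statistics.pstdev(baseline)
    if std == 0:
        # Degenerate baseline: any different value counts as an outlier.
        return [r != mean for r in readings]
    return [abs(r - mean) / std > threshold for r in readings]


def iqr_bounds(baseline, k=1.5):
    """Return (low, high) limits at k * IQR below Q1 and above Q3."""
    q1, _, q3 = statistics.quantiles(baseline, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


# Hypothetical bearing temperatures (deg C) recorded during healthy operation.
baseline = [50.0, 50.5, 49.5, 50.2, 49.8, 50.1, 49.9, 50.3]
new_readings = [50.0, 58.0]

flags = zscore_outliers(baseline, new_readings)
low, high = iqr_bounds(baseline)
```

Both methods need only a few stored statistics at inference time (a mean and standard deviation, or two bounds), which is why they suit very small microcontrollers.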

6.2 Essential Software: Frameworks & Libraries

Specialized software is needed to develop, optimize, and deploy ML models on edge devices:

- Edge AI Frameworks & Runtimes:

- TensorFlow Lite (TFLite): Google’s framework for converting TensorFlow models and running them on mobile, embedded, and IoT devices. It provides a converter with optimization options such as post-training quantization, works with models pruned during training, and includes an efficient interpreter for running models on diverse hardware.

- PyTorch Mobile: Enables deployment of PyTorch models, primarily targeting mobile platforms but adaptable for some edge Linux environments. Uses TorchScript for model serialization.

- Edge Impulse: An end-to-end MLOps platform specifically designed for edge devices, particularly microcontrollers (TinyML). It streamlines data acquisition, labeling, model training, optimization (using TFLite or other backends), and deployment, lowering the barrier to entry.

- Other Options: ONNX Runtime (supports models from various frameworks), Apache TVM (optimizing compiler), NVIDIA TensorRT (for high-performance inference on NVIDIA GPUs).

- General ML Libraries (for Training/Prototyping):

- scikit-learn: A foundational Python library offering a wide array of traditional ML algorithms for classification, regression, clustering, dimensionality reduction, and preprocessing. Models trained with scikit-learn often serve as baselines or can sometimes be converted/re-implemented for edge deployment.

- TensorFlow & PyTorch: The primary deep learning frameworks used for training complex models (CNNs, LSTMs) in the cloud before conversion/optimization for the edge.

6.3 Suitable Hardware Platforms

The hardware choice is critical and depends on the computational demands of the AI model, power budget, cost constraints, and environmental conditions:

- Microcontrollers (MCUs): Ideal for low-power, low-cost applications running simpler ML models (TinyML).

- Examples: ESP32 (popular for its integrated Wi-Fi/Bluetooth), STM32 series (wide range of ARM Cortex-M cores), Nordic nRF series (low-power focus), Raspberry Pi Pico (RP2040).

- Characteristics: Limited RAM (KB range), limited Flash storage (KB to few MB), lower clock speeds. Suitable for keyword spotting, simple anomaly detection, basic sensor fusion.

- Single-Board Computers (SBCs): Offer more processing power and memory than MCUs, typically run Linux, providing greater flexibility.

- Examples: Raspberry Pi (various models like Pi 4, Pi 5), BeagleBone Black, NVIDIA Jetson Nano (includes GPU).

- Characteristics: More RAM (GB range), SD card storage, faster CPUs, networking interfaces. Can run more complex models, handle multiple sensor streams, act as local gateways. Consume more power than MCUs.

- Edge AI Accelerators & Systems-on-Chip (SoCs): Hardware designed specifically to accelerate ML inference, offering high performance per watt.

- Examples: Google Coral Edge TPU (accelerates TFLite models, available as USB/M.2/PCIe modules or on Dev Boards), NVIDIA Jetson family (powerful GPUs for AI, ranging from Jetson Nano to AGX Orin), Intel Movidius VPUs, NXP i.MX application processors with NPU (Neural Processing Unit).

- Characteristics: Dedicated silicon for ML tasks, significantly faster inference than CPU-only execution, often power-efficient for their performance level. Essential for real-time processing of complex models (e.g., computer vision, complex signal processing).

- Industrial Edge Computers/Gateways: Ruggedized, reliable computing platforms built for harsh factory or field environments.14

- Examples: Systems from manufacturers like Premio 26, Moxa 35, Advantech, Siemens, Dell.

- Characteristics: Often based on SBCs or industrial PCs, feature robust enclosures, wide operating temperature ranges, resistance to vibration/shock, diverse industrial I/O (e.g., serial ports, digital I/O, fieldbus interfaces), reliable connectivity options. May integrate AI accelerators. Act as aggregation points and processing hubs on the factory floor.

flowchart TB

%% Hardware Types

MCU["<b>MCUs</b><br>ESP32 / STM32 / Arduino<br><i>Low Cost, Low Power</i><br><br>• RAM: KBs<br>• Storage: Flash<br>• Use: Anomaly Detection<br><br>🔵<br> Performance: Low"]

SBC["<b>SBCs</b><br>Raspberry Pi / BeagleBone<br><i>Linux-based, More Power</i><br><br>• RAM: GBs<br>• Storage: SD/eMMC<br>• Use: Gateway, Models<br><br>🔵🔵🔵<br> Performance: Medium"]

AIHW["<b>AI Accelerators</b><br>NVIDIA Jetson / Coral / IPC<br><i>Dedicated AI Acceleration</i><br><br>• RAM: GBs + VRAM<br>• Storage: SSD/NVMe<br>• Use: Real-time DL<br><br>🔵🔵🔵🔵🔵<br> Performance: High"]

%% Use Case Complexity Boxes

UC1["<b>Basic Monitoring</b><br><i>Simple Thresholds</i><br><br>• Temp/Vibration<br>• Few sensors<br><br><span style='color:#4790cd'>✅ MCU is sufficient</span>"]

UC2["<b>Advanced Analytics</b><br><i>Multiple Sensors & ML</i><br><br>• Pattern Recognition<br>• Sensor Fusion<br><br><span style='color:#4790cd'>✅ SBC is recommended</span>"]

UC3["<b>Deep Learning PdM</b><br><i>Real-time + DL</i><br><br>• Vibration Spectral<br>• RUL with LSTM/CNN<br><br><span style='color:#4790cd'>✅ AI Accelerator required</span>"]

%% Arrows connecting Use Cases to Hardware

UC1 --> MCU

UC2 --> SBC

UC3 --> AIHW

%% Styling

classDef mcu fill:#e1e1e1,stroke:#aaa,color:#000;

classDef sbc fill:#f0f0f0,stroke:#aaa,color:#000;

classDef ai fill:#d5e5f5,stroke:#4790cd,color:#000;

classDef usecase fill:#f9f9f9,stroke:#ccc,color:#333;

class MCU mcu;

class SBC sbc;

class AIHW ai;

class UC1,UC2,UC3 usecase;

6.4 Table 2: Overview of Edge AI Tools, Libraries, and Hardware Platforms

| Category | Examples | Key Characteristics | Typical Use Cases in Edge PdM |
|---|---|---|---|
| Framework/Library | TensorFlow Lite, PyTorch Mobile, Edge Impulse, ONNX Runtime, scikit-learn | Model Optimization, Edge Inference Engine, Development Platform | Deploying/Running Models on Edge, Training Baselines |
| Microcontroller (MCU) | ESP32, STM32 series, Raspberry Pi Pico, Arduino Nano 33 BLE | Low Power, Low Cost, Limited Resources (RAM/Flash) | Simple Anomaly Detection, Basic Sensor Analysis (TinyML) |
| Single-Board Comp. (SBC) | Raspberry Pi 4/5, BeagleBone Black | Linux Capable, More RAM/CPU, Flexible I/O, Moderate Power | Moderate Complexity Models, Gateway Functionality, Prototyping |
| AI Accelerator | Google Coral Edge TPU, NVIDIA Jetson Nano/Orin | High Inference Speed, Power Efficient (for performance) | Complex Models (CNNs, LSTMs), Real-time Vibration/Acoustic Analysis |
| Industrial Gateway | Premio, Moxa, Advantech Industrial PCs | Ruggedized, Wide Temp Range, Industrial I/O, Reliable | Factory Floor Deployment, Data Aggregation, PLC Integration |

The selection process involves navigating critical trade-offs. A simple anomaly detector on an MCU might be the most cost-effective and power-efficient solution for monitoring numerous low-criticality assets. However, predicting the RUL of a mission-critical turbine using complex vibration analysis might necessitate a powerful industrial gateway equipped with an AI accelerator like an NVIDIA Jetson.26 Engineers must meticulously evaluate the ML model’s computational and memory footprint against the target hardware’s capabilities, considering the power budget, environmental conditions, required interfaces, development toolchain support, and overall system cost.28
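The footprint-versus-capability check described above can be sketched as a small helper. Everything here is hypothetical: the `Target` and `ModelFootprint` types, the 50% reserve for firmware and buffers, and the capacity figures are placeholders, not datasheet values for any real device.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    ram_kb: int
    flash_kb: int


@dataclass
class ModelFootprint:
    weights_kb: int  # model file size, stored in flash or on disk
    working_kb: int  # peak working memory for activations during inference


def fits(model, target, reserve=0.5):
    """True if the model fits after reserving a fraction for firmware and buffers."""
    usable_ram = target.ram_kb * (1 - reserve)
    usable_flash = target.flash_kb * (1 - reserve)
    return model.working_kb <= usable_ram and model.weights_kb <= usable_flash


def first_fit(model, targets):
    """Return the first target in list order that can host the model, or None."""
    for target in targets:
        if fits(model, target):
            return target
    return None


# Placeholder capacities, ordered from cheapest to most capable.
targets = [
    Target("small-mcu", ram_kb=256, flash_kb=1024),
    Target("large-mcu", ram_kb=1024, flash_kb=8192),
    Target("sbc", ram_kb=4 * 1024 * 1024, flash_kb=32 * 1024 * 1024),
]

anomaly_model = ModelFootprint(weights_kb=80, working_kb=60)
cnn_model = ModelFootprint(weights_kb=3000, working_kb=900)

print(first_fit(anomaly_model, targets).name)
print(first_fit(cnn_model, targets).name)
```

Memory is only one axis of the decision. A fuller evaluation would also cover inference latency, power draw, environmental ratings, and toolchain support, as noted above.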

Furthermore, the emergence of end-to-end platforms like Edge Impulse signifies a trend towards simplifying the complex workflow of edge AI development. By integrating data acquisition, labeling, training (often using AutoML or pre-built blocks), optimization, and deployment into a unified interface, these platforms aim to make edge AI more accessible, particularly for embedded engineers who may not have deep ML expertise. This helps close the skills gap often encountered when combining traditional embedded systems development with modern AI techniques.2

7. Benefits and Challenges of AI on the Edge

Deploying artificial intelligence directly on edge devices for predictive maintenance offers a compelling set of advantages, but it also introduces unique technical hurdles that must be carefully navigated.

7.1 Maximizing the Advantages

Running AI inference locally at the edge provides several key benefits, directly addressing limitations of purely cloud-based approaches:

- Low Latency: Enables near real-time analysis and response, critical for detecting transient events or triggering immediate protective actions.20

- Bandwidth Efficiency: Dramatically reduces the volume of data transmitted to the cloud, saving costs and alleviating network congestion, especially important with high-frequency sensor data.20

- Enhanced Privacy/Security: Keeps potentially sensitive operational data localized, reducing exposure during transmission and helping comply with data governance policies.20

- Improved Reliability: Allows critical monitoring and decision-making to continue even if network connectivity to the cloud is unstable or unavailable.20

- Operational Cost Savings: Beyond bandwidth savings, faster local decision-making can lead to quicker responses to potential issues, further reducing downtime and optimizing operations.21

7.2 Navigating the Hurdles

Despite the benefits, implementing AI on edge devices presents significant challenges:

- Limited Computational Resources: Edge devices, particularly low-cost MCUs, have inherent constraints on processing power (CPU speed), available RAM for model execution, and flash memory for storing the model and application code.28 This restricts the size and complexity of AI models that can be deployed.

- Model Size and Optimization: State-of-the-art AI models, especially deep neural networks, can be very large. They require aggressive optimization techniques (like quantization and pruning, discussed in Section 5.4) to shrink their footprint and computational requirements to fit within the tight constraints of edge hardware. Achieving significant size reduction without sacrificing too much predictive accuracy is a major engineering challenge.

- Power Consumption: Many edge devices, especially those in remote locations or attached to moving equipment, may be battery-powered or have strict power budgets. Continuously running complex AI inference algorithms can consume significant power. Optimizing both the AI model and the underlying hardware/software for energy efficiency is crucial.

- Data Management and Quality: Ensuring consistent, high-quality data from sensors at the edge is vital for reliable AI predictions. Handling data buffering, local storage limitations, data cleaning, and managing the synchronization of relevant data or model outputs between the edge and the cloud requires careful architectural design.

- Security for Edge AI: While edge processing enhances data transmission security, the edge devices themselves become potential targets. Protecting the device from physical tampering or network intrusion, securing the AI model against theft or modification, encrypting data stored locally, and ensuring secure communication channels are critical security considerations.20

- Scalability and Fleet Management: Deploying, monitoring, diagnosing, and updating AI models across potentially thousands or millions of distributed edge devices is a complex logistical challenge.34 Robust remote device management platforms and Over-The-Air (OTA) update mechanisms are essential for managing edge AI deployments at scale.

- Model Retraining and Updating: AI models can degrade in performance over time, an effect often called model drift. The cause may be “data drift” (changes in the distribution of the input data) or “concept drift” (changes in the underlying relationships the model learned). Equipment behavior might change after maintenance, or new failure modes might emerge. Establishing a reliable workflow for monitoring model performance, retraining models (usually in the cloud with new data), validating them, and securely deploying updates to the entire edge fleet is critical for maintaining the long-term effectiveness of the PdM system.34
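One simple way to watch for shifting input data on the device is to compare a recent window of a feature against statistics recorded at training time. The sketch below is a heuristic under stated assumptions, not a complete drift-detection method: the function names, the threshold of 4 standard errors, and the RMS values are all hypothetical. It detects only shifts in the input distribution; detecting concept drift generally requires labeled outcomes.

```python
import math
import statistics


def mean_shift_score(baseline, recent):
    """Distance between recent and baseline means, in baseline standard errors."""
    mu = statistics.fmean(baseline)
    sigma = statistics.stdev(baseline)
    recent_mean = statistics.fmean(recent)
    if sigma == 0:
        return 0.0 if recent_mean == mu else math.inf
    std_error = sigma / math.sqrt(len(recent))
    return abs(recent_mean - mu) / std_error


def drift_detected(baseline, recent, threshold=4.0):
    """Flag drift when the recent mean moves beyond `threshold` standard errors."""
    return mean_shift_score(baseline, recent) > threshold


# Hypothetical vibration RMS values: training-time baseline versus recent windows.
baseline_rms = [1.0, 1.1, 0.9, 1.05, 0.95, 1.0, 1.02, 0.98]
recent_stable = [1.01, 0.99, 1.03, 0.97]
recent_shifted = [1.4, 1.5, 1.45, 1.55]

print(drift_detected(baseline_rms, recent_stable))
print(drift_detected(baseline_rms, recent_shifted))
```

A flag from a check like this would typically prompt a review or trigger data collection for retraining, rather than an automatic model replacement.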

In essence, the advantages of edge AI directly tackle the inherent physical limitations of latency and bandwidth associated with sending all data to the cloud.20 However, this shift introduces new engineering complexities centered around optimizing sophisticated algorithms to run effectively on resource-constrained hardware.28 This necessitates the use of specialized software frameworks, optimization techniques, and sometimes dedicated hardware accelerators, adding layers to the development and deployment process.

Furthermore, the operational aspects of managing AI models once deployed at the edge are just as critical as the initial development. An edge AI system is not a “deploy-and-forget” solution. Continuous monitoring of model performance, managing updates across a distributed fleet, ensuring security, and adapting to changing conditions are ongoing tasks.34 This requires adopting robust MLOps (Machine Learning Operations) practices specifically tailored for the unique challenges of the edge environment, ensuring the long-term reliability and value of the predictive maintenance solution.

8. Putting It All Together: Use Case, Best Practices, and Future Trends

To illustrate the practical application of Edge AI for PdM, this section walks through a common industrial scenario, then summarizes best practices and emerging trends that help organizations apply the technology successfully.

Predictive Maintenance Use Cases by Industry

Common applications of Edge AI-powered Predictive Maintenance

| Manufacturing | Energy | Transportation |
|---|---|---|
| CNC Machine Monitoring; Assembly Line Robotics | Wind Turbine Monitoring; Power Transformer Health | Railway Predictive Maintenance; Fleet Vehicle Maintenance |

| Healthcare | Oil & Gas | Smart Buildings |
|---|---|---|
| Medical Equipment Monitoring | Pump & Compressor Monitoring | HVAC System Optimization |

8.1 Illustrative Use Case: AI-Powered Vibration Monitoring for Industrial Motor PdM

Scenario: A manufacturing plant relies on numerous critical induction motors for its production lines.4 Unplanned failures of these motors lead to significant downtime and production losses. Common failure modes include bearing degradation, rotor imbalance, and shaft misalignment, all of which manifest as changes in vibration patterns.

Problem: Implement a system for early detection of developing motor faults to enable proactive maintenance scheduling and prevent unexpected failures.

Edge AI Solution:

- Sensors: MEMS accelerometers (measuring vibration) are attached magnetically or bolted to the housing of each critical motor. A temperature sensor might also be included.

- Edge Device: Depending on complexity and power constraints:

- Option A (Simpler): A capable microcontroller board (e.g., STM32H7 or ESP32-S3 with sufficient processing power and ADC capabilities) directly connected to the sensors.

- Option B (More Complex): A small industrial gateway (like a Raspberry Pi Compute Module in an industrial enclosure or a dedicated device 26) connected to sensors, potentially incorporating an AI accelerator (e.g., Google Coral USB Accelerator) if complex models are needed.

- Data Preprocessing (Performed on the Edge Device):

- Continuously acquire raw vibration data (time-domain waveforms) at a sampling rate at least twice the highest fault frequency of interest (e.g., several kHz).

- Apply digital filtering to remove noise outside the frequency range of interest.

- Segment the data into overlapping windows (e.g., 1-second intervals).

- For each window, compute the Fast Fourier Transform (FFT) to obtain the vibration frequency spectrum.

- Extract key features from the time domain (e.g., RMS, peak, crest factor) and frequency domain (e.g., energy in specific frequency bands known to correlate with bearing faults, imbalance frequencies, etc.).

- AI Model (Trained in Cloud, Optimized & Deployed on Edge Device):

- Option 1 (Anomaly Detection): An Isolation Forest or an Autoencoder model trained on features extracted during periods of known healthy motor operation. An Autoencoder can run on the edge device using TensorFlow Lite; an Isolation Forest, which is not a neural network, would instead need to be converted for a suitable runtime or re-implemented on the device. Either model outputs an anomaly score for each new data window. Scores exceeding a predefined threshold indicate a deviation from normal behavior.

- Option 2 (Fault Classification/RUL): A more complex model, perhaps a Convolutional Neural Network (CNN) trained on spectrogram images (visual representations of the FFT over time) or a Random Forest trained on the extracted features, to classify the type of developing fault (e.g., “Bearing Wear Stage 1”, “Imbalance”, “Misalignment”). Alternatively, an LSTM-based regression model could be trained to predict the Remaining Useful Life (RUL) in operating hours.4 The deep learning options (CNN, LSTM) would likely require an SBC or accelerator.

- Workflow:

- The edge device continuously monitors sensors, preprocesses data, and extracts features.

- The optimized AI model runs inference on the features/spectrograms.

- If the anomaly score exceeds a threshold, a specific fault is classified, or the predicted RUL drops below a critical level, the edge device generates an alert.

- The alert (containing device ID, timestamp, anomaly score/fault type/RUL estimate, and perhaps key feature values) is sent via the plant’s network (e.g., MQTT over Wi-Fi/Ethernet) or cellular IoT to a central monitoring dashboard and potentially triggers a work order in the plant’s Computerized Maintenance Management System (CMMS).4

- Only alerts and summary data are sent; raw high-frequency vibration data stays local, minimizing network load.

- Benefits Realized: Early warning of potential motor failures allows maintenance to be scheduled during planned downtime, preventing costly emergency shutdowns. When a classification model is used, maintenance can target the specific issue identified by the AI. Overall motor lifespan may be extended through timely interventions. The scenario also demonstrates the key edge advantages: low-latency detection, significant bandwidth savings, and reliable local monitoring.
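The preprocessing and alerting steps of this use case can be sketched end to end in pure Python. This is an illustration under several assumptions, not a reference implementation. All function names are hypothetical, the device ID `motor-07` is invented, and a plain RMS limit stands in for the anomaly score that a trained model would produce. The naive DFT is used only for readability; a real device would call an optimized FFT routine.

```python
import cmath
import json
import math


def sliding_windows(samples, size, step):
    """Yield fixed-size windows; a step smaller than size gives overlap."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]


def time_features(window):
    """Time-domain features: RMS, peak amplitude, and crest factor."""
    rms = math.sqrt(sum(x * x for x in window) / len(window))
    peak = max(abs(x) for x in window)
    crest = peak / rms if rms > 0 else 0.0
    return {"rms": rms, "peak": peak, "crest_factor": crest}


def band_energy(window, sample_rate_hz, f_lo, f_hi):
    """Sum of squared DFT magnitudes for bins with frequency in [f_lo, f_hi].

    Naive O(n^2) DFT for clarity only.
    """
    n = len(window)
    energy = 0.0
    for k in range(n // 2 + 1):
        freq = k * sample_rate_hz / n
        if f_lo <= freq <= f_hi:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(window))
            energy += abs(coeff) ** 2
    return energy


def build_alert(device_id, timestamp, features, rms_limit):
    """Return a JSON alert string if RMS exceeds the limit, otherwise None."""
    if features["rms"] <= rms_limit:
        return None
    return json.dumps({
        "device_id": device_id,
        "timestamp": timestamp,
        "reason": "rms_above_limit",
        "features": features,
    })


# Synthetic test signal: a 50 Hz sine sampled at 400 Hz (64 samples = 8 cycles).
SAMPLE_RATE_HZ = 400
signal = [math.sin(2 * math.pi * 50 * i / SAMPLE_RATE_HZ) for i in range(64)]

features = time_features(signal)
features["band_40_60_hz"] = band_energy(signal, SAMPLE_RATE_HZ, 40, 60)
alert = build_alert("motor-07", "2025-01-01T00:00:00Z", features, rms_limit=0.5)
```

The resulting JSON string is the kind of compact payload that could be published over MQTT, while the raw samples never leave the device.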

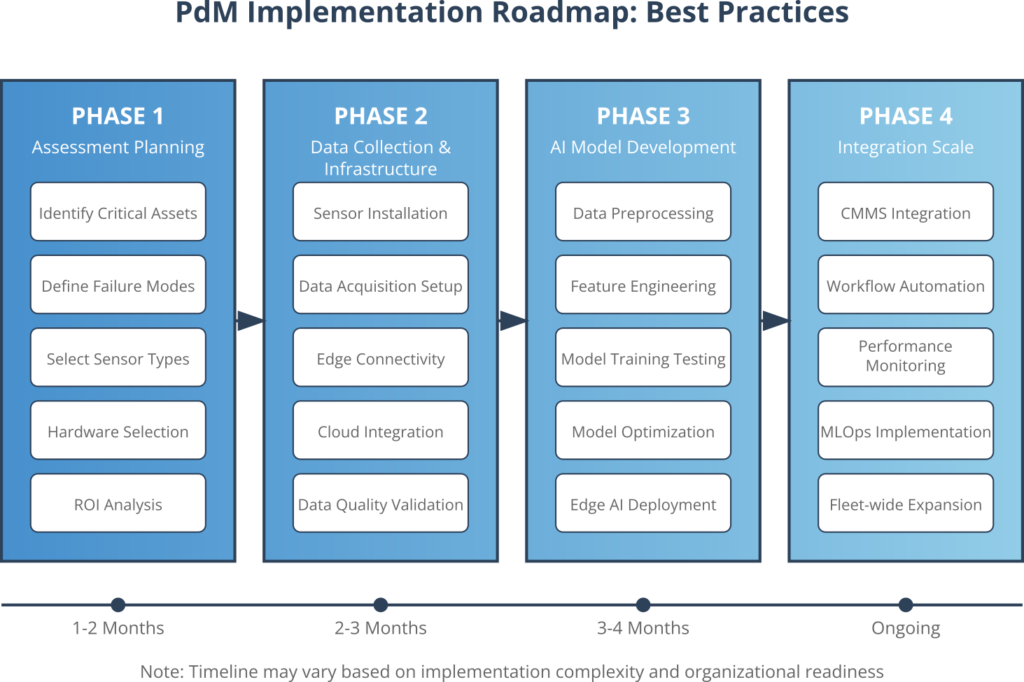

8.2 Best Practices for Successful Edge AI PdM Implementation

Deploying Edge AI for PdM effectively requires careful planning and execution:

- Start Small and Focused: Begin with a pilot project targeting a specific type of critical asset with well-understood failure modes. Define clear objectives and metrics for success. Prove the value proposition before attempting large-scale rollouts.

- Leverage Domain Expertise: Deep understanding of the equipment’s mechanics, operating environment, and common failure mechanisms is crucial for selecting the right sensors, identifying relevant data features, and interpreting model outputs correctly. Collaboration between maintenance engineers and data scientists is key.

- Prioritize Data Quality: Ensure sensors are correctly installed, calibrated, and provide reliable data. Implement robust data validation and cleaning steps, ideally at the edge, as AI models are sensitive to poor input data (“garbage in, garbage out”).

- Select Appropriate Edge Hardware: Carefully balance the computational requirements of the AI model with the cost, power consumption, environmental ratings, and connectivity needs of the target edge platform. Avoid over-provisioning or under-provisioning.

- Optimize Models Rigorously: Employ techniques like quantization, pruning, and knowledge distillation to create models that are both accurate and efficient enough to run within the constraints of the chosen edge hardware.

- Implement Robust MLOps for Edge: Plan the entire model lifecycle from the outset. Establish processes for deploying models to edge devices, monitoring their performance in real-time, logging relevant data and predictions, diagnosing issues remotely, and securely delivering OTA updates.

- Ensure End-to-End Security: Address security at all levels: physical device security, secure boot, data encryption (at rest and in transit), model integrity protection, secure communication protocols, and secure cloud backend integration.

- Plan for Integration: Determine how the insights generated by the edge AI system will integrate into existing operational workflows and systems, such as CMMS for automated work order generation, SCADA systems for operator alerts, or ERP systems for inventory planning.2

- Foster Cross-Functional Collaboration: Successful Edge AI PdM projects require close collaboration between OT teams (who understand the machines and maintenance processes) and IT/Data Science teams (who understand data, AI, and system integration).34
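The model integrity and secure OTA update practices above can be illustrated with a minimal check that an edge device might run before loading a newly delivered model file. This sketch uses an HMAC with a shared secret purely for brevity. The function names and key are hypothetical, and production systems more commonly rely on asymmetric signatures combined with secure boot and hardware-protected key storage.

```python
import hashlib
import hmac


def sign_model(model_bytes, key):
    """Compute an HMAC-SHA256 tag over the model file contents."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes, key, expected_tag):
    """Return True only if the tag matches, using a constant-time comparison."""
    actual_tag = sign_model(model_bytes, key)
    return hmac.compare_digest(actual_tag, expected_tag)


# Hypothetical update: the backend signs the model, the device verifies it.
shared_key = b"example-key-provisioned-at-manufacture"
model_file = b"\x00optimized-model-v2\x00"
tag = sign_model(model_file, shared_key)

print(verify_model(model_file, shared_key, tag))
print(verify_model(model_file + b"tampered", shared_key, tag))
```

A device would refuse to load any model that fails this check and keep running the previous version, which also gives a simple rollback path for failed updates.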

8.3 The Road Ahead: Future Trends

The field of Edge AI for PdM is rapidly evolving, with several key trends shaping its future:

- TinyML: Continued advancements in model optimization and hardware are enabling ML capabilities on even smaller, lower-power microcontrollers (MCUs). This allows for embedding intelligence directly into components or very small sensors, enabling PdM in applications previously thought impossible due to power or cost constraints.

- Federated Learning: A distributed ML approach where models are trained across multiple decentralized edge devices holding local data samples, without exchanging the raw data itself. Devices train models locally and share only model updates (e.g., gradients or updated weights) with a central server for aggregation into a global model. This enhances data privacy, reduces communication overhead compared with uploading raw data, and allows models to adapt to local conditions.

- Prescriptive Maintenance: Moving beyond prediction to recommendation. Future AI systems will increasingly suggest the optimal maintenance actions, timing, and necessary resources based on the predicted failure mode, severity, and operational context, potentially automating maintenance decisions.36

- Digital Twins: Creating dynamic virtual representations of physical assets, continuously updated with real-time data streamed from edge sensors.2 Edge AI provides the local processing needed to feed these digital twins with timely condition information. Digital twins allow for simulating “what-if” scenarios, testing maintenance strategies virtually, and gaining deeper insights into asset health and performance degradation.

- Enhanced Sensor Fusion: Combining data from multiple diverse sensors (e.g., vibration, temperature, acoustics, thermal imaging, current sensors) at the edge. AI models capable of fusing these multi-modal data streams can provide more accurate, robust, and comprehensive assessments of equipment health than relying on a single sensor type.

- Standardization and Interoperability: As edge deployments grow, there is increasing focus on developing standards for edge platforms, communication protocols (like OPC UA over MQTT), and data formats to simplify integration between different vendors’ hardware and software components and ensure better interoperability within industrial ecosystems.35
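The aggregation step in federated learning can be sketched as a sample-weighted average of client model weights, in the style of federated averaging. The function name and the two-parameter toy models are hypothetical. Real systems add client selection, secure aggregation, and many training rounds, none of which is shown here.

```python
def federated_average(client_updates):
    """Combine client weight vectors, weighting each by its local sample count.

    client_updates: list of (weights, num_samples) tuples, where every
    weights list has the same length.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]


# Two hypothetical edge sites with different amounts of local data.
site_a = ([1.0, 2.0], 100)
site_b = ([3.0, 4.0], 300)

global_weights = federated_average([site_a, site_b])
print(global_weights)
```

Only the weight vectors and sample counts cross the network in this scheme; the raw sensor data used for local training stays on each device.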

Successfully implementing Edge AI for PdM demands a blend of expertise spanning mechanical or electrical engineering (understanding the asset), data science (building the models), embedded systems engineering (deploying on constrained hardware), and IT/OT integration (connecting to plant systems).4 It is an inherently multidisciplinary endeavor where siloed approaches are unlikely to succeed.

Emerging trends like Federated Learning directly tackle the inherent challenges of data privacy and model personalization in distributed systems, while Prescriptive Maintenance promises to further automate and optimize the maintenance process itself.36 These advancements signal a maturation towards more intelligent, autonomous, and seamlessly integrated edge solutions that will continue to transform how industries manage their critical assets.

9. Conclusion: The Future is Predictive and Edged

Predictive maintenance, powered by artificial intelligence running on edge devices, represents a significant leap forward from traditional maintenance paradigms. By leveraging real-time sensor data and intelligent algorithms executed locally, organizations can move from reactive or scheduled interventions to truly condition-based maintenance, anticipating failures before they occur.

The advantages are clear and compelling: substantially reduced unplanned downtime, optimized maintenance scheduling leading to cost savings, extended equipment lifespan, enhanced operational efficiency, and improved safety.8 Edge computing is the critical enabler, overcoming the latency, bandwidth, privacy, and reliability challenges associated with sending massive amounts of industrial data solely to the cloud.20 AI/ML provides the analytical power to decipher complex patterns within sensor data, transforming raw measurements into actionable predictive insights directly where they are needed most.2

However, the journey to successful Edge AI implementation is not without its challenges. Navigating the constraints of edge hardware resources, optimizing complex AI models for efficiency, managing power consumption, ensuring robust security, and handling the lifecycle management of models across distributed fleets require careful engineering and strategic planning.28

Despite these hurdles, Edge AI has substantial potential to transform predictive maintenance across diverse sectors, from manufacturing and energy to transportation and healthcare.3 As edge hardware becomes more powerful and energy-efficient, AI models become leaner through techniques like TinyML, and MLOps tools mature to handle the complexities of edge deployments, adoption of Edge AI-driven predictive maintenance is set to accelerate. It is moving from a niche capability toward standard practice for organizations seeking to maximize asset performance, ensure operational resilience, and stay competitive in an increasingly connected, data-driven world.

Works Cited

- What Is IoT Predictive Maintenance? – PTC, access date April 30, 2025, https://www.ptc.com/en/blogs/iiot/what-is-iot-predictive-maintenance#:~:text=IoT%2Dbased%20predictive%20maintenance%20uses,maintenance%20costs%2C%20but%20also%20downtime.

- What is Predictive Maintenance? – IBM, access date April 30, 2025, https://www.ibm.com/think/topics/predictive-maintenance

- Leveraging Industrial IoT Applications for Predictive Maintenance and Enhanced Safety, access date April 30, 2025, https://www.numberanalytics.com/blog/industrial-iot-applications-predictive-maintenance-safety

- Industrial Iot and its maintenance – ElifTech, access date April 30, 2025, https://www.eliftech.com/insights/industrial-iot-predictive-maintenance-eliftech/

- Switching From Reactive to Predictive Maintenance – Kaizen Institute, access date April 30, 2025, https://kaizen.com/insights/reactive-predictive-maintenance/

- What Is IoT Predictive Maintenance? – PTC, access date April 30, 2025, https://www.ptc.com/en/blogs/iiot/what-is-iot-predictive-maintenance

- IoT Predictive Maintenance: Components, Use Cases & Benefits – Xyte, access date April 30, 2025, https://www.xyte.io/blog/iot-predictive-maintenance

- Predictive Maintenance in IIoT: Leveraging Time Series Data for Equipment Longevity, access date April 30, 2025, https://www.iiot-world.com/predictive-analytics/predictive-maintenance/predictive-maintenance-iiot-time-series-data/

- A Guide to Industry 4.0 Predictive Maintenance | IoT For All, access date April 30, 2025, https://www.iotforall.com/a-guide-to-industry-4-0-predictive-maintenance

- 5 Benefits of IoT Predictive Maintenance in Manufacturing | Inwedo Blog, access date April 30, 2025, https://inwedo.com/blog/iot-predictive-maintenance-in-manufacturing/

- IoT Predictive Maintenance | AspenTech, access date April 30, 2025, https://www.aspentech.com/en/cp/iot-predictive-maintenance

- CMMS Terms – Preventive, Corrective, Reactive, Predictive – Maintainly, access date April 30, 2025, https://maintainly.com/articles/demystifying-cmms-maintenance-terms

- Comparing Reactive vs. Preventive vs. Predictive Maintenance Strategies – Makula, access date April 30, 2025, https://www.makula.io/blog/reactive-maintenance-vs-predictive-analytics-vs-preventive-maintenance-comparing-strategies

- Cloud and Edge Computing: Making Best Use of Computing Resources – ISA Interchange, access date April 30, 2025, https://blog.isa.org/cloud-and-edge-computing-making-best-use-of-computing-resources

- Predictive vs. Preventive vs. Condition-based: The 3 Types of Proactive Maintenance, access date April 30, 2025, https://solutions.borderstates.com/blog/types-of-proactive-maintenance/

- Reactive Vs. Preventive Vs. Predictive Maintenance | Prometheus Group, access date April 30, 2025, https://www.prometheusgroup.com/resources/posts/reactive-vs-preventive-vs-predictive-maintenance

- Facility Maintenance: The Difference between Reactive, Preventative, and Predictive, access date April 30, 2025, https://www.gridpoint.com/blog/reactive-preventative-and-predictive-maintenance/

- Reactive Maintenance vs. Preventive Maintenance vs. Predictive Maintenance – City Facilities Management US, access date April 30, 2025, https://www.cityfm.us/blog/reactive-maintenance-vs-preventive-maintenance/

- Preventive Maintenance vs Predictive Maintenance vs Proactive Maintenance – Fluke Corporation, access date April 30, 2025, https://www.fluke.com/en-us/learn/blog/predictive-maintenance/preventive-vs-predictive-vs-proactive

- Edge Computing Guide: Transforming Real-Time Data Processing – Cavli Wireless, access date April 30, 2025, https://www.cavliwireless.com/blog/nerdiest-of-things/edge-computing-for-iot-real-time-data-and-low-latency-processing

- Edge Computing and Predictive Maintenance | Best Practices & Techniques, access date April 30, 2025, https://vtricks.in/blog/edge-computing-and-predictive-maintenance.html

- Edge computing is transforming the way we process data – SEEBURGER Blog, access date April 30, 2025, https://blog.seeburger.com/what-is-edge-computing-and-why-is-edge-computing-important-for-processing-real-time-data/

- Bringing IoT to the Edge: How Edge Computing is Transforming Data Analysis on the Cloud, access date April 30, 2025, https://blog.clearscale.com/bringing-iot-to-the-edge-how-edge-computing-is-transforming-data-analysis-on-the-cloud/

- The Rise of Edge Computing: Transforming the Future of Data Processing – Atlanta Technology Professionals, access date April 30, 2025, https://atpconnect.org/the-rise-of-edge-computing-transforming-the-future-of-data-processing/

- What is Edge Computing and Why is it Important? | CSA – Cloud Security Alliance, access date April 30, 2025, https://cloudsecurityalliance.org/articles/what-is-edge-computing-and-why-is-it-important

- Predictive Maintenance Enabled with Edge AI Computing & Industrial Com – Premio Inc, access date April 30, 2025, https://premioinc.com/blogs/blog/predictive-maintenance-enabled-with-edge-ai-computing-industrial-computers

- Edge Computing: Use Cases in Manufacturing and IoT – PubPub, access date April 30, 2025, https://ijgis.pubpub.org/pub/uuh6pipb

- What Is Edge AI? – IBM, access date April 30, 2025, https://www.ibm.com/think/topics/edge-ai

- The Emerging Landscape of Edge-Computing. – Microsoft, access date April 30, 2025, https://www.microsoft.com/en-us/research/wp-content/uploads/2020/02/GetMobile__Edge_BW.pdf

- Improve Predictive Maintenance | Edge and Cloud Computing | DesignSpark, access date April 30, 2025, https://www.rs-online.com/designspark/improve-predictive-maintenance-with-edge-and-cloud

- Edge Computing Technology Enables Real-time Data Processing and Decision-Making, access date April 30, 2025, https://www.scalecomputing.com/resources/edge-computing-technology-enables-real-time-data-processing-and-decision-making

- AI and Edge Computing – Industries with Smarter and Faster Decisions, access date April 30, 2025, https://www.travancoreanalytics.com/en-us/ai-edge-computing/

- Top IoT Platforms for Global Industrial Development – Codewave, access date April 30, 2025, https://codewave.com/insights/top-iot-platforms-industrial-development/

- Industrial IoT – From Condition Based Monitoring to Predictive Quality to digitize your factory with AWS IoT Services, access date April 30, 2025, https://aws.amazon.com/blogs/iot/industrial-iot-from-condition-based-monitoring-to-predictive-quality-to-digitize-your-factory-with-aws-iot-services/

- The rising role of Industrial Edge Computing in the IIoT, access date April 30, 2025, https://iebmedia.com/technology/edge-cloud/rising-role-industrial-edge-in-iiot/

- On the Edge: Real-World Applications of IoT Edge for Predictive Maintenance – Sixfab, access date April 30, 2025, https://sixfab.com/blog/applications-of-iot-edge-for-predictive-maintenance/

- How AI At The Edge Transforms Predictive Maintenance And Automation – Forbes, access date April 30, 2025, https://www.forbes.com/councils/forbestechcouncil/2024/08/12/harnessing-the-power-of-ai-at-the-edge-transforming-predictive-maintenance-and-automation/