Chapter 68: Pthreads: Mutexes for Protecting Critical Sections

Chapter Objectives

By the end of this chapter, you will be able to:

- Understand the concepts of race conditions and critical sections in multithreaded applications.

- Explain the role of a mutex in enforcing mutual exclusion and protecting shared resources.

- Implement thread-safe C programs using the core POSIX threads (pthreads) mutex API, including pthread_mutex_init, pthread_mutex_lock, pthread_mutex_unlock, and pthread_mutex_destroy.

- Compile and execute multithreaded applications on a Raspberry Pi 5, analyzing their behavior with and without mutex protection.

- Debug common synchronization problems such as deadlocks and improper mutex usage.

- Apply best practices for designing and managing synchronized access to shared data in embedded Linux systems.

Introduction

In the world of embedded systems, performance and responsiveness are paramount. Modern multi-core processors, like the one powering the Raspberry Pi 5, offer immense computational power, which developers harness through multithreading. By dividing tasks into concurrent threads, an embedded application can manage a complex array of responsibilities—monitoring sensors, updating a display, managing network communication, and executing control logic—all seemingly at once. However, this concurrency introduces a subtle but significant challenge: managing shared resources.

When multiple threads attempt to read and write to the same memory location or hardware register simultaneously, the system’s state can become unpredictable and corrupted. This scenario, known as a race condition, is one of the most insidious bugs in concurrent programming. It may not appear during initial testing, only to surface intermittently in the field, causing system failures that are difficult to reproduce and diagnose. The solution lies in a mechanism that ensures only one thread can access a shared resource at any given time. This principle is called mutual exclusion.

This chapter introduces the primary tool for achieving mutual exclusion in POSIX-compliant systems: the mutex. A mutex (short for mutual exclusion) acts as a gatekeeper for a “critical section” of code—a block of instructions that accesses a shared resource. Before entering this section, a thread must “lock” the mutex. If another thread already holds the lock, any subsequent threads are forced to wait until the lock is released. By systematically protecting shared data with mutexes, we can transform an unpredictable, error-prone application into a robust, reliable, and thread-safe system. Using the Raspberry Pi 5 as our development platform, we will explore the theory behind mutexes and then apply it to practical, real-world examples.

Technical Background

The Anatomy of a Race Condition

To fully appreciate the necessity of mutexes, we must first dissect the problem they solve: the race condition. A race condition occurs when the outcome of a computation depends on the non-deterministic sequence or timing of operations executed by concurrent threads. The "race" is between threads to access and modify a shared resource. The "loser" of this race often ends up working with stale or incorrect data, which corrupts the program's state and can cause the system to fail.

Consider a simple embedded system that counts events, such as button presses or sensor triggers. A global integer variable, event_count, stores the total. One thread is responsible for detecting events and incrementing this counter, while another thread periodically reads the counter to report its value or perform an action.

The operation event_count++ seems simple, but at the machine level it is not a single, indivisible action. It is a non-atomic operation that typically involves three distinct steps:

- Read: Load the current value of event_count from memory into a CPU register.

- Modify: Increment the value within the CPU register.

- Write: Store the new value from the register back into the memory location of event_count.
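Written out as C, the three steps look roughly like the sketch below. A compiler emits machine instructions rather than this C, but the structure is the same. The function name increment_in_three_steps and the local variable reg (standing in for a CPU register) are hypothetical and used only for illustration.

```c
/* Hypothetical illustration: event_count++ spelled out as three steps.
 * 'reg' plays the role of a CPU register private to the running thread. */
int event_count = 0;

void increment_in_three_steps(void)
{
    int reg = event_count;   /* Read: load the shared value */
    reg = reg + 1;           /* Modify: increment the private copy */
    event_count = reg;       /* Write: store the result back to memory */
}
```

Nothing stops another thread from running between any two of these statements.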

Now, imagine two threads, Thread A and Thread B, trying to increment event_count at nearly the same time. Let’s say the initial value of event_count is 10. The following sequence of events could unfold:

- Thread A executes the Read step, loading the value 10 into its CPU register.

- The operating system’s scheduler preempts Thread A, pausing its execution to run Thread B. This context switch can happen at any moment.

- Thread B executes all three steps of its increment operation: it reads 10, modifies it to 11, and writes 11 back to event_count. The shared variable now correctly holds the value 11.

- The scheduler switches back to Thread A, which resumes exactly where it left off. It has already read the value 10 into its register.

- Thread A executes its Modify step, incrementing its local register value from 10 to 11.

- Thread A executes its Write step, storing the value 11 back into event_count.

The final value of event_count is 11. However, two separate increment events occurred, so the correct value should be 12. One of the increments has been completely lost because of the unfortunate timing of the context switch. This is the essence of a race condition.

On a multi-core processor such as the Raspberry Pi 5's, the same lost update can also happen without any context switch. The two threads may run truly in parallel on different cores, and their reads and writes can interleave in the same way.

The section of code where the shared resource (event_count) is accessed is known as the critical section. To prevent this data corruption, we must make the three-step read-modify-write sequence atomic, meaning that it completes without interference from other threads.
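The lost update can be replayed deterministically in a single thread. Each simulated thread gets its own "register" variable, and the steps run in the order listed above. This is a hypothetical sketch: replay_lost_update, reg_a, and reg_b are illustrative names. No real threads are involved, which shows that the wrong result comes from the ordering of the steps alone.

```c
/* Replays the interleaving step by step. reg_a and reg_b stand in for
 * the CPU registers used by Thread A and Thread B. */
int replay_lost_update(void)
{
    int event_count = 10;
    int reg_a, reg_b;

    reg_a = event_count;   /* Thread A: Read (10), then A is preempted */
    reg_b = event_count;   /* Thread B: Read (10) */
    reg_b = reg_b + 1;     /* Thread B: Modify (11) */
    event_count = reg_b;   /* Thread B: Write (11) */
    reg_a = reg_a + 1;     /* Thread A resumes with its stale copy: Modify (11) */
    event_count = reg_a;   /* Thread A: Write (11), overwriting B's update */

    return event_count;    /* 11, even though two increments were performed */
}
```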

sequenceDiagram

participant TA as Thread A

participant TB as Thread B

participant SV as Shared Variable (count = 10)

rect rgba(253, 230, 138, 0.3)

Note over TA, TB: Both threads attempt to execute count++

end

TA->>SV: 1. Read value (10)

Note over TA: CPU Register A = 10

critical OS Scheduler Preemption

TA-->>TB: Context Switch

end

TB->>SV: 2. Read value (10)

Note over TB: CPU Register B = 10

TB->>TB: 3. Increment Register B (10 -> 11)

TB->>SV: 4. Write value (11)

Note over SV: count is now 11

critical OS Scheduler Resumes Thread A

TB-->>TA: Context Switch

end

TA->>TA: 5. Increment Register A (10 -> 11)

Note over TA: Resumes with stale data!

TA->>SV: 6. Write value (11)

rect rgba(239, 68, 68, 0.4)

Note over SV: Final Incorrect Value: 11<br>Expected Value: 12<br>An increment was lost!

end

The Mutex: A Lock for Critical Sections

A mutex is a synchronization primitive that provides a locking mechanism to enforce mutual exclusion. Think of it as a key to a room. The room is the critical section, and only the thread holding the key is allowed to enter. If a thread arrives and the room is locked, it must wait outside until the current occupant leaves and returns the key.

The POSIX standard defines a rich API for creating and managing threads, and with it, a set of functions for handling mutexes. The core of this API revolves around a special data type, pthread_mutex_t, and four main functions:

- pthread_mutex_init(): Initializes a mutex object.

- pthread_mutex_lock(): Acquires the lock on a mutex.

- pthread_mutex_unlock(): Releases the lock on a mutex.

- pthread_mutex_destroy(): Cleans up and destroys a mutex object.

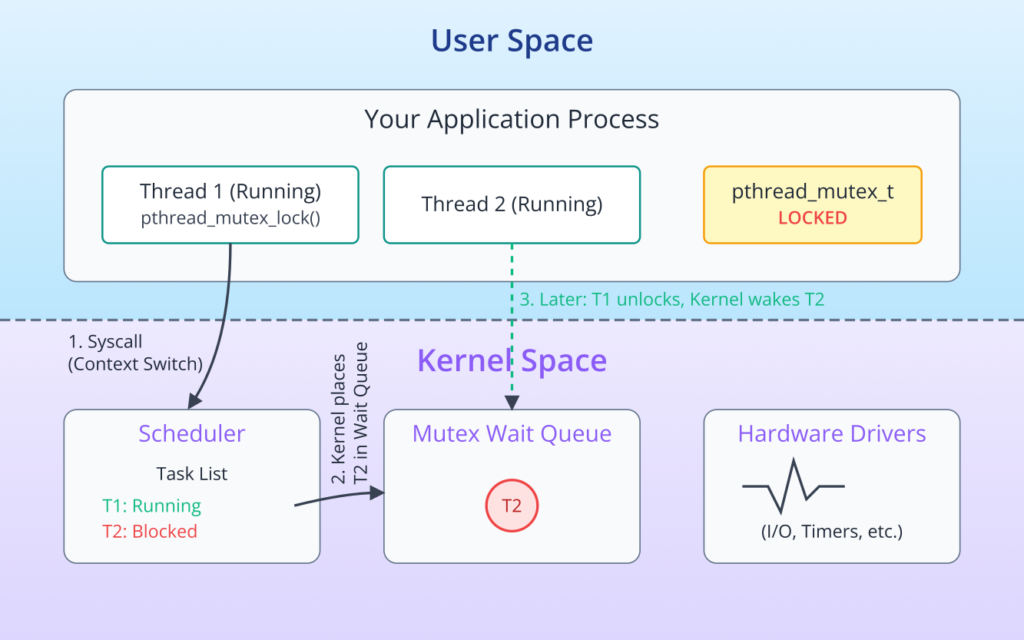

When a thread is about to enter a critical section, it must first call pthread_mutex_lock(). If the mutex is currently unlocked, the thread acquires the lock immediately and proceeds into the critical section. Testing the mutex and acquiring it happen as one atomic step, so two threads can never both succeed in locking the same mutex. If the mutex is already locked by another thread, the kernel puts the calling thread into a blocked (sleeping) state, and it consumes no CPU cycles while it waits. When the mutex is released, the kernel wakes a waiting thread so that it can acquire the lock.

Once the thread has finished its work within the critical section, it must call pthread_mutex_unlock(). This releases the lock and makes it available to other waiting threads. If a thread fails to unlock a mutex, every other thread waiting for that mutex is blocked indefinitely. Those threads are starved of the lock, and the program hangs just as it would in a deadlock.
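A common way to "forget" an unlock is an early return from inside the critical section. One defensive pattern is to route every exit through a single unlock call, as in the sketch below. The names queue_mutex, queue_len, QUEUE_CAPACITY, and reserve_slot are hypothetical.

```c
#include <pthread.h>
#include <stdbool.h>

#define QUEUE_CAPACITY 8   /* hypothetical capacity for illustration */

static pthread_mutex_t queue_mutex = PTHREAD_MUTEX_INITIALIZER;
static int queue_len = 0;  /* shared state protected by queue_mutex */

/* Reserves one slot if one is free. */
bool reserve_slot(void)
{
    bool reserved = false;

    pthread_mutex_lock(&queue_mutex);
    /* Buggy alternative: `if (queue_len >= QUEUE_CAPACITY) return false;`
     * at this point would return with queue_mutex still locked. */
    if (queue_len < QUEUE_CAPACITY) {
        queue_len++;
        reserved = true;
    }
    pthread_mutex_unlock(&queue_mutex);   /* single exit from the critical section */
    return reserved;
}
```

With several failure paths, C code often uses a `goto` to a single cleanup label for the same reason.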

graph TD

subgraph Thread A

A_Start[Start Increment]

A_TryLock{Attempt Lock<br>pthread_mutex_lock}

A_Crit[<b>Critical Section</b><br>1. Read count<br>2. Increment count<br>3. Write count]

A_Unlock[Unlock Mutex<br>pthread_mutex_unlock]

A_End[End Increment]

end

subgraph Thread B

B_Start[Start Increment]

B_TryLock{Attempt Lock<br>pthread_mutex_lock}

B_Crit[<b>Critical Section</b><br>1. Read count<br>2. Increment count<br>3. Write count]

B_Unlock[Unlock Mutex<br>pthread_mutex_unlock]

B_End[End Increment]

end

Mutex(fa:fa-lock Mutex)

A_Start --> A_TryLock

A_TryLock -- Lock Acquired --> Mutex -- Locked by A --> A_Crit

A_Crit --> A_Unlock

A_Unlock -- Lock Released --> Mutex

Mutex -- Unlocked --> A_End

B_Start --> B_TryLock

B_TryLock -- Blocked! --> Mutex

Mutex -- Woken up by Kernel --> B_Crit

B_Crit --> B_Unlock

B_Unlock -- Lock Released --> Mutex

Mutex -- Unlocked --> B_End

%% Styling

classDef default fill:#f8fafc,stroke:#64748b,stroke-width:1px,color:#1f2937

classDef startNode fill:#1e3a8a,stroke:#1e3a8a,stroke-width:2px,color:#ffffff

classDef endNode fill:#10b981,stroke:#10b981,stroke-width:2px,color:#ffffff

classDef process fill:#0d9488,stroke:#0d9488,stroke-width:1px,color:#ffffff

classDef decision fill:#f59e0b,stroke:#f59e0b,stroke-width:1px,color:#ffffff

classDef system fill:#8b5cf6,stroke:#8b5cf6,stroke-width:1px,color:#ffffff

class A_Start,B_Start startNode

class A_End,B_End endNode

class A_Crit,B_Crit process

class A_TryLock,B_TryLock decision

class A_Unlock,B_Unlock system

class Mutex system

This lock-unlock mechanism guarantees that the sequence of operations within the critical section is performed atomically from the perspective of every other thread that locks the same mutex. Code that touches the shared data without taking the mutex gets no protection at all. By wrapping our event_count++ operation inside a mutex lock and unlock, we ensure that the read-modify-write sequence completes without interference.
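Applied to the event counter described earlier, the idea might look like the sketch below. The reporting thread locks the same mutex as the incrementing thread, because a mutex only protects data if every access goes through it. The names event_count_mutex, record_event, and read_event_count are hypothetical.

```c
#include <pthread.h>

static pthread_mutex_t event_count_mutex = PTHREAD_MUTEX_INITIALIZER;
static long event_count = 0;   /* protected by event_count_mutex */

/* Called by the event-detecting thread. */
void record_event(void)
{
    pthread_mutex_lock(&event_count_mutex);
    event_count++;             /* read-modify-write runs without interference */
    pthread_mutex_unlock(&event_count_mutex);
}

/* Called by the reporting thread. Reading under the same mutex avoids a
 * data race and sees every increment completed before the lock was taken. */
long read_event_count(void)
{
    pthread_mutex_lock(&event_count_mutex);
    long value = event_count;
    pthread_mutex_unlock(&event_count_mutex);
    return value;
}
```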

The Lifecycle of a Mutex

A mutex, like any resource in a program, has a lifecycle. It must be created, used, and then properly disposed of.

Initialization

There are two primary ways to initialize a mutex. The first, and simplest, is to use a static initializer for mutexes declared as global or static variables. This is sufficient for many applications.

pthread_mutex_t my_mutex = PTHREAD_MUTEX_INITIALIZER;

The PTHREAD_MUTEX_INITIALIZER macro expands to the values needed to initialize a pthread_mutex_t object with default attributes. This method is convenient but inflexible, because it cannot select non-default attributes such as the mutex type.

For more control, or for mutexes allocated dynamically on the heap, you must use the pthread_mutex_init() function.

int pthread_mutex_init(pthread_mutex_t *restrict mutex, const pthread_mutexattr_t *restrict attr);

The first argument, mutex, is a pointer to the pthread_mutex_t object to be initialized. The second argument, attr, is a pointer to a mutex attributes object. If you pass NULL for attr, the mutex is initialized with default attributes, making it behave identically to one initialized with PTHREAD_MUTEX_INITIALIZER. This function returns 0 on success and a positive error number on failure.

Using an attributes object allows you to configure advanced properties of the mutex, such as its type. For example, you can create a “recursive” mutex that can be locked multiple times by the same thread without causing a deadlock, or an “error-checking” mutex that returns an error if a thread tries to lock a mutex it already owns. For most general-purpose use cases in embedded systems, the default attributes are appropriate.
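As an illustration of mutex attributes, the sketch below creates a recursive mutex with pthread_mutexattr_settype(). A test run could then lock it twice from the same thread. Relocking is not allowed with a default mutex and typically deadlocks on Linux. The helper make_recursive_mutex is a hypothetical name. The _XOPEN_SOURCE 700 definition is an assumption: glibc may otherwise hide PTHREAD_MUTEX_RECURSIVE when compiling in strict standard modes.

```c
#define _XOPEN_SOURCE 700   /* expose PTHREAD_MUTEX_RECURSIVE in strict modes */
#include <pthread.h>

/* Initializes *m as a recursive mutex. Returns 0 or an error number. */
int make_recursive_mutex(pthread_mutex_t *m)
{
    pthread_mutexattr_t attr;
    int rc = pthread_mutexattr_init(&attr);
    if (rc != 0)
        return rc;

    rc = pthread_mutexattr_settype(&attr, PTHREAD_MUTEX_RECURSIVE);
    if (rc == 0)
        rc = pthread_mutex_init(m, &attr);

    /* The attributes object is no longer needed once the mutex exists. */
    pthread_mutexattr_destroy(&attr);
    return rc;
}
```

Each lock of a recursive mutex must be matched by an unlock. Other threads can acquire it only after the final unlock.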

Locking and Unlocking

The workhorses of the mutex API are the lock and unlock functions.

int pthread_mutex_lock(pthread_mutex_t *mutex);

int pthread_mutex_unlock(pthread_mutex_t *mutex);

pthread_mutex_lock() attempts to acquire the lock. As discussed, if the mutex is unavailable, the thread blocks until the lock is released. pthread_mutex_unlock() releases the lock, potentially waking up a blocked thread. Both functions return 0 on success and an error number on failure.

A critical design principle is to keep the code within the critical section (the region between the lock and unlock calls) as short and efficient as possible. The longer a mutex is held, the more time other threads may spend waiting for it, which can harm the overall performance and responsiveness of the system. Competition between threads for the same lock is known as lock contention, and long critical sections make it worse.
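One common way to keep a critical section short is to work in two phases. First, copy the shared data into a local variable while holding the lock. Then release the lock before doing any slow work such as formatting, I/O, or computation. The sensor_reading struct, latest_mutex, publish_reading, and report_reading are hypothetical names used only to illustrate the pattern.

```c
#include <pthread.h>
#include <stdio.h>

struct sensor_reading {
    double temperature_c;
    double humidity_pct;
};

static pthread_mutex_t latest_mutex = PTHREAD_MUTEX_INITIALIZER;
static struct sensor_reading latest;   /* protected by latest_mutex */

/* Writer: the lock covers only the struct assignment. */
void publish_reading(struct sensor_reading r)
{
    pthread_mutex_lock(&latest_mutex);
    latest = r;
    pthread_mutex_unlock(&latest_mutex);
}

/* Reader: take a consistent snapshot under the lock, then release it
 * before the comparatively slow snprintf call. */
int report_reading(char *buf, size_t len)
{
    pthread_mutex_lock(&latest_mutex);
    struct sensor_reading snapshot = latest;
    pthread_mutex_unlock(&latest_mutex);

    return snprintf(buf, len, "T=%.1f C, RH=%.1f %%",
                    snapshot.temperature_c, snapshot.humidity_pct);
}
```

Because the snapshot is copied under the lock, both fields are guaranteed to come from the same reading.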

In some scenarios, a thread cannot afford to block indefinitely. It might need to perform another task if the resource is not immediately available. For this, the pthreads library provides a non-blocking alternative:

int pthread_mutex_trylock(pthread_mutex_t *mutex);

pthread_mutex_trylock() attempts to acquire the lock just like pthread_mutex_lock(). However, if the mutex is already locked, the function does not block. Instead, it returns immediately with the error code EBUSY. This allows the application to implement alternative logic, such as logging a message, yielding the CPU, or trying again later.
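The sketch below exercises the EBUSY path. If the main thread holds the mutex while a helper thread runs trylock_worker, the helper's pthread_mutex_trylock() call returns EBUSY immediately instead of blocking. The names stats_mutex, stats_counter, try_increment, and trylock_worker are hypothetical.

```c
#include <errno.h>
#include <pthread.h>

static pthread_mutex_t stats_mutex = PTHREAD_MUTEX_INITIALIZER;
static long stats_counter = 0;   /* protected by stats_mutex */

/* Attempts a non-blocking update. Returns 0 if the update happened,
 * EBUSY if the lock is held elsewhere, or another error number. */
int try_increment(void)
{
    int rc = pthread_mutex_trylock(&stats_mutex);
    if (rc != 0)
        return rc;   /* caller decides: skip, retry later, or log */
    stats_counter++;
    pthread_mutex_unlock(&stats_mutex);
    return 0;
}

/* Thread entry point that stores try_increment's result in *arg. */
void *trylock_worker(void *arg)
{
    *(int *)arg = try_increment();
    return NULL;
}
```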

Destruction

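When a mutex is no longer needed, it should be destroyed so that any resources the implementation associated with it are released. The `pthread_mutex_destroy()` function handles this cleanup. It does not free the memory that holds the pthread_mutex_t itself. For a mutex stored in heap-allocated memory, call pthread_mutex_destroy() first and then free() the memory to avoid leaks.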

int pthread_mutex_destroy(pthread_mutex_t *mutex);

This function should only be called on a mutex that is unlocked. Attempting to destroy a locked mutex results in undefined behavior, which could mean a resource leak or a program crash. For statically initialized mutexes (using PTHREAD_MUTEX_INITIALIZER), calling pthread_mutex_destroy() is good practice but not strictly required by the POSIX standard, as the resources are part of the program's static memory. A dynamically initialized mutex (using pthread_mutex_init()), however, should always be destroyed before the memory containing it is freed or reused.

A complete and safe lifecycle for a dynamically managed mutex would look like this:

- Declare a pthread_mutex_t variable.

- Call pthread_mutex_init() to initialize it.

- Use pthread_mutex_lock() and pthread_mutex_unlock() to protect critical sections.

- When the mutex is no longer required, call pthread_mutex_destroy() to clean it up.

This disciplined management ensures that your application remains robust and free from resource-related bugs.
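The lifecycle above can be sketched for a heap-allocated object that owns its own mutex. In this sketch pthread_mutex_init() is paired with pthread_mutex_destroy(), and malloc() with free(). The event_log type and its three functions are hypothetical names used only for illustration.

```c
#include <pthread.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

struct event_log {
    pthread_mutex_t lock;   /* protects count */
    long count;
};

/* Declare and initialize: allocate the object, then pthread_mutex_init(). */
struct event_log *event_log_create(void)
{
    struct event_log *elog = malloc(sizeof *elog);
    if (elog == NULL)
        return NULL;

    /* pthread functions return an error number rather than setting errno. */
    int rc = pthread_mutex_init(&elog->lock, NULL);
    if (rc != 0) {
        fprintf(stderr, "pthread_mutex_init: %s\n", strerror(rc));
        free(elog);
        return NULL;
    }
    elog->count = 0;
    return elog;
}

/* Use: every access to the shared field is bracketed by lock/unlock. */
void event_log_record(struct event_log *elog)
{
    pthread_mutex_lock(&elog->lock);
    elog->count++;
    pthread_mutex_unlock(&elog->lock);
}

/* Destroy: the mutex must be unlocked and no longer used by any thread.
 * pthread_mutex_destroy() does not release memory, so free() follows. */
void event_log_destroy(struct event_log *elog)
{
    pthread_mutex_destroy(&elog->lock);
    free(elog);
}
```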

graph TD

Start((Start)) --> InitChoice{How to Initialize?};

InitChoice -- Static/Global --> StaticInit[Static Initialization<br><i>pthread_mutex_t m =<br>PTHREAD_MUTEX_INITIALIZER;</i>];

InitChoice -- Dynamic/Heap --> DynamicInit["Dynamic Initialization<br><i>pthread_mutex_init(&m, NULL);</i>"];

subgraph "Usage Loop"

direction LR

Lock[Lock<br><b>pthread_mutex_lock</b>]

CritSection{Critical Section<br>Access Shared Data}

Unlock[Unlock<br><b>pthread_mutex_unlock</b>]

Lock --> CritSection --> Unlock --> Lock;

end

StaticInit --> Lock;

DynamicInit --> Lock;

Unlock --> CheckDone{Finished with Mutex?};

CheckDone -- No --> Lock;

CheckDone -- Yes --> Destruction;

subgraph "Cleanup"

Destruction["Destroy Mutex<br><b>pthread_mutex_destroy</b><br><i>(Required for dynamic init)</i>"]

end

Destruction --> End((End));

%% Styling

classDef default fill:#f8fafc,stroke:#64748b,stroke-width:1px,color:#1f2937

classDef startNode fill:#1e3a8a,stroke:#1e3a8a,stroke-width:2px,color:#ffffff

classDef endNode fill:#10b981,stroke:#10b981,stroke-width:2px,color:#ffffff

classDef process fill:#0d9488,stroke:#0d9488,stroke-width:1px,color:#ffffff

classDef decision fill:#f59e0b,stroke:#f59e0b,stroke-width:1px,color:#ffffff

classDef check fill:#ef4444,stroke:#ef4444,stroke-width:1px,color:#ffffff

class Start,End startNode

class InitChoice,CheckDone decision

class StaticInit,DynamicInit,Lock,CritSection,Unlock,Destruction process

Practical Examples

Theory provides the foundation, but true understanding comes from hands-on implementation. In this section, we will build, run, and analyze a C program on the Raspberry Pi 5 that demonstrates both the problem of race conditions and the mutex-based solution.

Hardware and Software Setup

- Hardware: Raspberry Pi 5

- Operating System: Raspberry Pi OS (or any other Linux distribution)

- Compiler: GCC (GNU Compiler Collection), which is pre-installed on Raspberry Pi OS.

- Connection: You will need to access the terminal on your Raspberry Pi 5, either directly with a monitor and keyboard or remotely via SSH.

No special hardware connections are needed for this software-based example.

Example 1: Demonstrating the Race Condition

First, we will write a program that intentionally creates a race condition. Two threads will concurrently increment a shared global counter a large number of times. Without synchronization, we expect the final result to be incorrect.

File Structure

Create a directory for our project and a single source file within it.

mkdir ~/pthread-mutex-demo

cd ~/pthread-mutex-demo

touch race_condition.c

Code: race_condition.c

Open race_condition.c in a text editor (like nano or vim) and enter the following code.

// race_condition.c

// Demonstrates a race condition with two threads.

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#include <string.h>

// Define the number of increments each thread will perform.

// A large number makes the race condition more likely to manifest.

#define NUM_INCREMENTS 1000000

// The shared global variable that threads will access.

// 'volatile' forces the compiler to perform a real load and store on every

// increment instead of optimizing the loop away, so the race stays visible.

// It does NOT make the increment atomic or thread-safe.

volatile long long shared_counter = 0;

// The function that each thread will execute.

void* thread_function(void* arg) {

// The argument is not used in this example, but the function

// signature must match what pthread_create expects.

(void)arg;

printf("Thread starting to increment.\n");

for (long long i = 0; i < NUM_INCREMENTS; ++i) {

// This is the critical section.

// The read-modify-write operation on shared_counter is not atomic.

shared_counter++;

}

printf("Thread finished incrementing.\n");

return NULL;

}

int main(void) {

// Thread identifiers

pthread_t thread1, thread2;

// pthread functions return an error number instead of setting errno,

// so failures are reported with strerror(rc) rather than perror().

int rc;

printf("Starting program. Initial counter value: %lld\n", shared_counter);

printf("Each of the 2 threads will increment the counter %d times.\n", NUM_INCREMENTS);

// Create the first thread

rc = pthread_create(&thread1, NULL, thread_function, NULL);

if (rc != 0) {

fprintf(stderr, "Failed to create thread 1: %s\n", strerror(rc));

return 1;

}

// Create the second thread

rc = pthread_create(&thread2, NULL, thread_function, NULL);

if (rc != 0) {

fprintf(stderr, "Failed to create thread 2: %s\n", strerror(rc));

return 1;

}

// Wait for the first thread to finish

rc = pthread_join(thread1, NULL);

if (rc != 0) {

fprintf(stderr, "Failed to join thread 1: %s\n", strerror(rc));

return 1;

}

// Wait for the second thread to finish

rc = pthread_join(thread2, NULL);

if (rc != 0) {

fprintf(stderr, "Failed to join thread 2: %s\n", strerror(rc));

return 1;

}

// The expected final value is 2 * NUM_INCREMENTS.

// However, due to the race condition, the actual value will likely be less.

long long expected_value = 2 * NUM_INCREMENTS;

printf("\nProgram finished.\n");

printf("Expected final counter value: %lld\n", expected_value);

printf("Actual final counter value: %lld\n", shared_counter);

printf("Difference (lost increments): %lld\n", expected_value - shared_counter);

return 0;

}

Build and Run

1. Compile the code: Open a terminal on your Raspberry Pi 5. Navigate to the pthread-mutex-demo directory and compile the program using gcc. The -pthread flag is essential: it tells GCC to compile with POSIX threads support and to link against the POSIX threads library.

gcc race_condition.c -o race_condition -pthread

2. Run the program: Execute the compiled binary.

./race_condition

Expected Output

The exact output will vary slightly with each run due to the non-deterministic nature of thread scheduling, but it will look something like this:

Starting program. Initial counter value: 0

Each of the 2 threads will increment the counter 1000000 times.

Thread starting to increment.

Thread starting to increment.

Thread finished incrementing.

Thread finished incrementing.

Program finished.

Expected final counter value: 2000000

Actual final counter value: 1187432

Difference (lost increments): 812568

As predicted, the Actual final counter value is significantly less than the Expected final counter value. The program lost hundreds of thousands of increments because of the race condition. The two threads interfered with each other’s updates to shared_counter.

Example 2: Fixing the Race Condition with a Mutex

Now, let’s fix the problem. We will modify the program to include a mutex that protects the critical section.

File Structure

Create a new file in the same directory for the corrected version.

touch mutex_fix.c

Code: mutex_fix.c

Copy the contents of race_condition.c into mutex_fix.c and then modify it as follows. The changes are highlighted with comments.

// mutex_fix.c

// Fixes the race condition using a pthread mutex.

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#include <string.h>

#define NUM_INCREMENTS 1000000

// 'volatile' is kept only so this file mirrors race_condition.c. The mutex,

// not volatile, is what makes the increment safe.

volatile long long shared_counter = 0;

// --- CHANGE 1: Declare and initialize the mutex ---

// We use the static initializer for simplicity.

pthread_mutex_t counter_mutex = PTHREAD_MUTEX_INITIALIZER;

void* thread_function(void* arg) {

(void)arg;

printf("Thread starting to increment.\n");

for (long long i = 0; i < NUM_INCREMENTS; ++i) {

// --- CHANGE 2: Lock the mutex before the critical section ---

pthread_mutex_lock(&counter_mutex);

// This is the critical section.

// It is now protected by the mutex. Only one thread can

// execute this line at a time.

shared_counter++;

// --- CHANGE 3: Unlock the mutex after the critical section ---

pthread_mutex_unlock(&counter_mutex);

}

printf("Thread finished incrementing.\n");

return NULL;

}

int main(void) {

pthread_t thread1, thread2;

// pthread functions return an error number; they do not set errno.

int rc;

printf("Starting program. Initial counter value: %lld\n", shared_counter);

printf("Each of the 2 threads will increment the counter %d times.\n", NUM_INCREMENTS);

rc = pthread_create(&thread1, NULL, thread_function, NULL);

if (rc != 0) {

fprintf(stderr, "Failed to create thread 1: %s\n", strerror(rc));

return 1;

}

rc = pthread_create(&thread2, NULL, thread_function, NULL);

if (rc != 0) {

fprintf(stderr, "Failed to create thread 2: %s\n", strerror(rc));

return 1;

}

rc = pthread_join(thread1, NULL);

if (rc != 0) {

fprintf(stderr, "Failed to join thread 1: %s\n", strerror(rc));

return 1;

}

rc = pthread_join(thread2, NULL);

if (rc != 0) {

fprintf(stderr, "Failed to join thread 2: %s\n", strerror(rc));

return 1;

}

// --- CHANGE 4: Destroy the mutex when it's no longer needed ---

// Although not strictly necessary for statically initialized mutexes,

// it is good practice.

pthread_mutex_destroy(&counter_mutex);

long long expected_value = 2 * NUM_INCREMENTS;

printf("\nProgram finished.\n");

printf("Expected final counter value: %lld\n", expected_value);

printf("Actual final counter value: %lld\n", shared_counter);

printf("Difference (lost increments): %lld\n", expected_value - shared_counter);

return 0;

}

Build and Run

1. Compile the fixed version:

gcc mutex_fix.c -o mutex_fix -pthread

2. Run the program:

./mutex_fix

Expected Output

This time, the output will be consistent and correct every time you run it.

Starting program. Initial counter value: 0

Each of the 2 threads will increment the counter 1000000 times.

Thread starting to increment.

Thread starting to increment.

Thread finished incrementing.

Thread finished incrementing.

Program finished.

Expected final counter value: 2000000

Actual final counter value: 2000000

Difference (lost increments): 0

Success! Wrapping the shared_counter++ operation in pthread_mutex_lock() and pthread_mutex_unlock() makes the increment atomic with respect to the other thread, which locks the same counter_mutex before touching shared_counter. The final count is exactly as expected, and the race condition is eliminated. This simple example illustrates the central role of mutexes in writing correct concurrent programs.

Tip: The protected version will likely run noticeably slower than the unprotected one. Each of the two million increments now pays for a lock and an unlock, and the two threads contend for the same mutex. Synchronization always involves a trade-off between correctness and performance. In this case, correctness is non-negotiable.
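When that overhead matters, a common remedy is to lock less often rather than to drop the lock. In the sketch below, each thread counts in a private local variable, which needs no protection. It takes counter_mutex only once, to add its total to shared_counter. The globals from mutex_fix.c are restated so the block compiles on its own. The names batched_thread_function and local_count are hypothetical.

```c
#include <pthread.h>

#define NUM_INCREMENTS 1000000

long long shared_counter = 0;
pthread_mutex_t counter_mutex = PTHREAD_MUTEX_INITIALIZER;

/* Alternative to thread_function in mutex_fix.c: one lock/unlock per
 * thread instead of one per increment. */
void *batched_thread_function(void *arg)
{
    (void)arg;
    long long local_count = 0;   /* private to this thread: no lock needed */

    for (long long i = 0; i < NUM_INCREMENTS; ++i)
        local_count++;

    pthread_mutex_lock(&counter_mutex);
    shared_counter += local_count;   /* the only access to shared data */
    pthread_mutex_unlock(&counter_mutex);
    return NULL;
}
```

This works only when other threads do not need the intermediate values of the shared counter. They see each thread's contribution all at once, when it is published.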

Common Mistakes & Troubleshooting

Mutexes are powerful, but they also introduce new classes of potential errors. Understanding these common pitfalls is essential for building robust multithreaded applications.

sequenceDiagram

participant T1 as Thread 1

participant T2 as Thread 2

participant MA as Mutex A

participant MB as Mutex B

T1->>MA: 1. Locks Mutex A

note right of T1: Lock successful

T2->>MB: 2. Locks Mutex B

note left of T2: Lock successful

T1->>MB: 3. Attempts to lock Mutex B

note right of T1: BLOCKED! <br>Waiting for T2 to release.

T2->>MA: 4. Attempts to lock Mutex A

note left of T2: BLOCKED! <br>Waiting for T1 to release.

par

T1->>MB: ...waits forever...

and

T2->>MA: ...waits forever...

end

rect rgba(239, 68, 68, 0.4)

Note over T1,T2: DEADLOCK!<br>Neither thread can proceed.

end
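A standard defence against the deadlock shown above is to make every thread acquire multiple mutexes in one agreed global order. The sketch below assumes a hypothetical account type in which each account carries a unique id. The transfer() function always locks the account with the lower id first. Two transfers in opposite directions therefore can never each hold one lock while waiting for the other.

```c
#include <pthread.h>

struct account {
    int id;                  /* unique; defines the global lock order */
    long balance;            /* protected by lock */
    pthread_mutex_t lock;
};

/* Moves amount from 'from' to 'to' (assumed to be distinct accounts).
 * Locks are always taken lowest id first, whichever way the money flows,
 * so the circular wait from the diagram cannot form. */
void transfer(struct account *from, struct account *to, long amount)
{
    struct account *first  = (from->id < to->id) ? from : to;
    struct account *second = (from->id < to->id) ? to : from;

    pthread_mutex_lock(&first->lock);
    pthread_mutex_lock(&second->lock);

    from->balance -= amount;
    to->balance += amount;

    /* Unlock order does not matter for deadlock avoidance. */
    pthread_mutex_unlock(&second->lock);
    pthread_mutex_unlock(&first->lock);
}
```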

Exercises

- Measure the Performance Overhead: Modify the mutex_fix.c program to measure the time it takes to execute. Use the clock_gettime() function with CLOCK_MONOTONIC to record the time before creating the threads and after joining them. Run both the race_condition and mutex_fix versions several times and compare their average execution times. Quantify the performance overhead introduced by the mutex.

- Create a Deadlock: Write a new program with two threads and two mutexes. Intentionally write the code so that Thread 1 locks mutex A then mutex B, while Thread 2 locks mutex B then mutex A. Use printf statements to show the order of lock attempts. Run the program and observe it hang. The hang depends on timing, so adding a short sleep between the two lock calls in each thread makes it far more reliable. Then, fix the deadlock by enforcing a consistent lock order.

- Protect a Data Structure: Create a program that uses a shared linked list. One thread should be responsible for adding new nodes to the list, while a second thread is responsible for removing nodes from it. Without synchronization, this will quickly corrupt the list's pointers. Use a single mutex to protect all accesses (additions and removals) to the linked list to make the operations thread-safe.

- Implement a Bounded Buffer: A common producer-consumer problem involves a shared buffer of a fixed size. One or more “producer” threads add items to the buffer, and one or more “consumer” threads remove them. Producers must wait if the buffer is full, and consumers must wait if it is empty. Implement this using an array as the buffer, a shared counter for the number of items, and a single mutex to protect both the array and the counter. (Note: A more advanced solution would use condition variables, which will be covered in a later chapter, but a basic version can be built with just a mutex and busy-waiting).

- Explore Mutex Attributes: Modify the mutex_fix.c program to use dynamic initialization with pthread_mutex_init(). Create a pthread_mutexattr_t object and use pthread_mutexattr_settype() to create an error-checking mutex (PTHREAD_MUTEX_ERRORCHECK). Now, add a bug to your code: try to lock the mutex twice in a row within the same thread. Observe how pthread_mutex_lock() now returns an error (EDEADLK) instead of causing a deadlock. This demonstrates how error-checking mutexes can help in debugging.

Summary

- Race Conditions occur when multiple threads access a shared resource concurrently, and the final outcome depends on the unpredictable timing of their execution, often leading to data corruption.

- A Critical Section is a block of code that accesses a shared resource and must not be executed by more than one thread at a time.

- A Mutex (Mutual Exclusion) is a synchronization primitive used to protect a critical section. It acts as a lock, ensuring only one thread can hold the lock at any given time.

- The core pthreads mutex API consists of pthread_mutex_init() (or PTHREAD_MUTEX_INITIALIZER) to create a mutex, pthread_mutex_lock() to acquire it, pthread_mutex_unlock() to release it, and pthread_mutex_destroy() to clean it up.

- Proper mutex management is crucial to avoid common pitfalls like deadlocks, thread starvation, and resource leaks.

- Enforcing a strict lock ordering policy is the primary strategy for preventing deadlocks in systems with multiple mutexes.

Further Reading

- The Single UNIX Specification (POSIX.1-2017): The official standard for pthreads. The pages for pthread_mutex_init, pthread_mutex_lock, and other functions are the ultimate reference.

- Available at: https://pubs.opengroup.org/onlinepubs/9699919799/

- “The Linux Programming Interface” by Michael Kerrisk: An exhaustive and highly respected guide to Linux and UNIX system programming. Chapter 30 provides an excellent in-depth discussion of mutexes.

- Linux man pages, pthreads(7): The Linux manual pages provide concise, authoritative documentation. On your Raspberry Pi, you can type man pthread_mutex_lock for detailed information.

- “Advanced Programming in the UNIX Environment” by W. Richard Stevens and Stephen A. Rago: A classic text that provides deep insights into how UNIX-like systems work, including threading and synchronization.

- Raspberry Pi Foundation Documentation: While not specific to pthreads, the official documentation provides essential information about the hardware and operating system environment you are working in.

- Available at: https://www.raspberrypi.com/documentation/

- “Programming with POSIX Threads” by David R. Butenhof: A comprehensive book dedicated entirely to POSIX threads programming.