Deploying TinyML Models on Microcontrollers – Running AI on Low-Power Embedded Devices

Introduction: The Rise of TinyML in Edge Computing

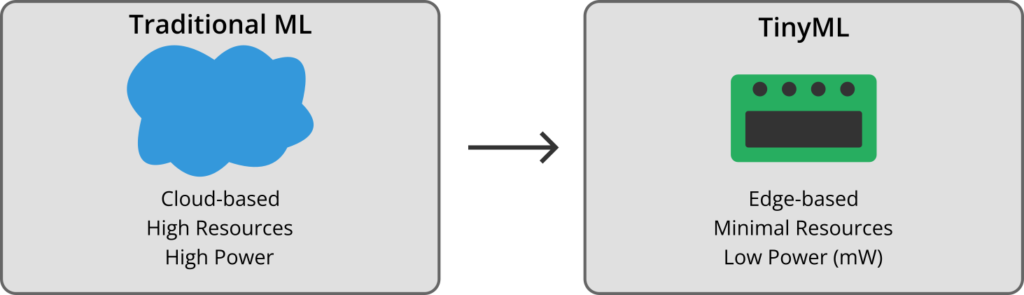

The field of machine learning has witnessed remarkable progress, permeating numerous aspects of modern technology and everyday life. From sophisticated cloud-based systems powering complex applications to embedded devices offering localized intelligence, the reach of artificial intelligence continues to expand. However, traditional machine learning often relies on substantial computational resources and consistent network connectivity, which can be limiting for certain applications.

Scenarios demanding real-time responsiveness, enhanced privacy, or operation in remote or disconnected environments necessitate a different approach. This need has spurred the development of Tiny Machine Learning (TinyML), a specialized subfield focused on bringing machine learning capabilities to resource-constrained edge devices, particularly microcontrollers.

Defining and Understanding TinyML

What is TinyML? Core Concepts and Definitions

TinyML represents a paradigm shift in machine learning, enabling the deployment and execution of machine learning models on devices with extremely limited computational resources. These devices are characterized by their small size, low power consumption (typically in the milliwatt range and below), and constrained processing capabilities.

This interdisciplinary field encompasses not only the development of lightweight algorithms but also the design of specialized hardware and efficient software frameworks capable of analyzing sensor data directly on these devices. By facilitating on-device sensor data analytics, TinyML moves the processing closer to the source of the data, residing at the edge of the network.

While sharing similarities with the broader concept of Edge AI, TinyML distinguishes itself by specifically targeting the smallest microcontrollers (MCUs), which possess even more restrictive resources compared to other edge computing devices. Often, the intelligence within TinyML solutions is driven by neural networks and deep learning techniques, carefully adapted and optimized to function within the severe limitations of these embedded environments.

TinyML is driven by two advances that reinforce each other: hardware that is becoming more capable while drawing less power, and software optimization that yields lightweight algorithms and specialized frameworks. This convergence is what keeps expanding the capabilities and applications of TinyML.

The Significance and Benefits of Bringing AI to Microcontrollers

Running machine learning models on microcontrollers extends AI into domains that were previously out of reach. One projection has TinyML unit volumes growing from an estimated 15 million in 2020 to 2.5 billion by 2030, which suggests how broadly the technology is expected to spread across sectors.

This growth is driven by several benefits:

- Reduced Latency: By processing data locally on the device, TinyML eliminates the need to transmit data to remote servers for inference, thereby minimizing delays and enabling faster response times crucial for real-time applications.

- Energy Savings: Microcontrollers require minimal power to operate, allowing TinyML-powered devices to function for extended periods on small batteries or even through energy harvesting.

- Bandwidth Efficiency: The localized processing inherent in TinyML significantly reduces bandwidth requirements, as only relevant information or decisions, rather than raw sensor data, needs to be transmitted over networks.

- Enhanced Privacy: In an era of increasing concerns about data security, TinyML offers a significant advantage by enabling on-device processing, ensuring that sensitive data does not need to be stored or transmitted to potentially vulnerable cloud servers.

- Offline Operation: TinyML solutions can often function reliably even in the absence of network connectivity, enhancing their utility in remote or infrastructure-limited environments.

- “Always-On” Capabilities: The low power requirements facilitate “always-on” use cases, particularly for battery-operated sensors designed for continuous monitoring.

The Benefits of TinyML on Microcontrollers

Low Latency

Process data directly on device without network delays.

Privacy

Sensitive data stays on device, never sent to the cloud.

Energy Efficiency

Ultra-low power consumption for battery operation.

Offline Operation

Functions without internet connectivity.

Bandwidth Reduction

Only transmits results, not raw sensor data.

Cost Efficiency

Reduces cloud computing and bandwidth costs.

Market Impact:

TinyML devices are projected to grow from 15 million units in 2020 to 2.5 billion by 2030, representing one of the fastest-growing segments in AI technology deployment.

Diverse Applications of TinyML Across Industries

The versatility of TinyML is evident in its expanding range of applications. After early traction in agriculture, industrial predictive maintenance, and customer experience, its capabilities now extend to computer vision tasks such as visual wake words and object detection, audio tasks such as keyword spotting, and motion-based tasks such as gesture recognition.

Its impact is being felt across major sectors including:

Healthcare

- Vital signs monitoring

- Tracking the progression of degenerative health conditions

- Enhancing patient privacy

- Portable diagnostic devices for on-site analysis of medical images and tests

Environmental Monitoring

- Tracking air and water quality

- Monitoring noise pollution

- Observing wildlife activity in real-time

Industrial Applications

- Predictive maintenance systems to monitor machinery and anticipate potential failures

- Safety monitoring, such as detecting hazardous gases

- Automated quality control in manufacturing

- Equipment maintenance

Consumer Electronics

- Smartwatches and wearable health devices

- Home automation systems

- Voice recognition and gesture control

- Energy consumption optimization

Agriculture

- More efficient crop management

- Livestock tracking through real-time data monitoring

- Soil moisture monitoring

- Disease detection in crops

The sheer breadth of these applications highlights that TinyML is not a niche technology but a fundamental shift in how AI can be deployed and utilized, with the potential to revolutionize various aspects of our lives and industries.

Microcontroller Hardware for TinyML Deployment

Key Characteristics: Processing Power, Memory Constraints, and Energy Efficiency

Microcontrollers used for TinyML applications are characterized by limited processing power, constrained memory (typically from a few kilobytes to a few hundred kilobytes of RAM, and up to a few megabytes of flash), and a primary focus on ultra-low power consumption.

Unlike general-purpose processors found in computers and smartphones, MCUs are specifically optimized for executing dedicated tasks with maximum energy efficiency. TinyML targets devices operating at the very edge of the computing spectrum, often functioning within milliwatt power budgets.

Despite these limitations, advancements in both hardware and software have enabled low-power MCUs to effectively support AI-driven functionalities. Modern low-power MCUs are increasingly incorporating specialized hardware accelerators, such as Digital Signal Processors (DSPs) and Neural Processing Units (NPUs), to handle the computational demands of AI workloads more efficiently.

Energy efficiency remains a paramount design consideration for these devices, with features like deep sleep modes and dynamic voltage scaling implemented to minimize power consumption during operation and idle states.
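
To make the effect of deep sleep concrete, the following is a rough sketch of a duty-cycle estimate. The current draws, timings, and battery capacity are hypothetical values chosen for illustration, not measurements of any device in this article, and the model ignores wake-up overhead, self-discharge, and regulator losses.

```python
def average_current_ma(active_ma, sleep_ma, active_ms, period_ms):
    """Time-weighted average current for a device that wakes once per period."""
    duty = active_ms / period_ms
    return active_ma * duty + sleep_ma * (1.0 - duty)


def battery_life_days(capacity_mah, avg_ma):
    """Idealized battery life in days for a constant average current."""
    return capacity_mah / avg_ma / 24.0


# Hypothetical workload: 5 mA for 20 ms of inference each second, 2 uA asleep.
avg = average_current_ma(active_ma=5.0, sleep_ma=0.002, active_ms=20, period_ms=1000)

# Hypothetical 220 mAh cell (roughly coin-cell sized).
days = battery_life_days(capacity_mah=220, avg_ma=avg)

print(f"average current: {avg:.4f} mA, estimated life: {days:.0f} days")
```

In this sketch the sleep current contributes very little to the average, so shortening the active window (a faster or smaller model) has the largest effect on battery life.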

Overview of Popular Microcontroller Families for TinyML

A diverse range of microcontroller families and development boards is available to facilitate TinyML development and deployment. The STM32 family of microcontrollers, including ultra-low-power series like STM32L4 and STM32U5, is widely used in embedded systems and has strong support for TinyML through tools like STM32Cube.AI, which helps optimize models for these MCUs.

| MCU | Architecture | CPU Frequency | Flash Memory | RAM | ML Accelerator | Power Consumption | Typical Applications |
|---|---|---|---|---|---|---|---|
| STM32L4+ | ARM Cortex-M4F | Up to 120 MHz | Up to 2 MB | Up to 640 KB | DSP instructions | 35 μA/MHz | Audio processing, sensor fusion |
| ESP32-S3 | Dual-core Xtensa LX7 | Up to 240 MHz | Up to 16 MB | Up to 512 KB | Vector instructions | 5-20 mA | Voice assistants, smart sensors |
| Nordic nRF52840 | ARM Cortex-M4F | 64 MHz | 1 MB | 256 KB | No | 5.4 mA @ 3V | Wearables, BLE devices |
| MAX78000 | ARM Cortex-M4F | 100 MHz | 512 KB | 128 KB | CNN accelerator | 0.7 mA @ 1.8V | Vision/audio AI applications |
| Ambiq Apollo4 | ARM Cortex-M4F | Up to 192 MHz | Up to 2 MB | Up to 2.75 MB | No | < 1 mA/MHz | Ultra-low power wearables |
| RP2040 | Dual Cortex-M0+ | Up to 133 MHz | 2-16 MB external | 264 KB | No | ~20 mA | Hobby projects, sensors |
| NXP i.MX RT1050 | ARM Cortex-M7 | Up to 600 MHz | External Flash | 512 KB | No | ~100 mA | High-performance edge AI |

Companies like Ambiq have launched microcontroller families, such as the Apollo330, specifically engineered for always-on edge AI using innovative sub-threshold technology and ARM Cortex-M55 cores. Analog Devices offers AI microcontrollers like the MAX78000 and MAX78002, which incorporate built-in neural network hardware accelerators for accelerated inference.

The variety of microcontroller boards and families available for TinyML indicates a maturing ecosystem with options for different application needs and developer preferences. Active support from major microcontroller manufacturers, together with the development of specialized tools, signals a strong commitment from the hardware industry to TinyML adoption.

Considerations for Selecting the Right Microcontroller

Choosing the appropriate microcontroller for a TinyML project is a critical decision that significantly impacts the success of the deployment. The selection process is largely driven by the specific requirements of the intended application, including:

- The complexity of the machine learning model to be deployed

- The desired inference speed or latency

- The overall power budget for the device

- The necessary peripheral interfaces such as sensors and communication modules

Memory capacity, encompassing both RAM for runtime operations and flash memory for storing the model and firmware, is a fundamental constraint that must be carefully considered. Similarly, the processing power of the microcontroller will determine the feasibility of executing the model within the required latency constraints.
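
As a rough illustration of the memory check described above, the sketch below tests whether a model and its firmware fit a given flash and RAM budget. The `McuBudget` type, the `fits` helper, and the workload sizes are hypothetical; the flash and RAM figures come from the comparison table earlier in this section, and the check treats each part as a plain flash/RAM budget without accounting for any accelerator-specific memory.

```python
from dataclasses import dataclass


@dataclass
class McuBudget:
    flash_kb: int
    ram_kb: int


def fits(budget, model_kb, firmware_kb, arena_kb, other_ram_kb):
    """Return (flash_ok, ram_ok) for a model plus firmware on a given MCU."""
    flash_ok = model_kb + firmware_kb <= budget.flash_kb
    ram_ok = arena_kb + other_ram_kb <= budget.ram_kb
    return flash_ok, ram_ok


# Flash/RAM values taken from the MCU comparison table.
nrf52840 = McuBudget(flash_kb=1024, ram_kb=256)
max78000 = McuBudget(flash_kb=512, ram_kb=128)

# Hypothetical workload: sizes are illustrative assumptions only.
workload = dict(model_kb=300, firmware_kb=250, arena_kb=100, other_ram_kb=40)

print("nRF52840:", fits(nrf52840, **workload))
print("MAX78000:", fits(max78000, **workload))
```

A check like this is only a first filter; the actual tensor arena size usually has to be measured on the target after the model is converted.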

For many TinyML applications, especially those intended for battery-powered or always-on operation, energy efficiency is a paramount concern that will heavily influence the choice of microcontroller. Finally, the availability of robust software support, including compatible TinyML frameworks and libraries, for a particular microcontroller is a crucial factor that can significantly ease the development process.

Selecting a microcontroller for TinyML involves a multi-faceted decision-making process where various hardware characteristics must be carefully weighed against the specific demands of the intended application. There is no one-size-fits-all solution, and developers must carefully evaluate the trade-offs between performance, power consumption, cost, and software ecosystem.

The Workflow of Developing TinyML Models

flowchart TB

A[Data Collection] --> B[Data Preprocessing]

B --> C[Model Design]

C --> D[Model Training]

D --> E[Model Optimization]

E --> F[Model Conversion]

F --> G[Deployment to MCU]

G --> H[Testing & Validation]

H --> I{Performance\nAcceptable?}

I -->|No| J[Refine Model]

J --> D

I -->|Yes| K[Production Deployment]

subgraph Desktop/Cloud

A

B

C

D

E

F

end

subgraph Microcontroller

G

H

I

K

end

style Desktop/Cloud fill:#f5f5ff,stroke:#9999ff,stroke-width:2px

style Microcontroller fill:#f5fff5,stroke:#99ff99,stroke-width:2px

Data Acquisition and Preparation for Resource-Constrained Environments

The development workflow for TinyML applications shares similarities with traditional machine learning pipelines but necessitates careful adaptation to the constraints of resource-limited devices. The initial step, data acquisition, typically involves gathering sensor data relevant to the specific application. This could include data from temperature sensors, accelerometers, microphones, or even low-resolution cameras.

Given the limited processing power and memory of microcontrollers, efficient data acquisition and preprocessing are paramount. Data preprocessing is a crucial stage where raw sensor data is cleaned, noise is reduced, and input features are normalized to prepare the data for effective model training on resource-constrained devices.
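
A minimal sketch of this kind of preprocessing is shown below: it slices a multi-axis signal into overlapping windows and normalizes each axis. The window length, step, and synthetic signal are assumptions for illustration, and `make_windows` and `zscore` are hypothetical helper names.

```python
import numpy as np


def make_windows(samples, window, step):
    """Slice a (n_samples, n_axes) signal into overlapping windows."""
    starts = range(0, len(samples) - window + 1, step)
    return np.stack([samples[s:s + window] for s in starts])


def zscore(windows, mean, std):
    """Normalize each axis with statistics computed on the training set."""
    return (windows - mean) / std


rng = np.random.default_rng(0)
signal = rng.normal(size=(1000, 3)).astype(np.float32)  # stand-in for sensor data

# Per-axis statistics; the same values must be reused on the device.
mean = signal.mean(axis=0)
std = signal.std(axis=0) + 1e-8  # avoid division by zero on a constant axis

windows = zscore(make_windows(signal, window=128, step=64), mean, std)
print(windows.shape)
```

The mean and standard deviation computed here would then be stored in the firmware so that on-device inputs are scaled the same way as the training data.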

Strategies that minimize the amount of raw data collected and optimize its format for model training are essential for successful TinyML development. In scenarios where collecting large amounts of real-world data on edge devices might be challenging, techniques like data augmentation can be employed to artificially increase the size and diversity of the training dataset, thereby improving the robustness and generalization ability of the model.
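
The augmentation idea can be sketched for time-series sensor data as follows. The specific transforms (amplitude scaling, additive noise, circular time shift) and their parameter ranges are illustrative assumptions; suitable augmentations depend on the sensor and the task.

```python
import numpy as np


def augment(window, rng, noise_std=0.05, scale_range=(0.9, 1.1), max_shift=8):
    """Return a rescaled, jittered, time-shifted copy of one (time, axes) window."""
    out = window * rng.uniform(*scale_range)                # amplitude scaling
    out = out + rng.normal(0.0, noise_std, size=out.shape)  # additive sensor noise
    shift = int(rng.integers(-max_shift, max_shift + 1))
    return np.roll(out, shift, axis=0)                      # circular time shift


rng = np.random.default_rng(42)

# Synthetic 3-axis window standing in for a recorded sample.
original = np.sin(np.linspace(0, 4 * np.pi, 128))[:, None].repeat(3, axis=1)

augmented = np.stack([augment(original, rng) for _ in range(5)])
print(augmented.shape)
```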

The need for data preprocessing on resource-constrained devices might necessitate a trade-off between the complexity of preprocessing techniques and the available computational resources. Simpler, more efficient preprocessing methods might be preferred over computationally intensive ones to ensure real-time performance on the microcontroller.

Model Training Methodologies and Frameworks for TinyML

Machine learning models intended for TinyML deployment are often trained using traditional machine learning platforms like TensorFlow or PyTorch on more powerful computers or cloud-based systems. This separation of the training phase from the resource-constrained deployment environment allows developers to leverage the computational power needed for complex model training.

| Framework | Key Features | Supported Hardware | Optimization Techniques | Ease of Use | Integration | Best For |
|---|---|---|---|---|---|---|
| TensorFlow Lite Micro | C++ library for microcontrollers; broad ecosystem; active community support | ARM Cortex-M; ESP32; Arduino; STM32 | Post-training quantization; pruning; weight clustering | ⭐⭐⭐ | TensorFlow ecosystem; Arduino IDE; PlatformIO | Projects needing TensorFlow compatibility; wide range of MCUs |
| Edge Impulse | End-to-end development platform; no-code/low-code options; built-in data collection | ARM Cortex-M; ESP32; Nordic nRF; many others | Automated optimization; feature selection; custom DSP blocks | ⭐⭐⭐⭐⭐ | Web-based; CLI tools; direct deployment | Rapid prototyping; custom sensor projects; non-experts |
| uTensor | Lightweight C++ inference; smaller code size; low memory footprint | ARM Cortex-M; RISC-V; limited support | Manual optimization; basic quantization | ⭐⭐ | Mbed OS; custom firmware | Ultra-constrained devices; specialized applications |
| Neuton TinyML | Autonomous model design; ultra-compact models; self-optimization | 8-bit AVR; ARM Cortex-M; ESP32; STM32 | Automatic architecture selection; advanced quantization | ⭐⭐⭐⭐ | Web platform; C/C++ code export | Extremely resource-constrained MCUs; simple deployment |
| STM32Cube.AI | Optimized for STM32; hardware-specific tuning; performance analysis | STM32 family | Hardware-aware optimization; layer fusion; quantization | ⭐⭐⭐ | STM32CubeIDE; X-CUBE-AI | STM32 development; production-grade deployment |
| CMSIS-NN | Low-level optimization; efficient math operations; ARM architecture focus | ARM Cortex-M; ARM Cortex-A | Instruction-level optimization; fixed-point arithmetic | ⭐⭐ | ARM development tools; used by other frameworks | Maximum performance; custom implementations |

Transfer learning can be a particularly valuable technique in TinyML development. By leveraging pre-trained models developed for similar tasks on larger datasets, developers can adapt these models for specific TinyML applications with significantly less data and computational effort.

TensorFlow Lite for Microcontrollers (TF Lite Micro) stands out as a popular framework specifically designed for implementing machine learning on embedded systems with limited memory. It provides optimized libraries and tools for running inference efficiently on various microcontroller architectures.

| Methodology | Pros | Cons | Best For |
|---|---|---|---|
| Transfer Learning | Requires less data; faster training; leverages pre-trained knowledge | May not work for specialized domains; still requires optimization for MCUs | Common computer vision; audio tasks; limited data availability |
| Quantization-Aware Training | Better accuracy for quantized models; smaller model size; lower inference latency | More complex training process; requires framework support | Production deployment; when accuracy is critical |
| Post-Training Quantization | Simple to implement; works with existing models; no retraining required | Potentially higher accuracy loss; less optimal for extreme quantization | Quick prototyping; initial deployments |
| Pruning & Compression | Removes redundant parameters; significant size reduction | May require fine-tuning; complex to implement manually | Complex models; severe memory constraints |
| Knowledge Distillation | Compact student models; better generalization; focused learning | Requires training two models; careful teacher selection | Complex tasks; when larger teacher models exist |
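
To illustrate the knowledge distillation row, here is a minimal NumPy sketch of one common formulation of the distillation loss: a temperature-softened KL term between teacher and student outputs, blended with ordinary cross-entropy on the true labels. The `temperature` and `alpha` values and the toy logits are assumptions for illustration; a real training loop would compute this inside a framework such as TensorFlow or PyTorch.

```python
import numpy as np


def softmax(logits, temperature=1.0):
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, labels,
                      temperature=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher || student) + (1 - alpha) * hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student)), axis=-1)
    hard = softmax(student_logits)
    ce = -np.log(hard[np.arange(len(labels)), labels])
    return float(np.mean(alpha * temperature**2 * kl + (1 - alpha) * ce))


# Toy logits: two samples, three classes.
teacher = np.array([[5.0, 0.0, 0.0], [0.0, 5.0, 0.0]])
labels = np.array([0, 1])
good_student = teacher.copy()       # agrees with the teacher
bad_student = teacher[::-1].copy()  # predicts the wrong class for both samples

print(distillation_loss(good_student, teacher, labels))
print(distillation_loss(bad_student, teacher, labels))
```

The student that matches the teacher receives the lower loss, which is the signal that pulls a compact model toward the larger model's behavior during training.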

Other notable frameworks include uTensor and Edge Impulse, which also facilitate the optimization and deployment of models on low-power devices. Edge Impulse offers a comprehensive platform encompassing data collection, model training, and streamlined deployment to embedded targets. Neuton TinyML is another framework that supports a wide range of microcontrollers, including 8, 16, and 32-bit architectures, and aims to simplify the process of building and deploying neural networks with minimal coding required.

Crucial Model Optimization Techniques: Quantization, Pruning, and Compression

Deploying machine learning models on microcontrollers with limited memory and processing power makes model optimization essential. Several key techniques reduce the size and computational complexity of trained models without significant loss in accuracy:

- Quantization: A widely used method that reduces the precision of the model’s weights and activations, often converting them from 32-bit floating-point numbers to more compact 8-bit integers. This not only decreases the model’s memory footprint but also enhances the speed of inference and reduces power consumption.

- Pruning: An effective optimization technique that involves removing redundant or less significant parameters from the model, further shrinking its size and decreasing the computational resources required for execution.

- Knowledge Distillation: An alternative approach where a smaller, more efficient “student” model is trained to mimic the behavior and outputs of a larger, more complex “teacher” model.

- Other Techniques: Projection and data type conversion can also be employed to further tailor the model for resource-constrained environments.
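
The arithmetic behind 8-bit quantization can be sketched in a few lines of NumPy. This is an illustrative affine (scale and zero point) scheme, not the exact procedure of any particular converter; the helper names and the random weights are assumptions.

```python
import numpy as np


def quantize_params(x, qmin=-128, qmax=127):
    """Derive an affine scale and zero point covering the observed range of x."""
    lo, hi = min(float(x.min()), 0.0), max(float(x.max()), 0.0)  # range includes 0
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Map real values to int8: q = round(x / scale) + zero_point, then clip."""
    return np.clip(np.round(x / scale) + zero_point, qmin, qmax).astype(np.int8)


def dequantize(q, scale, zero_point):
    """Recover approximate real values: x ~= (q - zero_point) * scale."""
    return (q.astype(np.float32) - zero_point) * scale


rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.5, size=1000).astype(np.float32)  # stand-in weights

scale, zero_point = quantize_params(weights)
q = quantize(weights, scale, zero_point)
max_error = float(np.abs(dequantize(q, scale, zero_point) - weights).max())

print(f"scale={scale:.5f} zero_point={zero_point} max_error={max_error:.5f}")
print(f"{weights.nbytes} bytes as float32 -> {q.nbytes} bytes as int8")
```

The stored tensor shrinks by a factor of four, and the reconstruction error per value is bounded by roughly half of `scale`, which is why tensors with a narrow value range quantize with little accuracy loss.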

flowchart TD

A[Full-size Neural Network]

B[Model Architecture Optimization]

C[Weight Pruning]

D[Knowledge Distillation]

E[Quantization]

F[Post-Training Quantization]

G[Quantization-Aware Training]

H[Weight Clustering]

I[Optimized TinyML Model]

A --> B

A --> C

A --> D

B --> E

C --> E

D --> E

E --> F

E --> G

E --> H

F --> I

G --> I

H --> I

subgraph "Step 1: Model Size Reduction"

B

C

D

end

subgraph "Step 2: Numerical Precision Optimization"

E

F

G

H

end

classDef primary fill:#3498db,stroke:#333,stroke-width:2px,color:white;

classDef secondary fill:#2ecc71,stroke:#333,stroke-width:2px,color:white;

classDef tertiary fill:#e74c3c,stroke:#333,stroke-width:2px,color:white;

class A,I primary;

class B,C,D secondary;

class E,F,G,H tertiary;

Model optimization is not just about reducing model size; it’s a holistic process that aims to achieve the best possible balance between model accuracy, inference speed, and resource utilization on the target microcontroller.
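
The weight pruning step in the diagram can be illustrated with a simple magnitude-based sketch. It zeroes the smallest-magnitude weights of a single layer; the layer shape, the sparsity level, and the `magnitude_prune` helper are assumptions for illustration, and practical pruning is usually applied gradually during fine-tuning.

```python
import numpy as np


def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(sparsity * weights.size)  # number of weights to remove
    if k == 0:
        return weights.copy(), np.ones_like(weights, dtype=bool)
    # The k-th smallest magnitude becomes the pruning threshold.
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    mask = np.abs(weights) > threshold
    return weights * mask, mask


rng = np.random.default_rng(1)
layer = rng.normal(size=(64, 32))  # stand-in for one dense layer's weights

pruned, mask = magnitude_prune(layer, sparsity=0.75)
print(f"kept {int(mask.sum())} of {layer.size} weights")
```

Zeroed weights only reduce storage when the model is subsequently compressed or stored in a sparse format, so pruning is typically combined with the other techniques shown here.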

The following script applies three levels of TensorFlow Lite quantization to a trained Keras model and exports the smallest result as a C array:

import numpy as np
import tensorflow as tf

# Load your pre-trained model
model = tf.keras.models.load_model('my_model.h5')


# Define a representative dataset for quantization
def representative_dataset():
    # Use a small subset of your training or validation data here.
    # This helps the quantizer understand the typical input ranges.
    # The random values below are only a placeholder for MNIST-like data.
    for _ in range(100):
        data = np.random.rand(1, 28, 28, 1) * 255
        yield [data.astype(np.float32)]


# 1. Float16 quantization - roughly halves model size with minimal accuracy loss
converter = tf.lite.TFLiteConverter.from_keras_model(model)
converter.optimizations = [tf.lite.Optimize.DEFAULT]
converter.target_spec.supported_types = [tf.float16]
float16_tflite_model = converter.convert()

# Save the float16 model
with open('model_float16.tflite', 'wb') as f:
    f.write(float16_tflite_model)
print(f"Float16 model size: {len(float16_tflite_model) / 1024:.2f} KB")

# 2. Integer quantization with float fallback - about 4x smaller, more accuracy impact
#    (inputs and outputs stay float32)
converter = tf.lite.TFLiteConverter.from_keras_model(model)
converter.optimizations = [tf.lite.Optimize.DEFAULT]
converter.representative_dataset = representative_dataset
int8_tflite_model = converter.convert()

# Save the int8 model
with open('model_int8.tflite', 'wb') as f:
    f.write(int8_tflite_model)
print(f"Int8 model size: {len(int8_tflite_model) / 1024:.2f} KB")

# 3. Full integer quantization - maximum size reduction for microcontrollers
converter = tf.lite.TFLiteConverter.from_keras_model(model)
converter.optimizations = [tf.lite.Optimize.DEFAULT]
converter.representative_dataset = representative_dataset
converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
converter.inference_input_type = tf.int8  # or tf.uint8
converter.inference_output_type = tf.int8  # or tf.uint8
full_int8_tflite_model = converter.convert()

# Save the fully quantized model
with open('model_full_int8.tflite', 'wb') as f:
    f.write(full_int8_tflite_model)
print(f"Fully quantized model size: {len(full_int8_tflite_model) / 1024:.2f} KB")


# Optional: convert to a C array for direct inclusion in microcontroller code
def convert_to_c_array(model_path, array_name):
    with open(model_path, 'rb') as model_file:
        model_data = model_file.read()

    c_file = f"const unsigned char {array_name}[] = {{"
    for i, byte_val in enumerate(model_data):
        if i % 12 == 0:
            c_file += '\n  '  # start a new row every 12 bytes
        c_file += f"0x{byte_val:02x}, "
    c_file += "\n};\n"
    c_file += f"const unsigned int {array_name}_len = {len(model_data)};\n"

    # The header is named after the array, e.g. g_model.h
    with open(f"{array_name}.h", 'w') as header_file:
        header_file.write(c_file)


# Convert the fully quantized model to a C array (writes g_model.h)
convert_to_c_array('model_full_int8.tflite', 'g_model')
print("Generated C header file for microcontroller deployment")

This code demonstrates the essential process of optimizing a neural network model for deployment on microcontrollers using TensorFlow Lite. Here’s a breakdown of what each section accomplishes:

- Model Loading: The code begins by loading a pre-trained Keras model, which serves as the starting point for optimization.

- Representative Dataset Creation: The function representative_dataset() provides sample input data that lets the converter calibrate the typical range of values the model will encounter. This is crucial for maintaining accuracy during quantization. The random values in the example are placeholders; in practice, use real samples from the training or validation set.

- Float16 Quantization: The first optimization technique converts 32-bit floating-point weights to 16-bit floating-point format. This reduces the model size by approximately 50% with minimal impact on accuracy, making it a good first step for model compression.

- Integer Quantization: The second technique supplies the representative dataset, so the converter quantizes weights and activations to 8-bit integers wherever it can, while keeping float32 inputs and outputs and falling back to floating point for any operation that lacks an integer implementation. The result is roughly 75% smaller than the original, with moderate accuracy impact. (Weights-only quantization, also called dynamic range quantization, is what the converter applies when no representative dataset is provided.)

- Full Integer Quantization: The most aggressive optimization requires every operation, including the model's input and output, to use 8-bit integers; conversion fails if an operation has no int8 implementation. This produces the smallest model (often 25% of the original) and enables the use of integer-only hardware acceleration on microcontrollers. While this may have the largest accuracy impact, it’s typically the best choice for extremely constrained devices.

- C Array Conversion: The final step converts the optimized model into a C header file (g_model.h in this example) containing the model data as a byte array. This allows the model to be directly compiled into the microcontroller firmware, eliminating the need for file system operations on devices that may not support them.

Each optimization level represents a different trade-off between model size, inference speed, and accuracy. For TinyML applications, the fully quantized model is often preferred due to its minimal memory footprint and compatibility with integer-only arithmetic on low-power microcontrollers. The generated header file can be directly included in microcontroller projects using TensorFlow Lite Micro or similar embedded ML frameworks.
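
The size side of this trade-off follows from simple arithmetic on bytes per weight, sketched below. The parameter count is a hypothetical example, and the estimate ignores graph metadata, quantization parameters, and any operations left in floating point, so real file sizes will differ somewhat.

```python
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def estimated_weight_kb(num_params, dtype):
    """Approximate weight storage in KB, ignoring graph metadata and scales."""
    return num_params * BYTES_PER_WEIGHT[dtype] / 1024


num_params = 50_000  # hypothetical small model

for dtype in BYTES_PER_WEIGHT:
    print(f"{dtype:>8}: {estimated_weight_kb(num_params, dtype):7.1f} KB")
```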

Deployment Strategies and Tools for Microcontrollers

Model Conversion and Optimization for Embedded Systems

Once a machine learning model has been trained and optimized, it needs to be converted into a format that can be efficiently interpreted and executed by the target microcontroller. This conversion process often involves further optimization steps specifically tailored for the architecture and capabilities of the embedded system.

Frameworks like TensorFlow Lite provide tools for converting trained TensorFlow models into a compact FlatBuffer format (the .tflite file), which is designed to be lightweight and readily loaded onto edge devices. A crucial aspect of this conversion is selecting the most appropriate numerical representation for the model’s parameters and operations. This might mean choosing between different floating-point precisions or opting for integer arithmetic, depending on the requirements and capabilities of the microcontroller.

The model conversion process bridges the gap between the training environment and the resource-constrained deployment environment. It often involves further quantization and other optimizations tailored to the specific microcontroller architecture.

The following Arduino-style example loads the converted model with TensorFlow Lite Micro and runs activity recognition on accelerometer data:

#include <TensorFlowLite.h>

#include "tensorflow/lite/micro/all_ops_resolver.h"

#include "tensorflow/lite/micro/micro_error_reporter.h"

#include "tensorflow/lite/micro/micro_interpreter.h"

#include "tensorflow/lite/schema/schema_generated.h"

#include "tensorflow/lite/version.h"

// Include the generated model header

#include "g_model.h" // Generated by the conversion script; contains g_model[] and g_model_len

// Include sensor interface for data collection

#include "accelerometer.h" // Hypothetical accelerometer interface

// Constants for inference

constexpr int kTensorArenaSize = 30 * 1024; // Allocate memory for tensor arena

constexpr int kFeatureSize = 128; // Input size for model

constexpr int kNumClasses = 6; // Number of activity classes

// Global variables

uint8_t tensor_arena[kTensorArenaSize];

// Inference results - activity types

const char* ACTIVITIES[] = {

"Walking", "Running", "Standing", "Sitting", "Upstairs", "Downstairs"

};

// TFLite globals

tflite::ErrorReporter* error_reporter = nullptr;

const tflite::Model* model = nullptr;

tflite::MicroInterpreter* interpreter = nullptr;

TfLiteTensor* input = nullptr;

TfLiteTensor* output = nullptr;

int8_t feature_buffer[kFeatureSize]; // Buffer to hold extracted features

int samples_collected = 0;

int32_t previous_time = 0;

// Preprocessing normalizing parameters (from training)

const float ACCEL_MEAN[3] = {0.0, 9.81, 0.0}; // x, y, z means

const float ACCEL_STD[3] = {3.5, 3.5, 3.5}; // x, y, z standard deviations

// Function prototypes

void setup_tflite();

void collect_accelerometer_data();

void extract_features();

void run_inference();

void handle_result(uint8_t predicted_activity, float confidence);

void setup() {

Serial.begin(115200);

while (!Serial);

Serial.println("TinyML Human Activity Recognition");

// Initialize accelerometer

if (!accelerometer_init()) {

Serial.println("Failed to initialize accelerometer!");

while (1);

}

// Initialize TensorFlow Lite model

setup_tflite();

// Set initial timestamp

previous_time = millis();

}

void loop() {

// Collect sensor data at appropriate intervals

if (millis() - previous_time >= 20) { // 50Hz sampling rate

collect_accelerometer_data();

previous_time = millis();

}

// When we have enough samples, extract features and run inference

if (samples_collected >= 128) { // We use a window of 128 samples

extract_features();

run_inference();

samples_collected = 0; // Reset counter

}

}

void setup_tflite() {

// Set up logging

static tflite::MicroErrorReporter micro_error_reporter;

error_reporter = &micro_error_reporter;

// Map the model into a usable data structure

model = tflite::GetModel(g_model);

// Verify the model schema version

if (model->version() != TFLITE_SCHEMA_VERSION) {

error_reporter->Report("Model schema version mismatch!");

return;

}

// Create an all operations resolver (potentially large code size)

// In production, use a selective resolver with only needed ops

static tflite::AllOpsResolver resolver;

// Build an interpreter to run the model

static tflite::MicroInterpreter static_interpreter(

model, resolver, tensor_arena, kTensorArenaSize, error_reporter);

interpreter = &static_interpreter;

// Allocate memory from the tensor arena for model tensors

TfLiteStatus allocate_status = interpreter->AllocateTensors();

if (allocate_status != kTfLiteOk) {

error_reporter->Report("AllocateTensors() failed");

return;

}

// Get pointers to input and output tensors

input = interpreter->input(0);

output = interpreter->output(0);

// Confirm input dimensions match our expectations

if (input->dims->size != 2 || input->dims->data[0] != 1 ||

input->dims->data[1] != kFeatureSize) {

error_reporter->Report("Input tensor has incorrect dimensions");

return;

}

// Check input type - should match the quantization settings

if (input->type != kTfLiteInt8) {

error_reporter->Report("Input tensor has incorrect type");

return;

}

Serial.println("TensorFlow Lite model initialized");

}

void collect_accelerometer_data() {

// Get accelerometer data

float ax, ay, az;

accelerometer_read(&ax, &ay, &az);

// Store raw data for feature extraction

if (samples_collected < 128) {

// Store data in a buffer for feature extraction

// This is simplified - in real application, you would store

// all samples and then extract features

// Here we're just calculating a very simple feature -

// the normalized accelerometer values

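float normalized = (ax - ACCEL_MEAN[0]) / ACCEL_STD[0];

// Quantize with the input tensor's parameters: q = round(x / scale) + zero_point

int32_t quantized = (int32_t)roundf(normalized / input->params.scale) + input->params.zero_point;

feature_buffer[samples_collected] = (int8_t)constrain(quantized, -128, 127);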

samples_collected++;

}

}

void extract_features() {

// In a real application, this would extract meaningful features from

// the raw accelerometer data - like frequency domain features,

// statistical measures, etc.

// For this example, we're using the already extracted simple features

// directly stored in the feature_buffer

// Copy feature buffer to model input tensor

for (int i = 0; i < kFeatureSize; i++) {

input->data.int8[i] = feature_buffer[i];

}

Serial.println("Features extracted and prepared for inference");

}

void run_inference() {

// Run the model on the input data

TfLiteStatus invoke_status = interpreter->Invoke();

if (invoke_status != kTfLiteOk) {

error_reporter->Report("Invoke failed");

return;

}

// Get the highest probability activity class

int8_t max_score = output->data.int8[0];

int max_index = 0;

for (int i = 1; i < kNumClasses; i++) {

if (output->data.int8[i] > max_score) {

max_score = output->data.int8[i];

max_index = i;

}

}

// Convert from int8 to floating point confidence

float confidence = (max_score - output->params.zero_point) * output->params.scale;

// Handle the result

handle_result(max_index, confidence);

}

void handle_result(uint8_t predicted_activity, float confidence) {

// Log the prediction result

Serial.print("Predicted activity: ");

Serial.print(ACTIVITIES[predicted_activity]);

Serial.print(" (Confidence: ");

Serial.print(confidence * 100);

Serial.println("%)");

// Take action based on the detected activity

// For example, adjust power modes, trigger alerts, etc.

if (confidence > 0.8) {

// High confidence prediction - could trigger specific actions

if (predicted_activity == 1) { // Running

// Perhaps enter a high-sampling-rate mode

Serial.println("Detected running with high confidence!");

} else if (predicted_activity == 2 || predicted_activity == 3) { // Stationary

// Perhaps enter a power-saving mode

Serial.println("Detected stationary state, reducing sampling rate");

}

}

}

This code demonstrates a practical implementation of TinyML for human activity recognition on a microcontroller. Here’s a breakdown of what each section accomplishes:

- Setup and Initialization: The code begins by including necessary TensorFlow Lite for Microcontrollers libraries and setting up the environment. It allocates memory for the tensor arena (the workspace where TensorFlow Lite performs its operations) and defines constants for the model’s input and output characteristics.

- TensorFlow Lite Model Loading: In the setup_tflite() function, the code loads the pre-compiled model from a header file, initializes the interpreter, and allocates tensors. The model was previously converted to a C array using the optimization techniques shown in the previous code example.

- Sensor Data Collection: The collect_accelerometer_data() function gathers raw accelerometer readings at a regular interval (50 Hz sampling rate) and applies initial preprocessing, including normalization and quantization to match the model’s int8 input requirements.

- Feature Extraction: While this example uses simple normalized values, the extract_features() function represents where more sophisticated feature engineering would occur in a real-world application. Features are copied into the model’s input tensor in preparation for inference.

- Inference Execution: The run_inference() function executes the TinyML model on the prepared input data. It then processes the quantized output to find the most probable activity class and converts the confidence score back to a floating-point representation.

- Result Handling: The handle_result() function demonstrates how inference results can be used to make decisions in an embedded system. In this case, it adapts the device’s behavior based on the detected activity, such as adjusting sampling rates for different activity levels.

- Main Processing Loop: The loop() function orchestrates the entire process, collecting data until enough samples are gathered for a complete inference, then triggering the feature extraction and inference steps.

This implementation showcases key aspects of TinyML deployment on microcontrollers: efficient memory usage through quantization, real-time sensor data processing, and adaptive device behavior based on AI-driven insights. The code balances performance requirements with the severe resource constraints typical of microcontroller environments.
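The int8 quantization mentioned above relies on an affine mapping between real values and 8-bit integers: q = round(x / scale) + zero_point, with x ≈ (q - zero_point) * scale as the inverse (the same inverse that run_inference() applies to the output score). The following is a minimal standalone sketch of that mapping. The helper names quantize_int8 and dequantize_int8 are hypothetical and not part of TensorFlow Lite for Microcontrollers; in the sketch above, the scale and zero point would come from input->params and output->params.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Affine quantization: q = round(x / scale) + zero_point, saturated to int8.
// Hypothetical helper for illustration, not a TensorFlow Lite Micro API.
int8_t quantize_int8(float x, float scale, int32_t zero_point) {
  assert(scale > 0.0f);
  long q = std::lround(x / scale) + zero_point;
  if (q < -128) q = -128;  // saturate instead of wrapping around
  if (q > 127) q = 127;
  return static_cast<int8_t>(q);
}

// Inverse mapping: x is approximately (q - zero_point) * scale.
float dequantize_int8(int8_t q, float scale, int32_t zero_point) {
  return static_cast<float>(q - zero_point) * scale;
}
```

Saturating rather than wrapping matters here: an out-of-range sensor reading should map to the nearest representable value, not flip sign.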

Firmware Integration and Deployment Processes

The actual deployment of a TinyML model onto a microcontroller involves integrating the converted and optimized model into the device’s firmware. This process typically requires utilizing the integrated development environment (IDE) and toolchain provided by the microcontroller manufacturer or the specific TinyML framework being used.

Developers write code that handles the input of data from sensors, feeds this data to the deployed machine learning model, and then acts upon the model’s output or predictions. Before deploying to physical hardware, hardware-in-the-loop (HIL) simulation can be a valuable technique. HIL simulation allows developers to simulate and test their TinyML models in a virtual real-time environment that closely represents the physical system. This enables thorough validation of the model’s performance and identification of potential issues before the model is actually deployed to the target microcontroller.

Firmware integration requires a good understanding of both the machine learning model and the embedded software development process. Thorough testing and validation are crucial to ensure that the deployed TinyML model performs correctly and reliably in the target environment.
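A recurring piece of this integration code is the buffer that sits between the sensor driver and the model: samples arrive one at a time, while the model expects a full window. The sketch below shows one possible fixed-size window with overlap between consecutive inferences. The SampleWindow class and its method names are hypothetical, and the window and hop sizes are assumptions to be tuned per application.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Fixed-size sample window with no dynamic allocation (hypothetical helper).
template <std::size_t N>
class SampleWindow {
 public:
  // Adds one sample; returns true once the window holds N samples.
  bool push(float sample) {
    assert(count_ < N);  // call slide() before pushing into a full window
    data_[count_++] = sample;
    return count_ == N;
  }

  // Drops the oldest `hop` samples so the next window overlaps this one.
  void slide(std::size_t hop) {
    assert(hop > 0 && hop <= N && count_ == N);
    for (std::size_t i = hop; i < N; ++i) {
      data_[i - hop] = data_[i];
    }
    count_ = N - hop;
  }

  const std::array<float, N>& samples() const { return data_; }
  std::size_t size() const { return count_; }

 private:
  std::array<float, N> data_{};
  std::size_t count_ = 0;
};
```

In a main loop, the firmware would call push() for every new reading, run inference on samples() when push() returns true, and then call slide() with the chosen hop size.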

Popular Deployment Frameworks and Libraries

Several popular frameworks and libraries significantly simplify the deployment of TinyML models on microcontrollers:

- TensorFlow Lite for Microcontrollers (TF Lite Micro): A leading framework that provides the necessary tools to convert trained models and deploy them for efficient on-device inference. Written in C++ 11, TF Lite Micro is highly compatible with a wide range of Arm Cortex-M series processors.

- Edge Impulse: Offers a comprehensive solution, supporting deployment to a vast array of microcontrollers and providing an end-to-end platform that covers data collection, model training, and deployment.

- Platform-Specific Tools: Some microcontroller manufacturers offer platform-specific libraries and tools. For instance, STMicroelectronics provides STM32Cube.AI, which is specifically designed to optimize and deploy AI models on their STM32 family of microcontrollers.

flowchart TD

A[Trained Model\n.h5/.pb/.onnx] --> B{Choose Framework}

B -->|TensorFlow Lite Micro| C1[Convert to TFLite]

B -->|Edge Impulse| D1[Import to Edge Impulse]

B -->|STM32Cube.AI| E1[Import to X-CUBE-AI]

B -->|Neuton TinyML| F1[Upload to Neuton]

C1 --> C2[Quantize & Optimize]

C2 --> C3[Convert to C Array]

C3 --> C4[TF Micro Interpreter]

D1 --> D2[Automatic Optimization]

D2 --> D3[Generate Deployment Files]

D3 --> D4[Deploy with Edge Impulse SDK]

E1 --> E2[Analyze Performance]

E2 --> E3[Optimize for STM32]

E3 --> E4[Generate STM32 Code]

F1 --> F2[Automatic Model Design]

F2 --> F3[Generate C/C++ Library]

C4 --> G[Integrate with\nTarget MCU Code]

D4 --> G

E4 --> G

F3 --> G

G --> H[Build & Flash\nFirmware]

H --> I[Deploy & Test\non Target Device]

subgraph "TensorFlow Process"

C1

C2

C3

C4

end

subgraph "Edge Impulse Process"

D1

D2

D3

D4

end

subgraph "STM32Cube.AI Process"

E1

E2

E3

E4

end

subgraph "Neuton Process"

F1

F2

F3

end

classDef tfProcess fill:#f5f5ff,stroke:#3498db,stroke-width:2px;

classDef eiProcess fill:#f0fff0,stroke:#2ecc71,stroke-width:2px;

classDef stmProcess fill:#fff5f5,stroke:#e74c3c,stroke-width:2px;

classDef neutonProcess fill:#fffff0,stroke:#f39c12,stroke-width:2px;

classDef common fill:#f8f9fa,stroke:#333,stroke-width:1px;

class C1,C2,C3,C4 tfProcess;

class D1,D2,D3,D4 eiProcess;

class E1,E2,E3,E4 stmProcess;

class F1,F2,F3 neutonProcess;

class A,B,G,H,I common;

The availability of well-supported deployment frameworks significantly simplifies the process of getting TinyML models running on microcontrollers. These frameworks often handle low-level details of model interpretation and hardware interaction, allowing developers to focus on the application logic.

Navigating the Challenges and Limitations of TinyML

Addressing Constraints in Latency, Accuracy, and Memory Footprint

Deploying artificial intelligence models on low-power embedded systems inherently presents several challenges, primarily concerning latency, accuracy, and memory footprint. These three factors are often interconnected, and optimizing for one can sometimes negatively impact the others.

For example, reducing the size of a model to fit within the limited memory of a microcontroller might lead to a decrease in its overall accuracy. Conversely, attempting to achieve higher accuracy by using a more complex model can result in a larger memory footprint and increased inference time, potentially exceeding the capabilities or power budget of the target device.

Achieving an acceptable level of accuracy with highly compressed models requires careful model design, meticulous optimization techniques, and often a deep understanding of the specific application domain. The inherent resource constraints of microcontrollers necessitate a careful balancing act between model complexity (which often correlates with accuracy), model size (memory footprint), and inference speed (latency).

Developers must make informed trade-offs based on the specific requirements of their application. Advances in model optimization techniques and microcontroller hardware continue to push the boundaries of what is achievable in terms of latency, accuracy, and memory footprint for TinyML deployments.
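To make the memory side of this trade-off concrete, the sketch below estimates weight storage at float32 (4 bytes per weight) and int8 (1 byte per weight) precision, then checks it against the flash left over once the application and runtime are accounted for. The helper names are hypothetical, and the estimate covers weights only; actual flash usage also includes graph metadata and quantization parameters.

```cpp
#include <cassert>
#include <cstddef>

// Approximate bytes needed to store the weights alone.
std::size_t weight_bytes(std::size_t num_params, std::size_t bytes_per_weight) {
  assert(bytes_per_weight > 0);
  return num_params * bytes_per_weight;
}

// True if the model fits in the flash that remains after `reserved_bytes`
// are set aside for application code and the inference runtime.
bool fits_in_flash(std::size_t model_bytes, std::size_t flash_bytes,
                   std::size_t reserved_bytes) {
  return reserved_bytes <= flash_bytes &&
         model_bytes <= flash_bytes - reserved_bytes;
}
```

As an illustrative case, a 50,000-parameter model needs about 200,000 bytes as float32 and 50,000 bytes as int8. On a part with 256 KB of flash and 128 KB reserved for firmware, only the int8 version fits.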

Strategies for Overcoming Hardware Limitations

Several strategies can be employed to mitigate the challenges posed by the hardware limitations of microcontrollers in TinyML applications:

- Leveraging Specialized Hardware: Microcontrollers with integrated hardware accelerators, such as DSPs and NPUs, can significantly improve processing performance for machine learning tasks without a substantial increase in power consumption.

- Efficient Algorithms: Employing efficient data processing techniques and utilizing lightweight machine learning algorithms designed for resource-constrained environments can help minimize the computational load on the microcontroller.

- Right-Sizing: Carefully selecting the appropriate microcontroller with sufficient, but not excessive, resources for the specific task at hand is crucial for optimizing both performance and cost.

Overcoming hardware limitations in TinyML often involves a combination of hardware-level optimizations (using specialized processors) and software-level optimizations (employing efficient algorithms and data processing). A holistic approach is often necessary to achieve the desired performance within the given constraints.
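The right-sizing step above can be sketched as a simple selection over candidate parts: keep those whose flash and RAM cover the requirement plus some headroom, then take the cheapest. Everything in this example is an assumption for illustration, including the McuOption structure, the pick_mcu function, and the idea of expressing cost as a relative number; real selection would also weigh peripherals, power modes, and availability.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical description of a candidate part; values are illustrative.
struct McuOption {
  const char* name;
  std::size_t flash_bytes;
  std::size_t ram_bytes;
  double relative_cost;
};

// Returns the index of the cheapest option whose flash and RAM cover the
// requirement multiplied by `headroom` (1.25 means 25% spare capacity),
// or -1 if no option fits.
int pick_mcu(const std::vector<McuOption>& options, std::size_t need_flash,
             std::size_t need_ram, double headroom) {
  assert(headroom >= 1.0);
  int best = -1;
  for (std::size_t i = 0; i < options.size(); ++i) {
    const McuOption& o = options[i];
    bool fits = static_cast<double>(o.flash_bytes) >= need_flash * headroom &&
                static_cast<double>(o.ram_bytes) >= need_ram * headroom;
    if (fits && (best < 0 || o.relative_cost < options[best].relative_cost)) {
      best = static_cast<int>(i);
    }
  }
  return best;
}
```

The headroom factor leaves room for later model updates and firmware growth, so a part that fits today does not become a constraint after the first revision.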

Considerations for Real-World Deployment and Maintenance

Beyond the core technical challenges, successful real-world deployment of TinyML solutions requires careful consideration of several practical factors:

- Environmental Robustness: Deployed devices often encounter noisy and variable sensor data, operate in diverse environmental conditions, and must maintain robust and reliable operation over extended periods.

- Maintainability: For long-term viability, the ability to perform over-the-air (OTA) updates for model refinement, bug fixes, and security patches is becoming increasingly important for deployed TinyML devices.

- Security: Security considerations are paramount, especially for applications that handle sensitive user data or operate in critical infrastructure. Implementing appropriate security measures is crucial to protect against unauthorized access and potential vulnerabilities.

Successful real-world deployment of TinyML solutions requires not only a well-performing model but also careful consideration of factors like data quality, environmental robustness, power management, security, and maintainability.
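One small building block for the OTA updates mentioned above is verifying that a downloaded model image arrived intact before it replaces the running one. The sketch below uses a table-free CRC-32 (the reflected 0xEDB88320 polynomial used by zlib and Ethernet). The function names, and the assumption that the expected CRC is shipped alongside the image, are hypothetical. A CRC only detects accidental corruption; protecting against tampering requires a cryptographic signature.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Bitwise CRC-32 (reflected polynomial 0xEDB88320). Slower than a
// table-driven version, but it needs no lookup table in flash.
uint32_t crc32_bitwise(const uint8_t* data, std::size_t length) {
  uint32_t crc = 0xFFFFFFFFu;
  for (std::size_t i = 0; i < length; ++i) {
    crc ^= data[i];
    for (int bit = 0; bit < 8; ++bit) {
      crc = (crc & 1u) ? (crc >> 1) ^ 0xEDB88320u : (crc >> 1);
    }
  }
  return crc ^ 0xFFFFFFFFu;
}

// Hypothetical acceptance check run before switching to a new model image.
bool model_image_is_intact(const uint8_t* image, std::size_t length,
                           uint32_t expected_crc) {
  assert(image != nullptr || length == 0);
  return crc32_bitwise(image, length) == expected_crc;
}
```

A device would typically write the new image to a spare flash region, run this check, and only then update the pointer or flag that selects the active model.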

Illustrative Case Studies: Successful TinyML Deployments

Smart Sensors and Their Applications

TinyML has enabled the development of intelligent smart sensors for a wide array of applications:

- Environmental Monitoring: TinyML-powered sensors can autonomously track air and water quality, detect forest fires, and monitor various ecological parameters.

- Industrial Applications: TinyML facilitates predictive maintenance by analyzing sensor data from machinery to detect anomalies indicative of potential failures, preventing costly downtime.

- Agricultural Monitoring: Smart sensors utilizing TinyML can monitor soil moisture levels, temperature, and other critical factors to optimize irrigation and resource management, leading to improved crop yields.

A notable example is Ping Services, which developed a TinyML-powered device to continuously monitor the acoustic signature of wind turbine blades, enabling early detection of damage and reducing maintenance costs. Furthermore, TinyML can breathe new life into existing, less sophisticated sensors by adding intelligence for tasks such as anomaly detection and predictive maintenance.

The deployment of TinyML in smart sensors demonstrates its ability to bring real-time intelligence to the edge, enabling autonomous decision-making and reducing the need for constant communication with the cloud. This leads to faster response times and lower power consumption.

TinyML in Wearable and Portable Devices

The low power consumption and small size of TinyML models make them ideally suited for integration into wearable and portable devices:

- Health Monitoring: These devices can leverage TinyML for continuous health monitoring, such as tracking heart rate and sleep patterns and detecting falls.

- Portable Diagnostics: TinyML enables the development of portable diagnostic devices capable of analyzing medical images or test results directly at the point of care, improving accessibility and reducing turnaround times.

- Gesture Recognition: Powered by TinyML, gesture recognition enhances human-computer interaction by allowing users to control interfaces and manipulate virtual objects through intuitive hand movements.

The integration of TinyML into wearable and portable devices enables continuous and personalized monitoring and interaction while preserving user privacy by processing sensitive data locally. The low power consumption of TinyML is crucial for extending the battery life of these devices, making them practical for everyday use.

IoT Applications: Agriculture, Industrial Monitoring, and Smart Homes

TinyML plays a pivotal role in realizing the full potential of the Internet of Things (IoT) by enabling edge intelligence across various application domains:

- Agriculture: TinyML-powered IoT devices can monitor crop health by analyzing images for signs of disease, track soil conditions to optimize fertilization, and monitor livestock behavior for early detection of illness or distress.

- Industrial Monitoring: TinyML facilitates predictive maintenance of machinery by analyzing vibration, temperature, and other sensor data to anticipate potential failures.

- Smart Homes: TinyML enhances privacy and security by enabling local processing of data from devices like smart thermostats and security cameras, reducing the need to transmit sensitive information to the cloud.

TinyML’s ability to enable local processing and decision-making in IoT devices reduces reliance on constant cloud connectivity, improves responsiveness for time-critical applications, and enhances data privacy within these interconnected systems.

Current Trends and the Future Landscape of TinyML

Emerging Advancements in Microcontroller Hardware

The field of TinyML is continuously being propelled forward by advancements in microcontroller hardware:

- More Powerful Processors: Microcontrollers are becoming increasingly powerful while maintaining or even improving their energy efficiency. This progress is evident in the development of more sophisticated processor architectures, such as the Arm Cortex-M55, which offers enhanced performance for machine learning tasks.

- Expanded Memory: Memory capacities are also increasing, allowing for the deployment of slightly larger and more complex models.

- Integrated Accelerators: A significant trend is the integration of specialized hardware accelerators, like Neural Processing Units (NPUs) and Digital Signal Processors (DSPs), directly onto the microcontroller chip. These accelerators are designed to significantly speed up the execution of machine learning operations, further enhancing the performance of TinyML applications.

- Energy Efficiency Improvements: Arm Helium, the M-Profile Vector Extension introduced with Armv8.1-M cores such as the Cortex-M55, adds vector processing to MCUs. It improves DSP and ML throughput while maintaining low power consumption, specifically targeting battery-powered endpoint AI applications.

The trend in microcontroller hardware is towards greater computational power and energy efficiency, specifically tailored for edge AI workloads. This will enable the deployment of more sophisticated TinyML models on even smaller and more power-constrained devices.

Developments in Software Tools and Algorithm Design

Alongside hardware advancements, the software ecosystem for TinyML is also undergoing rapid development:

- Improved Frameworks: Frameworks like TensorFlow Lite Micro, Edge Impulse, and Neuton TinyML are continuously being improved with new features, more efficient optimization techniques, and broader support for a wider range of hardware platforms.

- Specialized Algorithms: Researchers are actively focusing on developing novel lightweight machine learning algorithms and optimization strategies specifically designed to operate effectively within the severe resource constraints of microcontrollers.

- User-Friendly Tools: There is a growing emphasis on creating more user-friendly tools and platforms that simplify the entire TinyML workflow, from the initial stages of data collection and model training to the final deployment and monitoring of models on embedded devices.

The software ecosystem for TinyML is rapidly evolving, with a focus on creating more user-friendly, efficient, and versatile tools and algorithms. This will lower the barrier to entry for developers and enable the creation of more powerful and sophisticated TinyML applications.

Future Trends in TinyML (2025-2030)

- Sub-100KB ML Models: Advanced compression techniques will enable complex ML models under 100KB, running on 8-bit MCUs with minimal RAM.

- Specialized ML MCUs: Widespread adoption of microcontrollers with dedicated ML accelerators, reducing power consumption by 10x while improving performance.

- Federated TinyML: Networks of TinyML devices collaboratively learning and sharing insights while preserving data privacy and minimizing bandwidth.

- On-Device Training: Microcontrollers capable of limited on-device training and adaptation, enabling personalized models and continuous learning.

- TinyML Security Standards: Industry-wide security standards for TinyML implementations, ensuring robust protection against model theft and adversarial attacks.

- Generative TinyML: Miniaturized generative models running on edge devices, creating contextual responses and adaptive behaviors with minimal resources.

Key Enabling Technologies

- Ultra-low power neural processing units (NPUs)

- Advanced model compression and pruning algorithms

- Energy-harvesting microcontroller designs

- Standardized embedded ML frameworks and deployment tools

Anticipated Future Directions and Potential Breakthroughs

The future of TinyML holds immense potential for transforming various industries and enabling entirely new applications:

- Integration with Other Technologies: One anticipated future direction is the increasing integration of TinyML with other emerging technologies, such as generative AI, at the edge. This could lead to more intelligent and autonomous edge devices capable of generating contextually relevant responses and insights locally.

- Expanding Capabilities: Continued advancements in microcontroller hardware, software tools, and algorithm design are expected to further push the boundaries of what is currently possible with TinyML, enabling the deployment of even more complex and accurate models on ultra-low-power devices.

- Standardization: Increased standardization and interoperability across different hardware and software platforms will likely play a crucial role in facilitating wider adoption of TinyML technologies and simplifying the development process for a broader range of developers.

These directions point toward truly intelligent and autonomous edge devices that can sense, analyze, and act on data in real time with minimal power consumption. This will likely lead to breakthroughs in various fields, from personalized healthcare and smart environments to advanced industrial automation and beyond.

Evaluating the Performance of TinyML Models on Microcontrollers

Key Performance Metrics: Power Consumption, Inference Time, and Accuracy

Evaluating the performance of TinyML models deployed on microcontrollers involves considering several key metrics that reflect their suitability for the intended application:

- Power Consumption: Typically measured in milliwatts (mW), this is a critical factor, especially for battery-operated devices where longevity is paramount.

- Inference Time (Latency): The time taken by the model to process an input and produce an output is crucial for real-time applications requiring rapid responses.

- Accuracy: This measures how well the model’s predictions align with the ground truth and is essential for the reliability and effectiveness of the application. It can be quantified using metrics like classification accuracy or F1 score, depending on the specific task.

- Memory Footprint: The amount of RAM and flash memory utilized by the model and its associated libraries on the microcontroller is a fundamental constraint that determines whether the model can even be deployed on the target device.

These performance metrics are often interdependent, and optimizing for one metric might negatively impact another. Therefore, evaluating TinyML model performance involves understanding these trade-offs and finding the right balance of metrics that meets the requirements of the specific application.
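Power and latency combine into the figure that usually matters most for battery-operated devices: energy per inference, and from it an estimate of battery life. The sketch below shows the arithmetic under simplifying assumptions (constant active and sleep power, an ideal battery, no regulator losses). The function names are hypothetical and the numbers in the usage note are illustrative, not measurements.

```cpp
#include <cassert>

// Energy for one inference in millijoules.
// mW * ms gives microjoules, so divide by 1000 to get millijoules.
double energy_per_inference_mj(double active_power_mw, double latency_ms) {
  assert(active_power_mw >= 0.0 && latency_ms >= 0.0);
  return active_power_mw * latency_ms / 1000.0;
}

// Average power in mW when the device wakes for each inference and
// sleeps for the rest of the time.
double average_power_mw(double active_power_mw, double sleep_power_mw,
                        double latency_ms, double inferences_per_second) {
  double duty = latency_ms * inferences_per_second / 1000.0;  // active fraction
  assert(duty >= 0.0 && duty <= 1.0);
  return duty * active_power_mw + (1.0 - duty) * sleep_power_mw;
}

// Idealized battery life in hours: capacity (mAh) * voltage (V) is the
// stored energy in mWh, divided by the average draw in mW.
double battery_life_hours(double capacity_mah, double voltage_v,
                          double avg_power_mw) {
  assert(avg_power_mw > 0.0);
  return capacity_mah * voltage_v / avg_power_mw;
}
```

For instance, 30 mW of active power and 20 ms of latency give 0.6 mJ per inference. At one inference per second with 0.05 mW of sleep power, the average draw is about 0.649 mW, or roughly 1,017 hours on an idealized 220 mAh, 3 V cell.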

/**

* Platform-Agnostic TinyML Performance Benchmarking Framework

*

* This code demonstrates how to properly benchmark TinyML models on any

* microcontroller platform, measuring key performance metrics including:

* - Inference time (latency)

* - Memory usage

* - Power consumption (if supported by hardware)

* - Accuracy

*/

#include <stdint.h>

#include <stdio.h>

#include <string.h>

// Include TensorFlow Lite Micro headers

#include "tensorflow/lite/micro/micro_error_reporter.h"

#include "tensorflow/lite/micro/micro_interpreter.h"

#include "tensorflow/lite/micro/micro_mutable_op_resolver.h"

#include "tensorflow/lite/schema/schema_generated.h"

#include "tensorflow/lite/version.h"

// Platform-specific timing, power, and memory measurement headers

#include "platform_timer.h" // Provides timing_get_ms(), timing_get_us()

#include "platform_memory.h" // Provides memory_get_usage()

#ifdef ENABLE_POWER_MEASUREMENT

#include "platform_power.h" // Provides power_start_measurement(), power_stop_measurement()

#endif

// Include model and test data

#include "model_data.h" // Contains g_model_data array with model

#include "test_data.h" // Contains test input/output data

// ===== CONFIGURATION =====

#define NUM_TEST_SAMPLES 100

#define TENSOR_ARENA_SIZE (32 * 1024) // Adjust based on your model requirements

#define WARM_UP_RUNS 5 // Number of warm-up inferences

#define TEST_RUNS NUM_TEST_SAMPLES // Number of actual test inferences

#define LOG_INTERVAL 10 // Log progress every X inferences

// ===== GLOBALS =====

uint8_t tensor_arena[TENSOR_ARENA_SIZE] __attribute__((aligned(16)));

float inference_times[TEST_RUNS];

float accuracy_results[TEST_RUNS];

uint32_t memory_usage[TEST_RUNS];

#ifdef ENABLE_POWER_MEASUREMENT

float power_measurements[TEST_RUNS];

#endif

// ===== FUNCTION PROTOTYPES =====

void setup_tflite();

void run_benchmark();

float measure_inference_time();

float calculate_accuracy(float* output, int8_t* expected, size_t length);

void log_results();

// TFLite globals

tflite::MicroErrorReporter micro_error_reporter;

tflite::ErrorReporter* error_reporter = &micro_error_reporter;

const tflite::Model* model = nullptr;

tflite::MicroInterpreter* interpreter = nullptr;

TfLiteTensor* input = nullptr;

TfLiteTensor* output = nullptr;

// Memory tracking variables

uint32_t memory_baseline = 0;

uint32_t peak_memory = 0;

int main() {

printf("TinyML Performance Evaluation Framework\n");

printf("======================================\n");

// Register memory baseline

memory_baseline = memory_get_usage();

printf("Memory baseline: %lu bytes\n", memory_baseline);

// Initialize TFLite

setup_tflite();

// Run the benchmark

run_benchmark();

// Log the results

log_results();

return 0;

}

void setup_tflite() {

printf("Setting up TensorFlow Lite...\n");

// Map the model into a usable data structure

model = tflite::GetModel(g_model_data);

if (model->version() != TFLITE_SCHEMA_VERSION) {

TF_LITE_REPORT_ERROR(error_reporter, "Model schema version mismatch!");

return;

}

// Create an operation resolver with only the operations needed

static tflite::MicroMutableOpResolver<6> micro_op_resolver;

micro_op_resolver.AddConv2D();

micro_op_resolver.AddDepthwiseConv2D();

micro_op_resolver.AddFullyConnected();

micro_op_resolver.AddSoftmax();

micro_op_resolver.AddReshape();

micro_op_resolver.AddAveragePool2D();

// Build an interpreter

static tflite::MicroInterpreter static_interpreter(

model, micro_op_resolver, tensor_arena, TENSOR_ARENA_SIZE, error_reporter);

interpreter = &static_interpreter;

// Allocate memory for the model's tensors

TfLiteStatus allocate_status = interpreter->AllocateTensors();

if (allocate_status != kTfLiteOk) {

TF_LITE_REPORT_ERROR(error_reporter, "AllocateTensors() failed");

return;

}

// Get pointers to input and output tensors

input = interpreter->input(0);

output = interpreter->output(0);

// Log model details

printf("Model loaded successfully\n");

printf("Input tensor size: %lu bytes\n", (unsigned long)input->bytes);

printf("Output tensor size: %lu bytes\n", (unsigned long)output->bytes);

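// Report tensor arena usage after allocation. The arena is a static

// array, so heap-based tracking would not see it; ask the interpreter instead

size_t used_arena = interpreter->arena_used_bytes();

printf("TFLite arena memory used: %lu bytes out of %d bytes allocated\n",

(unsigned long)used_arena, TENSOR_ARENA_SIZE);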

}

void run_benchmark() {

printf("\nStarting benchmark...\n");

// Warm-up runs

printf("Performing %d warm-up inferences...\n", WARM_UP_RUNS);

for (int i = 0; i < WARM_UP_RUNS; i++) {

// Copy a test sample to the input tensor

memcpy(input->data.int8, g_test_inputs[0], input->bytes);

// Run inference (not measuring performance yet)

TfLiteStatus invoke_status = interpreter->Invoke();

if (invoke_status != kTfLiteOk) {

TF_LITE_REPORT_ERROR(error_reporter, "Invoke failed on warm-up");

return;

}

}

printf("Running %d test inferences...\n", TEST_RUNS);

// Main benchmark loop

for (int i = 0; i < TEST_RUNS; i++) {

// Select test sample (cycling through available samples)

int sample_idx = i % NUM_TEST_SAMPLES;

// Copy the test input to the input tensor

memcpy(input->data.int8, g_test_inputs[sample_idx], input->bytes);

// Start power measurement if enabled

#ifdef ENABLE_POWER_MEASUREMENT

power_start_measurement();

#endif

// Measure inference time

uint64_t start_time = timing_get_us();

// Run inference

TfLiteStatus invoke_status = interpreter->Invoke();

// Record end time

uint64_t end_time = timing_get_us();

inference_times[i] = (float)(end_time - start_time) / 1000.0f; // Convert to ms

// Stop power measurement if enabled

#ifdef ENABLE_POWER_MEASUREMENT

power_measurements[i] = power_stop_measurement();

#endif

// Check inference status

if (invoke_status != kTfLiteOk) {

TF_LITE_REPORT_ERROR(error_reporter, "Invoke failed on test run %d", i);

return;

}

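// Calculate accuracy for this sample.

// calculate_accuracy() reads float scores, so this path assumes a float32

// output tensor. A fully int8-quantized model exposes output->data.int8

// instead and needs its own argmax or a dequantization step.

if (output->type != kTfLiteFloat32) {

TF_LITE_REPORT_ERROR(error_reporter, "Expected a float32 output tensor");

return;

}

accuracy_results[i] = calculate_accuracy(output->data.f,

g_expected_outputs[sample_idx],

output->bytes / sizeof(float));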

// Record memory usage

memory_usage[i] = memory_get_usage();

if (memory_usage[i] > peak_memory) {

peak_memory = memory_usage[i];

}

// Log progress

if (i % LOG_INTERVAL == 0 || i == TEST_RUNS - 1) {

printf("Progress: %d/%d\n", i + 1, TEST_RUNS);

}

}

printf("Benchmark completed!\n");

}

float calculate_accuracy(float* output, int8_t* expected, size_t length) {

// This is a simplified accuracy calculation

// In a real application, this would depend on your model type

// (classification, regression, etc.)

// For classification tasks, you might check if the highest confidence class

// matches the expected class

int predicted_class = 0;

float max_value = output[0];

for (size_t i = 1; i < length; i++) {

if (output[i] > max_value) {

max_value = output[i];

predicted_class = i;

}

}

// Assumes expected[0] holds the class index; one-hot encoded labels

// would need an argmax over the expected array instead

int expected_class = expected[0];

// Return 1.0 for correct classification, 0.0 for incorrect

return (predicted_class == expected_class) ? 1.0f : 0.0f;

}

void log_results() {

printf("\n=== BENCHMARK RESULTS ===\n");

// Calculate inference time statistics

float total_time = 0.0f;

float min_time = inference_times[0];

float max_time = inference_times[0];

for (int i = 0; i < TEST_RUNS; i++) {

total_time += inference_times[i];

if (inference_times[i] < min_time) min_time = inference_times[i];

if (inference_times[i] > max_time) max_time = inference_times[i];

}

float avg_time = total_time / TEST_RUNS;

// Calculate memory statistics

uint32_t min_memory = memory_usage[0] - memory_baseline;

uint32_t max_memory = memory_usage[0] - memory_baseline;

uint32_t total_memory = 0;

for (int i = 0; i < TEST_RUNS; i++) {

uint32_t current = memory_usage[i] - memory_baseline;

total_memory += current;

if (current < min_memory) min_memory = current;

if (current > max_memory) max_memory = current;

}

uint32_t avg_memory = total_memory / TEST_RUNS;

// Calculate accuracy statistics

float total_accuracy = 0.0f;

for (int i = 0; i < TEST_RUNS; i++) {

total_accuracy += accuracy_results[i];

}

float avg_accuracy = (total_accuracy / TEST_RUNS) * 100.0f;

// Report performance metrics

printf("\nInference Time:\n");

printf(" Average: %.2f ms\n", avg_time);

printf(" Min: %.2f ms\n", min_time);

printf(" Max: %.2f ms\n", max_time);

printf("\nMemory Usage:\n");

printf(" Peak Memory: %lu bytes\n", peak_memory - memory_baseline);

printf(" Average: %lu bytes\n", avg_memory);

printf(" Min: %lu bytes\n", min_memory);

printf(" Max: %lu bytes\n", max_memory);

printf("\nAccuracy:\n");

printf(" Average: %.2f%%\n", avg_accuracy);

#ifdef ENABLE_POWER_MEASUREMENT

// Calculate power statistics

float total_power = 0.0f;

float min_power = power_measurements[0];

float max_power = power_measurements[0];

for (int i = 0; i < TEST_RUNS; i++) {

total_power += power_measurements[i];

if (power_measurements[i] < min_power) min_power = power_measurements[i];

if (power_measurements[i] > max_power) max_power = power_measurements[i];

}

float avg_power = total_power / TEST_RUNS;

printf("\nPower Consumption:\n");

printf(" Average: %.2f mW\n", avg_power);

printf(" Min: %.2f mW\n", min_power);

printf(" Max: %.2f mW\n", max_power);

// Calculate and report energy efficiency

float energy_per_inference = avg_power * avg_time / 1000.0f; // mW * ms = uJ; divide by 1000 to get mJ

printf("\nEnergy Efficiency:\n");

printf(" Energy per inference: %.4f mJ\n", energy_per_inference);

#endif

    // Output summary metrics table (columns padded to match the separator row)
    printf("\n=== SUMMARY METRICS ===\n");
    printf("| Metric                     | Value         | Unit    |\n");
    printf("|----------------------------|---------------|--------:|\n");
    printf("| Average Inference Time     | %-13.2f | ms      |\n", avg_time);
    printf("| Peak Memory Usage          | %-13lu | bytes   |\n",
           (unsigned long)(peak_memory - memory_baseline));
    printf("| Model Accuracy             | %-13.2f | %%       |\n", avg_accuracy);
#ifdef ENABLE_POWER_MEASUREMENT
    printf("| Average Power Consumption  | %-13.2f | mW      |\n", avg_power);
    printf("| Energy per Inference       | %-13.4f | mJ      |\n", energy_per_inference);
#endif
    // Tensor sizes are size_t; cast so that %lu matches the argument type
    printf("| Input Tensor Size          | %-13lu | bytes   |\n", (unsigned long)input->bytes);
    printf("| Output Tensor Size         | %-13lu | bytes   |\n", (unsigned long)output->bytes);

    // Output in CSV format for easy data analysis
    printf("\nCSV Format (for data analysis):\n");
    printf("run,inference_time_ms,memory_bytes,accuracy\n");
    for (int i = 0; i < TEST_RUNS; i++) {
        printf("%d,%.2f,%lu,%.1f\n",
               i,
               inference_times[i],
               (unsigned long)(memory_usage[i] - memory_baseline),
               accuracy_results[i] * 100.0f);
    }
}

/*
 * Platform-specific implementation examples
 * In a real implementation, these would be in separate files
 */

/*
// Example platform_timer.h implementation for STM32
#include <stdint.h>
#include "stm32f4xx_hal.h"

uint64_t timing_get_us(void) {
    // Simple version: HAL_GetTick() counts milliseconds, so the result
    // only has 1 ms resolution.
    // For more accurate timing, use hardware timers or DWT cycle counter
    return (uint64_t)HAL_GetTick() * 1000;
}

// Example platform_memory.h implementation
#include <unistd.h> // sbrk(), as provided by newlib

uint32_t memory_get_usage(void) {
    // For ARM Cortex-M, you could track heap usage as the distance between
    // the start of the heap and the current program break.
    // Assumption: the linker script defines _end at the start of the heap
    // and the C library provides sbrk(); adjust both for your toolchain.
    extern char _end;
    return (uint32_t)((uintptr_t)sbrk(0) - (uintptr_t)&_end);
}

// Example platform_power.h implementation for a device with a power monitor IC
#include "power_monitor_i2c.h"

void power_start_measurement(void) {
    power_monitor_reset_accumulator();
    power_monitor_start_sampling();
}

float power_stop_measurement(void) {
    power_monitor_stop_sampling();
    return power_monitor_get_average_power();
}

*/

Methodologies for Performance Measurement and Analysis

Evaluating a TinyML model on a microcontroller accurately requires a suitable method and tool for each metric:

- Power Measurement: Power consumption can be measured with dedicated power analysis equipment, or by monitoring the current the microcontroller draws while inference runs.

- Timing Analysis: Inference time is determined by timing model execution on the microcontroller over a representative set of input data, preferably with a hardware timer or cycle counter rather than a coarse millisecond tick.

- Accuracy Assessment: Accuracy is typically evaluated by running the deployed model on a separate test dataset that it has not seen during training and comparing its predictions with the known correct labels.

- Memory Analysis: Memory footprint can be assessed from the memory usage reported by the microcontroller’s development environment or with specialized memory analysis tools.
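To make the power and timing bullets concrete, the following is a minimal sketch of how a sampled current trace might be turned into energy per inference. It assumes a constant supply voltage and evenly spaced samples. The function names `energy_mj_from_current` and `average_power_mw` are hypothetical and are not part of the benchmark code above.

```c
#include <assert.h>
#include <stddef.h>

/* Integrate a current trace into energy, in millijoules.
 * current_ma       : current samples in mA, evenly spaced in time
 * n                : number of samples (at least 2)
 * supply_v         : supply voltage in volts, assumed constant
 * sample_period_us : time between consecutive samples in microseconds */
double energy_mj_from_current(const float *current_ma, size_t n,
                              double supply_v, double sample_period_us)
{
    assert(current_ma != NULL && n >= 2);
    double charge_ma_us = 0.0; /* integral of I dt, in mA*us (nanocoulombs) */
    for (size_t i = 1; i < n; i++) {
        /* Trapezoidal rule between neighbouring samples */
        charge_ma_us += 0.5 * ((double)current_ma[i - 1] + (double)current_ma[i])
                        * sample_period_us;
    }
    /* nC * V = nJ, and 1 nJ = 1e-6 mJ */
    return charge_ma_us * supply_v * 1e-6;
}

/* Average power in mW over a window lasting duration_us microseconds. */
double average_power_mw(double energy_mj, double duration_us)
{
    assert(duration_us > 0.0);
    /* mJ / s = mW; duration_us * 1e-6 is the duration in seconds */
    return energy_mj / (duration_us * 1e-6);
}
```

For example, five samples of 10 mA taken 1 ms apart at 3.3 V span 4 ms and integrate to about 0.132 mJ, which corresponds to an average of 33 mW. In practice the trace would need to be windowed to the inference itself, for instance by toggling a GPIO pin at the start and end of the run.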

A thorough evaluation also tests the model under realistic operating conditions that closely mimic the intended deployment. Only then do the measured metrics reflect the model’s behavior and limitations in its actual application environment.
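As one concrete piece of such an evaluation, the accuracy assessment described above reduces to comparing the highest-scoring class with the known label for each held-out sample. The sketch below assumes int8-quantized output scores stored row-major, one row per test sample. The names `argmax_i8` and `top1_accuracy` are hypothetical. On a real device the scores would more likely be checked one inference at a time than buffered for the whole test set.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Index of the largest score; ties resolve to the lowest index. */
size_t argmax_i8(const int8_t *scores, size_t num_classes)
{
    assert(scores != NULL && num_classes > 0);
    size_t best = 0;
    for (size_t c = 1; c < num_classes; c++) {
        if (scores[c] > scores[best]) best = c;
    }
    return best;
}

/* Fraction of samples whose top-scoring class equals the label.
 * scores : num_samples x num_classes values, row-major
 * labels : one class index per sample */
float top1_accuracy(const int8_t *scores, const uint8_t *labels,
                    size_t num_samples, size_t num_classes)
{
    assert(labels != NULL && num_samples > 0);
    size_t correct = 0;
    for (size_t s = 0; s < num_samples; s++) {
        const int8_t *row = scores + s * num_classes;
        if (argmax_i8(row, num_classes) == labels[s]) correct++;
    }
    return (float)correct / (float)num_samples;
}
```

With four samples and three classes, where three of the four predictions match their labels, `top1_accuracy` returns 0.75.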

Conclusion: Empowering the Edge with TinyML

Deploying TinyML models on microcontrollers brings machine learning capabilities to the very edge of the computing spectrum. By enabling AI on resource-constrained devices, TinyML addresses the limitations of traditional cloud-based ML in scenarios that demand low latency, enhanced privacy, or operation in disconnected environments.

The benefits of TinyML, including reduced latency, energy savings, improved data privacy, and real-time processing, are driving its rapid adoption across a diverse range of industries, from healthcare and agriculture to industrial automation and consumer electronics. While challenges related to memory footprint, processing power, and accuracy persist, ongoing advancements in both microcontroller hardware and software tools are continuously expanding the possibilities for TinyML deployments.

The emergence of more powerful yet energy-efficient microcontrollers with integrated AI accelerators, coupled with the development of lightweight algorithms and user-friendly deployment frameworks, is making TinyML more accessible and versatile than ever before. Looking ahead, the future of TinyML promises even greater intelligence and autonomy at the edge, with potential breakthroughs in areas like generative AI for embedded systems and increased standardization across the ecosystem.

Evaluating the performance of TinyML models through key metrics like power consumption, inference time, and accuracy remains crucial for ensuring their suitability for specific applications. Ultimately, TinyML is empowering a new generation of intelligent edge devices, paving the way for a smarter, more efficient, and more connected world.
