Chapter 25: Data Privacy and Compliance (GDPR, CCPA)

Chapter Objectives

Upon completing this chapter, students will be able to:

- Understand the core principles, legal requirements, and jurisdictional scope of major data privacy regulations, including the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) as amended by the California Privacy Rights Act (CPRA).

- Analyze the specific impact of these regulations on the entire AI/ML development lifecycle, from data acquisition and preprocessing to model training, deployment, and monitoring.

- Implement fundamental data protection techniques, including data anonymization, pseudonymization, and the principles of Privacy by Design and by Default in AI system architecture.

- Design compliance-oriented data governance frameworks and operational workflows for handling Data Subject Access Requests (DSARs), managing user consent, and conducting Data Protection Impact Assessments (DPIAs).

- Evaluate advanced privacy-preserving machine learning (PPML) methodologies, such as differential privacy, federated learning, and homomorphic encryption, and identify appropriate use cases for each.

- Deploy AI systems that are not only technically robust but also legally compliant and ethically responsible, mitigating risks of fines, reputational damage, and loss of user trust.

Introduction

In the modern data-driven economy, data is the essential fuel for innovation in artificial intelligence and machine learning. The unprecedented scale of data collection and processing that powers today’s advanced algorithms has, however, brought the critical issues of individual privacy and data protection to the forefront of technological and societal discourse. The development of powerful AI systems is no longer solely an engineering challenge; it is a complex socio-technical endeavor that intersects deeply with law, ethics, and fundamental human rights. As AI engineers and data scientists, our responsibility extends beyond building accurate models to ensuring the systems we create are lawful, fair, and respectful of the individuals whose data they leverage.

This chapter confronts this challenge directly by providing a comprehensive overview of the legal and regulatory landscape governing data privacy. We will move beyond abstract principles to explore the concrete operational and architectural implications of landmark regulations like Europe’s GDPR and California’s CCPA/CPRA. These laws have reshaped the digital world, establishing stringent requirements for how organizations collect, use, and protect personal data, and granting individuals significant rights over their information. For AI practitioners, non-compliance is not an option; it carries the risk of severe financial penalties—up to 4% of global annual turnover for GDPR—and, more importantly, can cause irreparable damage to an organization’s reputation and user trust. We will explore how to translate legal text into engineering requirements, transforming compliance from a reactive checklist into a proactive, foundational component of the AI development lifecycle. This involves embedding “Privacy by Design” into our systems, employing technical solutions like anonymization and advanced cryptographic methods, and building robust governance frameworks to manage data responsibly.

Technical Background

Navigating the intersection of AI and data privacy requires a dual understanding of legal principles and the technical mechanisms used to enforce them. The legal frameworks provide the “what”—the rules and rights that must be respected—while the engineering solutions provide the “how”—the practical implementation of those rules within complex data pipelines and machine learning models. This section lays the foundational knowledge for both, creating a bridge between legal compliance and technical execution.

Fundamental Concepts in Data Privacy Regulation

At the heart of modern data privacy laws are a few core concepts that redefine the relationship between individuals (data subjects) and organizations (data controllers and processors). Understanding this terminology is the first step toward building compliant AI systems.

Core Terminology and Legal Foundations

Personal Data, or Personally Identifiable Information (PII), is the central focus of these regulations. It is defined broadly as any information that relates to an identified or identifiable natural person. This includes obvious identifiers like a name, an identification number, location data, or an online identifier (like an IP address or cookie ID). Crucially, it also includes factors specific to the physical, physiological, genetic, mental, economic, cultural, or social identity of that person. In the context of AI, this can encompass everything from images and voice recordings to user-generated content and behavioral tracking data.

The General Data Protection Regulation (GDPR), enacted by the European Union in 2018, is arguably the most comprehensive and influential data privacy law in the world. Its reach is extraterritorial, meaning it applies to any organization, regardless of its location, that processes the personal data of individuals within the EU. The GDPR is built on several key principles:

- Lawfulness, Fairness, and Transparency: Processing must be lawful, with a clearly defined legal basis (e.g., user consent, contractual necessity, legitimate interest). Organizations must be transparent about what data they collect and how it is used.

- Purpose Limitation: Data can only be collected for specified, explicit, and legitimate purposes and cannot be further processed in a manner incompatible with those purposes. This directly challenges the common practice of collecting vast datasets for potential future, undefined AI projects.

- Data Minimization: Only data that is adequate, relevant, and limited to what is necessary for the specified purpose should be collected. This principle is often at odds with the “more data is better” mantra of machine learning.

- Accuracy: Personal data must be accurate and, where necessary, kept up to date.

- Storage Limitation: Data should be kept in a form which permits identification of data subjects for no longer than is necessary for the purposes for which the data are processed.

- Integrity and Confidentiality: Data must be processed in a manner that ensures appropriate security, including protection against unauthorized or unlawful processing and against accidental loss, destruction, or damage.

The California Consumer Privacy Act (CCPA), and its successor, the California Privacy Rights Act (CPRA), provide similar protections for residents of California. While its principles overlap with GDPR, it introduces specific rights such as the right to opt-out of the sale or sharing of personal information, which has significant implications for the ad-tech and data brokerage industries that often fuel AI models.

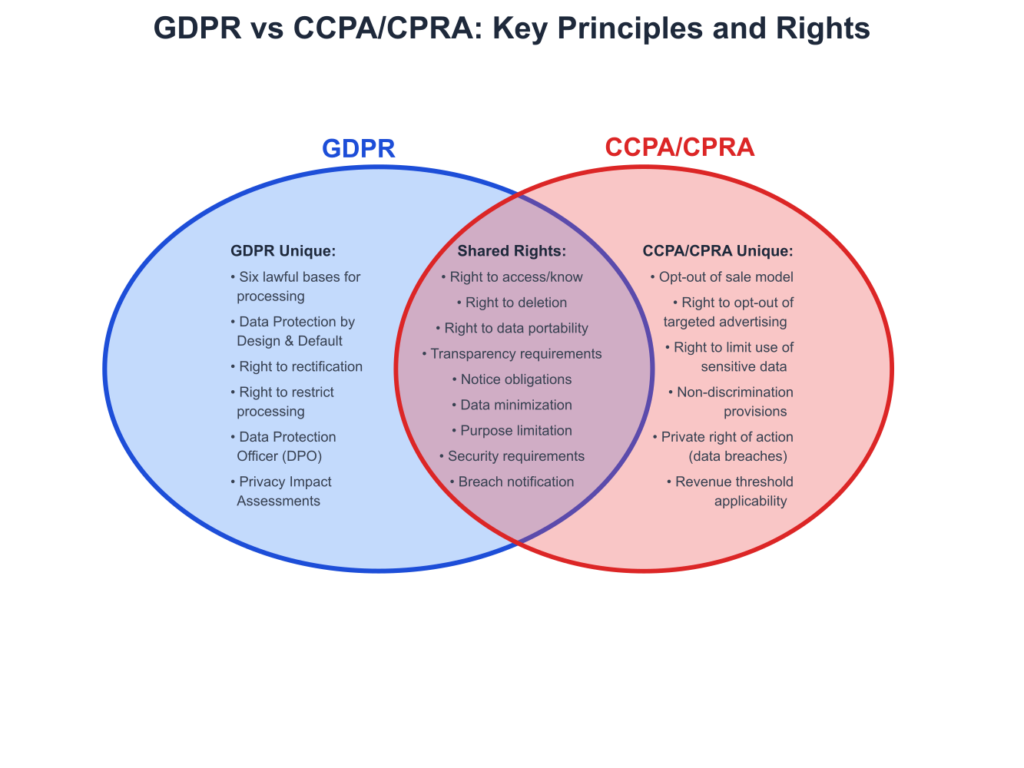

GDPR vs. CCPA/CPRA: Key Provisions Comparison

| Feature | GDPR (General Data Protection Regulation) | CCPA/CPRA (California Consumer Privacy Act/Rights Act) |

|---|---|---|

| Geographic Scope | Applies to processing of personal data of individuals in the EU/EEA, regardless of the company’s location. | Applies to for-profit entities doing business in California that meet certain revenue or data processing thresholds. Protects California residents. |

| Core Principle | Opt-in consent model. Requires a specified, lawful basis for any data processing activity (e.g., consent, contract, legitimate interest). | Opt-out model. Consumers have the right to opt-out of the sale or sharing of their personal information. |

| Personal Data Definition | Broadly defined: “any information relating to an identified or identifiable natural person.” Includes online identifiers like IP addresses. | Broadly defined: “information that identifies, relates to, describes, is reasonably capable of being associated with… a particular consumer or household.” |

| Key Consumer Rights | Right to Access, Right to Rectification, Right to Erasure (‘to be forgotten’), Right to Restrict Processing, Right to Data Portability, Right to Object. | Right to Know, Right to Delete, Right to Opt-Out of Sale/Sharing, Right to Correct, Right to Limit Use of Sensitive Personal Information. |

| “Sensitive Data” | Has a special category of sensitive data (e.g., health, race, political opinions) with stricter processing rules. | CPRA introduced a “Sensitive Personal Information” category (e.g., precise geolocation, race, union membership) with specific consumer rights to limit its use. |

| Enforcement & Fines | Severe fines: up to €20 million or 4% of global annual turnover, whichever is higher. Enforced by Data Protection Authorities (DPAs). | Fines up to $2,500 per unintentional violation and $7,500 per intentional violation. Enforced by the California Privacy Protection Agency (CPPA). |

| Impact on AI | Requires documented lawful basis for training data, purpose limitation, and data minimization. Right to explanation for automated decisions. | Right to opt-out impacts data acquisition for model training. Requires transparency about automated decision-making logic. |

A critical distinction in these laws is between a Data Controller and a Data Processor. The controller is the entity that determines the purposes and means of the processing of personal data (e.g., the company building the AI application). The processor is an entity that processes personal data on behalf of the controller (e.g., a cloud provider like AWS or Google Cloud). While controllers bear the primary responsibility for compliance, processors also have direct legal obligations.

Data Anonymization and Pseudonymization Techniques

To train AI models without violating privacy principles, it’s often necessary to de-link data from individual identities. Anonymization and pseudonymization are two primary approaches, though they offer different levels of protection and utility.

Distinguishing Anonymization from Pseudonymization

Pseudonymization is a process that replaces identifying fields in a data record with one or more artificial identifiers, or pseudonyms. For example, a user’s name might be replaced with a randomly generated user ID. The key feature of pseudonymization is that the process is reversible. A separate, securely stored mapping table can be used to re-identify the data subject if needed. Under GDPR, pseudonymized data is still considered personal data because re-identification is possible, but it is seen as a valuable security measure that can reduce privacy risks.

Anonymization, by contrast, is the process of irreversibly altering data so that a data subject can no longer be identified, directly or indirectly. The goal is to make it impossible to re-identify individuals, even by linking the data with other available information. Truly anonymized data falls outside the scope of GDPR, making it a highly desirable goal for AI development. However, achieving true, robust anonymization is technically challenging. A famous example of failed anonymization involved Netflix releasing a dataset of movie ratings in 2007. Though user names were removed, researchers were able to de-anonymize a significant portion of the dataset by cross-referencing it with public ratings on the Internet Movie Database (IMDb).

Anonymization vs. Pseudonymization

| Aspect | Pseudonymization | Anonymization |

|---|---|---|

| Goal | To replace personal identifiers with pseudonyms to de-link data from a specific individual. | To remove or alter identifying information so that re-identification of an individual is impossible. |

| Reversibility | Reversible. The original data can be restored by linking the pseudonym back to the original identifier. | Irreversible. The process is designed to prevent re-identification. |

| Status under GDPR | Still considered personal data, as re-identification is possible. It is a recommended security measure. | If done correctly, the data is no longer considered personal data and falls outside the scope of GDPR. |

| Example Technique | Replacing a user’s name with a unique ID (e.g., ‘John Doe’ -> ‘User-12345’) and storing the mapping securely elsewhere. | Aggregating data, generalizing fields (e.g., ‘Age: 31’ -> ‘Age Range: 30-40’), and suppressing unique values. |

| Primary Use Case | Reducing privacy risk in data processing and analysis where re-identification might be needed for specific purposes. | Public data releases, research, and training AI models where individual identity is not required and must be protected. |

K-Anonymity, L-Diversity, and T-Closeness

To address the shortcomings of naive anonymization, computer scientists have developed more rigorous mathematical frameworks. These techniques primarily focus on protecting against linkage attacks by ensuring data records are not unique.

K-Anonymity is a property of an anonymized dataset. A dataset is said to be k-anonymous if for any combination of quasi-identifiers (attributes that are not unique on their own but can become identifying when combined, such as ZIP code, birth date, and gender), every record is indistinguishable from at least \(k-1\) other records. For example, if \(k=5\), for any person in the dataset, there are at least four other people who share the same combination of quasi-identifiers. This is achieved through techniques like generalization (e.g., replacing an age of “31” with the range “30-35”) and suppression (e.g., redacting a specific value). The higher the value of \(k\), the greater the privacy, but often at the cost of data utility, as the data becomes less precise.

However, k-anonymity is vulnerable to attacks if there is a lack of diversity in the sensitive attributes within an equivalence class (the group of \(k\) indistinguishable records). For instance, if a k-anonymous medical dataset has an equivalence class where all \(k\) individuals have the same diagnosis, an attacker who knows an individual is in that class can infer their diagnosis.

L-Diversity was proposed to solve this problem. It requires that every equivalence class has at least \(l\) “well-represented” values for the sensitive attribute. This ensures that even if an individual is identified as part of a group, there is still ambiguity about their sensitive information.

T-Closeness is a further refinement. It requires that the distribution of a sensitive attribute within any equivalence class is close to the distribution of the attribute in the overall dataset (no more than a threshold \(t\)). This prevents attacks that rely on skewness in the data distribution, providing a more robust privacy guarantee.

graph TD

A[Start: Raw Dataset] --> B{"Identify Identifiers &<br>Quasi-Identifiers (QIs)"};

B --> C[Separate Direct Identifiers<br>e.g., Name, SSN];

C --> D{Apply Generalization or<br>Suppression to QIs};

D --> E[Group Records into<br>Equivalence Classes based on QIs];

E --> F{Check Condition:<br>Does every class have at least 'k' records?};

F -- No --> G{Increase Generalization/Suppression};

G --> D;

F -- Yes --> H[Success: K-Anonymous Dataset];

%% Styling

style A fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee

style B fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style C fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style D fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style E fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style F fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style G fill:#f1c40f,stroke:#f1c40f,stroke-width:1px,color:#283044

style H fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5eePrivacy-Preserving Machine Learning (PPML)

While data anonymization focuses on protecting the training data before it is used, PPML techniques are designed to provide privacy guarantees during and after the model training process itself. These advanced methods are becoming increasingly crucial as models become more complex and capable of inadvertently memorizing sensitive information from their training data.

Comparison of Privacy-Preserving Machine Learning (PPML) Techniques

| Algorithm | Problem Type | Strengths | Weaknesses | Best Use Cases |

|---|---|---|---|---|

| Differential Privacy (DP) | Statistical Query / Model Training |

Provable mathematical privacy guarantee. Gold standard for anonymization. Composable: privacy loss can be tracked across multiple computations. |

Reduces data utility and model accuracy due to noise injection. Complex to correctly calibrate the privacy parameter ε (epsilon). Can be computationally expensive. |

Large-scale statistical analysis (e.g., census data). Training ML models on sensitive datasets where individual privacy is paramount (e.g., DP-SGD). Aggregating user data for analytics (e.g., Apple, Google). |

| Federated Learning (FL) | Decentralized Model Training |

Raw data never leaves the client device (strong data minimization). Reduces data transmission and central storage costs. Enables training on data that cannot be centralized due to policy or size. |

Model updates can still leak information about client data. Complex infrastructure to manage. Vulnerable to non-IID (not independent and identically distributed) data across clients. |

Training models on user data from mobile devices (e.g., predictive keyboards). Collaborative medical research across hospitals without sharing patient records. Industrial IoT applications (e.g., predictive maintenance). |

| Homomorphic Encryption (HE) | Secure Computation on Encrypted Data |

Allows computations (e.g., model inference) on fully encrypted data. Provides very strong confidentiality guarantees. |

Extremely high computational overhead; often too slow for practical model training. Limited set of supported operations for some schemes. Significant data size expansion (ciphertext is much larger than plaintext). |

“Private AI as a Service” where a client sends encrypted data to a server for prediction. Secure multi-party computation where parties jointly compute a function without revealing their inputs. Financial services for secure data analysis. |

| Secure Multi-Party Computation (SMPC) | Collaborative Secure Computation |

Allows multiple parties to jointly compute a function over their inputs while keeping those inputs private. Strong, provable security guarantees. |

High communication overhead between parties. Can be complex to set up and coordinate. Performance degrades as the number of parties increases. |

Joint fraud detection between banks. Private auctions or voting systems. Collaborative data analysis where organizations want to pool insights without sharing raw data. |

Differential Privacy

Differential Privacy (DP) is a mathematical definition of privacy that has become a gold standard in the field. It provides a strong, provable guarantee that the output of a computation (like training a machine learning model) will be roughly the same, whether or not any single individual’s data is included in the input dataset. This means that an adversary looking at the model’s output cannot confidently infer if a specific person’s data was used in the training process, thus protecting their privacy.

This guarantee is achieved by injecting a carefully calibrated amount of statistical noise into the algorithm. The two key parameters that control this process are epsilon (\(\epsilon\)) and delta (\(\delta\)).

- Epsilon (\(\epsilon\)) is the privacy loss parameter. It measures how much the output probability distribution can change when a single record is added or removed from the dataset. A smaller \(\epsilon\) means more noise is added, providing stronger privacy but potentially reducing the model’s accuracy. The choice of \(\epsilon\) represents a direct trade-off between privacy and utility.

- Delta (\(\delta\)) is a parameter that represents the probability that the \(\epsilon\)-guarantee is broken. It is typically set to a very small number (e.g., less than the inverse of the dataset size), representing a small chance of catastrophic privacy failure. A pure DP guarantee has \(\delta=0\).

The relationship can be formally expressed as:

\\\Pr[A(D\_1) \\in S] \\le e^\\epsilon \\Pr[A(D\_2) \\in S] + \\delta \\

Where \(A\) is the randomized algorithm, \(D_1\) and \(D_2\) are two datasets that differ by only one element, and \(S\) is any set of possible outputs. This formula essentially bounds the privacy leakage.

In practice, DP is often implemented in machine learning through differentially private stochastic gradient descent (DP-SGD). In each training step, the gradients computed for each data point are clipped to limit their maximum influence, and then noise (typically Gaussian) is added before the gradients are averaged and used to update the model weights.

Federated Learning

Federated Learning (FL) is a decentralized machine learning approach that allows a model to be trained across multiple devices or servers holding local data samples, without exchanging the raw data itself. This is particularly relevant for applications involving sensitive data that resides on user devices, such as mobile phones or hospital records.

The process typically works as follows:

- A central server initializes a global model.

- The global model is sent to a selection of client devices.

- Each client device trains the model locally on its own private data for a few epochs.

- The client devices send their updated model parameters (e.g., weights and biases), not their data, back to the central server.

- The central server aggregates the updates from all clients (e.g., by averaging them) to improve the global model.

- This process is repeated for many rounds until the global model converges.

graph TD

subgraph Central Server

A(Global Model<br>Version N)

F(Aggregation)

G(Global Model<br>Version N+1)

end

subgraph "Client Devices (Data remains local)"

B1(Client 1)

B2(Client 2)

B3(Client 3)

end

A -- 1- Distribute Model --> B1;

A -- 1- Distribute Model --> B2;

A -- 1- Distribute Model --> B3;

B1 -- 2- Train Locally on Private Data --> C1(Local Update 1);

B2 -- 2- Train Locally on Private Data --> C2(Local Update 2);

B3 -- 2- Train Locally on Private Data --> C3(Local Update 3);

C1 -- "3- Send <i>only</i> model updates" --> F;

C2 -- "3- Send <i>only</i> model updates" --> F;

C3 -- "3- Send <i>only</i> model updates" --> F;

F -- 4- Aggregate Updates --> G;

G -.-> A;

%% Styling

style A fill:#283044,stroke:#283044,stroke-width:2px,color:#ebf5ee

style F fill:#e74c3c,stroke:#e74c3c,stroke-width:1px,color:#ebf5ee

style G fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee

style B1 fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee

style B2 fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee

style B3 fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee

style C1 fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style C2 fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style C3 fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044While FL is a powerful technique for data minimization, it is not a complete privacy solution on its own. The model updates sent from clients can still leak information about the underlying training data. Therefore, FL is often combined with other PPML techniques. For example, Secure Aggregation can be used to ensure the central server can only see the combined average of all updates, not individual client updates. Additionally, clients can apply differential privacy to their updates before sending them, providing a robust, multi-layered privacy defense.

Strategic Framework and Implementation

Translating the legal and technical principles of data privacy into a coherent organizational strategy is a significant challenge that requires more than just engineering prowess. It demands a holistic approach that integrates legal counsel, business strategy, and technical implementation into a unified framework. This section provides a roadmap for developing such a framework, moving from high-level strategy to on-the-ground implementation.

Framework Development and Application

A robust data privacy strategy is built on the principle of Privacy by Design and by Default. This means that privacy considerations are not an afterthought or a feature to be added at the end of the development cycle, but are instead embedded into the very architecture and functionality of AI systems from the outset.

A practical framework for implementing Privacy by Design can be structured around the AI development lifecycle:

- Project Inception and Design:

- Data Protection Impact Assessment (DPIA): Before a single line of code is written, a DPIA should be conducted for any project likely to involve high-risk data processing. This is a formal process mandated by GDPR for certain activities. It involves identifying the purpose of the processing, assessing its necessity and proportionality, identifying and assessing risks to individuals’ rights, and determining measures to mitigate those risks.

- Purpose Specification and Data Minimization: Clearly define the specific, legitimate purpose of the AI model. Challenge the data requirements. Is every feature necessary? Can the goal be achieved with less data, or with less sensitive data? This is the time to design the data schema with minimization in mind.

- Data Acquisition and Preprocessing:

- Lawful Basis for Processing: For every piece of personal data collected, document the lawful basis under GDPR (e.g., explicit consent, legitimate interest). If using consent, ensure it is freely given, specific, informed, and unambiguous. Design user interfaces that make giving and withdrawing consent simple and clear.

- Anonymization/Pseudonymization Strategy: Based on the model’s needs and the data’s sensitivity, decide on the appropriate de-identification strategy. Implement the chosen techniques (e.g., k-anonymity, pseudonymization) within the data ingestion pipeline.

- Model Training and Validation:

- Access Controls: Implement strict access controls on training data. Engineers and data scientists should only have access to the data they absolutely need. Use pseudonymized data for development and testing wherever possible.

- Implementation of PPML: If the risk assessment indicates it, implement advanced techniques like DP-SGD during the training phase. This requires careful tuning of privacy parameters (like \(\epsilon\)) to balance privacy guarantees with model utility.

- Deployment and Monitoring:

- Secure Deployment: Deploy models in secure environments with robust logging and monitoring. Ensure that any data processed by the live model is handled with the same care as the training data.

- Data Subject Rights (DSR) Automation: Build automated or semi-automated workflows to handle user requests, such as the right to access their data, the right to correct it, and the right to erasure (the “right to be forgotten”). The right to erasure is particularly challenging for AI models, as it may be technically infeasible to remove a single individual’s influence from a trained model without retraining it. This technical constraint must be communicated transparently to users.

- Model Governance and Maintenance:

- Retention Policies: Implement and enforce data retention policies. Do not store personal data indefinitely. Automatically delete or anonymize data once the purpose for which it was collected has been fulfilled.

- Regular Audits: Conduct regular privacy audits of your AI systems to ensure they remain compliant as regulations evolve and the system itself is updated.

graph TD

A["Start: User Submits Request<br>(e.g., via Web Form)"] --> B{Verify User Identity};

B -- Identity Verified --> C[Log Request in Tracking System];

B -- Identity Failed --> D[Request Rejection &<br>Notify User];

C --> E{"Determine Nature of Request<br>(Access, Deletion, Correction)"};

E -- Request for Access --> F["Query All Systems for User's Data<br>(CRM, DBs, Data Lake)"];

F --> G[Compile Data into a<br>Readable Format];

G --> H[Review Data for<br>Third-Party Information];

H --> I{Redact Third-Party Data?};

I -- Yes --> J[Perform Redaction];

I -- No --> K;

J --> K;

K[Securely Deliver Data to User] --> L;

E -- Request for Deletion --> M[Locate User's Data Across Systems];

M --> N{"Is there a legal reason to retain data?<br>(e.g., financial records)"};

N -- No --> O[Execute Deletion/Anonymization];

N -- Yes --> P[Inform User of Partial Deletion<br>and Retention Reason];

O --> Q[Confirm Deletion to User];

P --> L;

Q --> L;

L[End: Close Request in Tracking System];

%% Styling

style A fill:#283044,stroke:#283044,stroke-width:2px,color:#ebf5ee

style B fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style C fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style D fill:#d63031,stroke:#d63031,stroke-width:1px,color:#ebf5ee

style E fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style F fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee

style G fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style H fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style I fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style J fill:#f1c40f,stroke:#f1c40f,stroke-width:1px,color:#283044

style K fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style L fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee

style M fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee

style N fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style O fill:#e74c3c,stroke:#e74c3c,stroke-width:1px,color:#ebf5ee

style P fill:#f1c40f,stroke:#f1c40f,stroke-width:1px,color:#283044

style Q fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044Case Study Analysis

Company: “CardioPredict,” a health-tech startup developing an AI-powered application to predict the risk of cardiovascular events based on user-submitted health data from wearable devices and electronic health records (EHRs).

Business Scenario: CardioPredict aims to sell its risk-scoring service to hospitals in both the EU and the United States. Their dataset contains highly sensitive personal data, including heart rate, blood pressure, cholesterol levels, genetic markers, and lifestyle information.

Decision-Making Process and Stakeholder Considerations:

- Initial Legal and Business Consultation: The CEO, CTO, and legal counsel meet. Legal counsel confirms that because they will be processing data from EU residents, they are fully subject to GDPR. They are also subject to HIPAA in the US, which has its own strict privacy rules. The CEO is concerned about the cost of compliance, while the CTO is worried about its impact on model accuracy.

- DPIA and Risk Assessment: The team initiates a DPIA.

- Purpose: To provide a cardiovascular risk score to clinicians. This is deemed a legitimate purpose.

- Risks Identified: High risk of re-identification due to the detailed nature of the data. Risk of discrimination if the model is biased. Risk of a data breach exposing sensitive health information. Risk of violating user rights if they cannot access or delete their data.

- Strategic Decisions:

- Consent as Lawful Basis: They decide to use explicit, granular consent as their primary lawful basis. The user interface will be designed to allow users to consent separately to different data types (e.g., wearable data vs. EHR data).

- Privacy by Design Architecture: The CTO proposes a federated learning architecture. Instead of collecting all raw data in a central cloud, a portion of the model training will happen within the secure IT environment of each partner hospital. Only anonymized model updates will be sent to CardioPredict’s central server for aggregation. This significantly minimizes their role as a processor of raw patient data.

- Anonymization and PPML: For the data that must be processed centrally, they implement a multi-stage de-identification pipeline. First, direct identifiers are removed and replaced with pseudonyms. Then, quasi-identifiers are generalized to achieve a high level of k-anonymity and t-closeness. Finally, during the central model aggregation phase, they apply differential privacy to the aggregated gradients to provide a provable privacy guarantee.

- Outcome Analysis:

- Business Value: While the initial investment in this complex architecture is high, it becomes a major competitive advantage. They can market their service as “privacy-first,” which is a strong selling point for risk-averse hospitals. It also reduces their liability in the event of a breach at a partner hospital.

- Technical Outcome: The CTO’s team finds that the combination of FL and DP slightly reduces the model’s accuracy compared to a centralized approach. However, through careful tuning of the privacy parameters and model architecture, they achieve a level of performance that is still clinically valuable and far superior to existing non-AI methods. They have successfully balanced utility and privacy.

Implementation Strategies

Implementing a privacy-compliant AI program requires a combination of technical controls, organizational policies, and cultural change.

Change Management: Data privacy cannot be the sole responsibility of the legal or IT department. It requires a cultural shift where every engineer, data scientist, and product manager feels a sense of ownership over protecting user data. This can be fostered through:

- Mandatory Training: Regular, role-specific training for all employees on privacy regulations and internal policies.

- Privacy Champions: Appointing “privacy champions” within engineering teams who can act as the first point of contact for privacy-related questions and ensure best practices are being followed.

- Incentives: Tying privacy-related metrics to performance reviews and project success criteria.

Resource Planning: Compliance requires dedicated resources. This includes:

- Legal Expertise: Access to legal counsel specializing in data privacy.

- Engineering Time: Allocating engineering sprints specifically for building and maintaining privacy features (e.g., DSR dashboards, consent management tools).

- Specialized Tools: Budgeting for tools such as data discovery and classification software, consent management platforms, and security monitoring systems.

Success Metrics: Measuring the success of a privacy program can be challenging, but key metrics include:

- Number of Privacy Incidents/Breaches: The ultimate measure of failure. The goal is zero.

- DSR Fulfillment Time: The average time it takes to respond to and fulfill a user’s data access or deletion request.

- DPIA Completion Rate: The percentage of new projects that complete a DPIA before development begins.

- Employee Training Completion: The percentage of employees who have completed their mandatory privacy training.

Tools and Assessment Methods

A variety of tools can help operationalize and assess a privacy program.

- Data Discovery and Mapping Tools (e.g., BigID, OneTrust): These tools scan an organization’s data stores (databases, data lakes, cloud storage) to automatically discover and classify personal and sensitive data, creating a data map that is essential for compliance.

- Consent Management Platforms (CMPs): These platforms provide a centralized way to manage user consent preferences across websites and applications, record consent for auditing purposes, and honor user choices.

- Privacy Impact Assessment (PIA/DPIA) Templates: Standardized templates and software can guide teams through the process of conducting a DPIA, ensuring all legal requirements are met and the process is documented.

- Internal Audits and Checklists: Develop detailed internal checklists based on GDPR and CCPA requirements. These can be used for self-assessment by engineering teams before a product launch or for formal internal audits. An example checklist item might be: “Has a data retention period been defined and implemented for all personal data associated with this feature?”

By combining a strategic framework, real-world analysis, and practical implementation tactics, organizations can move from viewing privacy compliance as a burdensome cost center to seeing it as a strategic enabler of trust, innovation, and long-term business value.

Industry Applications and Case Studies

The principles of data privacy and compliance are not theoretical; they are actively shaping how AI is being developed and deployed across every major industry. The technical and strategic frameworks discussed are being put to the test in real-world scenarios, driving innovation in privacy-preserving technologies.

- Healthcare and Medical Research: Hospitals and research institutions are using federated learning to train diagnostic models on medical images (like X-rays and MRIs) from multiple hospitals without centralizing sensitive patient data. For example, a model to detect cancerous tumors can learn from data across a consortium of institutions, improving its accuracy and generalizability while each hospital retains full control over its patient records. This respects patient privacy and complies with strict regulations like HIPAA and GDPR. The technical challenge lies in standardizing data formats across institutions and managing the complex federated training infrastructure.

- Finance and Fraud Detection: Banks use machine learning to detect fraudulent transactions in real-time. This requires processing vast amounts of personal financial data, which is heavily regulated. To comply, financial institutions employ robust pseudonymization and strict access controls. Furthermore, to satisfy the “right to explanation” under GDPR, which gives individuals the right to a meaningful explanation of the logic involved in automated decisions, banks are investing heavily in explainable AI (XAI) techniques to make their fraud detection models less of a “black box.”

- On-Device Personalization: Tech companies are moving away from cloud-based processing for personalization features. For example, a smartphone’s predictive keyboard learns a user’s writing style and common phrases by training a small language model directly on the device. Through federated learning, aggregated and anonymized insights from thousands of users can be used to improve the general keyboard model without any personal text ever leaving the user’s phone. This approach, which leverages data minimization and Privacy by Design, enhances user privacy and reduces the need for large-scale data transfers.

- Smart Retail and Customer Analytics: Retailers use AI to analyze in-store customer behavior via cameras and sensors to optimize store layouts and product placements. To do this without violating privacy, they employ real-time anonymization techniques. Video feeds are processed at the edge (on-device), and individuals are immediately converted into anonymized data points or skeletal representations. Only aggregated, anonymous data (e.g., “75% of customers turned left at this aisle”) is sent to the cloud for analysis, ensuring no personal data is stored.

Best Practices and Common Pitfalls

Successfully integrating data privacy into AI development is a continuous process of diligence and adaptation. Adhering to best practices can prevent costly mistakes, while being aware of common pitfalls can help teams navigate the complex compliance landscape.

Best Practices:

- Embrace Privacy by Design as a Core Engineering Principle: Don’t treat privacy as a feature to be added later or a checklist to be completed by the legal team. Embed privacy considerations into every stage of the design, architecture, and development process. Conduct DPIAs early and often.

- Minimize Data Collection and Retention: Vigorously challenge the need for every piece of data you collect. The less data you hold, the lower your risk. Implement and automate strict data retention policies to ensure data that is no longer needed is securely deleted or anonymized.

- Invest in User-Centric Transparency and Control: Build clear, simple, and honest user interfaces for managing consent and privacy preferences. Give users meaningful control over their data. A user who feels in control is a user who trusts your product.

- Combine Multiple Layers of Privacy Protection: Relying on a single technique is risky. A robust strategy combines legal agreements (with vendors), organizational policies (access controls), data protection techniques (anonymization), and advanced PPML (federated learning, differential privacy) to create a defense-in-depth approach.

- Maintain Comprehensive Documentation: Document every decision related to data processing. This includes your lawful basis for processing, the results of your DPIAs, records of user consent, and your data retention policies. This documentation is your first line of defense in an audit or regulatory inquiry.

Common Pitfalls:

- Treating Anonymization as a Silver Bullet: Believing that simply removing names and addresses from a dataset makes it anonymous is a common and dangerous mistake. Sophisticated re-identification attacks are possible on naively “anonymized” data. Use rigorous, mathematically-grounded techniques like k-anonymity or differential privacy.

- Ignoring the “Right to Explanation”: Deploying complex “black box” models for decisions that have a significant impact on individuals (e.g., loan applications, hiring) without a mechanism to explain the decision-making process can violate regulations like GDPR. Invest in explainable AI (XAI).

- Forgetting about Vendor and Third-Party Risk: Your compliance responsibility does not end when you hand data to a third-party service or cloud provider. You are responsible for ensuring your vendors are also compliant. Conduct thorough due diligence and have strong data processing agreements (DPAs) in place.

- Scope Creep and Purpose Limitation Violations: Collecting data for one specific, stated purpose and then later using it to train an entirely different AI model without new consent is a direct violation of the purpose limitation principle. Be disciplined about data use and seek new consent when purposes change.

Hands-on Exercises

These exercises are designed to translate the theoretical concepts from the chapter into practical skills. They progressively increase in complexity.

- Basic: Conducting a Mini-DPIA for a Fictional App.

- Objective: To practice identifying privacy risks and mitigation strategies in a real-world context.

- Task: Imagine you are developing a new mobile app called “FitFriend” that tracks users’ daily steps, heart rate, and GPS location during workouts. It provides personalized fitness recommendations.

- Guidance:

- Identify all the personal data the app collects. Classify each piece as either personal or sensitive personal data.

- What is the specific, legitimate purpose for collecting each piece of data?

- Identify at least three potential privacy risks to the user (e.g., risk of a location data breach, risk of unwanted health inferences).

- For each risk, propose a specific mitigation measure (e.g., “Implement end-to-end encryption for all data in transit,” “Anonymize location data by reducing its precision before storage”).

- Outcome: A one-page document outlining the data inventory, purposes, risks, and mitigations.

- Intermediate: Applying K-Anonymity to a Dataset.

- Objective: To gain hands-on experience with a fundamental data anonymization technique.

- Task: You are given a small CSV file with fictional patient data containing columns for

Age,Gender,ZIP Code, andDiagnosis. Your goal is to make this dataset 3-anonymous with respect to the quasi-identifiers (Age,Gender,ZIP Code). - Guidance:

- Use a Python script with the Pandas library to load the data.

- Identify the quasi-identifiers.

- Apply generalization techniques. For example, you might generalize

Ageinto ranges (e.g., 20-30, 30-40) andZIP Codeby masking the last two digits (e.g., 90210 becomes 902**). - Verify that in your resulting dataset, every unique combination of the generalized quasi-identifiers appears at least 3 times.

- Outcome: A Jupyter Notebook demonstrating the process and the final, 3-anonymous dataset.

- Advanced: Designing a Privacy-by-Design System Architecture.

- Objective: To think strategically about embedding privacy into the architecture of a complex AI system.

- Task: Design the high-level system architecture for a “Smart-Home AI Assistant” that learns users’ routines (e.g., when they wake up, adjust the thermostat, play music) to automate their home. The assistant processes voice commands and sensor data from within the home.

- Guidance:

- Create an architecture diagram. Components should include on-device processors, a central cloud service, user mobile app, and smart devices (lights, thermostat).

- Clearly indicate where different types of data (voice recordings, sensor data, user preferences) are stored and processed.

- Incorporate at least three specific Privacy by Design principles. For example:

- On-device processing: Which computations can happen locally to avoid sending raw data to the cloud?

- Data minimization: How can you design the data flow to send only the necessary information to the cloud? (e.g., sending a “turn on lights” command instead of the raw voice recording).

- User control: How will the user interface allow users to view, manage, and delete their data history?

- Outcome: An architecture diagram and a brief document explaining the design choices and how they enhance user privacy.

Tools and Technologies

Implementing robust data privacy requires leveraging a modern stack of tools and frameworks designed for the task. While policies and procedures are crucial, these technologies provide the technical enforcement of those policies.

- Python Libraries for Privacy:

- Google’s Differential Privacy Library (Python, C++, Go): Provides a set of algorithms for producing differentially private statistics. It can be used to add noise to data aggregations in a principled way.

- TensorFlow Privacy: An extension of TensorFlow that allows you to train machine learning models with differential privacy. It includes an implementation of DP-SGD.

- PySyft: An open-source framework for secure and private deep learning. It allows for training models using federated learning and other privacy-enhancing technologies across different devices and servers.

- Federated Learning Frameworks:

- TensorFlow Federated (TFF): A powerful open-source framework for machine learning and other computations on decentralized data. It provides a high-level API for implementing federated learning algorithms.

- PyTorch-based frameworks (e.g., Flower): Frameworks that allow for easy implementation of federated learning systems with PyTorch models, making them accessible to a wide range of developers.

- Data Anonymization Tools:

- ARX Data Anonymization Tool: A comprehensive open-source software for anonymizing sensitive personal data. It supports various methods, including k-anonymity, l-diversity, and t-closeness.

- Cloud Platform Services:

- AWS Macie / Google Cloud Data Loss Prevention (DLP): These are managed cloud services that use machine learning to automatically discover, classify, and protect sensitive data stored in cloud environments like S3 or Google Cloud Storage. They are essential for identifying where your personal data resides.

When integrating these tools, it is critical to manage dependencies carefully. For instance, using pip with a requirements.txt file or conda with an environment.yml file is essential to ensure that your development environment is reproducible and that all privacy-related libraries are at their correct, stable versions.

Summary

This chapter provided a comprehensive guide to navigating the critical intersection of AI development and data privacy regulation. We have established that compliance is a non-negotiable aspect of modern AI engineering, essential for legal adherence, ethical responsibility, and building user trust.

- Key Concepts Learned:

- We deconstructed the core principles of major regulations like GDPR and CCPA/CPRA, including concepts like lawful basis, purpose limitation, and data minimization.

- We differentiated between anonymization (irreversible) and pseudonymization (reversible) and explored the mathematical underpinnings of robust anonymization through k-anonymity, l-diversity, and t-closeness.

- We introduced advanced Privacy-Preserving Machine Learning (PPML) techniques, providing an in-depth look at the mathematical guarantees of Differential Privacy (DP) and the decentralized architecture of Federated Learning (FL).

- Practical Skills Gained:

- You have learned how to develop a strategic framework for implementing Privacy by Design, integrating privacy considerations across the entire AI lifecycle.

- You are now equipped to conduct a Data Protection Impact Assessment (DPIA) to identify and mitigate privacy risks before development begins.

- You can design and architect AI systems that minimize data exposure and provide users with meaningful transparency and control.

- Real-World Applicability: The principles and techniques covered are directly applicable to careers in AI engineering, data science, and MLOps. Understanding this domain is a significant competitive advantage, enabling you to build innovative AI products that are not only powerful but also safe, compliant, and trustworthy. As AI becomes more pervasive, the demand for engineers who can navigate these complex requirements will only continue to grow.

Further Reading and Resources

- Official Regulatory Texts:

- Full Text of the GDPR: https://gdpr-info.eu/ – The complete, official text of the regulation. An essential primary source.

- California Privacy Protection Agency (CPPA): https://cppa.ca.gov/ – The official source for regulations and updates regarding the CCPA and CPRA.

- Academic Papers and Research:

- “The Algorithmic Foundations of Differential Privacy” by Cynthia Dwork and Aaron Roth: A foundational textbook on the theory and mathematics behind differential privacy.

- “Communication-Efficient Learning of Deep Networks from Decentralized Data” by McMahan et al. (Google): The seminal paper that introduced the concept of Federated Learning.

- Industry Blogs and Technical Tutorials:

- Google AI Blog (Privacy Section): https://research.google/search/?query=privacy& – Often features articles on the practical application of federated learning and differential privacy.

- OpenMined Blog: https://blog.openmined.org/ – A blog focused on the development and application of privacy-preserving AI technologies, including tutorials on PySyft.

- Books:

- “Practical Data Privacy” by Katharine Jarmul: A book focused on the practical implementation of privacy-preserving data science workflows in Python.

Glossary of Terms

- Anonymization: The process of irreversibly altering personal data in such a way that the data subject is not or no longer identifiable.

- CCPA (California Consumer Privacy Act): A state statute intended to enhance privacy rights and consumer protection for residents of California, United States.

- CPRA (California Privacy Rights Act): An act that significantly amended and expanded the CCPA.

- Data Controller: The entity that determines the purposes and means of the processing of personal data.

- Data Processor: The entity that processes personal data on behalf of the data controller.

- Data Protection Impact Assessment (DPIA): A process to help identify and minimize the data protection risks of a project.

- Differential Privacy (DP): A system for publicly sharing information about a dataset by describing the patterns of groups within the dataset while withholding information about individuals in the dataset.

- Federated Learning (FL): A decentralized machine learning technique that enables model training on data distributed across multiple devices without exchanging the raw data itself.

- GDPR (General Data Protection Regulation): A regulation in EU law on data protection and privacy for all individuals within the European Union and the European Economic Area.

- K-Anonymity: A property of anonymized data that ensures each record is indistinguishable from at least (k-1) other records with respect to a set of quasi-identifiers.

- Personal Data: Any information which is related to an identified or identifiable natural person.

- Privacy by Design: An approach to systems engineering which seeks to embed privacy into the design and architecture of IT systems and business practices from the outset.

- Pseudonymization: A data management and de-identification procedure by which personally identifiable information fields within a data record are replaced by one or more artificial identifiers, or “pseudonyms.”

- Quasi-Identifier: A piece of information that is not of itself a unique identifier but can be combined with other quasi-identifiers to create a unique identifier (e.g., birth date, ZIP code).