Chapter 4: AI Industry Landscape and Career Opportunities

Chapter Objectives

Upon completing this chapter, you will be able to:

- Analyze the structure of the modern AI industry, including the roles of major technology corporations, startups, and open-source communities.

- Identify and differentiate between various career paths in AI, such as Machine Learning Engineer, AI Research Scientist, and AI Product Manager.

- Understand the economic and market dynamics driving the AI industry, including key growth sectors and investment trends.

- Evaluate the skills, educational backgrounds, and experiences required to succeed in different AI roles.

- Design a personal career development plan for entering or advancing within the AI field.

- Critique the ethical and societal implications of the expanding AI industry and its career landscape.

Introduction

Welcome to a pivotal moment in your journey as an aspiring AI professional. We now turn our attention to the vibrant, complex, and rapidly evolving ecosystem where these technologies are brought to life: the global AI industry. This chapter serves as your guide to navigating this dynamic landscape, offering a comprehensive overview of its structure, key players, and the multitude of career opportunities it presents.

The AI industry is no longer a niche sector of the technology world; it is a primary driver of innovation and economic growth across virtually every domain, from healthcare and finance to transportation and entertainment. As of 2025, market estimates value the global AI industry in the hundreds of billions of dollars, and some forecasts project that it will exceed a trillion dollars within the next several years. This growth is fueled by breakthroughs in machine learning, particularly in generative AI, and by increasingly accessible computational resources delivered through cloud platforms.

In this chapter, we will dissect the anatomy of the AI industry, exploring the symbiotic relationship between established tech giants like Google, Microsoft, and Amazon, and the agile, innovative startups that are constantly pushing the boundaries of what is possible. We will also examine the critical role of the open-source community in democratizing AI and fostering collaboration on a global scale. By understanding the forces that shape this industry, you will be better equipped to identify your place within it. More importantly, we will provide a detailed roadmap of the diverse career paths available, from the deeply technical role of a Machine Learning Engineer to the strategic position of an AI Product Manager. By the end of this chapter, you will not only have a clearer picture of the AI industry but also a more defined vision of your future within it.

Technical Background

The Architecture of the Modern AI Industry

The AI industry of the mid-2020s is a multifaceted ecosystem characterized by a complex interplay of different types of organizations, each contributing to the field in unique ways. Understanding this structure is essential for anyone looking to build a career in AI, as it informs everything from the types of problems being solved to the cultures of the teams solving them.

At the highest level, the industry can be segmented into three primary categories: incumbent technology giants, venture-backed startups, and the open-source community. These are not mutually exclusive categories, and there is significant overlap and interaction between them. For example, many large tech companies are major contributors to open-source projects, and many startups build their products on top of open-source technologies.

The Role of Incumbent Technology Giants

The behemoths of the tech world—companies like Google (Alphabet), Microsoft, Amazon (AWS), Meta, and NVIDIA—form the bedrock of the modern AI industry. Their influence is multifaceted, stemming from their vast resources, extensive research and development capabilities, and control over key infrastructure, particularly cloud computing platforms.

These companies compete fiercely for AI leadership, and that competition plays out in several ways. First, they are at the forefront of fundamental research, with dedicated AI labs that publish influential papers and develop new models and algorithms. Google DeepMind and Microsoft Research are prime examples, consistently pushing the boundaries of the field.

Second, they are the primary providers of the infrastructure that powers the AI industry. Cloud platforms like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer a suite of AI and machine learning services, from pre-trained models accessible via APIs to powerful GPU instances for training custom models. This “AI-as-a-Service” model has democratized access to AI capabilities, allowing smaller companies and individual developers to build sophisticated applications without the need for massive upfront investments in hardware.

Third, these giants are integrating AI into their vast portfolios of products and services. Google’s search engine, Microsoft’s Office suite, and Amazon’s e-commerce platform are all powered by sophisticated AI systems that enhance user experience and drive business value. This integration provides a massive real-world laboratory for testing and refining AI technologies at scale.

Finally, these companies are active acquirers of promising AI startups, often using acquisitions as a way to quickly gain access to new talent and technology. This creates a dynamic where startups can either aim to compete with the giants or position themselves for a lucrative exit.

Note: The influence of these large companies is not without its critics. Concerns about market concentration, data privacy, and the potential for algorithmic bias are significant and ongoing topics of debate within the AI community and society at large.

The Rise of Venture-Backed Startups

While the tech giants provide the foundation, much of the most exciting and disruptive innovation in AI is happening within the startup ecosystem. Fueled by billions of dollars in venture capital investment, AI startups are typically more agile and focused than their larger counterparts, allowing them to tackle specific problems with a high degree of specialization.

The startup landscape is incredibly diverse, with companies operating in virtually every sector of the economy. Some startups are focused on developing new foundation models, competing directly with the likes of OpenAI and Google. Others are building AI-powered applications for specific industries, such as healthcare, finance, or legal tech. A third category of startups is focused on building the tools and infrastructure that support the AI development lifecycle, a field often referred to as MLOps (Machine Learning Operations).

```mermaid
quadrantChart
    title "AI Ecosystem: Startups vs. Tech Giants"
    x-axis "Low Resources / Scale" --> "High Resources / Scale"
    y-axis "Low Agility / Speed" --> "High Agility / Speed"
    quadrant-1 "High-Agility, High-Resource"
    quadrant-2 "High-Agility, Low-Resource"
    quadrant-3 "Low-Agility, Low-Resource"
    quadrant-4 "Low-Agility, High-Resource"
    "Venture-Backed Startups": [0.25, 0.8]
    "Incumbent Tech Giants": [0.8, 0.25]
    "Open-Source Projects": [0.4, 0.6]
    "Acquired Startups": [0.7, 0.65]
```

A key characteristic of the AI startup world is the rapid pace of innovation. The time from a research breakthrough to a commercial product can be remarkably short, and the most successful startups are those that can effectively bridge the gap between academic research and real-world application. This often involves a deep understanding of both the technical nuances of AI and the specific needs of a particular market.

Warning: The AI startup world is also characterized by a high degree of risk. For every successful company, there are many more that fail to find a product-market fit or are unable to compete with larger, better-funded rivals. Aspiring AI professionals considering a career in a startup should be prepared for a fast-paced, often chaotic environment.

The Open-Source Community: The Connective Tissue of the AI Industry

Underpinning both the tech giants and the startups is the vibrant and essential open-source community. Open-source software is the lifeblood of the modern AI industry, with a vast array of libraries, frameworks, and tools that are freely available for anyone to use, modify, and distribute.

The most prominent examples of open-source AI technologies are the deep learning frameworks TensorFlow (developed by Google) and PyTorch (originally developed by Meta and now governed by the PyTorch Foundation, part of the Linux Foundation). These frameworks provide the building blocks for creating and training complex neural networks, and they are used by researchers and engineers around the world. Other critical open-source projects include Scikit-learn for traditional machine learning, Hugging Face Transformers for natural language processing, and a wide range of tools for data processing, visualization, and model deployment.

The open-source model has several key benefits for the AI industry. It accelerates innovation by allowing researchers and engineers to build on each other’s work. It promotes transparency and reproducibility, which are essential for scientific progress. And it lowers the barrier to entry, making it possible for individuals and small organizations to participate in the AI revolution.

Prominent Open-Source AI/ML Projects

| Project | Primary Use | Key Contributor(s) |
|---|---|---|
| TensorFlow | Deep Learning Framework | Google |
| PyTorch | Deep Learning Framework | Meta |
| Scikit-learn | Traditional Machine Learning | Community-driven (Inria) |
| Hugging Face Transformers | Natural Language Processing (NLP) Models | Hugging Face |
| Pandas | Data Manipulation & Analysis | Community-driven |
| MLflow | MLOps / ML Lifecycle Management | Databricks |

The open-source community is not a monolithic entity but rather a collection of individuals, academic institutions, and companies that contribute to a shared pool of knowledge and resources. This collaborative spirit is one of the defining features of the AI field and a key reason for its rapid progress.

```mermaid
graph TD
    subgraph Industry Ecosystem
        A[Tech Giants<br><i>Google, Microsoft, AWS</i>]
        B[Venture-Backed Startups<br><i>Agile & Specialized</i>]
        C[Open-Source Community<br><i>TensorFlow, PyTorch, Hugging Face</i>]
    end
    A -- "Acquisitions &<br>Strategic Investments" --> B
    B -- "Disruption &<br>Niche Innovation" --> A
    A -- "Framework Development &<br>Corporate Contributions" --> C
    C -- "Foundation for<br>Infrastructure & Tooling" --> A
    B -- "Builds on Open-Source<br>Foundations" --> C
    C -- "Accelerates Startup<br>Development & Innovation" --> B
    classDef giants fill:#283044,stroke:#283044,stroke-width:2px,color:#ebf5ee
    classDef startups fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044
    classDef opensource fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee
    class A giants
    class B startups
    class C opensource
```

Key Market Trends and Economic Drivers

The AI industry is not just a technological phenomenon; it is also a powerful economic force. Understanding the market trends and economic drivers that are shaping the industry is crucial for making informed career decisions.

The Generative AI Boom

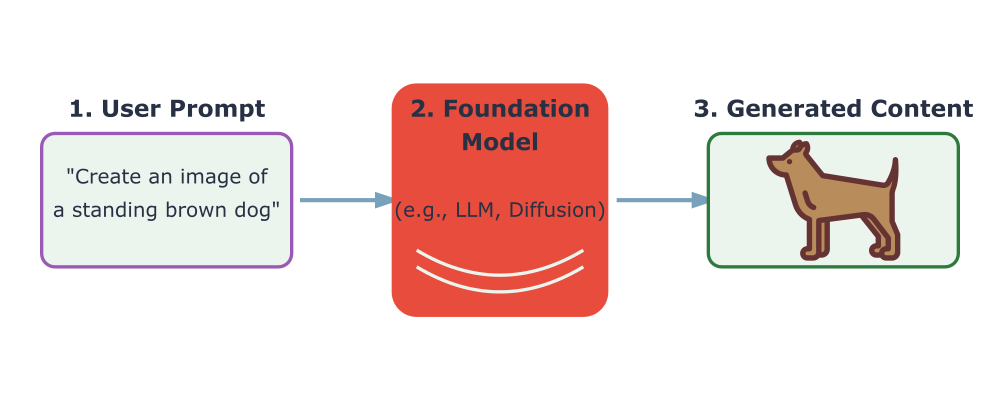

The most significant trend in the AI industry in recent years has been the explosion of interest in generative AI. Models like OpenAI’s GPT series and Google’s Gemini have captured the public imagination with their ability to generate human-like text, images, and code. This has led to a massive wave of investment and a flurry of new startups aiming to capitalize on this technology.

The economic impact of generative AI is still being assessed, but it is expected to be transformative. Companies are exploring a wide range of applications, from automating customer service and content creation to accelerating scientific research and drug discovery. The demand for professionals with skills in generative AI, particularly in areas like prompt engineering and fine-tuning large language models (LLMs), has skyrocketed.

The Shift to AI-as-a-Service (AIaaS)

As mentioned earlier, the rise of cloud computing has led to the emergence of AI-as-a-Service (AIaaS). This model allows companies to access sophisticated AI capabilities without the need to build and maintain their own infrastructure. This has had a profound impact on the economics of AI, making it more accessible to a wider range of organizations.

AIaaS offerings can be broadly categorized into three levels: Infrastructure-as-a-Service (IaaS), which provides raw computing power (e.g., GPU instances); Platform-as-a-Service (PaaS), which offers tools and frameworks for building and deploying models (e.g., Amazon SageMaker); and Software-as-a-Service (SaaS), which provides pre-built AI applications that can be accessed via an API (e.g., Google’s Vision AI).

```mermaid
graph TD
    subgraph "AI-as-a-Service (AIaaS) Stack"
        direction TB
        SaaS["<b>SaaS (Software-as-a-Service)</b><br><i>Ready-to-use AI Applications</i><br>e.g., Google Vision API, Azure Cognitive Services"]
        PaaS["<b>PaaS (Platform-as-a-Service)</b><br><i>Managed ML Development Environment</i><br>e.g., Amazon SageMaker, Vertex AI"]
        IaaS["<b>IaaS (Infrastructure-as-a-Service)</b><br><i>Raw Compute & Storage Resources</i><br>e.g., AWS EC2 GPU Instances, Google TPUs"]
    end
    IaaS --> PaaS
    PaaS --> SaaS
    subgraph "User Control vs. Abstraction"
        direction RL
        IaaS_Control["High Control<br><i>(Manage OS, Runtimes)</i>"]
        PaaS_Control["Medium Control<br><i>(Focus on Code & Data)</i>"]
        SaaS_Control["Low Control<br><i>(Consume via API)</i>"]
    end
    IaaS --- IaaS_Control
    PaaS --- PaaS_Control
    SaaS --- SaaS_Control
    classDef saas fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee
    classDef paas fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044
    classDef iaas fill:#283044,stroke:#283044,stroke-width:2px,color:#ebf5ee
    classDef control fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044,rx:5,ry:5
    class SaaS saas
    class PaaS paas
    class IaaS iaas
    class IaaS_Control,PaaS_Control,SaaS_Control control
```

The growth of AIaaS is creating new career opportunities for professionals who can help companies navigate the complex landscape of cloud-based AI services and integrate them into their existing workflows.

The Importance of Data and MLOps

While the models themselves often get the most attention, the AI industry is increasingly recognizing the critical importance of data and MLOps. The performance of any AI model is ultimately limited by the quality and quantity of the data it is trained on. As a result, there is a growing demand for professionals who specialize in data engineering, data annotation, and data governance.
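To make the point about data quality concrete, here is a minimal sketch of the kind of automated check a data engineer might run before any training job starts. The record format, the column names (`age`, `income`), the allowed range, and the `check_quality` helper are all hypothetical; production teams usually rely on dedicated validation tooling rather than hand-rolled code like this.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class QualityReport:
    n_rows: int
    missing_counts: dict[str, int]  # required column -> rows where it is missing
    duplicate_rows: int             # exact repeats of an earlier row
    out_of_range: dict[str, int]    # column -> values outside the allowed range


def check_quality(
    rows: list[dict[str, Any]],
    required: list[str],
    ranges: dict[str, tuple[float, float]],
) -> QualityReport:
    """Count missing, duplicated, and out-of-range values in a list of records."""
    missing = {col: 0 for col in required}
    out_of_range = {col: 0 for col in ranges}
    seen: set[tuple] = set()
    duplicates = 0
    for row in rows:
        key = tuple(sorted(row.items()))  # order-independent fingerprint of the row
        if key in seen:
            duplicates += 1
        seen.add(key)
        for col in required:
            if row.get(col) is None:
                missing[col] += 1
        for col, (low, high) in ranges.items():
            value = row.get(col)
            if value is not None and not (low <= value <= high):
                out_of_range[col] += 1
    return QualityReport(len(rows), missing, duplicates, out_of_range)


rows = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 34, "income": 52000},   # duplicate of the first row
    {"age": 212, "income": 48000},  # implausible age
]
report = check_quality(rows, required=["age", "income"], ranges={"age": (0, 120)})
print(report)
# QualityReport(n_rows=4, missing_counts={'age': 1, 'income': 0}, duplicate_rows=1, out_of_range={'age': 1})
```

A check like this is cheap to run on every new batch of data, which is why data quality gates tend to sit at the very start of a production pipeline.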

MLOps, or Machine Learning Operations, is the practice of applying DevOps principles to the machine learning lifecycle. It encompasses everything from data management and model training to deployment, monitoring, and governance. The goal of MLOps is to make the process of developing and deploying AI models more efficient, reliable, and scalable. As AI moves from the research lab to production environments, the need for robust MLOps practices and skilled MLOps engineers is becoming increasingly critical.

```mermaid
graph TD
    A[Data Ingestion<br>Collect raw data from sources] --> B{Data Preparation<br>Cleaning, Labeling, Augmentation};
    B --> C[Feature Engineering<br>Select and transform variables];
    C --> D(Model Training<br>Train ML model on prepared data);
    D --> E{Model Validation<br>Evaluate performance on test data};
    E -- "Meets Thresholds?" --> F[Model Deployment<br>Serve model for predictions];
    E -- "Needs Improvement" --> D;
    F --> G((Monitoring<br>Track performance, drift, and errors));
    G -- "Retraining Triggered" --> A;
    G -- "Stable" --> F;
    subgraph "CI/CD for ML"
        direction LR
        subgraph "Continuous Integration (CI)"
            B -- "Data Versioning" --> C;
            C -- "Model Code Versioning" --> D;
        end
        subgraph "Continuous Delivery/Deployment (CD)"
            E -- "Automated Testing" --> F;
            F -- "Automated Deployment" --> G;
        end
    end
    classDef data fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee
    classDef process fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044
    classDef model fill:#e74c3c,stroke:#e74c3c,stroke-width:1px,color:#ebf5ee
    classDef decision fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044
    classDef deploy fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee
    class A,B,C data;
    class D,G model;
    class E decision;
    class F deploy;
```
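The two decision points in the MLOps lifecycle diagram, the validation gate before deployment and the monitoring check that triggers retraining, can be sketched in a few lines. This is a simplified illustration under stated assumptions: the diagram does not name a drift metric, so the sketch uses the Population Stability Index (PSI), one commonly used statistic for comparing a live feature distribution against the training distribution. The 0.90 validation threshold, the 0.2 drift threshold, and the helper names are illustrative choices, not fixed standards.

```python
import math


def psi(reference: list[float], live: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a live sample.

    Bins are equal-width over the reference range; live values outside that range
    are clipped into the first or last bin. Empty bins get a small floor (eps) so
    the logarithm stays finite.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    ref_p, live_p = proportions(reference), proportions(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(live_p, ref_p))


def should_deploy(validation_score: float, threshold: float) -> bool:
    """Validation gate: promote the model only if it meets the agreed threshold."""
    return validation_score >= threshold


def monitor(reference: list[float], live: list[float], drift_threshold: float = 0.2) -> tuple[str, float]:
    """Monitoring step: signal retraining when input drift exceeds the threshold."""
    score = psi(reference, live)
    return ("retrain" if score > drift_threshold else "stable"), score


reference = [i / 100 for i in range(100)]      # feature values seen at training time
shifted = [i / 100 + 0.5 for i in range(100)]  # live values whose distribution has moved

print(should_deploy(0.91, threshold=0.90))  # True
print(monitor(reference, reference)[0])     # stable
print(monitor(reference, shifted)[0])       # retrain
```

In a real pipeline these checks would run automatically inside the CI/CD stages shown in the diagram, with the thresholds agreed between engineers and business stakeholders.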

Conceptual Framework and Analysis

Mapping Career Paths in the AI Ecosystem

The AI industry offers a diverse and expanding range of career paths, each with its own unique set of responsibilities, required skills, and career progression. Understanding these different roles is the first step toward charting your own course in the field. We can broadly categorize AI careers into several archetypes, though in practice, there is often significant overlap between them.

The Technical Core: Engineers and Scientists

At the heart of the AI industry are the technical professionals who build, train, and deploy AI models. This group includes several distinct but related roles:

- Machine Learning Engineer (MLE): This is perhaps the most common and well-defined role in the AI industry. MLEs are software engineers who specialize in machine learning. They are responsible for the entire lifecycle of an AI model, from data preprocessing and feature engineering to model training, evaluation, and deployment. A successful MLE needs a strong foundation in software engineering principles, as well as a deep understanding of machine learning algorithms and frameworks.

- AI Research Scientist: Research scientists are focused on pushing the boundaries of what is possible in AI. They typically work in academic institutions or the research labs of large tech companies. Their work involves developing new algorithms, designing novel model architectures, and publishing their findings in academic journals and conferences. A career as a research scientist usually requires an advanced degree, typically a Ph.D., in a relevant field.

- Data Scientist: While the role of a data scientist can be broad, in the context of AI, it often involves a focus on exploratory data analysis, statistical modeling, and generating insights from data. Data scientists work closely with business stakeholders to understand their needs and then use their analytical skills to design experiments, build predictive models, and communicate their findings. A strong foundation in statistics, mathematics, and data visualization is essential for this role.

- Computer Vision Engineer / NLP Engineer: These are specialized roles that focus on specific subfields of AI. A computer vision engineer works on problems related to image and video analysis, such as object detection, image segmentation, and facial recognition. An NLP engineer, on the other hand, focuses on problems related to human language, such as text classification, machine translation, and sentiment analysis. These roles require deep expertise in their respective domains, as well as strong programming skills.

The Strategic Layer: Product and Management Roles

Building successful AI products requires more than just technical expertise. It also requires a deep understanding of business needs, user experience, and market dynamics. This is where the strategic layer of AI professionals comes in.

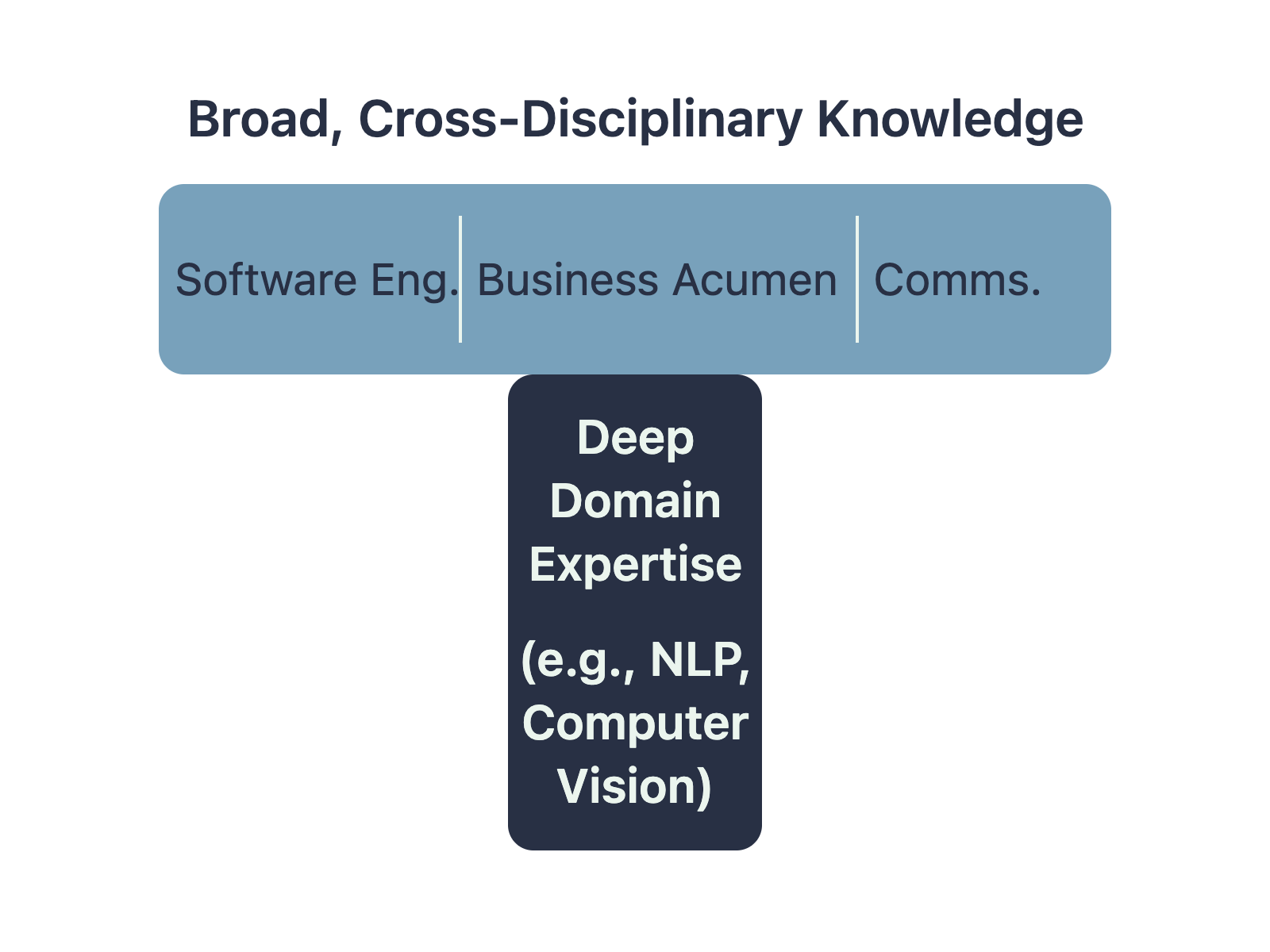

- AI Product Manager (PM): The AI PM is responsible for defining the vision, strategy, and roadmap for an AI-powered product. They work at the intersection of business, technology, and user experience, translating customer needs into technical requirements for the engineering team. A successful AI PM needs a unique blend of technical literacy, business acumen, and communication skills.

- AI Project Manager: While similar to a product manager, a project manager is typically more focused on the execution of a specific AI project. They are responsible for planning, organizing, and managing resources to ensure that the project is completed on time and within budget. Strong organizational and leadership skills are key for this role.

- AI Ethicist / Responsible AI Specialist: As AI becomes more pervasive, the need for professionals who can navigate the complex ethical challenges it presents is growing. AI ethicists work to ensure that AI systems are developed and deployed in a way that is fair, transparent, and accountable. This is an emerging role that requires a deep understanding of both the technical aspects of AI and the philosophical and societal implications of its use.

The Hybrid Roles: Bridging the Gap

In addition to the core technical and strategic roles, there are a number of hybrid roles that are emerging at the intersection of different disciplines.

- MLOps Engineer: As discussed earlier, the MLOps engineer is responsible for building and maintaining the infrastructure and workflows that support the machine learning lifecycle. This role requires a blend of software engineering, DevOps, and machine learning expertise.

- AI Solutions Architect: A solutions architect is a customer-facing role that involves designing and implementing AI solutions for specific business problems. They work closely with clients to understand their needs and then design a solution that leverages the appropriate AI technologies and platforms. This role requires strong technical skills, as well as excellent communication and consulting abilities.

- Prompt Engineer: This is a relatively new role that has emerged with the rise of large language models. A prompt engineer specializes in designing and refining the prompts that are used to interact with these models. The goal is to elicit the most accurate, relevant, and useful responses from the model. This role requires a deep understanding of how LLMs work, as well as a creative and analytical mindset.
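Much of a prompt engineer's work is systematic iteration on how instructions and examples are presented to a model. The sketch below shows one common pattern, few-shot prompting, in which a handful of labelled examples precede the new input. The template layout, the sentiment task, and the `build_few_shot_prompt` helper are illustrative assumptions; the resulting string would be sent to whichever LLM API a team uses, which is not shown here.

```python
from dataclasses import dataclass


@dataclass
class Example:
    text: str
    label: str


def build_few_shot_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Assemble an instruction, labelled examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts.append(f"Text: {ex.text}")
        parts.append(f"Label: {ex.label}")
        parts.append("")  # blank line between examples
    parts.append(f"Text: {query}")
    parts.append("Label:")  # left blank so the model completes it
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each text as positive or negative. Answer with one word.",
    [
        Example("The battery lasts all day.", "positive"),
        Example("The screen cracked within a week.", "negative"),
    ],
    "Setup took five minutes and everything just worked.",
)
print(prompt)
```

Keeping the template in code, rather than editing prompts by hand, makes it easy to vary one element at a time (the instruction wording, the number of examples, their order) and compare the model's responses.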

Comparative Analysis of Career Paths

Choosing an AI career path is a significant decision that depends on your individual interests, skills, and long-term goals. The following table provides a comparative analysis of some of the key roles in the AI industry to help you make a more informed choice.

Comparative Analysis of AI Career Paths

| Career Path | Primary Focus | Core Skills | Typical Education | Career Progression |
|---|---|---|---|---|
| Machine Learning Engineer | Building & deploying production-ready ML models. | Software Engineering, Python, TensorFlow/PyTorch, MLOps, Cloud Platforms. | Bachelor’s or Master’s in CS or related field. | Senior MLE, Tech Lead, Engineering Manager. |
| AI Research Scientist | Developing new AI algorithms and advancing the state-of-the-art. | Advanced Math/Stats, Deep Learning Theory, Experimentation, Publishing. | Ph.D. in CS or a specialized AI field is common. | Senior Scientist, Research Lead, Professor. |
| Data Scientist | Analyzing data, generating insights, and building predictive models to solve business problems. | Statistics, SQL, Python (Pandas, Scikit-learn), Data Visualization, Business Acumen. | Bachelor’s or Master’s in a quantitative field. | Senior Data Scientist, Analytics Manager, Chief Data Officer. |
| AI Product Manager | Defining the vision, strategy, and roadmap for AI-powered products. | Business Acumen, User Experience (UX), Technical Literacy, Communication. | Bachelor’s or MBA, often with a technical background. | Senior PM, Director of Product, VP of Product. |
| MLOps Engineer | Automating and streamlining the ML lifecycle from data to deployment. | DevOps, CI/CD, Cloud Computing (AWS, GCP, Azure), Docker, Kubernetes. | Bachelor’s in CS or related field, or equivalent experience. | Senior MLOps Engineer, Infrastructure Architect. |
| Prompt Engineer | Designing, refining, and optimizing prompts for large language models (LLMs). | Linguistic Intuition, Creativity, Analytical Skills, Deep LLM understanding. | Varies widely; can be from humanities, CS, or other fields. | Senior Prompt Engineer, AI Interaction Designer. |

Tip: Don’t feel locked into a single career path. The AI industry is highly dynamic, and it is common for professionals to move between different roles as their interests and skills evolve. The most successful AI professionals are often those who are adaptable and committed to lifelong learning.

Industry Applications and Case Studies

The transformative power of AI is best understood through its real-world applications. Here are a few case studies that illustrate how AI is creating value across different industries.

- Healthcare: AI-Powered Medical Imaging

- Application: Companies like PathAI are using computer vision models to analyze pathology slides and assist pathologists in diagnosing cancer. These AI systems can identify subtle patterns in tissue samples that may be missed by the human eye, which can support earlier and more accurate diagnoses.

- Business Value: Improved diagnostic accuracy, reduced workload for pathologists, and the potential for better patient outcomes.

- Technical Challenges: Acquiring and annotating large datasets of medical images, ensuring the fairness and interpretability of the models, and navigating the complex regulatory landscape of the healthcare industry.

- Finance: Algorithmic Trading and Fraud Detection

- Application: Hedge funds and investment banks are increasingly using machine learning models to analyze market data and execute trades at superhuman speeds. At the same time, retail banks and credit card companies are using AI to detect fraudulent transactions in real time.

- Business Value: Increased profitability from trading, reduced losses from fraud, and improved security for customers.

- Technical Challenges: Dealing with noisy and non-stationary financial data, building models that are robust to market shocks, and ensuring compliance with strict financial regulations.

- Retail: Personalized Recommendations and Supply Chain Optimization

- Application: E-commerce giants like Amazon and streaming services like Netflix are famous for their sophisticated recommendation engines, which use collaborative filtering and other machine learning techniques to suggest products and content to users. Behind the scenes, AI is also being used to optimize supply chains, from forecasting demand to managing inventory.

- Business Value: Increased customer engagement and sales, improved operational efficiency, and reduced waste.

- Technical Challenges: Scaling recommendation systems to millions of users, dealing with sparse data (the “cold start” problem), and adapting to changing consumer preferences.
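The recommendation and cold-start ideas in the retail case can be illustrated with a toy item-based collaborative filter. This is a minimal sketch, not how Amazon or Netflix build their systems: the ratings are made up, item similarity is plain cosine similarity over the users who rated each item, a candidate's score is a simple rating-weighted sum of similarities, and ranking items by how many users rated them is an assumed fallback for users with no history.

```python
import math
from collections import defaultdict

# Hypothetical user -> {item: rating} data.
ratings = {
    "ana":   {"laptop": 5, "mouse": 4, "monitor": 5},
    "ben":   {"laptop": 4, "mouse": 5},
    "chloe": {"mouse": 4, "keyboard": 5},
    "dev":   {"monitor": 4, "keyboard": 4, "laptop": 5},
}


def item_vectors(data):
    """Invert user->item ratings into item->{user: rating} vectors."""
    vectors = defaultdict(dict)
    for user, items in data.items():
        for item, rating in items.items():
            vectors[item][user] = rating
    return vectors


def cosine(a, b):
    """Cosine similarity of two sparse vectors stored as dicts."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[u] * b[u] for u in common)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm


def recommend(user, data, k=2):
    vectors = item_vectors(data)
    seen = data.get(user, {})
    if not seen:
        # Cold start: no history, so fall back to the most widely rated items.
        return sorted(vectors, key=lambda i: (-len(vectors[i]), i))[:k]
    scores = {}
    for candidate in vectors:
        if candidate in seen:
            continue
        # Weight similarity to each item the user rated by the user's rating of it.
        scores[candidate] = sum(cosine(vectors[candidate], vectors[item]) * r for item, r in seen.items())
    return sorted(scores, key=lambda i: (-scores[i], i))[:k]


print(recommend("ben", ratings, k=1))  # ['monitor']
print(recommend("newcomer", ratings))  # ['laptop', 'mouse'] via the cold-start fallback
```

Real systems face the scaling challenge noted above: computing similarities between every pair of items is infeasible for large catalogs, which is why production recommenders rely on approximations and learned embeddings.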

Best Practices and Common Pitfalls

Navigating a career in the AI industry can be challenging. Here are some best practices to follow and common pitfalls to avoid.

- Build a Strong Foundation: Don’t be seduced by the hype around the latest AI models. Before you can master the cutting edge, you need a solid understanding of the fundamentals of mathematics, statistics, and computer science.

- Develop T-Shaped Skills: The most valuable AI professionals tend to combine deep expertise in one specific area (the vertical bar of the “T”) with a broad understanding of the entire AI ecosystem (the horizontal bar). Strive to become a “T-shaped” individual.

- Stay Curious and Keep Learning: The AI field is in a constant state of flux. To stay relevant, you must be a lifelong learner. Read research papers, take online courses, and experiment with new tools and technologies.

- Build a Portfolio of Projects: A strong portfolio of personal projects is often more valuable than a long list of credentials. Build things that you are passionate about and that demonstrate your skills to potential employers.

- Network and Collaborate: The AI community is highly collaborative. Attend conferences, participate in online forums, and contribute to open-source projects. Building a strong professional network can open doors to new opportunities.

- Don’t Neglect Soft Skills: Technical skills are essential, but they are not enough. The ability to communicate effectively, work in a team, and understand business needs is just as important for a successful career in AI.

Common Pitfalls to Avoid:

- Chasing the Hype: Don’t jump on every new trend without a clear understanding of its underlying principles and practical applications.

- Working in a Silo: Don’t be afraid to ask for help or to collaborate with others. The best AI products are built by diverse teams with a wide range of skills and perspectives.

- Ignoring Ethics: Always consider the ethical implications of your work. AI has the potential to do great good, but it can also be used to cause harm.

- Underestimating the Importance of Data: Remember that even the most sophisticated AI model is useless without high-quality data.

Hands-on Exercises

- Career Path Self-Assessment:

- Objective: To identify your personal interests and skills and map them to potential AI career paths.

- Task: Create a two-column list. In the first column, list your current skills and interests (e.g., “strong in Python,” “enjoy solving complex math problems,” “good at explaining technical concepts to non-technical audiences”). In the second column, list the AI career paths discussed in this chapter. Draw lines connecting your skills and interests to the career paths that seem like a good fit. Write a short paragraph explaining which path you find most appealing and why.

- AI Industry Landscape Analysis (Team Activity):

- Objective: To gain a deeper understanding of the competitive landscape in a specific sector of the AI industry.

- Task: Form a team of 3-4 students. Choose a specific application of AI (e.g., autonomous vehicles, drug discovery, natural language translation). As a team, research the key players in this space, including both large companies and startups. Create a short presentation that outlines the competitive landscape, the key technologies being used, and the major challenges and opportunities in the sector.

- Building a Personal Learning Roadmap:

- Objective: To create a concrete plan for acquiring the skills needed for your target AI career.

- Task: Based on your self-assessment from the first exercise, choose one or two target career paths. Research the specific skills and technologies that are most in-demand for these roles (you can use job postings from sites like LinkedIn as a resource). Create a personal learning roadmap that outlines the steps you will take over the next 6-12 months to acquire these skills. This could include taking specific online courses, reading certain books or papers, or working on personal projects.
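If you prefer to quantify the first and third exercises, a small script can score how well your current skills cover each role and list the gaps to put on your roadmap. The skill sets below are lightly normalized from the comparative table in this chapter, and the coverage score (the fraction of a role's core skills you already have) is a crude illustrative heuristic, not a validated assessment; the `rank_roles` helper is hypothetical.

```python
# Core skills per role, lightly normalized from the comparative table in this chapter.
ROLE_SKILLS = {
    "Machine Learning Engineer": {"software engineering", "python", "tensorflow/pytorch", "mlops", "cloud platforms"},
    "AI Research Scientist": {"advanced math/stats", "deep learning theory", "experimentation", "publishing"},
    "Data Scientist": {"statistics", "sql", "python", "data visualization", "business acumen"},
    "AI Product Manager": {"business acumen", "user experience", "technical literacy", "communication"},
    "MLOps Engineer": {"devops", "ci/cd", "cloud computing", "docker", "kubernetes"},
}


def rank_roles(my_skills, roles=ROLE_SKILLS):
    """Rank roles by the fraction of their core skills you already have."""
    mine = {s.strip().lower() for s in my_skills}
    results = []
    for role, required in roles.items():
        coverage = len(mine & required) / len(required)
        gaps = sorted(required - mine)  # candidates for your learning roadmap
        results.append((role, coverage, gaps))
    results.sort(key=lambda r: (-r[1], r[0]))  # highest coverage first, ties alphabetical
    return results


ranking = rank_roles(["Python", "SQL", "Statistics", "Communication"])
for role, coverage, gaps in ranking:
    print(f"{role}: {coverage:.0%} covered; still to learn: {', '.join(gaps)}")
```

Treat the output as a conversation starter rather than a verdict: interests, the "soft skills" discussed above, and the specifics of real job postings matter at least as much as a keyword overlap.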

Tools and Technologies

The AI industry is supported by a rich ecosystem of tools and technologies. While it is impossible to be an expert in all of them, it is important to have a working knowledge of the most common ones.

- Programming Languages: Python is the dominant language of the AI world. Its simple syntax, extensive libraries, and strong community support make it the default choice for most AI development. Other languages such as C++ and Java are also used in some contexts, particularly for high-performance computing and enterprise applications.

- Deep Learning Frameworks: As mentioned earlier, TensorFlow and PyTorch are the two dominant deep learning frameworks. Both have their strengths and weaknesses, and the choice between them often comes down to personal preference or the specific needs of a project.

- Cloud Platforms: Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure are the three major cloud providers. Each offers a suite of AI and machine learning services, and familiarity with at least one of them is essential for most AI roles.

Cloud Platform ML Services Comparison (AIaaS)

| Service Category | Amazon Web Services (AWS) | Google Cloud Platform (GCP) | Microsoft Azure |
|---|---|---|---|
| ML Platform (PaaS) | Amazon SageMaker | Vertex AI | Azure Machine Learning |
| Pre-trained APIs (SaaS) | Rekognition (Vision), Polly (Speech), Comprehend (NLP) | Vision AI, Speech-to-Text, Natural Language API | Cognitive Services (Vision, Speech, Language) |
| Compute for Training (IaaS) | EC2 Instances (P, G, Trn series) | Compute Engine (with GPUs/TPUs) | Virtual Machines (N-series) |
| Key Differentiator | Market leader with the broadest set of services and largest community. | Strong in custom hardware (TPUs) and cutting-edge AI research integration. | Deep integration with enterprise software and strong hybrid cloud offerings. |

- MLOps Tools: The MLOps landscape is vast and constantly evolving. Some of the most common tools include Docker and Kubernetes for containerization and orchestration, MLflow for experiment tracking, and a wide range of platforms for data versioning, model monitoring, and more.
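The core idea behind experiment tracking and data versioning can be sketched with the Python standard library alone: fingerprint the training data, and record the parameters and metrics of every run so results stay reproducible and comparable. The JSON-lines log and the helper names below are hypothetical stand-ins for a real tool; in MLflow, the comparable calls would be `mlflow.log_param` and `mlflow.log_metric` inside an `mlflow.start_run()` context.

```python
import hashlib
import json
import tempfile
import time
from pathlib import Path


def dataset_fingerprint(records: list[dict]) -> str:
    """Short content hash of a dataset; any change to the data changes the hash."""
    canonical = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


def log_run(log_path: Path, params: dict, metrics: dict, data_version: str) -> dict:
    """Append one experiment record to a JSON-lines file."""
    record = {"timestamp": time.time(), "data_version": data_version, "params": params, "metrics": metrics}
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def best_run(log_path: Path, metric: str) -> dict:
    """Return the logged run with the highest value of the given metric."""
    lines = log_path.read_text(encoding="utf-8").splitlines()
    runs = [json.loads(line) for line in lines if line]
    return max(runs, key=lambda r: r["metrics"][metric])


data = [{"x": 1.0, "y": 2.1}, {"x": 2.0, "y": 3.9}]
version = dataset_fingerprint(data)
with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "runs.jsonl"
    log_run(log, {"learning_rate": 0.1}, {"val_accuracy": 0.84}, version)
    log_run(log, {"learning_rate": 0.01}, {"val_accuracy": 0.88}, version)
    best = best_run(log, "val_accuracy")
print(best["params"])  # {'learning_rate': 0.01}
```

Because every run records the data fingerprint, two runs with different results can be traced either to a change in parameters or to a change in the data, which is exactly the question experiment-tracking tools are designed to answer.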

Summary

- The AI industry is a dynamic ecosystem composed of tech giants, startups, and the open-source community.

- Key market trends include the rise of generative AI, the shift to AI-as-a-Service, and the growing importance of data and MLOps.

- There is a wide range of career paths in AI, from technical roles like Machine Learning Engineer to strategic roles like AI Product Manager.

- Success in the AI industry requires a combination of strong technical skills, business acumen, and a commitment to lifelong learning.

- The practical applications of AI are vast and are transforming industries from healthcare to finance to retail.

Further Reading and Resources

- State of AI Report: An annual report, published by Nathan Benaich and Air Street Capital, that provides a comprehensive overview of the latest trends in AI research, industry, and policy.

- AI and Compute by OpenAI: A blog post that analyzes the relationship between the computational power used to train AI models and the progress in the field.

- “Designing Machine Learning Systems” by Chip Huyen: An essential guide to the practical aspects of building and deploying machine learning systems.

- Coursera’s Deep Learning Specialization by Andrew Ng: A foundational online course that provides a comprehensive introduction to the theory and practice of deep learning.

- Hugging Face: A platform that provides a vast collection of open-source models, datasets, and tools for natural language processing. https://huggingface.co

- Distill.pub: An online journal that published clear, interactive articles explaining complex machine learning concepts. It has been on hiatus since 2021, but its archive remains a valuable resource. https://distill.pub

- ArXiv.org: A preprint server where you can find the latest research papers in AI and other scientific fields. https://arxiv.org

Glossary of Terms

- AI-as-a-Service (AIaaS): A cloud computing model that allows users to access AI capabilities on a pay-as-you-go basis.

- Foundation Model: A large-scale AI model, typically trained on a massive dataset, that can be adapted to a wide range of downstream tasks.

- Generative AI: A class of AI models that can generate new content, such as text, images, or code.

- Large Language Model (LLM): A type of foundation model that is specialized for natural language processing tasks.

- Machine Learning Engineer (MLE): A software engineer who specializes in building and deploying machine learning models.

- Machine Learning Operations (MLOps): The practice of applying DevOps principles to the machine learning lifecycle.

- Prompt Engineering: The process of designing and refining the prompts that are used to interact with large language models.

- TensorFlow: An open-source deep learning framework developed by Google.

- PyTorch: An open-source deep learning framework originally developed by Meta.