A Definitive Guide to AI Development Boards and Tools

For years, the term “Artificial Intelligence” conjured images of vast, humming data centers and disembodied voices in the cloud. AI was something distant, a utility you tapped into over the internet, owned and operated by tech giants. But a quiet revolution has been happening on our desktops, in our workshops, and inside the gadgets we use every day. This is the revolution of edge computing, and it’s bringing the power of AI out of the cloud and into our hands.1 At the heart of this movement is a new class of hardware: the AI development board. These compact, powerful, and surprisingly affordable devices are making it possible for developers, hobbyists, and innovators to build products with their own, localized intelligence.

To navigate this exciting new landscape, it helps to start with a simple but powerful analogy. Think of an AI development board as a physical brain: the intricate hardware designed for a specific kind of thinking.3 The AI model you run on it is the abstract mind: the software, the knowledge, the personality that inhabits the hardware.4 Just as a philosopher’s brain is wired differently from a painter’s, and a mathematician’s from a musician’s, different AI development boards are engineered for different kinds of “thought.” Some are lightning-fast analytical thinkers, others are flexible polymaths, and some are hyper-efficient specialists. Choosing the right board is not just a technical decision; it’s about picking the right brain for the mind you want to create. This guide is your map to that choice, demystifying the hardware, the software, and the incredible potential of tabletop AI.

Chapter 1: The New Frontier: What Gives an AI Board its Smarts?

Before diving into the specific contenders, it’s crucial to understand what separates an AI development board from the single-board computers (SBCs) and microcontrollers that makers have used for years. While they may look similar, their internal architecture is fundamentally different, designed for the unique demands of artificial intelligence.

More Than Just a Tiny Computer

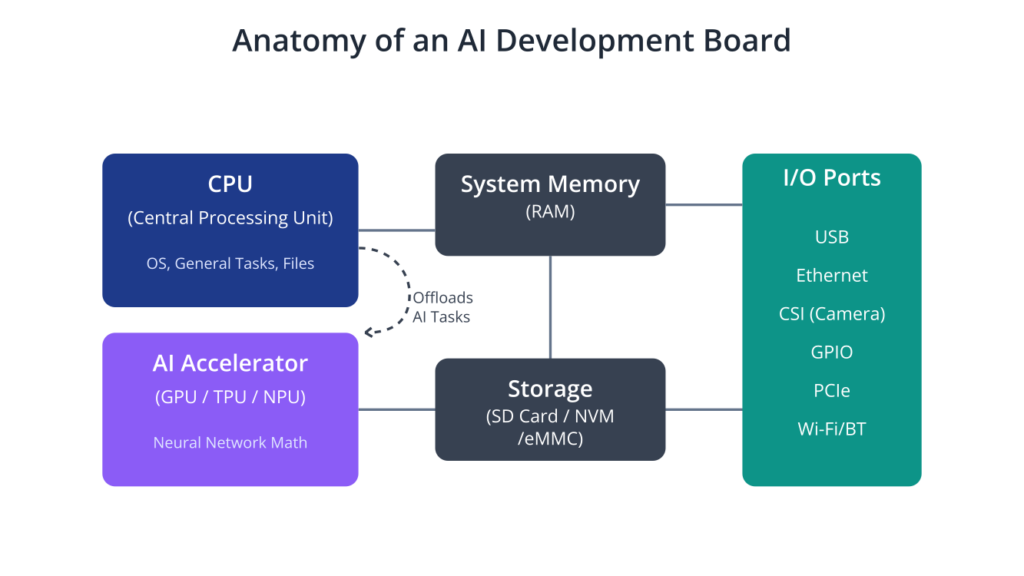

At its core, an AI development board is a compact, all-in-one platform engineered to build, test, and deploy AI models locally, without relying on a connection to the cloud.1 These boards are complete systems, typically featuring a central processing unit (CPU), memory, and input/output ports, much like a Raspberry Pi.5 However, their defining feature is the inclusion of specialized hardware known as an AI accelerator.

If the CPU is the brain’s general manager—handling a wide variety of tasks from running the operating system to managing files—then the AI accelerator is the specialized neuro-circuitry dedicated to the heavy lifting of AI. This specialized silicon comes in several forms:

- GPU (Graphics Processing Unit): Originally designed for rendering graphics in video games, GPUs are masters of parallel processing. Their architecture, which consists of hundreds or thousands of simple cores, is perfectly suited for the massive matrix multiplication and vector operations that form the mathematical foundation of neural networks.6

- TPU (Tensor Processing Unit): A custom-designed Application-Specific Integrated Circuit (ASIC) developed by Google. An ASIC is a chip built from the ground up to do one thing exceptionally well. In this case, that one thing is executing the operations used in neural networks with incredible speed and power efficiency.7

- NPU (Neural Processing Unit): A more general term for a processor designed specifically to accelerate machine learning algorithms. Like TPUs, NPUs are built to handle the unique computational workloads of AI, offloading these tasks from the main CPU.9
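To make the “matrix multiplication” point concrete, here is a minimal sketch in plain Python, with no accelerator involved, of a single fully connected neural-network layer. Each output is an independent dot product of a weight row with the input. That independence is exactly the kind of work a GPU, TPU, or NPU spreads across many parallel units, whereas this loop computes the outputs one at a time. The function name and the numbers are illustrative only.

```python
def dense_layer(weights, inputs, bias):
    """Compute y = W·x + b for one fully connected layer.

    Each output neuron is an independent dot product, so an accelerator
    can compute all of them in parallel; here we simply loop.
    """
    outputs = []
    macs = 0  # multiply-accumulate (MAC) operations performed
    for row, b in zip(weights, bias):
        acc = b
        for w, x in zip(row, inputs):
            acc += w * x  # one multiply-accumulate
            macs += 1
        outputs.append(acc)
    return outputs, macs


# Illustrative 3-input, 2-output layer (made-up integer values).
W = [[1, -2, 3],
     [0, 4, -1]]
x = [2, 1, 3]
b = [1, -1]

y, macs = dense_layer(W, x, b)
print("outputs:", y, "MACs:", macs)
```

The MAC count grows as inputs × outputs per layer, which is why real networks need billions of these operations per inference. When vendors quote TOPS, they typically count each MAC as two operations: a multiply and an add.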

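Here is a summary table for AI accelerators:

| Accelerator | What it is | Why it suits AI |
|---|---|---|
| GPU | General-purpose parallel processor, originally designed for rendering graphics | Hundreds or thousands of simple cores handle the matrix multiplication and vector operations of neural networks in parallel |
| TPU | Google’s custom-designed ASIC | Built from the ground up to execute neural-network operations with high speed and power efficiency |
| NPU | General term for a processor designed to accelerate machine learning algorithms | Handles AI workloads directly, offloading them from the main CPU |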

By running AI models on these specialized accelerators, developers unlock three transformative benefits that define edge computing:

- Real-Time Processing: Performing calculations locally eliminates the round-trip delay of sending data to a cloud server and waiting for a response. For applications like autonomous robotics or real-time video analysis, cutting latency from a full network round trip down to local computation time is not just an improvement; it’s an enabling technology.1

- Enhanced Privacy and Security: When data is processed on the device, sensitive information—like video feeds from a home security camera or biometric data from a medical sensor—never has to leave the local network. This dramatically improves user privacy and reduces the attack surface for potential breaches.1

- Energy Efficiency: AI accelerators are optimized for performance-per-watt. They can perform complex AI tasks while consuming a fraction of the power of a general-purpose CPU, making them ideal for battery-operated devices like drones, wearables, and portable sensors.1
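The latency argument comes down to simple arithmetic. A camera running at a given frame rate leaves a fixed time budget for each frame, and a strictly sequential system must finish one frame before the next arrives. The sketch below checks whether a processing path meets that budget. The two latency figures are hypothetical placeholders, not measurements; substitute numbers measured on your own hardware and network.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame before the next one arrives."""
    return 1000.0 / fps


def fits_realtime(latency_ms: float, fps: float) -> bool:
    """True if processing one frame finishes before the next frame arrives.

    This is the strict, non-pipelined view that matters for control loops.
    """
    return latency_ms <= frame_budget_ms(fps)


# Hypothetical per-frame latencies (replace with your own measurements).
paths = {
    "cloud (network round trip + server inference)": 150.0,
    "edge (local inference on an accelerator)": 20.0,
}

fps = 30  # illustrative camera frame rate
print(f"Budget at {fps} fps: {frame_budget_ms(fps):.1f} ms")
for name, latency in paths.items():
    verdict = "responds within one frame" if fits_realtime(latency, fps) else "lags more than a frame behind"
    print(f"{name}: {latency:.0f} ms -> {verdict}")
```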

The Hardware-Software Symphony: A Rocket Analogy

Understanding the hardware is only one part of the equation. A powerful AI accelerator is useless without the right software to control it and the right AI model to run on it. To grasp how these elements work together, it’s helpful to think of building an edge AI project like launching a rocket.12

- The Board (Hardware): This is the rocket itself. It’s the physical structure, complete with the powerful engines (the GPU, TPU, or NPU) designed to achieve liftoff. A bigger rocket with more engines can carry a heavier payload, just as a more powerful board can run larger, more complex AI models.

- The AI Model & Data: This is the rocket fuel. An AI model, trained on vast amounts of data, is the potent energy source that drives the application. Without the right kind of fuel—a model that is compatible with and optimized for the rocket’s engines—the entire system is just an inert piece of metal on the launchpad.

- The Software (SDKs & Frameworks): This is the launch control system. It’s the complex web of drivers, libraries, and tools that manage the engines, interpret the flight plan, and guide the rocket to its destination. This software development kit (SDK) is what allows a developer to communicate with the hardware and deploy their model effectively.

```mermaid
---
config:
  theme: neo
  themeVariables:
    fontFamily: Open Sans
---
mindmap
  root((Edge AI Project))
    The Board (Hardware)
      The Rocket
      ::icon(fa fa-rocket)
      Structure & Engines
        ("GPU / TPU / NPU")
      Determines payload capacity
        (Model complexity)
    The AI Model & Data
      The Rocket Fuel
      ::icon(fa fa-fire)
      Trained on Data
        ("TensorFlow, PyTorch Models")
      Must be compatible with engines
        (e.g., TFLite for Coral)
    The Software (SDKs)
      Launch Control
      ::icon(fa fa-cogs)
      Drivers, Libraries, Tools
        ("JetPack, Hailo Suite, TFLite Runtime")
      Manages engines
      Guides the rocket
```

This analogy reveals a fundamental truth about this field: you are not just buying a piece of silicon; you are buying into an entire ecosystem. The hardware is often inextricably linked to its software. Google’s Coral platform, for instance, is built around its Edge TPU (the rocket engine) but is designed exclusively to run models created with TensorFlow Lite (the specific rocket fuel).13 NVIDIA’s powerful Jetson boards are defined by the comprehensive JetPack SDK (the launch control), which provides all the tools needed to harness their GPUs.15 The Raspberry Pi, a versatile rocket frame, derives its AI power from third-party engine modules, like the Hailo NPU, which comes with its own dedicated software suite.17

Therefore, choosing a board is a strategic decision that dictates your entire development workflow. A board with incredible hardware specifications but poor or limited software support is like a state-of-the-art rocket with no launch control—an impressive but ultimately useless piece of engineering. A successful project requires a perfect symphony between the hardware, the software, and the AI model.

Chapter 2: Meet the Contenders: The Titans of Tabletop AI

The market for AI development boards is vibrant and diverse, but three main contenders have emerged, each with a distinct philosophy, architecture, and ideal user. These are the platforms you are most likely to encounter, and understanding their unique strengths and weaknesses is the key to choosing the right tool for your project.

NVIDIA Jetson: The All-Round Powerhouse

If there is a professional’s choice in the world of edge AI, it is the NVIDIA Jetson family. Positioned as a line of compact “AI supercomputers,” Jetson boards are designed for maximum performance and flexibility, making them the go-to platform for developers building complex, multi-faceted AI applications that demand serious computational horsepower.1 While the family scales up to the formidable Jetson AGX Orin, the most accessible and modern entry point for most developers is the Jetson Orin Nano.6

The “brain” of the Jetson Orin Nano is a formidable piece of engineering. It combines a powerful multi-core Arm CPU for general-purpose tasks with a cutting-edge NVIDIA Ampere-architecture GPU. This isn’t just any GPU; it features 1,024 CUDA cores for parallel processing and 32 specialized Tensor Cores designed explicitly to accelerate the matrix operations at the heart of AI workloads.6 This potent combination allows the Orin Nano to deliver up to 40 trillion operations per second (TOPS) of AI performance, a peak figure for 8-bit integer math, making it a true powerhouse for its size.21

However, the true “mind” of the Jetson platform is its software ecosystem, encapsulated in the NVIDIA JetPack SDK.15 JetPack is far more than a collection of drivers; it’s a complete, end-to-end development environment. It includes Jetson Linux (an Ubuntu-based operating system with a full desktop environment), the CUDA toolkit for GPU programming, cuDNN for deep neural network primitives, and TensorRT, a high-performance inference optimizer that can substantially speed up models.10 This robust foundation supports virtually all major AI frameworks, including PyTorch and TensorFlow, giving developers exceptional flexibility. NVIDIA also provides high-level SDKs like DeepStream for building sophisticated multi-camera video analytics pipelines and Isaac for accelerating the development of robotics applications, offering a massive head start on complex projects.10

This combination of raw power and a mature software stack makes the Jetson Orin Nano the ideal platform for ambitious projects. It excels in applications like autonomous mobile robots that need to perform simultaneous localization and mapping (SLAM) while also running object detection, multi-camera security systems that analyze several video streams in real-time, and even on-device natural language processing for advanced conversational AI.11

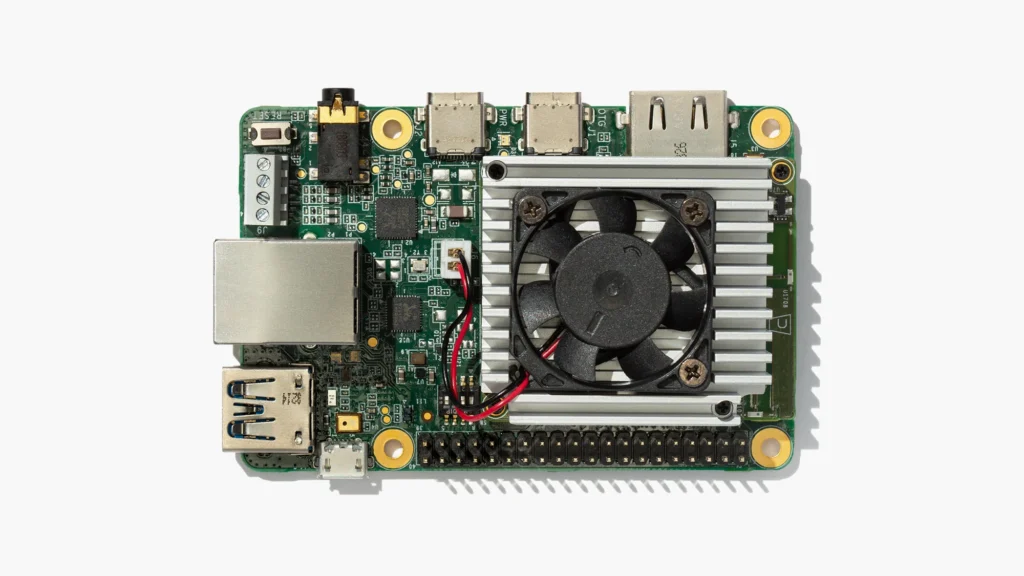

Google Coral: The Efficiency Expert

Where NVIDIA Jetson offers broad, flexible power, Google Coral delivers focused, hyper-efficient performance. The Coral platform is the master of specialization, designed for developers who need to squeeze the maximum amount of AI performance out of every watt of power.1 This makes it the perfect choice for applications in power-constrained or thermally sensitive environments, where efficiency is not just a feature but a requirement.

The brain of the Coral platform is the Google Edge TPU, a custom-designed ASIC.7 Unlike a general-purpose GPU, the Edge TPU was engineered with a single purpose: to execute neural network inference operations as fast and efficiently as possible. This singular focus allows it to achieve a remarkable 4 TOPS of performance while consuming only about 2 watts of power (an efficiency of 2 TOPS-per-watt).13 This hardware is available in two main form factors for developers: the Coral Dev Board, a self-contained single-board computer, and the Coral USB Accelerator, a dongle that can add the Edge TPU’s capabilities to any compatible system, including a Raspberry Pi.13

The mind of the Coral ecosystem is just as specialized as its hardware. The platform is built exclusively around TensorFlow Lite (TFLite), Google’s framework for deploying models on mobile and embedded devices.13 To run on the Edge TPU, a standard TensorFlow model must go through a specific pipeline. First, it is converted to the TFLite format. Then, it is “quantized,” a process that converts the model’s 32-bit floating-point weights and activations into more efficient 8-bit integers. Finally, it is compiled by the Edge TPU Compiler, which maps the model’s operations directly onto the Edge TPU’s hardware.27 This pipeline is the source of Coral’s incredible efficiency, but it’s also its primary limitation: if your model can’t be expressed in TFLite, you can’t use the accelerator at all, and any part of the model the compiler cannot map falls back to the much slower CPU.
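The quantization step is easier to picture with numbers. The sketch below implements the common affine (scale and zero-point) mapping from floating-point values to 8-bit integers in plain Python, so you can see both the compression and the small rounding error it introduces. It is an illustration of the idea only. In a real Coral workflow the TensorFlow Lite converter performs this step for you, and the helper names and weight values here are hypothetical.

```python
def quantization_params(values, qmin=-128, qmax=127):
    """Choose scale and zero-point so [min(values), max(values)] maps onto [qmin, qmax]."""
    lo = min(min(values), 0.0)  # range must include 0.0 so zero is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for an all-zero input
    zero_point = round(qmin - lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """float -> int8: q = round(x / scale) + zero_point, clamped to the int8 range."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """int8 -> float: x is approximately scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qvalues]


weights = [-0.9, -0.25, 0.0, 0.4, 1.2]  # illustrative float32 weights
scale, zp = quantization_params(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)

print("scale:", round(scale, 5), "zero_point:", zp)
print("int8:", q)
print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each value now occupies one byte instead of four, and the reconstruction error stays within about half a quantization step (`scale / 2`). This accuracy-for-efficiency trade is what makes integer-only hardware like the Edge TPU possible.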

This specialized nature makes Coral the undisputed champion for a specific class of projects. It is perfect for building smart security cameras that perform on-device person detection, intelligent bird feeders that can identify species in real-time, and countless other IoT devices that need to perform a well-defined AI task continuously without draining a battery or generating excess heat.11

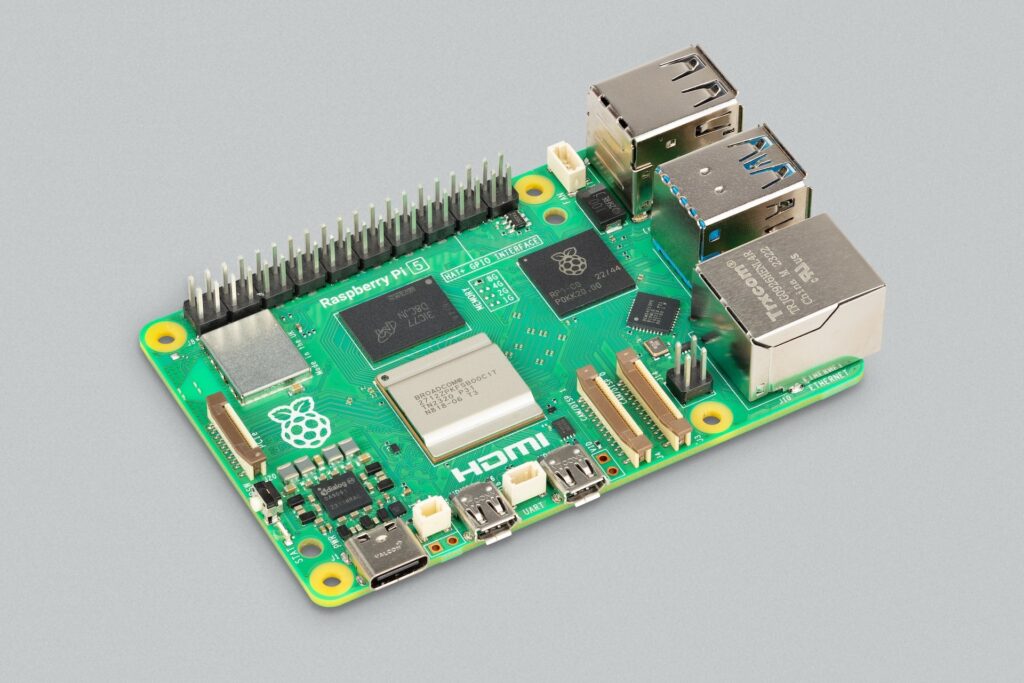

Raspberry Pi: The People’s Champion, Accelerated

For over a decade, the Raspberry Pi has been the heart of the maker movement—an accessible, affordable, and endlessly versatile tool for learning and creation. For most of its life, its AI capabilities were limited to running small models on its CPU. However, with the release of the Raspberry Pi 5, the platform has been elevated into a serious AI contender.5

The brain of this new AI-capable system starts with the Raspberry Pi 5’s quad-core Arm CPU.5 But the game-changing feature is its new PCI Express (PCIe) interface. PCIe is the same high-speed bus used for expansion cards in desktop PCs, and on the Pi 5 it gives add-on hardware a high-bandwidth, low-latency path into the system. This is a major step up from connecting accelerators over USB, and it has opened the door to a new ecosystem of powerful AI accelerators that can be attached directly to the Pi.9
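To put “high bandwidth” into numbers, the sketch below computes the usable throughput of a single PCIe lane from its transfer rate and line encoding, then compares it with the raw data rate of an uncompressed camera stream. It assumes the Pi 5’s single-lane link runs at PCIe Gen 2 (5 GT/s, 8b/10b encoding) by default, with Gen 3 (8 GT/s, 128b/130b) as an optional setting. It ignores protocol overhead beyond line encoding. The 640×640 RGB input at 30 fps is an illustrative assumption, roughly the size of a typical detection model’s input.

```python
def pcie_lane_mb_per_s(gigatransfers: float, payload_bits: int, total_bits: int) -> float:
    """Usable bandwidth of one PCIe lane in MB/s after line-encoding overhead."""
    gbit_per_s = gigatransfers * payload_bits / total_bits
    return gbit_per_s * 1000 / 8  # Gbit/s -> MB/s (decimal units)


def stream_mb_per_s(width: int, height: int, channels: int, fps: float) -> float:
    """Raw data rate of an uncompressed 8-bit image stream in MB/s."""
    return width * height * channels * fps / 1e6


gen2 = pcie_lane_mb_per_s(5.0, 8, 10)      # Gen 2: 8b/10b encoding
gen3 = pcie_lane_mb_per_s(8.0, 128, 130)   # Gen 3: 128b/130b encoding
frames = stream_mb_per_s(640, 640, 3, 30)  # illustrative detector input at 30 fps

print(f"PCIe Gen 2 x1: {gen2:.0f} MB/s")
print(f"PCIe Gen 3 x1: {gen3:.0f} MB/s")
print(f"640x640 RGB @ 30 fps: {frames:.1f} MB/s")
```

Under these assumptions, even the default Gen 2 link has more than ten times the headroom a single such stream needs. This suggests that PCIe’s advantage over USB comes as much from lower latency and protocol overhead as from raw bandwidth.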

This has led to the emergence of the “attachable lobe”—specialized AI accelerators that augment the Pi’s brain. The two most prominent choices are:

- The Official Choice: Raspberry Pi AI Kit with Hailo-8L: Recognizing the demand, Raspberry Pi partnered with AI chipmaker Hailo to release an official AI Kit. This bundle includes an M.2 HAT+ (an adapter board for the PCIe port) and a Hailo-8L NPU module.17 The Hailo-8L is a powerhouse in its own right, delivering an impressive 13 TOPS of performance. Crucially, it is also known for its exceptional power efficiency, rivaling that of the Google Coral.11 The tight integration with the Raspberry Pi ecosystem means it works seamlessly with the official camera software stack, including rpicam-apps and picamera2, allowing developers to easily build real-time vision applications.17

- The Classic Choice: Coral USB Accelerator: For years, the go-to method for adding AI power to a Pi was to plug in a Coral USB Accelerator.26 This combination remains a viable and popular option, a testament to the Raspberry Pi’s incredible flexibility and the strength of the Coral ecosystem for TFLite models.

The mind of an accelerated Raspberry Pi is dictated by the chosen accelerator. Using the official AI Kit means working within the Hailo AI Software Suite, which provides drivers and tools compatible with major frameworks like TensorFlow and PyTorch.18 Opting for the Coral USB stick means using the Edge TPU runtime and committing to the TensorFlow Lite workflow.33 Both of these run on top of the standard Raspberry Pi OS, giving developers access to a familiar Linux environment and the largest, most active, and most creative community in the world of computing.34

This unique blend of affordability, flexibility, and massive community support makes the accelerated Raspberry Pi the ultimate platform for tinkerers, educators, and developers moving from hobbyist to professional. It is the ideal choice for building custom home automation systems, DIY smart security cameras, creative robotics projects, and citizen science instruments where customization and community knowledge are paramount.30

Chapter 3: The Head-to-Head: Choosing Your AI Brain

With a clear picture of the main contenders, the next step is a direct comparison. Choosing the right board is a matter of trade-offs. Raw performance, power efficiency, software flexibility, and community support are all critical factors that must be weighed against the specific needs of your project.

The Contenders at a Glance

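For a quick overview, the following table summarizes the key differentiators between the leading platforms. This provides a high-level snapshot to anchor the more detailed discussion of the critical trade-offs involved.

| Platform | AI accelerator | Peak AI performance | Efficiency | Software | Community |
|---|---|---|---|---|---|
| NVIDIA Jetson Orin Nano | Ampere GPU (1,024 CUDA cores, 32 Tensor Cores) | Up to 40 TOPS | Built for maximum throughput; suited to a stable, high-wattage power source | JetPack SDK (CUDA, cuDNN, TensorRT); PyTorch, TensorFlow, and more | Professional: extensive documentation, forums staffed by NVIDIA engineers |
| Google Coral | Edge TPU (ASIC) | 4 TOPS | About 2 W (2 TOPS/W) | TensorFlow Lite only, compiled for the Edge TPU | Smaller, centered on TFLite at the edge |
| Raspberry Pi 5 + AI Kit | Hailo-8L NPU on an M.2 HAT+ (PCIe) | 13 TOPS | Rivals Coral; Hailo-8 architecture reaches up to 10 TOPS/W | Hailo AI Software Suite (TensorFlow, PyTorch, ONNX) on Raspberry Pi OS | Largest grassroots community |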

The “TOPS War”: Performance vs. Practicality

On paper, the battle for edge AI supremacy often looks like a “TOPS War,” where manufacturers boast about the trillions of operations per second their chips can perform. The Jetson Orin family, for example, can scale to a staggering 275 TOPS on the high-end AGX module, with the more accessible Nano variant offering a still-impressive 40 TOPS.11 However, for the edge developer, this headline number can be misleading. A far more critical metric is performance-per-watt, or TOPS/W.7

This reveals a fundamental difference in design philosophy. TOPS is a marketing metric; efficiency is an engineering constraint. For any device that will be untethered from a wall outlet or placed in a small, unventilated enclosure, power consumption and heat dissipation are the true physical limits. A 100 TOPS chip that requires a 50W power supply and a massive heatsink is a non-starter for a handheld scanner or a smart drone. This is where platforms like Hailo and Coral shine. The Hailo-8 architecture is a class leader in efficiency, achieving up to 10 TOPS/W, while the Coral Edge TPU offers a solid 2 TOPS/W.11 They are designed from the ground up for low-power applications. The NVIDIA Jetson, in contrast, is designed for maximum throughput, often in applications like industrial robotics or roadside computing units where a stable, high-wattage power source is readily available.

This means the first and most important question a developer must ask is not “How fast can it go?” but “What is my power budget?” This single constraint will do more to narrow the field of potential hardware than any other factor.
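The power-budget-first approach can be sketched in a few lines. The sketch uses two figures from this guide: the Coral Edge TPU’s 4 TOPS at about 2 W, and the hypothetical 100 TOPS chip on a 50 W supply, whose 50 W is treated here as its power draw (an assumption). It filters both against a budget and ranks whatever survives by TOPS/W. The `Accelerator` type, the `fits_budget` helper, and the 5 W budget are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Accelerator:
    name: str
    peak_tops: float    # vendor headline figure
    power_watts: float  # power draw assumed for this comparison

    @property
    def tops_per_watt(self) -> float:
        return self.peak_tops / self.power_watts


def fits_budget(candidates: List[Accelerator], budget_watts: float) -> List[Accelerator]:
    """Drop anything over the power budget, then rank the rest by TOPS/W."""
    viable = [c for c in candidates if c.power_watts <= budget_watts]
    return sorted(viable, key=lambda c: c.tops_per_watt, reverse=True)


candidates = [
    Accelerator("Coral Edge TPU", peak_tops=4, power_watts=2),
    # Hypothetical chip from the text: 100 TOPS, assumed to draw 50 W.
    Accelerator("Hypothetical 100 TOPS chip", peak_tops=100, power_watts=50),
]

for c in candidates:
    print(f"{c.name}: {c.peak_tops} TOPS, {c.tops_per_watt:.1f} TOPS/W")

battery_budget = 5  # watts; illustrative budget for a handheld device
print([c.name for c in fits_budget(candidates, battery_budget)])
```

Both entries land at 2 TOPS/W, yet only one survives a 5 W budget. The efficiency ratio helps rank the options, but the absolute power draw decides which ones are possible at all.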

The Software Straitjacket: Flexibility vs. Optimization

The second critical trade-off is between software freedom and specialized performance. This choice is best illustrated by what can be called the “golden handcuffs” of optimization.

Google’s Coral platform is the prime example. The Edge TPU’s incredible speed is a direct result of it being an ASIC, a piece of hardware physically designed to execute the operations found in TensorFlow Lite models.13 This provides a massive performance boost, but it comes at a cost: the developer is locked into the TFLite ecosystem. If a new, state-of-the-art model is released in PyTorch, it must first be converted to TFLite before the Edge TPU can run it. If a custom model uses an operation the Edge TPU compiler does not support, that part of the model falls back to the CPU and much of the acceleration is lost.34 These are the golden handcuffs: you get amazing, optimized performance, but you sacrifice the freedom to use other tools.

At the other end of the spectrum is the NVIDIA Jetson. Its GPU is a general-purpose parallel processor. The comprehensive CUDA ecosystem allows it to run a vast array of models from nearly any framework, including PyTorch, TensorFlow, and others.34 This offers the ultimate flexibility for developers who want to experiment, pivot to new model architectures, or work with the latest research. The accelerated Raspberry Pi, with a partner like Hailo, sits comfortably in the middle. Hailo’s software suite provides support for several major frameworks, including TensorFlow, PyTorch, and ONNX, offering a good balance between optimization and flexibility.18

The developer’s choice of hardware, therefore, dictates their entire software workflow and their options for future development. One must decide whether to commit to a specific, optimized framework for a guaranteed performance boost or to prioritize the flexibility to adapt and explore the rapidly evolving world of AI.
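The golden handcuffs become concrete when a model contains an unsupported operation. Coral’s documentation describes the Edge TPU Compiler as cutting the model graph only once. Everything before the first unsupported operation runs on the Edge TPU, and that operation and everything after it run on the CPU, even if later operations are supported. The sketch below mimics that rule on a model represented as a simple list of operation names. The supported-op set and the `CUSTOM_NORM` operation are hypothetical examples, not Coral’s actual op list.

```python
from typing import List, Set, Tuple

# Hypothetical subset of accelerator-supported ops, for illustration only.
SUPPORTED_OPS: Set[str] = {"CONV_2D", "DEPTHWISE_CONV_2D", "RELU", "ADD", "FULLY_CONNECTED"}


def partition_at_first_unsupported(ops: List[str], supported: Set[str]) -> Tuple[List[str], List[str]]:
    """Split a linear op sequence the way a single-cut compiler would.

    Ops before the first unsupported op go to the accelerator; that op and
    every op after it stay on the CPU, even if later ops are supported.
    """
    for i, op in enumerate(ops):
        if op not in supported:
            return ops[:i], ops[i:]
    return ops, []  # every op mapped to the accelerator


model = ["CONV_2D", "RELU", "CUSTOM_NORM", "CONV_2D", "FULLY_CONNECTED"]
on_tpu, on_cpu = partition_at_first_unsupported(model, SUPPORTED_OPS)
print("Edge TPU:", on_tpu)
print("CPU:     ", on_cpu)
print(f"{len(on_tpu)}/{len(model)} ops accelerated")
```

A single unsupported operation early in the network can leave most of the model on the CPU. This is why checking a model against the compiler’s supported operations before committing to Coral is worth the effort.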

Ecosystem and Community: Who Has Your Back?

Finally, no developer is an island. The quality of the ecosystem and community surrounding a platform can be the difference between a successful project and a frustrating dead end. Each of the major contenders offers a different flavor of support.

NVIDIA provides a professional, top-down ecosystem. It is characterized by extensive official documentation, enterprise-grade development and profiling tools, and highly active developer forums staffed by NVIDIA engineers.22 This is a support structure designed for professionals building commercial products.

Google Coral’s support is more product-focused. The official documentation includes excellent getting-started guides and a well-curated set of example projects.28 The community is smaller and more niche, centered on users who have bought into the TFLite-on-the-edge ecosystem.

The Raspberry Pi, unsurprisingly, boasts the largest, most diverse, and most creative grassroots community on the planet.34 Whatever problem a developer encounters, it is almost certain that someone else has already solved it and written a blog post, filmed a tutorial, or answered a question about it on a forum. This massive, bottom-up support network is arguably the platform’s greatest strength.

Chapter 4: Your First Project: From Zero to AI Hero

Getting started with edge AI can seem daunting, but the process is more accessible than ever. This chapter provides a high-level, conceptual walkthrough of a first project. It is not a line-by-line tutorial but rather a map of the territory, designed to demystify the workflow and make the journey from zero to a working AI application feel achievable.

The Three-Step Dance: Setup, Model, Inference

Regardless of the platform you choose, the fundamental workflow for a first project can be broken down into three main stages.

1. The Setup: Preparing the Brain

This initial phase is all about getting the hardware and base software running. For a Jetson board, this typically involves using NVIDIA’s SDK Manager on a host computer to flash the device with the latest JetPack, which installs the operating system and all necessary drivers.15 For a Raspberry Pi, it’s the familiar process of flashing Raspberry Pi OS to a microSD card.41 For a Coral Dev Board, it involves flashing its custom Mendel Linux OS.42 Once the OS is running, the next step is to install the core libraries needed to communicate with the AI accelerator. This could mean installing the pycoral library for a Coral device, the Hailo AI drivers for the Raspberry Pi AI Kit, or simply ensuring the tflite-runtime is present for a CPU-based Pi project.43
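Before moving on, it can help to confirm which accelerator runtimes Python can actually import on the board. The sketch below uses only the standard library (`importlib.util.find_spec`) to probe for a few module names. The module names are assumptions based on each vendor’s usual Python packaging: `pycoral` and `tflite_runtime` for Coral, `tensorrt` on Jetson, and `hailo_platform` for Hailo’s runtime. Check them against the documentation for the versions you installed.

```python
import importlib.util

# Assumed Python module names for each runtime; verify against your install.
RUNTIME_MODULES = {
    "Coral (PyCoral API)": "pycoral",
    "TensorFlow Lite runtime": "tflite_runtime",
    "NVIDIA TensorRT": "tensorrt",
    "Hailo runtime": "hailo_platform",
}


def detect_runtimes(modules=RUNTIME_MODULES):
    """Return {label: True/False} for whether each module can be imported."""
    return {label: importlib.util.find_spec(name) is not None
            for label, name in modules.items()}


if __name__ == "__main__":
    for label, found in detect_runtimes().items():
        print(f"{label:<25} {'found' if found else 'missing'}")
```

This only confirms that the Python bindings are installed. It does not prove that the accelerator hardware itself is detected, so run one of the vendor’s example scripts as the real end-to-end check.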

2. Acquiring Your Model: Installing the Mind

One of the biggest misconceptions for newcomers is the belief that they need to train a complex AI model from scratch. For a first project, this is almost never the case. The community has already done the heavy lifting. This step involves tapping into “model zoos,” online repositories of pre-trained models. The Hailo Model Zoo, for example, offers a wide range of models optimized for its hardware.45 The goal is to find a common, well-understood model, such as an object detection model like YOLOv5 or MobileNet SSD.30 Once the model is downloaded, some platforms require a final compilation or conversion step that maps its operations onto the specific accelerator so it runs as efficiently as possible.

3. Running Inference: The Moment of Thought

This is the final and most rewarding step. It typically involves writing a simple Python script that performs three actions: loads the optimized model into the memory of the AI accelerator, captures an image or video frame from a connected camera, and feeds that image to the model for “inference.” The model processes the image and returns a result—for an object detection model, this would be the coordinates of a bounding box around a detected object and a label identifying what it is (e.g., “person,” “car,” “dog”).41 Displaying this bounding box on a live video feed is the “Hello, World!” of edge AI—a tangible demonstration of a machine thinking for itself.
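The last part of that loop, turning raw model output into labeled boxes, is plain Python regardless of platform. The sketch below assumes a detector that returns, for each candidate, a class index, a confidence score, and a box given as normalized `[ymin, xmin, ymax, xmax]` coordinates in the 0 to 1 range. That layout is common to SSD-style TensorFlow Lite detectors, but check your own model’s documentation. The sketch keeps confident detections and converts their boxes to pixel coordinates ready for drawing. The label map, threshold, `Detection` type, and sample numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class Detection:
    label: str
    score: float
    box: Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels


def postprocess(class_ids: Sequence[int],
                scores: Sequence[float],
                boxes: Sequence[Sequence[float]],
                labels: Dict[int, str],
                frame_w: int,
                frame_h: int,
                threshold: float = 0.5) -> List[Detection]:
    """Keep detections at or above `threshold` and scale normalized boxes to pixels."""
    results = []
    for cid, score, (ymin, xmin, ymax, xmax) in zip(class_ids, scores, boxes):
        if score < threshold:
            continue  # discard low-confidence candidates
        box = (round(xmin * frame_w), round(ymin * frame_h),
               round(xmax * frame_w), round(ymax * frame_h))
        results.append(Detection(labels.get(cid, f"id {cid}"), score, box))
    return results


# Illustrative raw output for one 640x480 frame (made-up numbers).
LABELS = {0: "person", 1: "car", 2: "dog"}
dets = postprocess(class_ids=[0, 2, 1],
                   scores=[0.91, 0.30, 0.75],
                   boxes=[[0.1, 0.2, 0.9, 0.5], [0.0, 0.0, 0.2, 0.2], [0.5, 0.5, 1.0, 1.0]],
                   labels=LABELS, frame_w=640, frame_h=480)
for d in dets:
    print(d.label, round(d.score, 2), d.box)
```

The low-confidence “dog” candidate is dropped, and the two remaining boxes come out in pixel units. You could pass them straight to a drawing routine, such as an OpenCV rectangle call, to produce the live bounding-box overlay.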

A typical workflow for edge AI looks like this:

```mermaid
%%{init: {'theme': 'base', 'themeVariables': { 'fontFamily': 'Open Sans'}}}%%
graph TD
    subgraph "Phase 1: Development Environment"
        A[Start: Choose AI Board]
        B{OS & SDK Setup}
        C["Flash OS to Board<br><i>(JetPack, RPi OS, Mendel)</i>"]
        D["Install Core Libraries & Drivers<br><i>(CUDA, Hailo AI Suite, PyCoral)</i>"]
    end

    subgraph "Phase 2: AI Model Preparation"
        E["Acquire Pre-Trained Model<br><i>(e.g., from a Model Zoo)</i>"]
        F{Model Compatible?}
        G["Convert & Quantize Model<br><i>(e.g., to TFLite, ONNX)</i>"]
        H["Compile for Accelerator<br><i>(Map operations to TPU/NPU)</i>"]
        I[Find a Different Model]
    end

    subgraph "Phase 3: Application & Deployment"
        J[Write Inference Script in Python]
        K[Load Compiled Model into Accelerator]
        L["Capture Input Data<br><i>(e.g., Camera Frame)</i>"]
        M[Run Inference:<br><b>Feed Data to Model</b>]
        N["Process & Display Results<br><i>(e.g., Draw Bounding Box)</i>"]
        O[Success: AI runs on the Edge!]
    end

    A --> B;
    B --> C --> D;
    D --> E;
    E --> F;
    F -- Yes --> G;
    F -- No --> I --> E;
    G --> H;
    H --> J;
    J --> K --> L --> M --> N --> O;

    %% Styling
    classDef primary fill:#1e3a8a,stroke:#1e3a8a,stroke-width:2px,color:#ffffff;
    classDef success fill:#10b981,stroke:#10b981,stroke-width:2px,color:#ffffff;
    classDef decision fill:#f59e0b,stroke:#f59e0b,stroke-width:1px,color:#ffffff;
    classDef process fill:#0d9488,stroke:#0d9488,stroke-width:1px,color:#ffffff;
    classDef system fill:#8b5cf6,stroke:#8b5cf6,stroke-width:1px,color:#ffffff;

    class A primary;
    class B,F decision;
    class C,D,E,G,H,I,J,K,L,M,N process;
    class O success;
```

Your Launchpad: Official Project Repositories

The best way to learn is by doing, and the best way to start doing is by building on the work of others. Instead of following a static, potentially outdated tutorial, the most durable approach is to dive into the official, actively maintained project repositories for each platform. These are living collections of code that provide the best starting points for your own creations.

- For NVIDIA Jetson: The “Hello AI World” repository is a comprehensive collection of tutorials and examples that guide you through image classification, object detection, and segmentation using TensorRT. Beyond that, the broader Jetson Community Projects page showcases dozens of inspiring projects, from autonomous robots to real-time language translation.23

- For Google Coral: The official Coral examples repository on GitHub is the definitive launchpad. It contains clean, well-documented code for everything from PoseNet human pose estimation and real-time object tracking to person segmentation and keyphrase detection.28

- For Raspberry Pi with Hailo: The best place to start is with the examples integrated into Raspberry Pi’s own camera applications. The rpicam-apps repository includes built-in post-processing stages for the Hailo accelerator.17 For more advanced use cases, Hailo maintains its own repository of Raspberry Pi 5 examples that demonstrate detection, pose estimation, and segmentation.18

Chapter 5: The Horizon: What’s Next for Edge AI?

The world of AI development boards is not static; it is a rapidly evolving frontier. The platforms available today are already incredibly powerful, but the trends shaping the hardware and software of tomorrow promise to make on-device intelligence even more pervasive, efficient, and capable.

The Inevitable Shift to the Edge

The movement of AI from the cloud to the device is not a temporary fad; it is a fundamental architectural shift in computing. This transition is driven by undeniable real-world needs. The demand for lower latency in applications like robotics and autonomous vehicles, the growing imperative for user privacy in an age of constant data collection, and the physical constraint of battery life in mobile devices are all powerful forces pushing computation to the edge.1 As more industries, from healthcare and automotive to smart retail and industrial automation, adopt AI, the need for real-time, on-device intelligence will only grow, solidifying the importance of edge AI hardware.48

The Future of the “Brain”: Smaller, Smarter, More Diverse

The hardware itself is on a clear trajectory. While the “TOPS War” will likely continue for marketing purposes, the real engineering innovation will focus on two key areas: efficiency and specialization.11 The most important metric for the future of edge AI is not raw power but performance-per-watt.

This focus is leading to a diversification of AI processors rather than a convergence on a single dominant architecture. The future of edge AI hardware is not a single tool, but a heterogeneous toolbox. This toolbox will contain:

- High-performance GPUs, like NVIDIA’s, for complex, multi-task robotics and vision systems.11

- Ultra-low-power NPUs, like those from Hailo and Renesas, for “always-on” sensor applications in battery-powered devices.11

- Cost-effective Systems-on-a-Chip (SoCs) with integrated NPUs, like those from Rockchip, for mass-market multimedia devices and smart displays.50

- Adaptable FPGAs, like those from AMD/Xilinx, for applications requiring custom hardware pipelines alongside AI acceleration.11

- And versatile CPUs, which will remain the backbone for managing the entire system and running tasks not suited for specialized accelerators.49

In this future, the developer’s role will increasingly be that of a systems integrator. The key skill will not be finding a single, perfect “AI chip” for every problem, but rather selecting the right combination of processors from the toolbox to build the most effective and efficient solution for a specific task.

The Future of the “Mind”: Smaller Models and On-Device Learning

The software side of the equation is evolving just as rapidly. Researchers and companies are now developing smaller, more efficient AI models specifically designed to run on resource-constrained edge devices.48 This focus on model optimization is just as important as the hardware advancements.

The true holy grail of edge AI, however, is robust on-device training. Currently, most edge platforms are used for “inference”—running a model that has already been trained on a powerful server in the cloud. The ability to learn and adapt directly from new data on the device itself is still limited on most of today’s boards.51 Achieving true, continuous on-device learning without sacrificing performance or efficiency represents the next major frontier for the field.

Conclusion: Your Turn to Build

The journey from abstract, cloud-based AI to tangible, on-device intelligence has been a rapid one. The tools to build your own intelligent devices—the brains, the minds, and the ecosystems to support them—are more powerful, more affordable, and more accessible than ever before. The power to create a camera that recognizes faces, a robot that navigates a room, or a sensor that understands the world around it is no longer the exclusive domain of large corporations. It is on your workbench, waiting for your ingenuity. The choice of platform—be it the flexible power of a Jetson, the focused efficiency of a Coral, or the customizable and community-driven spirit of an accelerated Raspberry Pi—is simply the first step. The real adventure begins when you pick a board, dive into the community, and start building the future of AI, one device at a time.

References

1. AI-Powered Development Boards – Driving Innovation in Edge Computing – Xilabs, accessed July 16, 2025, https://xilabs.in/AI-Development-Boards

2. xilabs.in, accessed July 16, 2025, https://xilabs.in/AI-Development-Boards#:~:text=in%20Edge%20Computing-,AI%2DPowered%20Development%20Boards%20%2D%20Driving%20Innovation%20in%20Edge%20Computing,capabilities%20in%20compact%2C%20affordable%20packages.

3. What’s the best analogy for describing artificial intelligence? – Quora, accessed July 16, 2025, https://www.quora.com/Whats-the-best-analogy-for-describing-artificial-intelligence

4. About a mind / brain analogy regarding computers [closed] – Philosophy Stack Exchange, accessed July 16, 2025, https://philosophy.stackexchange.com/questions/11241/about-a-mind-brain-analogy-regarding-computers

5. Top 10 Embedded Development Boards for Robotics & Automation – The IoT Academy, accessed July 16, 2025, https://www.theiotacademy.co/blog/embedded-development-boards/

6. Top Development Boards in 2025 for AI, Robotics and IoT – Electromaker.io, accessed July 16, 2025, https://www.electromaker.io/blog/article/the-best-development-boards-for-every-project

7. Google Coral Edge TPU vs NVIDIA Jetson Nano: A quick deep dive into EdgeAI performance | by Sam Sterckval | Medium, accessed July 16, 2025, https://medium.com/@samsterckval/google-coral-edge-tpu-vs-nvidia-jetson-nano-a-quick-deep-dive-into-edgeai-performance-bc7860b8d87a

8. Dev Board datasheet | Coral, accessed July 16, 2025, https://coral.ai/docs/dev-board/datasheet/

9. Raspberry Pi AI Kit review – magazin Mehatronika, accessed July 16, 2025, https://magazinmehatronika.com/en/raspberry-pi-ai-kit-review/

10. JetPack SDK – NVIDIA Developer, accessed July 16, 2025, https://developer.nvidia.com/embedded/jetpack

11. Top 10 Edge AI Hardware for 2025 – Jaycon | Product Design, PCB & Injection Molding, accessed July 16, 2025, https://www.jaycon.com/top-10-edge-ai-hardware-for-2025/

12. Two Useful Analogies for Understanding and Working With AI – Sysabee, accessed July 16, 2025, https://www.sysabee.com/index.php/articles/two-useful-analogies-for-understanding-and-working-with-ai/

- Dev Board | Coral, access date July 16, 2025, https://coral.ai/products/dev-board/

- Products | Coral, access date July 16, 2025, https://coral.ai/products/

- JetPack SDK 5.1.1 – NVIDIA Developer, access date July 16, 2025, https://developer.nvidia.com/embedded/jetpack-sdk-511

- Introduction to NVIDIA JetPack SDK, access date July 16, 2025, https://docs.nvidia.com/jetson/jetpack/introduction/index.html

- Getting Started with the New AI HAT+ for the Raspberry Pi 5 – Buyzero, access date July 16, 2025, https://buyzero.de/blogs/news/getting-started-with-the-new-ai-hat-for-the-raspberry-pi-5

- hailo-ai/hailo-rpi5-examples – GitHub, access date July 16, 2025, https://github.com/hailo-ai/hailo-rpi5-examples

- Top AI Hardware Boards in 2025 – Artificial Intelligence University, access date July 16, 2025, https://aiu.ac/top-ai-hardware-boards-in-2025/

- Top Development Boards in 2025 for AI, Robotics and IoT – YouTube, access date July 16, 2025, https://www.youtube.com/watch?v=XneRrmRVgdQ

- Buy the Latest Jetson Products – NVIDIA Developer, access date July 16, 2025, https://developer.nvidia.com/buy-jetson

- Jetson AGX Orin for Next-Gen Robotics – NVIDIA, access date July 16, 2025, https://www.nvidia.com/en-au/autonomous-machines/embedded-systems/jetson-orin/

- Jetson Community Projects – NVIDIA Developer, access date July 16, 2025, https://developer.nvidia.com/embedded/community/jetson-projects

- Community Projects – NVIDIA Jetson AI Lab, access date July 16, 2025, https://www.jetson-ai-lab.com/community_articles.html

- Dev Board Mini | Coral, access date July 16, 2025, https://coral.ai/products/dev-board-mini/

- USB Accelerator | Coral, access date July 16, 2025, https://coral.ai/products/accelerator/

- How to deploy a tensorflow lite model on a low resource edge device – General Discussion, access date July 16, 2025, https://discuss.ai.google.dev/t/how-to-deploy-a-tensorflow-lite-model-on-a-low-resource-edge-device/29468

- Examples | Coral, access date July 16, 2025, https://coral.ai/examples/

- 12 Google Coral Dev Board Projects & Tutorials for Beginners and Up – Hackster.io, access date July 16, 2025, https://dev.hackster.io/google/products/coral-dev-board?ref=project-f5f056

- Raspberry Pi AI Kit Review: Brainiac | Tom’s Hardware, access date July 16, 2025, https://www.tomshardware.com/raspberry-pi/raspberry-pi-ai-kit-review

- Testing Raspberry Pi’s AI Kit – 13 TOPS for $70 | Jeff Geerling, access date July 16, 2025, https://www.jeffgeerling.com/blog/2024/testing-raspberry-pis-ai-kit-13-tops-70

- Image classification with Google Coral | AI & Data | Coding projects for kids and teens, access date July 16, 2025, https://projects.raspberrypi.org/en/projects/image-id-coral

- Coral Edge TPU on a Raspberry Pi with Ultralytics YOLO11, access date July 16, 2025, https://docs.ultralytics.com/guides/coral-edge-tpu-on-raspberry-pi/

- [D] Would you prefer Google coral to Raspberry Pi 4, or Jetson Nano? – Reddit, access date July 16, 2025, https://www.reddit.com/r/MachineLearning/comments/c876nx/d_would_you_prefer_google_coral_to_raspberry_pi_4/

- Course on AI Kit + Raspberry Pi, access date July 16, 2025, https://forums.raspberrypi.com/viewtopic.php?t=377004

- Raspberry Pi + AI integrations : r/raspberry_pi – Reddit, access date July 16, 2025, https://www.reddit.com/r/raspberry_pi/comments/1iw74jy/raspberry_pi_ai_integrations/

- Is Google Coral worthy to buy? Better than Rasp Pi 4 or Jetson Nano? | by Artificial – Medium, access date July 16, 2025, https://medium.com/@deve321/is-google-coral-worthy-to-buy-better-than-rasp-pi-4-or-jetson-nano-819cac61a537

- Nvidia jetson nano or raspberry pi 4 + google coral usb accelerator : r/JetsonNano – Reddit, access date July 16, 2025, https://www.reddit.com/r/JetsonNano/comments/jouz00/nvidia_jetson_nano_or_raspberry_pi_4_google_coral/

- Latest Jetson Projects topics – NVIDIA Developer Forums, access date July 16, 2025, https://forums.developer.nvidia.com/c/robotics-edge-computing/jetson-embedded-systems/jetson-projects/78

- google-coral – GitHub, access date July 16, 2025, https://github.com/google-coral

- How to Set Up Raspberry Pi 5 for AI Projects & Machine Learning – Elecrow, access date July 16, 2025, https://www.elecrow.com/blog/setup-raspberry-pi-5-for-ai-projects-and-machine-learning.html

- Get started with the Dev Board – Coral, access date July 16, 2025, https://coral.ai/docs/dev-board/get-started/

- Build a Smart AI Assistant with Your Raspberry Pi (Easier Than You Think) – Pidora, access date July 16, 2025, https://pidora.ca/build-a-smart-ai-assistant-with-your-raspberry-pi-easier-than-you-think/

- RPi Compact AI Camera Feat. Coral USB Accelerator : 5 Steps (with Pictures) – Instructables, access date July 16, 2025, https://www.instructables.com/RPi-Compact-AI-Camera-Feat-Coral-USB-Accelerator/

- Hailo Raspberry Pi AI Kit and Python code examples – General, access date July 16, 2025, https://community.hailo.ai/t/hailo-raspberry-pi-ai-kit-and-python-code-examples/3467

- Get started with the Dev Board Micro | Coral, access date July 16, 2025, https://coral.ai/docs/dev-board-micro/get-started/

- Getting Started With the Hailo AI Kit For Raspberry Pi 5 – YouTube, access date July 16, 2025, https://www.youtube.com/watch?v=9UCOQj6ep2A

- AI shifts to the edge as smaller models and smarter chips redefine compute, access date July 16, 2025, https://www.edgeir.com/ai-shifts-to-the-edge-as-smaller-models-and-smarter-chips-redefine-compute-20250715

- Edge AI Hardware Industry worth $58.90 billion by 2030 – MarketsandMarkets, access date July 16, 2025, https://www.marketsandmarkets.com/PressReleases/edge-ai-hardware.asp

- Top 10 Hardware Platforms for Embedded AI in 2025 – Promwad, access date July 16, 2025, https://promwad.com/news/top-hardware-platforms-embedded-ai-2025

- Deep learning with Raspberry Pi and alternatives in 2024 – Q-engineering, access date July 16, 2025, https://qengineering.eu/deep-learning-with-raspberry-pi-and-alternatives.html

- Commercial comparison Nvidia Jetson Nano / Raspberry Pi + Coral USB / Coral Devboard, access date July 16, 2025, https://pi3g.com/commercial-comparison-nvidia-jetson-nano-raspberry-pi-coral-usb-coral-devboard/