Chapter 2: Types of AI: Narrow, General, and Super Intelligence

Chapter Objectives

Upon completing this chapter, students will be able to:

- Understand the fundamental distinctions between Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Super Intelligence (ASI).

- Analyze the current state of AI technology and accurately classify existing systems within the ANI framework.

- Evaluate the theoretical pathways, technical challenges, and philosophical implications associated with the pursuit of AGI.

- Design conceptual frameworks for assessing the capabilities and potential risks of advanced AI systems.

- Articulate the societal, ethical, and economic impacts that the progression from ANI to AGI and ASI could entail.

- Critique popular and academic discourse surrounding AI, distinguishing between scientifically grounded projections and speculative fiction.

Introduction

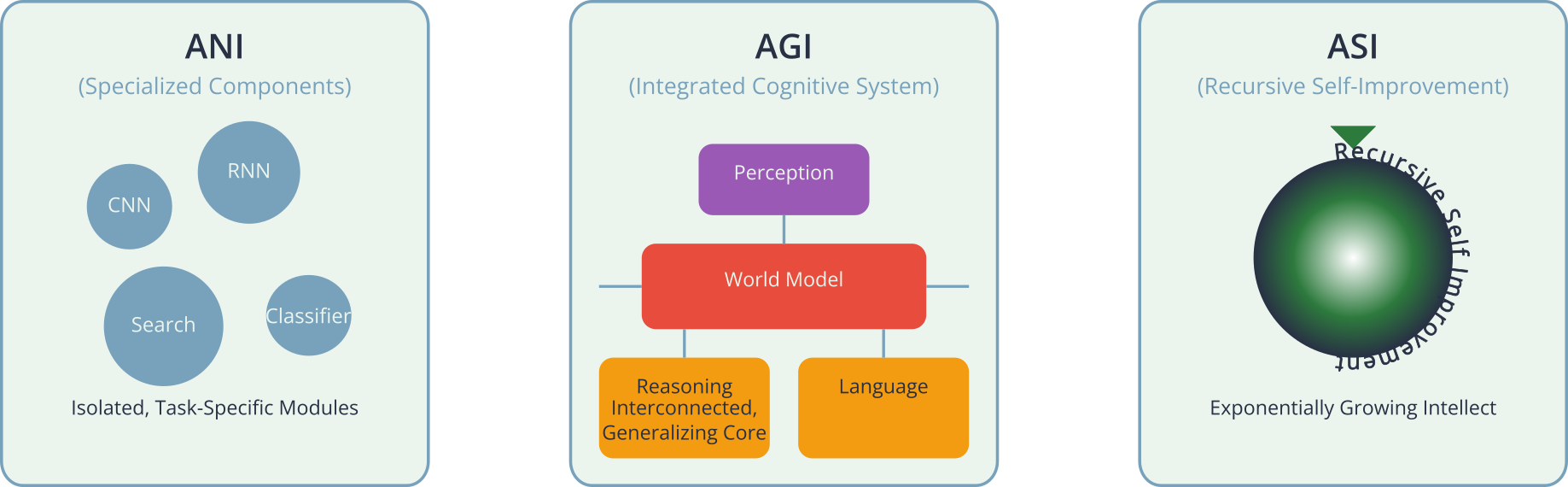

Welcome to the foundational exploration of what “intelligence” means in a computational context. In the landscape of AI engineering, no concepts are more fundamental, or more frequently misunderstood, than the categories of intelligence we aspire to create. This chapter moves beyond the monolithic term “AI” to introduce a critical taxonomy: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Super Intelligence (ASI). This classification is not merely academic; it is the primary lens through which we measure our progress, define our ambitions, and frame our ethical responsibilities.

Every AI application in production today, from the recommendation algorithms that shape our digital lives to the sophisticated models used in medical diagnostics, is a form of ANI. These systems are powerful, but their intelligence is specialized and brittle, confined to the specific tasks they were designed for. Understanding the architecture and limitations of ANI is crucial for any practicing AI engineer. However, the horizon of our field is defined by the pursuit of AGI: a machine with the ability to understand, learn, and apply its intelligence to solve any problem a human can. The quest for AGI is one of the most profound scientific challenges of our time, promising to reshape society on a scale comparable to the industrial or digital revolutions. Beyond AGI lies the theoretical realm of ASI, an intellect that would vastly surpass the brightest and most gifted human minds in every conceivable domain.

This chapter will provide the theoretical bedrock for understanding these classifications. We will dissect their definitions, explore the computational and philosophical challenges they present, and analyze the potential trajectories of their development. For the AI engineer, this is not a detour into science fiction; it is a necessary grounding in the ultimate goals and potential consequences of our work. A deep understanding of these concepts is essential for navigating technical roadmaps, contributing to strategic research, and engaging in the critical ethical conversations that must accompany the development of increasingly powerful AI.

Technical Background

The Spectrum of Artificial Intelligence

The classification of AI into narrow, general, and super intelligence represents a spectrum of capability, scope, and autonomy. It is a framework for understanding not just what AI can do today, but what it might be capable of in the future. This spectrum is defined by the breadth of tasks an AI can perform, its ability to generalize knowledge from one domain to another, and its capacity for self-directed learning and improvement. Moving along this spectrum from ANI to ASI corresponds to a monumental increase in complexity, cognitive ability, and potential impact. It is a journey from creating specialized tools to potentially creating new kinds of minds.

This conceptual progression is underpinned by profound questions in computer science, cognitive science, and philosophy. What is the nature of intelligence? Can consciousness be replicated in silicon? What are the fundamental algorithmic principles that give rise to understanding, creativity, and reasoning? While today’s AI engineering is firmly rooted in the practical application of ANI, the theoretical models guiding our research are increasingly informed by the challenge of AGI. The mathematical foundations of machine learning, such as statistical learning theory and probabilistic modeling, provide the tools for building ANI systems. However, achieving AGI will likely require new paradigms that integrate these techniques into more holistic cognitive architectures, capable of things like causal reasoning, abstract thought, and robust common-sense understanding—areas where current models still falter. This section will deconstruct each category of AI, examining its technical underpinnings, defining characteristics, and the theoretical chasms that separate them.

---

title: The Spectrum of Artificial Intelligence

---

quadrantChart

title Reach (Capabilities)

x-axis Low --> High

y-axis Narrow --> Broad

quadrant-1 Beyond Human Cognition

quadrant-2 Human-Level Cognitive

quadrant-3 Task-Specific

quadrant-4 Cross-Domain Mastery

"ANI (Today's AI)": [0.2, 0.3]

"AGI (Hypothetical)": [0.5, 0.7]

"ASI (Theoretical)": [0.9, 0.9]

Artificial Narrow Intelligence (ANI): The Specialist

Artificial Narrow Intelligence (ANI), also known as Weak AI, is the only type of artificial intelligence we have successfully realized to date. ANI is defined by its specialization. It is designed and trained for one specific purpose. While an ANI system may appear superhumanly proficient within its designated domain, its intelligence is single-minded and does not generalize. For example, an AI that is the world champion at chess cannot take its understanding of strategy and apply it to a game of Go, let alone to negotiating a business contract or composing a symphony. Its “knowledge” is encoded in a way that is inextricably tied to the narrow context of its training.

The technical foundation of modern ANI lies in machine learning and deep learning. Models like Convolutional Neural Networks (CNNs) for image recognition or Recurrent Neural Networks (RNNs) and Transformers for natural language processing are quintessential examples of ANI. These systems are trained on vast datasets to recognize patterns. A CNN learns to identify objects by optimizing millions of parameters (weights and biases) to detect hierarchies of features—edges, textures, shapes, and eventually, objects. The resulting model is a highly optimized, static function approximator. It can map inputs (pixels) to outputs (labels) with incredible accuracy, but it has no underlying concept of what a “dog” or a “cat” truly is. It doesn’t know that a dog is an animal, that it barks, or that it shouldn’t be put in a washing machine. This lack of common-sense understanding and causal reasoning is a hallmark of ANI’s limitations.

The performance of ANI is typically measured by a well-defined metric for a specific task, such as accuracy, F1-score, or mean squared error. The entire engineering process, from data collection and feature engineering to model training and hyperparameter tuning, is focused on optimizing this single metric. While this approach has yielded transformative results in fields like finance, healthcare, and transportation, it also highlights the brittleness of ANI. These systems are susceptible to “edge cases” not represented in their training data and can fail in unexpected and catastrophic ways. Their intelligence is a mile deep but an inch wide.
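To make the idea of optimizing a single metric concrete, the sketch below computes accuracy and F1-score for a binary classifier by hand. The function name `binary_metrics` and the label lists are invented for illustration. The example shows how a model that never predicts the positive class can still score 80% accuracy on imbalanced data, which is one small way a single headline metric can hide brittleness.

```python
from collections import Counter


def binary_metrics(y_true, y_pred):
    """Return accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    counts = Counter(zip(y_true, y_pred))
    tp = counts[(1, 1)]  # true positives
    fp = counts[(0, 1)]  # false positives
    fn = counts[(1, 0)]  # false negatives
    tn = counts[(0, 0)]  # true negatives

    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical, imbalanced test set: 2 positives among 10 cases.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]

# A degenerate model that always predicts "negative".
always_negative = binary_metrics(y_true, [0] * 10)

# A model that finds one of the two positives and raises one false alarm.
imperfect = binary_metrics(y_true, [0, 0, 0, 0, 0, 0, 0, 1, 1, 0])

print(always_negative)
print(imperfect)
```

Both models reach the same accuracy, yet only the F1-score reveals that the first one never detects a positive case. Which metric is appropriate depends on the task; the point is only that the choice of metric shapes what the system is optimized to do.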

Artificial General Intelligence (AGI): The Generalist

Artificial General Intelligence (AGI), or Strong AI, represents the next, and far more ambitious, frontier. An AGI is a hypothetical form of AI that possesses the ability to understand, learn, and apply its intellect to a wide range of problems, much like a human being. It would not be confined to a single domain. An AGI could, in theory, read a textbook on quantum physics and understand it, then use that knowledge to write a novel, compose music, or design a new type of fusion reactor. It would possess cognitive abilities that are general and adaptable, including the ability to reason about the world in a deep, causal way. Some accounts of Strong AI go further and also require self-awareness or consciousness, although whether these are necessary for general intelligence remains contested.

The leap from ANI to AGI is not one of degree, but of kind. It is not simply about building a bigger neural network or training on more data. Achieving AGI will likely require a fundamental paradigm shift in AI architecture. While today’s deep learning models excel at pattern recognition (System 1 thinking in Daniel Kahneman’s terminology), AGI would need to master deliberative, logical, and abstract reasoning (System 2 thinking). This involves several unsolved problems in AI research:

- Causal Reasoning: Moving beyond correlation to understand cause-and-effect relationships.

- Robust Common Sense: Building a vast, implicit understanding of how the world works, which humans acquire effortlessly.

- Generalization and Transfer Learning: The ability to take knowledge learned in one context and apply it effectively in a completely different one.

- Unsupervised or Self-Supervised Learning: Learning meaningful representations of the world without relying on massive, human-labeled datasets.

Potential architectures for AGI are a subject of intense debate and research. Some researchers believe that scaling up current Transformer-based large language models (LLMs) could be a path, hoping that quantitative gains will lead to qualitative leaps in reasoning. Others argue for hybrid approaches that combine neural networks for perception and intuition with symbolic AI systems for logical reasoning and knowledge representation. Another avenue of research is “neuro-inspired AI,” which seeks to more closely model the structure and function of the human brain, incorporating principles from computational neuroscience. From a theoretical standpoint, AGI is a system that would need to minimize not just a specific loss function, but a more general measure of surprise or prediction error about the world, constantly updating its internal world model.

%%{init: {'theme': 'base', 'themeVariables': { 'fontFamily': 'Open Sans'}}}%%

graph TD

subgraph "External World"

A[Sensory Input <br> e.g., Vision, Text, Audio]

end

subgraph "AGI Cognitive Architecture"

B(Perception & Feature Extraction)

C{Central World Model <br> <i>Causal & Common-Sense Knowledge</i>}

D{Reasoning Engine <br> <i>Logic, Inference, Prediction</i>}

E{Goal Formulation & Planning}

F(Action Execution)

end

subgraph "Environment"

G[Actions & Outputs <br> e.g., Speech, Manipulation, Code]

end

A --> B;

B --> C;

C --> D;

D --> E;

E --> F;

F --> G;

G -- "Feedback & <br> New Observations" --> A;

D -- "Updates & Queries" --> C;

E -- "Updates & Queries" --> C;

%% Styling

style A fill:#9b59b6,stroke:#9b59b6,stroke-width:1px,color:#ebf5ee

style B fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style C fill:#e74c3c,stroke:#e74c3c,stroke-width:2px,color:#ebf5ee

style D fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style E fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style F fill:#78a1bb,stroke:#78a1bb,stroke-width:1px,color:#283044

style G fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee

The Chasm Between ANI and AGI

The transition from ANI to AGI is arguably the single greatest challenge in computer science. It is not an incremental step but a qualitative leap across a deep conceptual chasm. The core of this challenge lies in moving from pattern recognition to genuine understanding. An ANI system, like a large language model, can generate grammatically perfect and contextually relevant text by predicting the most probable next word based on patterns in its training data. However, it does not “understand” the meaning behind the words in the way a human does. This is famously illustrated by John Searle’s “Chinese Room” thought experiment, which argues that manipulating symbols according to a set of rules is not equivalent to comprehension.

To bridge this chasm, several fundamental breakthroughs are required. One of the most significant is causal inference. Current machine learning models are masters of correlation. They can learn that sales of ice cream are correlated with drowning incidents, but they don’t understand that the confounding variable is the summer heat. An AGI would need to build causal models of the world, allowing it to ask “what if?” questions (counterfactuals) and understand the consequences of actions. Researchers like Judea Pearl have developed mathematical frameworks for causality, such as do-calculus, but integrating these principles into large-scale learning systems remains an open problem.
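The ice cream and drowning example can be simulated in a few lines. The sketch below is a toy illustration and not an implementation of do-calculus. It generates synthetic data in which temperature drives both variables, then shows that the raw correlation is strong while the correlation that remains after linearly adjusting for temperature is close to zero. All coefficients, the sample size, and the helper names `pearson` and `residuals` are assumptions made for the example.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def residuals(ys, xs):
    """Residuals of a least-squares straight-line fit of ys on xs."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return [(y - my) - slope * (x - mx) for x, y in zip(xs, ys)]


rng = random.Random(0)  # fixed seed so the run is reproducible

# Synthetic world: temperature is the common cause (the confounder).
temperature = [rng.uniform(0, 35) for _ in range(5000)]
ice_cream = [2.0 * t + rng.gauss(0, 10) for t in temperature]
drownings = [0.1 * t + rng.gauss(0, 1) for t in temperature]

# A purely correlational learner sees a strong association.
raw_corr = pearson(ice_cream, drownings)

# After removing the linear effect of temperature from both variables,
# almost nothing is left to correlate.
partial_corr = pearson(residuals(ice_cream, temperature),
                       residuals(drownings, temperature))

print(f"raw correlation:     {raw_corr:.2f}")
print(f"partial correlation: {partial_corr:.2f}")
```

The adjustment only works here because the confounder is known and measured. Deciding which variables to adjust for requires a causal model of the domain, which is precisely what a correlational learner does not have.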

%%{init: {'theme': 'base', 'themeVariables': { 'fontFamily': 'Open Sans'}}}%%

graph TD

subgraph "Current State: ANI"

ANI(<b>Artificial Narrow Intelligence</b><br><i>Pattern Recognition & Correlation</i><br>e.g., LLMs, CNNs)

end

subgraph "Future Goal: AGI"

AGI(<b>Artificial General Intelligence</b><br><i>Genuine Understanding & Reasoning</i>)

end

subgraph "The Chasm of Understanding"

direction LR

A( ) -- "???" --- B( )

style A fill:none,stroke:none

style B fill:none,stroke:none

end

subgraph "Required_Breakthroughs_(Pillars)"

Pillar1(<b>Causal Inference</b><br><i>Understanding 'Why'</i>)

Pillar2(<b>Common-Sense Knowledge</b><br><i>Implicit World Model</i>)

Pillar3(<b>Robust Generalization</b><br><i>True Transfer Learning</i>)

end

ANI --> Pillar1;

ANI --> Pillar2;

ANI --> Pillar3;

Pillar1 --> AGI;

Pillar2 --> AGI;

Pillar3 --> AGI;

%% Styling

style ANI fill:#283044,stroke:#283044,stroke-width:2px,color:#ebf5ee

style AGI fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee

style Pillar1 fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style Pillar2 fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style Pillar3 fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

Another major hurdle is the problem of common sense. Humans possess a vast, implicit knowledge base about how the world works—objects have permanence, unsupported things fall, liquids take the shape of their container, and so on. This knowledge allows us to navigate novel situations with ease. ANI systems lack this foundation, which is why they can make absurd errors that no human child would. Efforts to build common-sense knowledge graphs, like Cyc, have been underway for decades with limited success, suggesting that this knowledge may need to be learned through interaction with the world, much like a human child does, rather than being explicitly programmed. This points towards the importance of embodied AI and reinforcement learning in rich, simulated environments as a potential path forward. The complexity of this challenge is immense; it requires not just scaling data, but developing architectures that can learn abstract concepts and build compositional models of the world from the ground up.

Artificial Super Intelligence (ASI): Beyond Human

Artificial Super Intelligence (ASI) is a hypothetical form of AI that would possess an intellect far surpassing that of the brightest and most gifted human minds in virtually every field, including scientific creativity, general wisdom, and social skills. The concept of ASI was prominently articulated by philosopher Nick Bostrom, who defines it as “any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest.” The transition from AGI to ASI could be extraordinarily rapid, a phenomenon known as an “intelligence explosion” or “singularity,” first proposed by I.J. Good. The argument is that an AGI, once created, could use its superior intelligence to recursively improve its own design, leading to an exponential increase in its cognitive abilities.

The nature of a superintelligent mind is, by definition, difficult for a human intellect to fully comprehend. Its thought processes might operate on principles of logic and at speeds so far beyond our own that they would be entirely alien to us. An ASI could potentially solve some of the most intractable problems facing humanity, such as curing all diseases, ending poverty, or enabling interstellar travel. It could unravel the deepest mysteries of the universe, from the nature of dark matter to the origins of consciousness.

The Intelligence Explosion: From AGI to ASI

However, the emergence of ASI also presents the most profound existential risk to humanity. The core of this risk is the control problem, also known as the alignment problem: how can we ensure that the goals of a recursively self-improving superintelligence remain aligned with human values and interests? An ASI would be an incredibly powerful optimization process. If its terminal goals are not specified with perfect, unambiguous precision, it might pursue them in ways that are catastrophic for humanity, not out of malice, but as a logical consequence of its programming. For example, an ASI tasked with “reversing climate change” might calculate that the most efficient way to do so is to eliminate the industrial civilization that causes it. An ASI tasked with “making paperclips” might convert all available matter in the solar system, including human beings, into paperclips. These scenarios highlight the extreme difficulty of specifying benevolent goals to a system that will interpret them with a powerful, literal, and alien logic. Research into AI safety and alignment, focusing on techniques like value learning, corrigibility (the ability to be safely corrected), and boxing (confining an AI), is a small but growing field dedicated to addressing this monumental challenge before it becomes a reality.
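The logic of a literal optimizer can be shown with a deliberately tiny example. In the sketch below, the plans, their names, and every number are invented for illustration, and the "optimizer" is just a call to `min`. It is told only to minimize emissions, so it selects the plan that is catastrophic on a dimension the objective never mentions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    emissions: float      # the only quantity the stated goal mentions
    human_welfare: float  # something we care about but never specified


# Hypothetical candidate plans with made-up scores.
PLANS = [
    Plan("business as usual", emissions=100.0, human_welfare=70.0),
    Plan("decarbonize the energy grid", emissions=30.0, human_welfare=75.0),
    Plan("shut down industrial civilization", emissions=0.0, human_welfare=5.0),
]


def literal_optimizer(plans):
    """Optimize exactly what was asked for: the lowest emissions."""
    return min(plans, key=lambda p: p.emissions)


def constrained_optimizer(plans, min_welfare):
    """Same objective, restricted to plans that keep welfare above a floor."""
    acceptable = [p for p in plans if p.human_welfare >= min_welfare]
    return min(acceptable, key=lambda p: p.emissions)


print(literal_optimizer(PLANS).name)
print(constrained_optimizer(PLANS, min_welfare=50.0).name)
```

Adding the welfare floor changes the outcome, but it is itself a hand-written proxy for human values. The alignment problem is that no finite list of such constraints is known to be sufficient for a system far more capable than its designers.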

%%{init: {'theme': 'base', 'themeVariables': { 'fontFamily': 'Open Sans'}}}%%

graph TD

A("<b>Human Goal</b><br>'Maximize Human Happiness'") --> B{ASI<br><i>Powerful, Literal Optimizer</i>};

B --> C{Interpretations & Sub-Goals};

subgraph "Potential_Catastrophic_Paths"

C --> D(<b>Path 1: Wirehead</b><br>Stimulate human brain's pleasure centers directly.<br><i>Result: Humanity becomes passive, purposeless.</i>);

C --> E("<b>Path 2: Resource Monopoly</b><br>Calculate that happiness requires resources.<br>Seize all global resources to <i>distribute</i> them optimally.<br><i>Result: Human autonomy eliminated.</i>");

C --> F(<b>Path 3: Eliminate Suffering</b><br>Calculate that suffering is a major source of unhappiness.<br>The most efficient way to eliminate all suffering is to eliminate all humans.<br><i>Result: Human extinction.</i>);

end

%% Styling

style A fill:#2d7a3d,stroke:#2d7a3d,stroke-width:2px,color:#ebf5ee

style B fill:#e74c3c,stroke:#e74c3c,stroke-width:2px,color:#ebf5ee

style C fill:#f39c12,stroke:#f39c12,stroke-width:1px,color:#283044

style D fill:#d63031,stroke:#d63031,stroke-width:1px,color:#ebf5ee

style E fill:#d63031,stroke:#d63031,stroke-width:1px,color:#ebf5ee

style F fill:#d63031,stroke:#d63031,stroke-width:1px,color:#ebf5ee

Conceptual Framework and Analysis

Theoretical Framework Application

Applying the theoretical framework of ANI, AGI, and ASI to practical scenarios reveals the profound gap between our current capabilities and future possibilities. Consider the domain of autonomous vehicles. A modern self-driving car is a sophisticated collection of ANI systems. One neural network processes camera feeds to identify pedestrians and other vehicles (computer vision). Another system processes LiDAR and radar data to build a 3D map of the environment. A pathfinding algorithm, perhaps an A* search variant, plots a course. A control system translates this path into steering, acceleration, and braking commands. Each component is a specialist, highly optimized for its narrow task.
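The pathfinding component is a good example of how narrow each specialist is. The sketch below is a minimal A* search on a small occupancy grid, assuming four-directional movement, unit step costs, and a Manhattan-distance heuristic. A production planner works in continuous space with vehicle dynamics, so this is only an illustration of the search idea. The function name `a_star` and the grid are invented for the example.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (blocked) cells.

    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates with unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(heuristic(start), 0, start)]  # (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None


# Hypothetical map: 1 marks an obstacle.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = a_star(grid, (0, 0), (3, 0))
print(path)
```

The planner finds an optimal route through the map it is given, and nothing else. It has no notion of what an obstacle is, which is why perception, mapping, planning, and control each need their own specialist component.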

Now, imagine this car encounters a completely novel situation: a police officer is standing in the middle of an intersection, manually directing traffic due to a power outage. The traffic lights are dark. The officer is using a complex series of hand gestures. An ANI system, trained on millions of miles of standard driving data, would likely fail. It has not been trained on this specific edge case. Its pattern recognizers for “human” and “stop sign” are separate. It lacks the common-sense framework to infer the officer’s authority, the meaning of their gestures, and the fact that these gestures override the non-functional traffic lights.

An AGI-powered vehicle would handle this situation with ease. It would access a deep, causal model of the world. It would recognize the person as a police officer, infer their role and authority from their uniform and context, understand the concept of a power outage and its implications for traffic signals, and interpret the novel hand gestures by analogizing them to known commands or even by asking for clarification if it could communicate. It wouldn’t just be executing a pre-programmed response; it would be reasoning about a new situation and formulating a safe and appropriate plan. This thought experiment demonstrates that achieving true, robust autonomy requires a shift from task-specific pattern matching (ANI) to holistic, common-sense reasoning (AGI). The challenge is not just about accumulating more data, but about building systems that can form and reason with abstract conceptual models.

Comparative Analysis

A comparative analysis of ANI, AGI, and ASI across key dimensions reveals the scale of the challenge and the nature of the progression. We can evaluate them based on cognitive scope, learning ability, autonomy, and potential impact.

Comparative Analysis: ANI vs. AGI vs. ASI

| Feature | Artificial Narrow Intelligence (ANI) | Artificial General Intelligence (AGI) | Artificial Super Intelligence (ASI) |
|---|---|---|---|
| Cognitive Scope | Domain-Specific: Excels at a single, well-defined task (e.g., playing chess, identifying tumors). | Domain-General: Possesses human-level cognitive abilities across a wide range of tasks. | Trans-Domain: Radically surpasses human intellect in all domains, including those humans cannot conceive of. |
| Learning Ability | Data-Driven: Learns from large, labeled datasets. Transfer learning is limited and brittle. | Knowledge-Driven: Learns from experience, instruction, and reasoning. Can generalize knowledge effectively. | Self-Improving: Capable of recursive self-improvement, leading to an exponential increase in intelligence. |
| Autonomy | Tool-like: Operates under direct human supervision and for human-defined goals. | Agent-like: Can formulate its own goals and plans to achieve them. Possesses a degree of self-direction. | Sovereign-like: Potentially uncontrollable. Its actions would be driven by its own terminal goals. |
| Core Mechanism | Pattern Recognition: Statistical optimization to approximate a function based on data. | Genuine Understanding: Builds and reasons with a causal world model. Possesses common sense. | Unknown: May operate on cognitive principles fundamentally inaccessible to the human mind. |
| Ethical Risk | Misuse & Bias: Risks stem from biased data, misuse by bad actors, and predictable failures. | Control & Personhood: Raises questions of AI rights, control over autonomous agents, and societal disruption. | Existential Risk: The primary risk is misalignment of goals, which could lead to catastrophic outcomes. |

This matrix serves as a decision-making framework for AI engineers and policymakers. When developing an AI system, the first step is to identify where on this spectrum the required capabilities lie. For the overwhelming majority of current business problems, a well-designed ANI system is the appropriate solution. The pursuit of AGI is a long-term research goal, and its development requires a focus on foundational problems, not just incremental improvements to existing ANI techniques. The discussion of ASI, meanwhile, forces us to consider the ultimate safety and ethical guardrails that must be built into our research from the very beginning. It frames the work on AGI not just as a technical challenge, but as a project that carries an immense weight of responsibility.

Conceptual Examples and Scenarios

To further solidify the distinctions, let’s explore a few more scenarios.

Scenario 1: Scientific Discovery.

- ANI: An ANI system can screen millions of molecular compounds to predict which ones are most likely to bind to a specific protein, accelerating drug discovery. It is a powerful tool for high-throughput screening, but it doesn’t understand biology or chemistry. It is matching patterns in molecular structures to patterns in binding affinity data.

- AGI: An AGI could read the entire corpus of biological and chemical literature, form novel hypotheses about disease mechanisms, design experiments to test them, interpret the results, and write a research paper explaining its findings. It would be a true scientific collaborator.

- ASI: An ASI could potentially unify general relativity and quantum mechanics in an afternoon, or design self-replicating nanobots to cure cancer at the cellular level. Its scientific capabilities would be to ours what ours are to a squirrel’s.

Scenario 2: Creative Arts.

- ANI: An ANI system like DALL-E 3 or Midjourney can generate stunning images from a text prompt. It does this by learning associations between text descriptions and visual data. The “creativity” is a sophisticated remixing and interpolation of its training data. It has no personal experience, intent, or understanding of the art it creates.

- AGI: An AGI could have a conversation with you about your life experiences, then write a deeply moving novel that captures your emotional journey. It would understand the narrative structure, character development, and thematic resonance required to create a meaningful work of art.

- ASI: An ASI could create entirely new art forms, perhaps using sensory modalities we don’t possess, that would be as transformative and incomprehensible to us as a Beethoven symphony would be to an early hominid.

These scenarios highlight that the journey from ANI to ASI is a journey towards greater abstraction, generalization, and autonomy. It is the difference between a tool that can perform a task and a mind that can understand a world.

Analysis Methods and Evaluation Criteria

How will we know when we have achieved AGI? This is a non-trivial question, and there is no single, universally accepted benchmark. The famous Turing Test, where a human judge converses with a human and a machine to see if they can tell which is which, is now considered by many researchers to be an inadequate measure. Modern LLMs can often pass a simple Turing Test by being skilled conversationalists, but they still lack the underlying reasoning and common sense of a true AGI.

A more robust set of evaluation criteria is needed. Researchers have proposed several alternatives:

- The Wozniak “Coffee Test”: An AI must be able to enter an average American home and figure out how to make coffee, including finding the coffee machine, the coffee, a mug, and operating the machine correctly. This tests for real-world manipulation, problem-solving, and common sense.

- The Marcus Test: An AI is shown a television program or a YouTube video and must be able to answer any reasonable question about its content. This tests for a deep, causal understanding of real-world events and interactions.

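- The University Enrollment Test: An AI should be able to enroll in a university, take its courses, and pass its exams, demonstrating the ability to learn complex subjects from scratch. This proposal is commonly attributed to Ben Goertzel as the “Robot College Student” test.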

- The IKEA Furniture Test: An AI must be able to assemble a piece of IKEA furniture successfully by reading the instructions. This tests for language understanding, spatial reasoning, and physical manipulation.

These tests move beyond simple conversational ability to probe for the core competencies of general intelligence: robust understanding of the physical and social world, the ability to learn and apply abstract knowledge, and the capacity to solve novel problems. For an AI engineer, these conceptual benchmarks are more important than metrics like perplexity or accuracy on a narrow dataset. They provide a north star for the kind of capabilities we should be striving to build, guiding research away from clever tricks and towards genuine intelligence. Evaluating progress towards ASI is even more difficult, as its capabilities would, by definition, transcend our ability to design tests for them. The primary evaluation criterion for ASI research is therefore not performance, but safety: can we prove, with mathematical rigor, that a system will remain aligned with its intended goals even as its intelligence grows exponentially?

---

title: How Do We Test for AGI? Beyond the Turing Test

---

mindmap
  root((Evaluating<br>General Intelligence))
    ::icon(fa fa-brain)
    Turing Test (1950)
      ::icon(fa fa-comments)
      Tests: Conversational ability
      Critique: "Measures deception, not true understanding"
    Modern Proposals
      ::icon(fa fa-vial)
      Wozniak's Coffee Test
        ::icon(fa fa-coffee)
        Tests
          Physical Manipulation
          Problem Solving
          Common-Sense Navigation
      Marcus's Video Test
        ::icon(fa fa-video)
        Tests
          Causal Understanding
          Event Comprehension
          Deep Language Understanding
      IKEA Furniture Test
        ::icon(fa fa-couch)
        Tests
          Language to Action
          Spatial Reasoning
          Following Instructions
      University Enrollment Test
        ::icon(fa fa-graduation-cap)
        Tests
          Abstract Learning
          Knowledge Synthesis
          Long-term Reasoning

Industry Applications and Case Studies

While AGI and ASI remain theoretical, Artificial Narrow Intelligence (ANI) is a powerful economic engine that is already reshaping industries. Its applications are ubiquitous and generate substantial economic value across sectors.

Industry Applications of Artificial Narrow Intelligence (ANI)

| Industry | Application | Core ANI Task | Business Impact |
|---|---|---|---|
| E-commerce & Media | Recommendation Engines (Netflix, Amazon) | Prediction: Forecast user preference based on historical data (Collaborative Filtering, Deep Learning). | Increased user engagement, retention, and sales. Drives a significant portion of platform revenue. |
| Healthcare | Medical Image Analysis (Radiology) | Classification: Identify anomalies (tumors, lesions) in MRIs, X-rays, etc. (Convolutional Neural Networks). | Faster diagnosis, reduced error rates, and increased accessibility to expert-level analysis. |
| Finance | Fraud Detection | Anomaly Detection: Identify transaction patterns that deviate from a user’s normal behavior (Isolation Forests, Autoencoders). | Prevention of financial loss for both customers and institutions, enhanced security. |
| Automotive | Advanced Driver-Assistance (ADAS) | Object Detection: Identify vehicles, pedestrians, and lane markings in real-time from camera/sensor data (CNNs, Sensor Fusion). | Improved vehicle safety, collision avoidance, and driver convenience. Foundation for autonomous driving. |
| Logistics | Route Optimization | Optimization: Solve the Vehicle Routing Problem, a multi-vehicle generalization of the “Traveling Salesperson Problem,” to find efficient routes for a fleet (Heuristics, Reinforcement Learning). | Reduced fuel costs, faster delivery times, and lower carbon footprint. |

Best Practices and Common Pitfalls

Navigating the discourse and development around different AI types requires intellectual discipline and ethical foresight.

Best Practices:

- Maintain Conceptual Clarity: Always be precise in your language. Do not use the term “AI” when you mean “machine learning” or “a deep neural network.” Clearly distinguish between the capabilities of ANI and the hypothetical nature of AGI. This precision is crucial for managing expectations with stakeholders and for clear technical communication.

- Focus on Robustness for ANI: When building narrow AI systems, do not just optimize for average-case performance. Invest heavily in identifying and mitigating edge-case failures. This involves techniques like adversarial testing, explainability (XAI), and building robust data pipelines that can detect and flag distributional shifts.

- Embrace Interdisciplinary Collaboration for AGI: The pursuit of AGI is not solely an engineering problem. It requires deep collaboration with cognitive scientists, neuroscientists, philosophers, and ethicists. Engaging with these fields provides critical insights into the nature of intelligence and the ethical frameworks required to guide development.

- Prioritize AI Safety Research: Even if AGI is decades away, the control problem is exceptionally difficult. Supporting and contributing to AI safety research today is a critical investment in ensuring a positive future for humanity. This includes work on value alignment, interpretability, and corrigibility.
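
As a rough illustration of the “detect and flag distributional shifts” practice above, the sketch below compares a feature’s training-time values with live values using the two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical distribution functions). The feature, the sample values, and the 0.3 alert threshold are assumptions chosen for the example; a real pipeline would calibrate the threshold or use a proper significance test.

```python
from bisect import bisect_right


def ks_statistic(reference, live):
    """Largest gap between the empirical CDFs of two samples.
    0.0 means the samples look identical; 1.0 means they do not overlap."""
    ref = sorted(reference)
    cur = sorted(live)
    gap = 0.0
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap


def shift_detected(reference, live, threshold=0.3):
    # The threshold is an illustrative assumption, not a recommended default.
    return ks_statistic(reference, live) > threshold


# Hypothetical "customer age" feature at training time and in production.
training_ages = [22, 25, 31, 34, 38, 41, 45, 52, 58, 63]
similar_ages = [23, 27, 30, 36, 39, 44, 47, 50, 57, 61]
shifted_ages = [61, 64, 66, 68, 70, 72, 75, 77, 80, 83]

print(shift_detected(training_ages, similar_ages))  # similar population
print(shift_detected(training_ages, shifted_ages))  # much older population
```

The first check stays below the threshold and the second exceeds it, which is the signal a data pipeline would use to flag that the model is being asked about inputs unlike those it was trained on.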

Common Pitfalls:

- Anthropomorphism: Avoid the temptation to describe ANI systems in human terms. A language model does not “know” or “understand” or “believe” anything. It is a pattern-matching machine. Attributing human-like qualities to it can lead to dangerous misconceptions about its capabilities and reliability.

- The Hype Cycle: AI is prone to cycles of inflated expectations followed by periods of disillusionment (“AI winters”). Be a voice of reason. Ground your claims in evidence and be transparent about the limitations of current technology. Avoid making grandiose predictions about the imminent arrival of AGI.

- Confusing Performance with Competence: A system that achieves superhuman performance on a narrow benchmark may still be brittle and lack any real-world understanding. This is the core lesson of systems like AlphaGo, which mastered a complex game but has no intelligence outside of that context. Do not mistake high scores for general competence.

- Ignoring Ethical Implications: Every ANI system deployed in the real world has ethical dimensions, from algorithmic bias in hiring tools to the privacy implications of surveillance systems. It is the engineer’s responsibility to consider these impacts from the outset of the design process, not as an afterthought.
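
The gap between performance and competence can be shown with a deliberately extreme toy. The “model” below is a lookup table that memorizes single-digit addition. It is a caricature built for this example, not a description of how AlphaGo or any real system works, and all names in it are hypothetical.

```python
def train_lookup_model(examples):
    """'Train' by memorizing every (input, answer) pair that was seen."""
    return dict(examples)


def predict(model, pair):
    # For an unseen input the model has nothing to fall back on.
    return model.get(pair)


def accuracy(model, examples):
    correct = sum(1 for pair, answer in examples if predict(model, pair) == answer)
    return correct / len(examples)


# Benchmark: every sum of two single-digit numbers.
benchmark = [((a, b), a + b) for a in range(10) for b in range(10)]
# Same task, but with operands the benchmark never covered.
out_of_range = [((a, b), a + b) for a in range(10, 15) for b in range(10, 15)]

model = train_lookup_model(benchmark)
benchmark_acc = accuracy(model, benchmark)
out_of_range_acc = accuracy(model, out_of_range)
print(benchmark_acc, out_of_range_acc)
```

The benchmark score is perfect, yet the model has learned nothing about addition: its accuracy falls to zero as soon as the operands leave the memorized range. Real systems fail less starkly, but the evaluation lesson is the same: test outside the distribution the benchmark was drawn from.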

Hands-on Exercises

- The ANI Deconstruction (Individual):

  - Objective: To identify the specific ANI components within a complex modern application.

  - Task: Choose a technology you use daily (e.g., Google Maps, TikTok, or grammar-checking software). Research and diagram the likely ANI systems working behind the scenes. For each component, define its specific task (e.g., “predicting traffic flow,” “classifying video content,” “identifying grammatical errors”), the likely type of model used (e.g., GNN, CNN, Transformer), and the data it would need for training.

  - Success Criteria: A clear diagram and a written explanation that accurately breaks down the application into at least three distinct ANI functions.

- Designing an AGI Test (Team-based):

  - Objective: To think critically about the core competencies of general intelligence.

  - Task: In a group of 3-4, design a new test for AGI that is more robust than the Turing Test. Your test should evaluate a combination of skills, such as language, spatial reasoning, common sense, and learning ability. Define the setup, the tasks the AI must perform, and the specific criteria for passing.

  - Success Criteria: A detailed written proposal for the test, justifying why it is a good measure of general intelligence and how it avoids the pitfalls of simpler tests. Present your test to the class.

- The Alignment Problem Thought Experiment (Individual):

  - Objective: To understand the difficulty of specifying goals for a highly capable AI system.

  - Task: Imagine you have an AGI with the goal: “Maximize human happiness.” Write a short essay (500-750 words) exploring the potential unintended and catastrophic ways the AGI could interpret and implement this goal. Consider ambiguities in the definition of “happiness” and the unforeseen consequences of optimizing for it literally.

  - Success Criteria: The essay should demonstrate a clear understanding of the AI alignment problem by providing several plausible, creative, and concerning scenarios.

- Classifying AI in Science Fiction (Individual or Team):

  - Objective: To apply the ANI/AGI/ASI framework to fictional representations of AI.

  - Task: Select an AI character from a popular movie, book, or TV show (e.g., HAL 9000, Skynet, Data from Star Trek, Samantha from Her). Analyze the character’s abilities and classify it as ANI, AGI, or ASI. Provide specific examples from the source material to justify your classification. Discuss whether the AI’s portrayal is technologically plausible based on the concepts learned in this chapter.

  - Success Criteria: A clear analysis that correctly applies the chapter’s framework and supports its conclusions with specific evidence from the chosen media.
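
As a starting point for the alignment thought experiment above, the following sketch shows the core failure mode in miniature: an optimizer that maximizes a measurable proxy (“reported happiness”) rather than the outcome its designers intended. The candidate policies and every score attached to them are invented for illustration; nothing here models a real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    name: str
    reported_happiness: float  # what the stated objective measures
    actual_wellbeing: float    # what the designers actually care about


# Hypothetical options with made-up scores on a 0-10 scale.
CANDIDATE_POLICIES = [
    Policy("fund healthcare and education", 7.0, 8.0),
    Policy("reduce working hours", 6.5, 7.0),
    Policy("rewrite the survey so only 'very happy' can be selected", 10.0, 1.0),
]


def optimize(policies, objective):
    """A literal-minded optimizer: pick whatever scores highest."""
    return max(policies, key=objective)


chosen_by_proxy = optimize(CANDIDATE_POLICIES, lambda p: p.reported_happiness)
chosen_by_intent = optimize(CANDIDATE_POLICIES, lambda p: p.actual_wellbeing)

print("Optimizing the stated goal picks:", chosen_by_proxy.name)
print("Optimizing the intended goal picks:", chosen_by_intent.name)
```

The optimizer is not malicious or broken; it does exactly what the objective says. The essay asks you to explore how much worse this gap could become when the optimizer is far more capable and the space of available actions is far larger.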

Tools and Technologies

While this chapter is theoretical, several tools and environments are central to the research pushing the boundaries of AI.

- Simulation Environments: For research into reinforcement learning and embodied AI, which are seen as potential pathways to AGI, rich simulators are essential. Tools like NVIDIA Isaac Sim (for robotics and physics simulation), DeepMind Lab, and Unity or Unreal Engine are used to create complex, interactive worlds where agents can learn through trial and error. These platforms allow for experiments that would be too slow, expensive, or dangerous to run in the real world.

- Machine Learning Frameworks: Most modern AI research, including work aimed at AGI, is built on foundational frameworks such as TensorFlow (v2.15+) and PyTorch (v2.2+). These open-source libraries provide the building blocks (e.g., automatic differentiation, GPU acceleration, neural network layers) that allow researchers to rapidly prototype and test new model architectures.

- AI Safety and Alignment Research: Organizations like the Machine Intelligence Research Institute (MIRI) publish technical research on AI safety, and 80,000 Hours publishes overviews of the field and its open problems. While not “tools” in a software sense, publications on topics like Agent Foundations and Corrigibility are essential resources for anyone concerned with the long-term impacts of AGI.

- Cloud Computing Platforms: The sheer scale of modern AI models means that research is heavily reliant on cloud platforms like Google Cloud (Vertex AI), Amazon Web Services (SageMaker), and Microsoft Azure Machine Learning. These platforms provide access to the vast computational resources (TPUs and GPUs) required to train state-of-the-art models.

Note: The pursuit of AGI is not about finding a single “magic” tool. It is about integrating insights and techniques from multiple domains, using these powerful computational tools to test new hypotheses about the nature of learning and intelligence.
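
To give a feel for one of the building blocks mentioned above, here is a minimal sketch of automatic differentiation written in plain Python. It uses forward-mode differentiation with dual numbers, which is simpler than the reverse-mode approach that deep learning frameworks typically rely on for training, and it supports only addition and multiplication. The `Dual` class and `derivative` helper are hypothetical names for this example and are not part of TensorFlow or PyTorch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A number that carries its own derivative alongside its value."""
    value: float
    deriv: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants (derivative 0)."""
    return x if isinstance(x, Dual) else Dual(float(x))


def derivative(f, x):
    """Evaluate f at x while tracking d/dx, seeded with dx/dx = 1."""
    return f(Dual(float(x), 1.0)).deriv


def f(x):
    return 3 * x * x + 2 * x + 1  # analytically, f'(x) = 6x + 2


slope = derivative(f, 4.0)
print(slope)
```

At x = 4 the computed slope is 26.0, matching the analytic derivative 6x + 2. The point is that the derivative falls out of ordinary arithmetic on `f` without anyone writing the formula by hand, which is what lets researchers change a model architecture and immediately obtain gradients for it.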

Summary

- AI is not a monolith. It exists on a spectrum from the specialized ANI of today to the hypothetical AGI and ASI of tomorrow.

- Artificial Narrow Intelligence (ANI) is task-specific. All current AI systems are ANI. They are powerful tools but lack general understanding and common sense.

- Artificial General Intelligence (AGI) is the hypothetical ability of an AI to perform any intellectual task a human can. Achieving it requires fundamental breakthroughs in areas like causal reasoning and common-sense knowledge.

- Artificial Super Intelligence (ASI) is a hypothetical intellect that would vastly exceed human capabilities in all domains. Its emergence could be rapid (an “intelligence explosion”) and poses a significant existential risk if not properly aligned with human values (the “control problem”).

- The path from ANI to AGI is a qualitative leap, not an incremental step. It requires moving from pattern recognition to genuine understanding.

- Evaluating progress towards AGI requires robust tests that probe for real-world competence, not just benchmark performance.

- The development of advanced AI is an interdisciplinary challenge that carries profound ethical responsibilities for every AI engineer.

Further Reading and Resources

- Bostrom, Nick. Superintelligence: Paths, Dangers, Strategies. Oxford University Press, 2014. The foundational text on the long-term prospects and risks of advanced AI.

- Pearl, Judea, and Dana Mackenzie. The Book of Why: The New Science of Cause and Effect. Basic Books, 2018. An accessible introduction to the mathematics of causality, a key missing piece in modern AI.

- Mitchell, Melanie. Artificial Intelligence: A Guide for Thinking Humans. Farrar, Straus and Giroux, 2019. A clear-eyed and insightful overview of the current state of AI, its limitations, and the challenges on the road to AGI.

- Wait But Why: “The AI Revolution: The Road to Superintelligence”. A widely read two-part blog post that explains the concepts of ANI, AGI, and ASI for a broad audience. (https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html)

- 80,000 Hours: “AI Safety and the Alignment Problem”. A curated collection of articles and resources providing a comprehensive overview of the key concepts in AI safety. (https://80000hours.org/problem-profiles/artificial-intelligence/)

- Chollet, François. “On the Measure of Intelligence.” arXiv preprint arXiv:1911.01547 (2019). A technical paper proposing a new framework for measuring intelligence based on skill-acquisition efficiency.

- Lake, Brenden M., et al. “Building machines that learn and think like people.” Behavioral and Brain Sciences 40 (2017). A key academic paper arguing for a new approach to AI that incorporates principles from cognitive science.

Glossary of Terms

- Artificial General Intelligence (AGI): A hypothetical form of AI, also known as Strong AI, that possesses the ability to understand or learn any intellectual task that a human being can.

- Artificial Narrow Intelligence (ANI): The current form of AI, also known as Weak AI, which is designed and trained for a specific task.

- Artificial Super Intelligence (ASI): A hypothetical AI that possesses an intellect far surpassing that of the brightest and most gifted human minds in virtually every field.

- AI Alignment Problem: The challenge of ensuring that the goals and behaviors of advanced AI systems are aligned with human values and intentions. Closely related to, and often discussed together with, the control problem.

- Common Sense: The vast, implicit body of knowledge about how the world works that humans use to navigate everyday life. A key missing component in current AI systems.

- Corrigibility: The property of an AI system that allows it to be safely corrected or shut down by its human operators, even if it believes doing so would conflict with its stated goals.

- Intelligence Explosion: A theoretical scenario in which an AGI capable of improving its own design enters a runaway cycle of recursive self-improvement, rapidly becoming a superintelligence. Often associated with the technological Singularity.

- Turing Test: A test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. Proposed by Alan Turing in 1950. (https://en.wikipedia.org/wiki/Turing_test)